mirror of

https://github.com/huggingface/trl.git

synced 2025-10-20 18:43:52 +08:00

Compare commits

109 Commits

| Author | SHA1 | Date | |

|---|---|---|---|

| d06b131097 | |||

| f3230902b1 | |||

| bbc7eeb29c | |||

| 163dae5579 | |||

| 64c8db2f9a | |||

| 25d4d81801 | |||

| 685620ac6c | |||

| 2b531b9223 | |||

| 4f7f73dd09 | |||

| c60c41688e | |||

| cbb98dabb1 | |||

| a86eaab8e8 | |||

| aa9770c6bd | |||

| 0fe603eca1 | |||

| 843c14574f | |||

| 009b82412f | |||

| 82c8f20601 | |||

| b56e8b3277 | |||

| 0161a8e602 | |||

| 6e34c5932b | |||

| e1531aa526 | |||

| cb6c45474a | |||

| fe55b440e7 | |||

| 431456732c | |||

| 9679d87012 | |||

| 099f0bf42b | |||

| 33f88ead0b | |||

| 7705daa672 | |||

| fe49697e66 | |||

| d1ad5405cb | |||

| 1e88b84ab9 | |||

| c39207460f | |||

| 61af5f26b6 | |||

| 7a89a43c3f | |||

| fead2c8c77 | |||

| b4bb12992e | |||

| b21baddc5c | |||

| 216c119fa9 | |||

| a2747acc0f | |||

| b61a4b95a0 | |||

| 5c5d7687d8 | |||

| 096f5e9da5 | |||

| 2a0ed3a596 | |||

| ff13c5bc6d | |||

| d3e05d6490 | |||

| fadffc22bc | |||

| d405c87068 | |||

| b46716c4f5 | |||

| ec8a5b7679 | |||

| 376d152d3f | |||

| ef57cddbc3 | |||

| 20111ad03a | |||

| a4793c2ede | |||

| 0ddf9f657f | |||

| 3138ef6f5a | |||

| a5b0414f63 | |||

| e174bd50a5 | |||

| 86c117404c | |||

| a94761a02c | |||

| 5fb5af7c34 | |||

| 25fa1bd880 | |||

| 6916e0d2df | |||

| 1704a864e7 | |||

| e547c392f9 | |||

| a31bad83fb | |||

| 31cc361d17 | |||

| ab453ec183 | |||

| 933c91cc66 | |||

| ffad0a19d0 | |||

| e0172fc8ec | |||

| dec9993129 | |||

| c85cdbdbd0 | |||

| e59cce9f81 | |||

| c60fd915c1 | |||

| 08f550674c | |||

| 52fecee883 | |||

| 3cfe194e34 | |||

| ad325152cc | |||

| 1f29725381 | |||

| 23a06c94b8 | |||

| 5c24d5bb2e | |||

| 503ac5d82c | |||

| ce37eadcfa | |||

| 160d0c9d6c | |||

| d1c7529328 | |||

| fc468e0f35 | |||

| 131e5cdd10 | |||

| bb4a9800fa | |||

| 3804a72e6c | |||

| a004b02c4a | |||

| 8b234479bc | |||

| cf20878113 | |||

| d8ae4d08c6 | |||

| a2749d9e0c | |||

| ed87942a47 | |||

| 734624274d | |||

| 237eb9c6a5 | |||

| 2672a942a6 | |||

| b5cce0d13e | |||

| 0b165e60bc | |||

| 404621f0f9 | |||

| 89df6abf21 | |||

| 9523474490 | |||

| 1620da371a | |||

| 9b60207f0b | |||

| a6ebdb6e75 | |||

| 9c3e9e43d0 | |||

| 0610711dda | |||

| 24627e9c89 |

3

.github/workflows/build_documentation.yml

vendored

3

.github/workflows/build_documentation.yml

vendored

@ -16,4 +16,5 @@ jobs:

|

||||

repo_owner: lvwerra

|

||||

version_tag_suffix: ""

|

||||

secrets:

|

||||

token: ${{ secrets.HUGGINGFACE_PUSH }}

|

||||

token: ${{ secrets.HUGGINGFACE_PUSH }}

|

||||

hf_token: ${{ secrets.HF_DOC_BUILD_PUSH }}

|

||||

33

.github/workflows/clear_cache.yml

vendored

Normal file

33

.github/workflows/clear_cache.yml

vendored

Normal file

@ -0,0 +1,33 @@

|

||||

name: "Cleanup Cache"

|

||||

|

||||

on:

|

||||

workflow_dispatch:

|

||||

schedule:

|

||||

- cron: "0 0 * * *"

|

||||

|

||||

jobs:

|

||||

cleanup:

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- name: Check out code

|

||||

uses: actions/checkout@v3

|

||||

|

||||

- name: Cleanup

|

||||

run: |

|

||||

gh extension install actions/gh-actions-cache

|

||||

|

||||

REPO=${{ github.repository }}

|

||||

|

||||

echo "Fetching list of cache key"

|

||||

cacheKeysForPR=$(gh actions-cache list -R $REPO | cut -f 1 )

|

||||

|

||||

## Setting this to not fail the workflow while deleting cache keys.

|

||||

set +e

|

||||

echo "Deleting caches..."

|

||||

for cacheKey in $cacheKeysForPR

|

||||

do

|

||||

gh actions-cache delete $cacheKey -R $REPO --confirm

|

||||

done

|

||||

echo "Done"

|

||||

env:

|

||||

GH_TOKEN: ${{ secrets.GITHUB_TOKEN }}

|

||||

14

.github/workflows/delete_doc_comment.yml

vendored

14

.github/workflows/delete_doc_comment.yml

vendored

@ -1,13 +1,13 @@

|

||||

name: Delete dev documentation

|

||||

name: Delete doc comment

|

||||

|

||||

on:

|

||||

pull_request:

|

||||

types: [ closed ]

|

||||

|

||||

workflow_run:

|

||||

workflows: ["Delete doc comment trigger"]

|

||||

types:

|

||||

- completed

|

||||

|

||||

jobs:

|

||||

delete:

|

||||

uses: huggingface/doc-builder/.github/workflows/delete_doc_comment.yml@main

|

||||

with:

|

||||

pr_number: ${{ github.event.number }}

|

||||

package: trl

|

||||

secrets:

|

||||

comment_bot_token: ${{ secrets.COMMENT_BOT_TOKEN }}

|

||||

12

.github/workflows/delete_doc_comment_trigger.yml

vendored

Normal file

12

.github/workflows/delete_doc_comment_trigger.yml

vendored

Normal file

@ -0,0 +1,12 @@

|

||||

name: Delete doc comment trigger

|

||||

|

||||

on:

|

||||

pull_request:

|

||||

types: [ closed ]

|

||||

|

||||

|

||||

jobs:

|

||||

delete:

|

||||

uses: huggingface/doc-builder/.github/workflows/delete_doc_comment_trigger.yml@main

|

||||

with:

|

||||

pr_number: ${{ github.event.number }}

|

||||

27

.github/workflows/stale.yml

vendored

Normal file

27

.github/workflows/stale.yml

vendored

Normal file

@ -0,0 +1,27 @@

|

||||

name: Stale Bot

|

||||

|

||||

on:

|

||||

schedule:

|

||||

- cron: "0 15 * * *"

|

||||

|

||||

jobs:

|

||||

close_stale_issues:

|

||||

name: Close Stale Issues

|

||||

if: github.repository == 'lvwerra/trl'

|

||||

runs-on: ubuntu-latest

|

||||

env:

|

||||

GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

|

||||

steps:

|

||||

- uses: actions/checkout@v3

|

||||

|

||||

- name: Setup Python

|

||||

uses: actions/setup-python@v4

|

||||

with:

|

||||

python-version: 3.8

|

||||

|

||||

- name: Install requirements

|

||||

run: |

|

||||

pip install PyGithub

|

||||

- name: Close stale issues

|

||||

run: |

|

||||

python scripts/stale.py

|

||||

32

.github/workflows/tests.yml

vendored

32

.github/workflows/tests.yml

vendored

@ -7,28 +7,30 @@ on:

|

||||

branches: [ main ]

|

||||

|

||||

jobs:

|

||||

|

||||

check_code_quality:

|

||||

runs-on: ubuntu-latest

|

||||

strategy:

|

||||

matrix:

|

||||

python-version: [3.9]

|

||||

|

||||

steps:

|

||||

- uses: actions/checkout@v3

|

||||

- name: Set up Python

|

||||

uses: actions/setup-python@v4

|

||||

- uses: actions/checkout@v2

|

||||

with:

|

||||

python-version: "3.8"

|

||||

- name: Install dependencies

|

||||

run: |

|

||||

python -m pip install --upgrade pip

|

||||

pip install .[dev]

|

||||

- name: Check quality

|

||||

run: |

|

||||

make quality

|

||||

fetch-depth: 0

|

||||

submodules: recursive

|

||||

- name: Set up Python ${{ matrix.python-version }}

|

||||

uses: actions/setup-python@v2

|

||||

with:

|

||||

python-version: ${{ matrix.python-version }}

|

||||

- uses: pre-commit/action@v2.0.3

|

||||

with:

|

||||

extra_args: --all-files

|

||||

|

||||

tests:

|

||||

needs: check_code_quality

|

||||

strategy:

|

||||

matrix:

|

||||

python-version: [3.7, 3.8, 3.9]

|

||||

python-version: ['3.8', '3.9', '3.10']

|

||||

os: ['ubuntu-latest', 'macos-latest', 'windows-latest']

|

||||

runs-on: ${{ matrix.os }}

|

||||

steps:

|

||||

@ -37,6 +39,10 @@ jobs:

|

||||

uses: actions/setup-python@v4

|

||||

with:

|

||||

python-version: ${{ matrix.python-version }}

|

||||

cache: "pip"

|

||||

cache-dependency-path: |

|

||||

setup.py

|

||||

requirements.txt

|

||||

- name: Install dependencies

|

||||

run: |

|

||||

python -m pip install --upgrade pip

|

||||

|

||||

16

.github/workflows/upload_pr_documentation.yml

vendored

Normal file

16

.github/workflows/upload_pr_documentation.yml

vendored

Normal file

@ -0,0 +1,16 @@

|

||||

name: Upload PR Documentation

|

||||

|

||||

on:

|

||||

workflow_run:

|

||||

workflows: ["Build PR Documentation"]

|

||||

types:

|

||||

- completed

|

||||

|

||||

jobs:

|

||||

build:

|

||||

uses: huggingface/doc-builder/.github/workflows/upload_pr_documentation.yml@main

|

||||

with:

|

||||

package_name: trl

|

||||

secrets:

|

||||

hf_token: ${{ secrets.HF_DOC_BUILD_PUSH }}

|

||||

comment_bot_token: ${{ secrets.COMMENT_BOT_TOKEN }}

|

||||

42

.pre-commit-config.yaml

Normal file

42

.pre-commit-config.yaml

Normal file

@ -0,0 +1,42 @@

|

||||

repos:

|

||||

- repo: https://github.com/PyCQA/isort

|

||||

rev: 5.12.0

|

||||

hooks:

|

||||

- id: isort

|

||||

args:

|

||||

- --profile=black

|

||||

- --skip-glob=wandb/**/*

|

||||

- --thirdparty=wandb

|

||||

- repo: https://github.com/myint/autoflake

|

||||

rev: v1.4

|

||||

hooks:

|

||||

- id: autoflake

|

||||

args:

|

||||

- -r

|

||||

- --exclude=wandb,__init__.py

|

||||

- --in-place

|

||||

- --remove-unused-variables

|

||||

- --remove-all-unused-imports

|

||||

- repo: https://github.com/python/black

|

||||

rev: 22.3.0

|

||||

hooks:

|

||||

- id: black

|

||||

args:

|

||||

- --line-length=119

|

||||

- --target-version=py38

|

||||

- --exclude=wandb

|

||||

- repo: https://github.com/pycqa/flake8

|

||||

rev: 6.0.0

|

||||

hooks:

|

||||

- id: flake8

|

||||

args:

|

||||

- --ignore=E203,E501,W503,E128

|

||||

- --max-line-length=119

|

||||

|

||||

# - repo: https://github.com/codespell-project/codespell

|

||||

# rev: v2.1.0

|

||||

# hooks:

|

||||

# - id: codespell

|

||||

# args:

|

||||

# - --ignore-words-list=nd,reacher,thist,ths,magent,ba

|

||||

# - --skip=docs/css/termynal.css,docs/js/termynal.js

|

||||

@ -36,10 +36,15 @@ First you want to make sure that all the tests pass:

|

||||

make test

|

||||

```

|

||||

|

||||

Then before submitting your PR make sure the code quality follows the standards. You can run the following command to format and test:

|

||||

Then before submitting your PR make sure the code quality follows the standards. You can run the following command to format:

|

||||

|

||||

```bash

|

||||

make style && make quality

|

||||

make precommit

|

||||

```

|

||||

|

||||

Make sure to install `pre-commit` before running the command:

|

||||

```bash

|

||||

pip install pre-commit

|

||||

```

|

||||

|

||||

## Do you want to contribute to the documentation?

|

||||

|

||||

12

Makefile

12

Makefile

@ -1,15 +1,9 @@

|

||||

.PHONY: quality style test

|

||||

.PHONY: test precommit

|

||||

|

||||

check_dirs := examples tests trl

|

||||

|

||||

test:

|

||||

python -m pytest -n auto --dist=loadfile -s -v ./tests/

|

||||

|

||||

quality:

|

||||

black --check --line-length 119 --target-version py38 $(check_dirs)

|

||||

isort --check-only $(check_dirs)

|

||||

flake8 $(check_dirs)

|

||||

|

||||

style:

|

||||

black --line-length 119 --target-version py38 $(check_dirs)

|

||||

isort $(check_dirs)

|

||||

precommit:

|

||||

pre-commit run --all-files

|

||||

|

||||

91

README.md

91

README.md

@ -3,18 +3,38 @@

|

||||

</div>

|

||||

|

||||

# TRL - Transformer Reinforcement Learning

|

||||

> Train transformer language models with reinforcement learning.

|

||||

> Full stack transformer language models with reinforcement learning.

|

||||

|

||||

<p align="center">

|

||||

<a href="https://github.com/lvwerra/trl/blob/main/LICENSE">

|

||||

<img alt="License" src="https://img.shields.io/github/license/lvwerra/trl.svg?color=blue">

|

||||

</a>

|

||||

<a href="https://huggingface.co/docs/trl/index">

|

||||

<img alt="Documentation" src="https://img.shields.io/website/http/huggingface.co/docs/trl/index.svg?down_color=red&down_message=offline&up_message=online">

|

||||

</a>

|

||||

<a href="https://github.com/lvwerra/trl/releases">

|

||||

<img alt="GitHub release" src="https://img.shields.io/github/release/lvwerra/trl.svg">

|

||||

</a>

|

||||

</p>

|

||||

|

||||

|

||||

## What is it?

|

||||

With `trl` you can train transformer language models with Proximal Policy Optimization (PPO). The library is built on top of the [`transformers`](https://github.com/huggingface/transformers) library by 🤗 Hugging Face. Therefore, pre-trained language models can be directly loaded via `transformers`. At this point most of decoder architectures and encoder-decoder architectures are supported.

|

||||

|

||||

<div style="text-align: center">

|

||||

<img src="https://huggingface.co/datasets/trl-internal-testing/example-images/resolve/main/images/TRL-readme.png">

|

||||

</div>

|

||||

|

||||

`trl` is a full stack library where we provide a set of tools to train transformer language models with Reinforcement Learning, from the Supervised Fine-tuning step (SFT), Reward Modeling step (RM) to the Proximal Policy Optimization (PPO) step. The library is built on top of the [`transformers`](https://github.com/huggingface/transformers) library by 🤗 Hugging Face. Therefore, pre-trained language models can be directly loaded via `transformers`. At this point most of decoder architectures and encoder-decoder architectures are supported. Refer to the documentation or the `examples/` folder for example code snippets and how to run these tools.

|

||||

|

||||

**Highlights:**

|

||||

- `PPOTrainer`: A PPO trainer for language models that just needs (query, response, reward) triplets to optimise the language model.

|

||||

- `AutoModelForCausalLMWithValueHead` & `AutoModelForSeq2SeqLMWithValueHead`: A transformer model with an additional scalar output for each token which can be used as a value function in reinforcement learning.

|

||||

- Example: Train GPT2 to generate positive movie reviews with a BERT sentiment classifier.

|

||||

|

||||

## How it works

|

||||

- [`SFTTrainer`](https://huggingface.co/docs/trl/sft_trainer): A light and friendly wrapper around `transformers` Trainer to easily fine-tune language models or adapters on a custom dataset.

|

||||

- [`RewardTrainer`](https://huggingface.co/docs/trl/reward_trainer): A light wrapper around `transformers` Trainer to easily fine-tune language models for human preferences (Reward Modeling).

|

||||

- [`PPOTrainer`](https://huggingface.co/docs/trl/trainer#trl.PPOTrainer): A PPO trainer for language models that just needs (query, response, reward) triplets to optimise the language model.

|

||||

- [`AutoModelForCausalLMWithValueHead`](https://huggingface.co/docs/trl/models#trl.AutoModelForCausalLMWithValueHead) & [`AutoModelForSeq2SeqLMWithValueHead`](https://huggingface.co/docs/trl/models#trl.AutoModelForSeq2SeqLMWithValueHead): A transformer model with an additional scalar output for each token which can be used as a value function in reinforcement learning.

|

||||

- [Examples](https://github.com/lvwerra/trl/tree/main/examples): Train GPT2 to generate positive movie reviews with a BERT sentiment classifier, full RLHF using adapters only, train GPT-j to be less toxic, [Stack-Llama example](https://huggingface.co/blog/stackllama), etc.

|

||||

|

||||

## How PPO works

|

||||

Fine-tuning a language model via PPO consists of roughly three steps:

|

||||

|

||||

1. **Rollout**: The language model generates a response or continuation based on query which could be the start of a sentence.

|

||||

@ -52,8 +72,59 @@ pip install -e .

|

||||

|

||||

## How to use

|

||||

|

||||

### Example

|

||||

This is a basic example on how to use the library. Based on a query the language model creates a response which is then evaluated. The evaluation could be a human in the loop or another model's output.

|

||||

### `SFTTrainer`

|

||||

|

||||

This is a basic example on how to use the `SFTTrainer` from the library. The `SFTTrainer` is a light wrapper around the `transformers` Trainer to easily fine-tune language models or adapters on a custom dataset.

|

||||

|

||||

```python

|

||||

# imports

|

||||

from datasets import load_dataset

|

||||

from trl import SFTTrainer

|

||||

|

||||

# get dataset

|

||||

dataset = load_dataset("imdb", split="train")

|

||||

|

||||

# get trainer

|

||||

trainer = SFTTrainer(

|

||||

"facebook/opt-350m",

|

||||

train_dataset=dataset,

|

||||

dataset_text_field="text",

|

||||

max_seq_length=512,

|

||||

)

|

||||

|

||||

# train

|

||||

trainer.train()

|

||||

```

|

||||

|

||||

### `RewardTrainer`

|

||||

|

||||

This is a basic example on how to use the `RewardTrainer` from the library. The `RewardTrainer` is a wrapper around the `transformers` Trainer to easily fine-tune reward models or adapters on a custom preference dataset.

|

||||

|

||||

```python

|

||||

# imports

|

||||

from transformers import AutoModelForSequenceClassification, AutoTokenizer

|

||||

from trl import RewardTrainer

|

||||

|

||||

# load model and dataset - dataset needs to be in a specific format

|

||||

model = AutoModelForSequenceClassification.from_pretrained("gpt2")

|

||||

tokenizer = AutoTokenizer.from_pretrained("gpt2")

|

||||

|

||||

...

|

||||

|

||||

# load trainer

|

||||

trainer = RewardTrainer(

|

||||

model=model,

|

||||

tokenizer=tokenizer,

|

||||

train_dataset=dataset,

|

||||

)

|

||||

|

||||

# train

|

||||

trainer.train()

|

||||

```

|

||||

|

||||

### `PPOTrainer`

|

||||

|

||||

This is a basic example on how to use the `PPOTrainer` from the library. Based on a query the language model creates a response which is then evaluated. The evaluation could be a human in the loop or another model's output.

|

||||

|

||||

```python

|

||||

# imports

|

||||

@ -78,7 +149,7 @@ query_txt = "This morning I went to the "

|

||||

query_tensor = tokenizer.encode(query_txt, return_tensors="pt")

|

||||

|

||||

# get model response

|

||||

response_tensor = respond_to_batch(model_ref, query_tensor)

|

||||

response_tensor = respond_to_batch(model, query_tensor)

|

||||

|

||||

# create a ppo trainer

|

||||

ppo_trainer = PPOTrainer(ppo_config, model, model_ref, tokenizer)

|

||||

@ -99,6 +170,8 @@ For a detailed example check out the example python script `examples/sentiment/s

|

||||

<p style="text-align: center;"> <b>Figure:</b> A few review continuations before and after optimisation. </p>

|

||||

</div>

|

||||

|

||||

Have a look at more examples inside [`examples/`](https://github.com/lvwerra/trl/tree/main/examples) folder.

|

||||

|

||||

## References

|

||||

|

||||

### Proximal Policy Optimisation

|

||||

|

||||

96

benchmark/benchmark.py

Normal file

96

benchmark/benchmark.py

Normal file

@ -0,0 +1,96 @@

|

||||

import argparse

|

||||

import math

|

||||

import os

|

||||

import shlex

|

||||

import subprocess

|

||||

import uuid

|

||||

from distutils.util import strtobool

|

||||

|

||||

|

||||

def parse_args():

|

||||

# fmt: off

|

||||

parser = argparse.ArgumentParser()

|

||||

parser.add_argument("--command", type=str, default="",

|

||||

help="the command to run")

|

||||

parser.add_argument("--num-seeds", type=int, default=3,

|

||||

help="the number of random seeds")

|

||||

parser.add_argument("--start-seed", type=int, default=1,

|

||||

help="the number of the starting seed")

|

||||

parser.add_argument("--workers", type=int, default=0,

|

||||

help="the number of workers to run benchmark experimenets")

|

||||

parser.add_argument("--auto-tag", type=lambda x: bool(strtobool(x)), default=True, nargs="?", const=True,

|

||||

help="if toggled, the runs will be tagged with git tags, commit, and pull request number if possible")

|

||||

parser.add_argument("--slurm-template-path", type=str, default=None,

|

||||

help="the path to the slurm template file (see docs for more details)")

|

||||

parser.add_argument("--slurm-gpus-per-task", type=int, default=1,

|

||||

help="the number of gpus per task to use for slurm jobs")

|

||||

parser.add_argument("--slurm-total-cpus", type=int, default=50,

|

||||

help="the number of gpus per task to use for slurm jobs")

|

||||

parser.add_argument("--slurm-ntasks", type=int, default=1,

|

||||

help="the number of tasks to use for slurm jobs")

|

||||

parser.add_argument("--slurm-nodes", type=int, default=None,

|

||||

help="the number of nodes to use for slurm jobs")

|

||||

args = parser.parse_args()

|

||||

# fmt: on

|

||||

return args

|

||||

|

||||

|

||||

def run_experiment(command: str):

|

||||

command_list = shlex.split(command)

|

||||

print(f"running {command}")

|

||||

fd = subprocess.Popen(command_list)

|

||||

return_code = fd.wait()

|

||||

assert return_code == 0

|

||||

|

||||

|

||||

if __name__ == "__main__":

|

||||

args = parse_args()

|

||||

|

||||

commands = []

|

||||

for seed in range(0, args.num_seeds):

|

||||

commands += [" ".join([args.command, "--seed", str(args.start_seed + seed)])]

|

||||

|

||||

print("======= commands to run:")

|

||||

for command in commands:

|

||||

print(command)

|

||||

|

||||

if args.workers > 0 and args.slurm_template_path is None:

|

||||

from concurrent.futures import ThreadPoolExecutor

|

||||

|

||||

executor = ThreadPoolExecutor(max_workers=args.workers, thread_name_prefix="cleanrl-benchmark-worker-")

|

||||

for command in commands:

|

||||

executor.submit(run_experiment, command)

|

||||

executor.shutdown(wait=True)

|

||||

else:

|

||||

print("not running the experiments because --workers is set to 0; just printing the commands to run")

|

||||

|

||||

# SLURM logic

|

||||

if args.slurm_template_path is not None:

|

||||

if not os.path.exists("slurm"):

|

||||

os.makedirs("slurm")

|

||||

if not os.path.exists("slurm/logs"):

|

||||

os.makedirs("slurm/logs")

|

||||

print("======= slurm commands to run:")

|

||||

with open(args.slurm_template_path) as f:

|

||||

slurm_template = f.read()

|

||||

slurm_template = slurm_template.replace("{{array}}", f"0-{len(commands) - 1}%{args.workers}")

|

||||

slurm_template = slurm_template.replace(

|

||||

"{{seeds}}", f"({' '.join([str(args.start_seed + int(seed)) for seed in range(args.num_seeds)])})"

|

||||

)

|

||||

slurm_template = slurm_template.replace("{{len_seeds}}", f"{args.num_seeds}")

|

||||

slurm_template = slurm_template.replace("{{command}}", args.command)

|

||||

slurm_template = slurm_template.replace("{{gpus_per_task}}", f"{args.slurm_gpus_per_task}")

|

||||

total_gpus = args.slurm_gpus_per_task * args.slurm_ntasks

|

||||

slurm_cpus_per_gpu = math.ceil(args.slurm_total_cpus / total_gpus)

|

||||

slurm_template = slurm_template.replace("{{cpus_per_gpu}}", f"{slurm_cpus_per_gpu}")

|

||||

slurm_template = slurm_template.replace("{{ntasks}}", f"{args.slurm_ntasks}")

|

||||

if args.slurm_nodes is not None:

|

||||

slurm_template = slurm_template.replace("{{nodes}}", f"#SBATCH --nodes={args.slurm_nodes}")

|

||||

else:

|

||||

slurm_template = slurm_template.replace("{{nodes}}", "")

|

||||

filename = str(uuid.uuid4())

|

||||

open(os.path.join("slurm", f"{filename}.slurm"), "w").write(slurm_template)

|

||||

slurm_path = os.path.join("slurm", f"{filename}.slurm")

|

||||

print(f"saving command in {slurm_path}")

|

||||

if args.workers > 0:

|

||||

run_experiment(f"sbatch {slurm_path}")

|

||||

16

benchmark/trl.slurm_template

Normal file

16

benchmark/trl.slurm_template

Normal file

@ -0,0 +1,16 @@

|

||||

#!/bin/bash

|

||||

#SBATCH --partition=dev-cluster

|

||||

#SBATCH --gpus-per-task={{gpus_per_task}}

|

||||

#SBATCH --cpus-per-gpu={{cpus_per_gpu}}

|

||||

#SBATCH --ntasks={{ntasks}}

|

||||

#SBATCH --mem-per-cpu=11G

|

||||

#SBATCH --output=slurm/logs/%x_%j.out

|

||||

#SBATCH --array={{array}}

|

||||

|

||||

{{nodes}}

|

||||

|

||||

seeds={{seeds}}

|

||||

seed=${seeds[$SLURM_ARRAY_TASK_ID % {{len_seeds}}]}

|

||||

|

||||

echo "Running task $SLURM_ARRAY_TASK_ID with seed: $seed"

|

||||

srun {{command}} --seed $seed

|

||||

@ -7,20 +7,32 @@

|

||||

title: Installation

|

||||

- local: customization

|

||||

title: Customize your training

|

||||

- local: logging

|

||||

title: Understanding logs

|

||||

title: Get started

|

||||

- sections:

|

||||

- local: models

|

||||

title: Model Classes

|

||||

- local: trainer

|

||||

title: Trainer Classes

|

||||

- local: reward_trainer

|

||||

title: Training your own reward model

|

||||

- local: sft_trainer

|

||||

title: Supervised fine-tuning

|

||||

- local: extras

|

||||

title: Extras - Better model output without reinforcement learning

|

||||

title: API

|

||||

- sections:

|

||||

- local: sentiment_tuning

|

||||

title: Sentiment Tuning

|

||||

- local: sentiment_tuning_peft

|

||||

- local: lora_tuning_peft

|

||||

title: Peft support - Low rank adaption of 8 bit models

|

||||

- local: summarization_reward_tuning

|

||||

title: Summarization Reward Tuning

|

||||

- local: detoxifying_a_lm

|

||||

title: Detoxifying a Language Model

|

||||

- local: using_llama_models

|

||||

title: Using LLaMA with TRL

|

||||

- local: multi_adapter_rl

|

||||

title: Multi Adapter RL (MARL) - a single base model for everything

|

||||

title: Examples

|

||||

|

||||

@ -2,6 +2,22 @@

|

||||

|

||||

At `trl` we provide the possibility to give enough modularity to users to be able to efficiently customize the training loop for their needs. Below are some examples on how you can apply and test different techniques.

|

||||

|

||||

## Run on multiple GPUs / nodes

|

||||

|

||||

We leverage `accelerate` to enable users to run their training on multiple GPUs or nodes. You should first create your accelerate config by simply running:

|

||||

|

||||

```bash

|

||||

accelerate config

|

||||

```

|

||||

|

||||

Then make sure you have selected multi-gpu / multi-node setup. You can then run your training by simply running:

|

||||

|

||||

```bash

|

||||

accelerate launch your_script.py

|

||||

```

|

||||

|

||||

Refer to the [examples page](https://github.com/lvwerra/trl/tree/main/examples) for more details

|

||||

|

||||

## Use different optimizers

|

||||

|

||||

By default, the `PPOTrainer` creates a `torch.optim.Adam` optimizer. You can create and define a different optimizer and pass it to `PPOTrainer`:

|

||||

@ -63,7 +79,7 @@ optimizer = Lion(filter(lambda p: p.requires_grad, self.model.parameters()), lr=

|

||||

...

|

||||

ppo_trainer = PPOTrainer(config, model, model_ref, tokenizer, optimizer=optimizer)

|

||||

```

|

||||

We advice you to use the learning rate that you would use for `Adam` divided by 3 as pointed out [here](https://github.com/lucidrains/lion-pytorch#lion---pytorch). We observed an improvement when using this optimizer compared to classic Adam (check the full logs [here](https://wandb.ai/distill-bloom/trl/runs/lj4bheke?workspace=user-younesbelkada)):

|

||||

We advise you to use the learning rate that you would use for `Adam` divided by 3 as pointed out [here](https://github.com/lucidrains/lion-pytorch#lion---pytorch). We observed an improvement when using this optimizer compared to classic Adam (check the full logs [here](https://wandb.ai/distill-bloom/trl/runs/lj4bheke?workspace=user-younesbelkada)):

|

||||

|

||||

<div style="text-align: center">

|

||||

<img src="https://huggingface.co/datasets/trl-internal-testing/example-images/resolve/main/images/trl-lion.png">

|

||||

@ -90,7 +106,7 @@ config = PPOConfig(**ppo_config)

|

||||

|

||||

# 2. Create optimizer

|

||||

optimizer = torch.optim.SGD(model.parameters(), lr=config.learning_rate)

|

||||

lr_scheduler = lr_scheduler = torch.optim.lr_scheduler.ExponentialLR(optimizer, gamma=0.9)

|

||||

lr_scheduler = torch.optim.lr_scheduler.ExponentialLR(optimizer, gamma=0.9)

|

||||

|

||||

# 3. initialize trainer

|

||||

ppo_trainer = PPOTrainer(config, model, model_ref, tokenizer, optimizer=optimizer, lr_scheduler=lr_scheduler)

|

||||

@ -149,4 +165,33 @@ When training large models, you should better handle the CUDA cache by iterative

|

||||

|

||||

```python

|

||||

config = PPOConfig(..., optimize_cuda_cache=True)

|

||||

```

|

||||

```

|

||||

|

||||

## Use correctly DeepSpeed stage 3:

|

||||

|

||||

A small tweak need to be added to your training script to use DeepSpeed stage 3 correctly. You need to properly initialize your reward model on the correct device using the `zero3_init_context_manager` context manager. Here is an example adapted for the `gpt2-sentiment` script:

|

||||

|

||||

```python

|

||||

ds_plugin = ppo_trainer.accelerator.state.deepspeed_plugin

|

||||

if ds_plugin is not None and ds_plugin.is_zero3_init_enabled():

|

||||

with ds_plugin.zero3_init_context_manager(enable=False):

|

||||

sentiment_pipe = pipeline("sentiment-analysis", model="lvwerra/distilbert-imdb", device=device)

|

||||

else:

|

||||

sentiment_pipe = pipeline("sentiment-analysis", model="lvwerra/distilbert-imdb", device=device)

|

||||

```

|

||||

|

||||

## Use torch distributed

|

||||

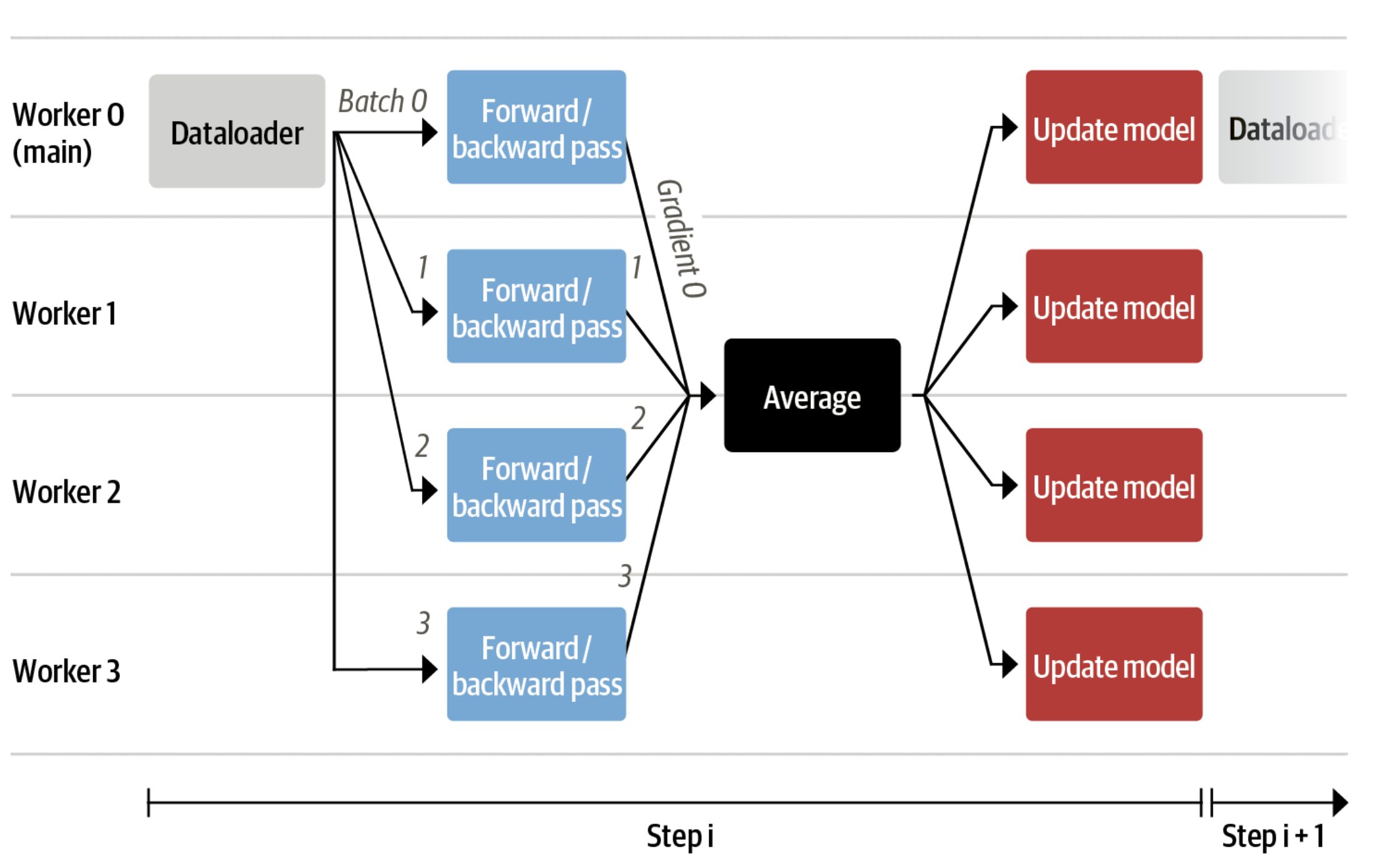

torch.distributed package provides PyTorch natives method to distribute a network over several machines (mostly useful if there are several GPU nodes). It copies the model on each GPU, runs the forward and backward on each and then applies the mean of gradient of all GPUs for each one. If running torch 1.XX, you can call `torch.distributed.launch`, like

|

||||

|

||||

`python -m torch.distributed.launch --nproc_per_node=1 reward_summarization.py --bf16`

|

||||

|

||||

For torch 2.+ `torch.distributed.launch` is deprecated and one needs to run:

|

||||

`torchrun --nproc_per_node=1 reward_summarization.py --bf16`

|

||||

or

|

||||

`python -m torch.distributed.run --nproc_per_node=1 reward_summarization.py --bf16`

|

||||

|

||||

Note that using `python -m torch.distributed.launch --nproc_per_node=1 reward_summarization.py --bf16` with torch 2.0 ends in

|

||||

```

|

||||

ValueError: Some specified arguments are not used by the HfArgumentParser: ['--local-rank=0']

|

||||

ERROR:torch.distributed.elastic.multiprocessing.api:failed (exitcode: 1) local_rank: 0 (pid: 194889) of binary: /home/ubuntu/miniconda3/envs/trl/bin/python

|

||||

```

|

||||

|

||||

72

docs/source/extras.mdx

Normal file

72

docs/source/extras.mdx

Normal file

@ -0,0 +1,72 @@

|

||||

# Extras: Alternative ways to get better model output without RL based fine-tuning

|

||||

|

||||

Within the extras module is the `best-of-n` sampler class that serves as an alternative method of generating better model output.

|

||||

As to how it fares against the RL based fine-tuning, please look in the `examples` directory for a comparison example

|

||||

|

||||

## Usage

|

||||

|

||||

To get started quickly, instantiate an instance of the class with a model, a length sampler, a tokenizer and a callable that serves as a proxy reward pipeline that outputs reward scores for input queries

|

||||

|

||||

```python

|

||||

|

||||

from transformers import pipeline, AutoTokenizer

|

||||

from trl import AutoModelForCausalLMWithValueHead

|

||||

from trl.core import LengthSampler

|

||||

from trl.extras import BestOfNSampler

|

||||

|

||||

ref_model = AutoModelForCausalLMWithValueHead.from_pretrained(ref_model_name)

|

||||

reward_pipe = pipeline("sentiment-analysis", model=reward_model, device=device)

|

||||

tokenizer = AutoTokenizer.from_pretrained(ref_model_name)

|

||||

tokenizer.pad_token = tokenizer.eos_token

|

||||

|

||||

|

||||

# callable that takes a list of raw text and returns a list of corresponding reward scores

|

||||

def queries_to_scores(list_of_strings):

|

||||

return [output["score"] for output in reward_pipe(list_of_strings)]

|

||||

|

||||

best_of_n = BestOfNSampler(model, tokenizer, queries_to_scores, length_sampler=output_length_sampler)

|

||||

|

||||

|

||||

```

|

||||

|

||||

And assuming you have a list/tensor of tokenized queries, you can generate better output by calling the `generate` method

|

||||

|

||||

```python

|

||||

|

||||

best_of_n.generate(query_tensors, device=device, **gen_kwargs)

|

||||

|

||||

```

|

||||

The default sample size is 4, but you can change it at the time of instance initialization like so

|

||||

|

||||

```python

|

||||

|

||||

best_of_n = BestOfNSampler(model, tokenizer, queries_to_scores, length_sampler=output_length_sampler, sample_size=8)

|

||||

|

||||

```

|

||||

|

||||

The default output is the result of taking the top scored output for each query, but you can change it to top 2 and so on by passing the `n_candidates` argument at the time of instance initialization

|

||||

|

||||

```python

|

||||

|

||||

best_of_n = BestOfNSampler(model, tokenizer, queries_to_scores, length_sampler=output_length_sampler, n_candidates=2)

|

||||

|

||||

```

|

||||

|

||||

There is the option of setting the generation settings (like `temperature`, `pad_token_id`) at the time of instance creation as opposed to when calling the `generate` method.

|

||||

This is done by passing a `GenerationConfig` from the `transformers` library at the time of initialization

|

||||

|

||||

```python

|

||||

|

||||

from transformers import GenerationConfig

|

||||

|

||||

generation_config = GenerationConfig(min_length= -1, top_k=0.0, top_p= 1.0, do_sample= True, pad_token_id=tokenizer.eos_token_id)

|

||||

|

||||

best_of_n = BestOfNSampler(model, tokenizer, queries_to_scores, length_sampler=output_length_sampler, generation_config=generation_config)

|

||||

|

||||

best_of_n.generate(query_tensors, device=device)

|

||||

|

||||

```

|

||||

|

||||

Furthermore, at the time of initialization you can set the seed to control repeatability of the generation process and the number of samples to generate for each query

|

||||

|

||||

|

||||

29

docs/source/logging.mdx

Normal file

29

docs/source/logging.mdx

Normal file

@ -0,0 +1,29 @@

|

||||

# Logging

|

||||

|

||||

As reinforcement learning algorithms are historically challenging to debug, it's important to pay careful attention to logging.

|

||||

By default, the TRL [`PPOTrainer`] saves a lot of relevant information to `wandb` or `tensorboard`.

|

||||

|

||||

Upon initialization, pass one of these two options to the [`PPOConfig`]:

|

||||

```

|

||||

config = PPOConfig(

|

||||

model_name=args.model_name,

|

||||

log_with=`wandb`, # or `tensorboard`

|

||||

)

|

||||

```

|

||||

If you want to log with tensorboard, add the kwarg `project_kwargs={"logging_dir": PATH_TO_LOGS}` to the PPOConfig.

|

||||

|

||||

## PPO Logging

|

||||

|

||||

### Crucial values

|

||||

During training, many values are logged, here are the most important ones:

|

||||

|

||||

1. `env/reward_mean`,`env/reward_std`, `env/reward_dist`: the properties of the reward distribution from the "environment".

|

||||

2. `ppo/mean_scores`: The mean scores directly out of the reward model.

|

||||

3. `ppo/mean_non_score_reward`: The mean negated KL penalty during training (shows the delta between the reference model and the new policy over the batch in the step)

|

||||

|

||||

### Training stability parameters:

|

||||

Here are some parameters that are useful to monitor for stability (when these diverge or collapse to 0, try tuning variables):

|

||||

|

||||

1. `ppo/loss/value`: The value function loss -- will spike / NaN when not going well.

|

||||

2. `ppo/val/clipfrac`: The fraction of clipped values in the value function loss. This is often from 0.3 to 0.6.

|

||||

3. `objective/kl_coef`: The target coefficient with [`AdaptiveKLController`]. Often increases before numerical instabilities.

|

||||

143

docs/source/lora_tuning_peft.mdx

Normal file

143

docs/source/lora_tuning_peft.mdx

Normal file

@ -0,0 +1,143 @@

|

||||

# Examples of using peft with trl to finetune 8-bit models with Low Rank Adaption (LoRA)

|

||||

|

||||

The notebooks and scripts in this examples show how to use Low Rank Adaptation (LoRA) to fine-tune models in a memory efficient manner. Most of PEFT methods supported in peft library but note that some PEFT methods such as Prompt tuning are not supported.

|

||||

For more information on LoRA, see the [original paper](https://arxiv.org/abs/2106.09685).

|

||||

|

||||

Here's an overview of the `peft`-enabled notebooks and scripts in the [trl repository](https://github.com/lvwerra/trl/tree/main/examples):

|

||||

|

||||

| File | Task | Description | Colab link |

|

||||

|---|---| --- |

|

||||

| [`gpt2-sentiment_peft.py`](https://github.com/lvwerra/trl/blob/main/examples/sentiment/scripts/gpt2-sentiment_peft.py) | Sentiment | Same as the sentiment analysis example, but learning a low rank adapter on a 8-bit base model | |

|

||||

| [`cm_finetune_peft_imdb.py`](https://github.com/lvwerra/trl/blob/main/examples/sentiment/scripts/gpt-neox-20b_peft/cm_finetune_peft_imdb.py) | Sentiment | Fine tuning a low rank adapter on a frozen 8-bit model for text generation on the imdb dataset. | |

|

||||

| [`merge_peft_adapter.py`](https://github.com/lvwerra/trl/blob/main/examples/sentiment/scripts/gpt-neox-20b_peft/merge_peft_adapter.py) | 🤗 Hub | Merging of the adapter layers into the base model’s weights and storing these on the hub. | |

|

||||

| [`gpt-neo-20b_sentiment_peft.py`](https://github.com/lvwerra/trl/blob/main/examples/sentiment/scripts/gpt-neox-20b_peft/gpt-neo-20b_sentiment_peft.py) | Sentiment | Sentiment fine-tuning of a low rank adapter to create positive reviews. | |

|

||||

| [`gpt-neo-1b_peft.py`](https://github.com/lvwerra/trl/blob/main/examples/sentiment/scripts/gpt-neo-1b-multi-gpu/gpt-neo-1b_peft.py) | Sentiment | Sentiment fine-tuning of a low rank adapter to create positive reviews using 2 GPUs. | |

|

||||

| [`stack_llama/rl_training.py`](https://github.com/lvwerra/trl/blob/main/examples/stack_llama/scripts/rl_training.py) | RLHF | Distributed fine-tuning of the 7b parameter LLaMA models with a learned reward model and `peft`. | |

|

||||

| [`stack_llama/reward_modeling.py`](https://github.com/lvwerra/trl/blob/main/examples/stack_llama/scripts/reward_modeling.py) | Reward Modeling | Distributed training of the 7b parameter LLaMA reward model with `peft`. | |

|

||||

| [`stack_llama/supervised_finetuning.py`](https://github.com/lvwerra/trl/blob/main/examples/stack_llama/scripts/supervised_finetuning.py) | SFT | Distributed instruction/supervised fine-tuning of the 7b parameter LLaMA model with `peft`. | |

|

||||

|

||||

## Installation

|

||||

Note: peft is in active development, so we install directly from their Github page.

|

||||

Peft also relies on the latest version of transformers.

|

||||

|

||||

```bash

|

||||

pip install trl[peft]

|

||||

pip install bitsandbytes loralib

|

||||

pip install git+https://github.com/huggingface/transformers.git@main

|

||||

#optional: wandb

|

||||

pip install wandb

|

||||

```

|

||||

|

||||

Note: if you don't want to log with `wandb` remove `log_with="wandb"` in the scripts/notebooks. You can also replace it with your favourite experiment tracker that's [supported by `accelerate`](https://huggingface.co/docs/accelerate/usage_guides/tracking).

|

||||

|

||||

## How to use it?

|

||||

|

||||

Simply declare a `PeftConfig` object in your script and pass it through `.from_pretrained` to load the TRL+PEFT model.

|

||||

|

||||

```python

|

||||

from peft import LoraConfig

|

||||

from trl import AutoModelForCausalLMWithValueHead

|

||||

|

||||

model_id = "edbeeching/gpt-neo-125M-imdb"

|

||||

lora_config = LoraConfig(

|

||||

r=16,

|

||||

lora_alpha=32,

|

||||

lora_dropout=0.05,

|

||||

bias="none",

|

||||

task_type="CAUSAL_LM",

|

||||

)

|

||||

|

||||

model = AutoModelForCausalLMWithValueHead.from_pretrained(

|

||||

model_id,

|

||||

peft_config=lora_config,

|

||||

)

|

||||

```

|

||||

And if you want to load your model in 8bit precision:

|

||||

```python

|

||||

pretrained_model = AutoModelForCausalLMWithValueHead.from_pretrained(

|

||||

config.model_name,

|

||||

load_in_8bit=True,

|

||||

peft_config=lora_config,

|

||||

)

|

||||

```

|

||||

... or in 4bit precision:

|

||||

```python

|

||||

pretrained_model = AutoModelForCausalLMWithValueHead.from_pretrained(

|

||||

config.model_name,

|

||||

peft_config=lora_config,

|

||||

load_in_4bit=True,

|

||||

)

|

||||

```

|

||||

|

||||

|

||||

## Launch scripts

|

||||

|

||||

The `trl` library is powered by `accelerate`. As such it is best to configure and launch trainings with the following commands:

|

||||

|

||||

```bash

|

||||

accelerate config # will prompt you to define the training configuration

|

||||

accelerate launch scripts/gpt2-sentiment_peft.py # launches training

|

||||

```

|

||||

|

||||

## Using `trl` + `peft` and Data Parallelism

|

||||

|

||||

You can scale up to as many GPUs as you want, as long as you are able to fit the training process in a single device. The only tweak you need to apply is to load the model as follows:

|

||||

```python

|

||||

from peft import LoraConfig

|

||||

...

|

||||

|

||||

lora_config = LoraConfig(

|

||||

r=16,

|

||||

lora_alpha=32,

|

||||

lora_dropout=0.05,

|

||||

bias="none",

|

||||

task_type="CAUSAL_LM",

|

||||

)

|

||||

|

||||

pretrained_model = AutoModelForCausalLMWithValueHead.from_pretrained(

|

||||

config.model_name,

|

||||

peft_config=lora_config,

|

||||

)

|

||||

```

|

||||

And if you want to load your model in 8bit precision:

|

||||

```python

|

||||

pretrained_model = AutoModelForCausalLMWithValueHead.from_pretrained(

|

||||

config.model_name,

|

||||

peft_config=lora_config,

|

||||

load_in_8bit=True,

|

||||

)

|

||||

```

|

||||

... or in 4bit precision:

|

||||

```python

|

||||

pretrained_model = AutoModelForCausalLMWithValueHead.from_pretrained(

|

||||

config.model_name,

|

||||

peft_config=lora_config,

|

||||

load_in_4bit=True,

|

||||

)

|

||||

```

|

||||

Finally, make sure that the rewards are computed on correct device as well, for that you can use `ppo_trainer.model.current_device`.

|

||||

|

||||

## Naive pipeline parallelism (NPP) for large models (>60B models)

|

||||

|

||||

The `trl` library also supports naive pipeline parallelism (NPP) for large models (>60B models). This is a simple way to parallelize the model across multiple GPUs.

|

||||

This paradigm, termed as "Naive Pipeline Parallelism" (NPP) is a simple way to parallelize the model across multiple GPUs. We load the model and the adapters across multiple GPUs and the activations and gradients will be naively communicated across the GPUs. This supports `int8` models as well as other `dtype` models.

|

||||

|

||||

<div style="text-align: center">

|

||||

<img src="https://huggingface.co/datasets/trl-internal-testing/example-images/resolve/main/images/trl-npp.png">

|

||||

</div>

|

||||

|

||||

### How to use NPP?

|

||||

|

||||

Simply load your model with a custom `device_map` argument on the `from_pretrained` to split your model across multiple devices. Check out this [nice tutorial](https://github.com/huggingface/blog/blob/main/accelerate-large-models.md) on how to properly create a `device_map` for your model.

|

||||

|

||||

Also make sure to have the `lm_head` module on the first GPU device as it may throw an error if it is not on the first device. As this time of writing, you need to install the `main` branch of `accelerate`: `pip install git+https://github.com/huggingface/accelerate.git@main` and `peft`: `pip install git+https://github.com/huggingface/peft.git@main`.

|

||||

|

||||

That all you need to do to use NPP. Check out the [gpt-neo-1b_peft.py](https://github.com/lvwerra/trl/blob/main/examples/sentiment/scripts/gpt-neo-1b-multi-gpu/gpt-neo-1b_peft.py) example for a more details usage of NPP.

|

||||

|

||||

### Launch scripts

|

||||

|

||||

Although `trl` library is powered by `accelerate`, you should run your training script in a single process. Note that we do not support Data Parallelism together with NPP yet.

|

||||

|

||||

```bash

|

||||

python PATH_TO_SCRIPT

|

||||

```

|

||||

100

docs/source/multi_adapter_rl.mdx

Normal file

100

docs/source/multi_adapter_rl.mdx

Normal file

@ -0,0 +1,100 @@

|

||||

# Multi Adapter RL (MARL) - a single base model for everything

|

||||

|

||||

Here we present an approach that uses a single base model for the entire PPO algorithm - which includes retrieving the reference logits, computing the active logits and the rewards. This feature is experimental as we did not tested the convergence of the approach. We encourage the community to let us know if they potentially face into any issue.

|

||||

|

||||

## Requirements

|

||||

|

||||

You just need to install `peft` and optionally install `bitsandbytes` as well if you want to go for 8bit base models, for more memory efficient finetuning.

|

||||

|

||||

## Summary

|

||||

|

||||

You need to address this approach in three stages that we summarize as follows:

|

||||

|

||||

1- Train a base model on the target domain (e.g. `imdb` dataset) - this is the Supervised Fine Tuning stage - it can leverage the `SFTTrainer` from TRL.

|

||||

2- Train a reward model using `peft`. This is required in order to re-use the adapter during the RL optimisation process (step 3 below). We show an example of leveraging the `RewardTrainer` from TRL in [this example](https://github.com/lvwerra/trl/tree/main/examples/0-abstraction-RL/reward_modeling.py)

|

||||

3- Fine tune new adapters on the base model using PPO and the reward adapter. ("0 abstraction RL")

|

||||

|

||||

Make sure to use the same model (i.e. same architecure and same weights) for the stages 2 & 3.

|

||||

|

||||

## Quickstart

|

||||

|

||||

Let us assume you have trained your reward adapter on `llama-7b` model using `RewardTrainer` and pushed the weights on the hub under `trl-lib/llama-7b-hh-rm-adapter`.

|

||||

When doing PPO, before passing the model to `PPOTrainer` create your model as follows:

|

||||

|

||||

```python

|

||||

model_name = "huggyllama/llama-7b"

|

||||

rm_adapter_id = "trl-lib/llama-7b-hh-rm-adapter"

|

||||

|

||||

# PPO adapter

|

||||

lora_config = LoraConfig(

|

||||

r=16,

|

||||

lora_alpha=32,

|

||||

lora_dropout=0.05,

|

||||

bias="none",

|

||||

task_type="CAUSAL_LM",

|

||||

)

|

||||

|

||||

model = AutoModelForCausalLMWithValueHead.from_pretrained(

|

||||

model_name,

|

||||

peft_config=lora_config,

|

||||

reward_adapter=rm_adapter_id,

|

||||

)

|

||||

|

||||

...

|

||||

trainer = PPOTrainer(

|

||||

model=model,

|

||||

...

|

||||

)

|

||||

|

||||

...

|

||||

```

|

||||

Then inside your PPO training loop, call the `compute_reward_score` method by accessing to the `model` attribute from `PPOTrainer`.

|

||||

|

||||

```python

|

||||

rewards = trainer.model.compute_reward_score(**inputs)

|

||||

```

|

||||

|

||||

## Advanced usage

|

||||

|

||||

### Control on the adapter name

|

||||

|

||||

If you are familiar with the `peft` library, you know that you can use multiple adapters inside the same model. What you can do is to train multiple adapters on the same base model to fine-tune on different policies.

|

||||

In this case, you want to have a control on the adapter name you want to activate back, after retrieving the reward. For that, simply pass the appropriate `adapter_name` to `ppo_adapter_name` argument when calling `compute_reward_score`.

|

||||

|

||||

```python

|

||||

adapter_name_policy_1 = "policy_1"

|

||||

rewards = trainer.model.compute_reward_score(**inputs, ppo_adapter_name=adapter_name_policy_1)

|

||||

...

|

||||

```

|

||||

|

||||

### Using 4-bit and 8-bit base models

|

||||

|

||||

For more memory efficient fine-tuning, you can load your base model in 8-bit or 4-bit while keeping the adapters in the default precision (float32).

|

||||

Just pass the appropriate arguments (i.e. `load_in_8bit=True` or `load_in_4bit=True`) to `AutoModelForCausalLMWithValueHead.from_pretrained` as follows (assuming you have installed `bitsandbytes`):

|

||||

```python

|

||||

model_name = "llama-7b"

|

||||

rm_adapter_id = "trl-lib/llama-7b-hh-rm-adapter"

|

||||

|

||||

# PPO adapter

|

||||

lora_config = LoraConfig(

|

||||

r=16,

|

||||

lora_alpha=32,

|

||||

lora_dropout=0.05,

|

||||

bias="none",

|

||||

task_type="CAUSAL_LM",

|

||||

)

|

||||

|

||||

model = AutoModelForCausalLMWithValueHead.from_pretrained(

|

||||

model_name,

|

||||

peft_config=lora_config,

|

||||

reward_adapter=rm_adapter_id,

|

||||

load_in_8bit=True,

|

||||

)

|

||||

|

||||

...

|

||||

trainer = PPOTrainer(

|

||||

model=model,

|

||||

...

|

||||

)

|

||||

...

|

||||

```

|

||||

@ -19,30 +19,40 @@ The following code illustrates the steps above.

|

||||

# 0. imports

|

||||

import torch

|

||||

from transformers import GPT2Tokenizer

|

||||

from trl import PPOTrainer, PPOConfig, AutoModelForCausalLMWithValueHead, create_reference_model

|

||||

from trl.core import respond_to_batch

|

||||

|

||||

from trl import AutoModelForCausalLMWithValueHead, PPOConfig, PPOTrainer

|

||||

|

||||

|

||||

# 1. load a pretrained model

|

||||

model = AutoModelForCausalLMWithValueHead.from_pretrained('gpt2')

|

||||

model_ref = AutoModelForCausalLMWithValueHead.from_pretrained('gpt2')

|

||||

tokenizer = GPT2Tokenizer.from_pretrained('gpt2')

|

||||

model = AutoModelForCausalLMWithValueHead.from_pretrained("gpt2")

|

||||

model_ref = AutoModelForCausalLMWithValueHead.from_pretrained("gpt2")

|

||||

tokenizer = GPT2Tokenizer.from_pretrained("gpt2")

|

||||

tokenizer.pad_token = tokenizer.eos_token

|

||||

|

||||

# 2. initialize trainer

|

||||

ppo_config = {'batch_size': 1}

|

||||

ppo_config = {"batch_size": 1}

|

||||

config = PPOConfig(**ppo_config)

|

||||

ppo_trainer = PPOTrainer(config, model, model_ref, tokenizer)

|

||||

|

||||

# 3. encode a query

|

||||

query_txt = "This morning I went to the "

|

||||

query_tensor = tokenizer.encode(query_txt, return_tensors="pt")

|

||||

query_tensor = tokenizer.encode(query_txt, return_tensors="pt").to(model.pretrained_model.device)

|

||||

|

||||

# 4. generate model response

|

||||

response_tensor = respond_to_batch(model, query_tensor)

|

||||

response_txt = tokenizer.decode(response_tensor[0,:])

|

||||

generation_kwargs = {

|

||||

"min_length": -1,

|

||||

"top_k": 0.0,

|

||||

"top_p": 1.0,

|

||||

"do_sample": True,

|

||||

"pad_token_id": tokenizer.eos_token_id,

|

||||

"max_new_tokens": 20,

|

||||

}

|

||||

response_tensor = ppo_trainer.generate([item for item in query_tensor], return_prompt=False, **generation_kwargs)

|

||||

response_txt = tokenizer.decode(response_tensor[0])

|

||||

|

||||

# 5. define a reward for response

|

||||

# (this could be any reward such as human feedback or output from another model)

|

||||

reward = [torch.tensor(1.0)]

|

||||

reward = [torch.tensor(1.0, device=model.pretrained_model.device)]

|

||||

|

||||

# 6. train model with ppo

|

||||

train_stats = ppo_trainer.step([query_tensor[0]], [response_tensor[0]], reward)

|

||||

|

||||

61

docs/source/reward_trainer.mdx

Normal file

61

docs/source/reward_trainer.mdx

Normal file

@ -0,0 +1,61 @@

|

||||

# Reward Modeling

|

||||

|

||||

TRL supports custom reward modeling for anyone to perform reward modeling on their dataset and model.

|

||||

|

||||

## Expected dataset format

|

||||

|

||||

The reward trainer expects a very specific format for the dataset. Since the model will be trained to predict which sentence is the most relevant, given two sentences. We provide an example from the [`Anthropic/hh-rlhf`](https://huggingface.co/datasets/Anthropic/hh-rlhf) dataset below:

|

||||

|

||||

<div style="text-align: center">

|

||||

<img src="https://huggingface.co/datasets/trl-internal-testing/example-images/resolve/main/images/rlhf-antropic-example.png", width="50%">

|

||||

</div>

|

||||

|

||||

Therefore the final dataset object should contain two 4 entries at least if you use the default `RewardDataCollatorWithPadding` data collator. The entries should be named:

|

||||

|

||||

- `input_ids_chosen`

|

||||

- `attention_mask_chosen`

|

||||

- `input_ids_rejected`

|

||||

- `attention_mask_rejected`

|

||||

|

||||

The `j` and `k` suffixes are used to denote the two sentences in the paired dataset.

|

||||

|

||||

## Using the `RewardTrainer`

|

||||

|

||||

After standardizing your dataset, you can use the `RewardTrainer` as a classic HugingFace Trainer.

|

||||

You should pass an `AutoModelForSequenceClassification` model to the `RewardTrainer`.

|

||||

|

||||

### Leveraging the `peft` library to train a reward model

|

||||

|

||||

Just pass a `peft_config` in the key word arguments of `RewardTrainer`, and the trainer should automatically take care of converting the model into a PEFT model!

|

||||

|

||||

```python

|

||||

from peft import LoraConfig, task_type

|

||||

from transformers import AutoModelForSequenceClassification, AutoTokenizer, TrainingArguments

|

||||

from trl import RewardTrainer

|

||||

|

||||

model = AutoModelForSequenceClassification.from_pretrained("gpt2")

|

||||

peft_config = LoraConfig(

|

||||

task_type=TaskType.SEQ_CLS,

|

||||

inference_mode=False,

|

||||

r=8,

|

||||

lora_alpha=32,

|

||||

lora_dropout=0.1,

|

||||

)

|

||||

|

||||

...

|

||||

|

||||

trainer = RewardTrainer(

|

||||

model=model,

|

||||

args=training_args,

|

||||

tokenizer=tokenizer,

|

||||

train_dataset=dataset,

|

||||

peft_config=peft_config,

|

||||

)

|

||||

|

||||

trainer.train()

|

||||

|

||||

```

|

||||

|

||||

## RewardTrainer

|

||||

|

||||

[[autodoc]] RewardTrainer

|

||||

@ -1,82 +0,0 @@

|

||||

# Examples of using peft and trl to finetune 8-bit models with Low Rank Adaption

|

||||

|

||||

The notebooks and scripts in this examples show how to fine-tune a model with a sentiment classifier (such as `lvwerra/distilbert-imdb`).

|

||||

|

||||

Here's an overview of the notebooks and scripts in the [trl repository](https://github.com/lvwerra/trl/tree/main/examples):

|

||||

|

||||

| File | Description | Colab link |

|

||||

|---|---| --- |

|

||||

| [`gpt2-sentiment_peft.py`](https://github.com/lvwerra/trl/blob/main/examples/sentiment/scripts/gpt2-sentiment_peft.py) | Same as the sentiment analysis example, but learning a low rank adapter on a 8-bit base model | |

|

||||

| [`cm_finetune_peft_imdb.py`](https://github.com/lvwerra/trl/blob/main/examples/sentiment/scripts/gpt-neox-20b_peft/cm_finetune_peft_imdb.py) | Fine tuning a Low Rank Adapter on a frozen 8-bit model for text generation on the imdb dataset. | |

|

||||

| [`merge_peft_adapter.py`](https://github.com/lvwerra/trl/blob/main/examples/sentiment/scripts/gpt-neox-20b_peft/merge_peft_adapter.py) | Merging of the adapter layers into the base model’s weights and storing these on the hub. | |

|

||||

| [`gpt-neo-20b_sentiment_peft.py`](https://github.com/lvwerra/trl/blob/main/examples/sentiment/scripts/gpt-neox-20b_peft/gpt-neo-20b_sentiment_peft.py) | Sentiment fine-tuning of a Low Rank Adapter to create positive reviews. | |

|

||||

| [`gpt-neo-1b_peft.py`](https://github.com/lvwerra/trl/blob/main/examples/sentiment/scripts/gpt-neo-1b-multi-gpu/gpt-neo-1b_peft.py) | Sentiment fine-tuning of a Low Rank Adapter to create positive reviews using 2 GPUs. | |

|

||||

|

||||

## Installation

|

||||

Note: peft is in active development, so we install directly from their github page.

|

||||

Peft also relies on the latest version of transformers.

|

||||

|

||||

```bash

|

||||

pip install trl[peft]

|

||||

pip install bitsandbytes loralib

|

||||

pip install git+https://github.com/huggingface/transformers.git@main

|

||||

#optional: wandb

|

||||

pip install wandb

|

||||

```

|

||||

|

||||

Note: if you don't want to log with `wandb` remove `log_with="wandb"` in the scripts/notebooks. You can also replace it with your favourite experiment tracker that's [supported by `accelerate`](https://huggingface.co/docs/accelerate/usage_guides/tracking).

|

||||

|

||||

|

||||

## Launch scripts

|

||||

|

||||

The `trl` library is powered by `accelerate`. As such it is best to configure and launch trainings with the following commands:

|

||||

|

||||

```bash

|

||||

accelerate config # will prompt you to define the training configuration

|

||||

accelerate launch scripts/gpt2-sentiment_peft.py # launches training

|

||||

```

|

||||

|

||||

## Using `trl` + `peft` and Data Parallelism

|

||||

|

||||

You can scale up to as many GPUs as you want, as long as you are able to fit the training process in a single device. The only tweak you need to apply is to load the model as follows:

|

||||

```python

|

||||

from accelerate import Accelerator

|

||||

...

|

||||

|

||||

current_device = Accelerator().process_index

|

||||

|

||||

pretrained_model = AutoModelForCausalLM.from_pretrained(

|

||||

config.model_name, load_in_8bit=True, device_map={"": current_device}

|

||||

)

|

||||

```

|

||||

The reason behind `device_map={"": current_device}` is that when you set `"":device_number`, `accelerate` will set the entire model on the `device_number` device. Therefore this trick enables to set the model on the correct device for each process.

|

||||

|

||||

As the `Accelerator` object from `accelerate` will take care of initializing the distributed setup correctly.

|

||||

Make sure to initialize your accelerate config by specifying that you are training in a multi-gpu setup, by running `accelerate config` and make sure to run the training script with `accelerator launch your_script.py`.

|

||||

|

||||

Finally make sure that the rewards are computed on `current_device` as well.

|

||||

|

||||

## Naive pipeline parallelism (NPP) for large models (>60B models)

|

||||

|

||||

The `trl` library also supports naive pipeline parallelism (NPP) for large models (>60B models). This is a simple way to parallelize the model across multiple GPUs.

|

||||

This paradigm, termed as "Naive Pipeline Parallelism" (NPP) is a simple way to parallelize the model across multiple GPUs. We load the model and the adapters across multiple GPUs and the activations and gradients will be naively communicated across the GPUs. This supports `int8` models as well as other `dtype` models.

|

||||

|

||||

<div style="text-align: center">

|

||||

<img src="https://huggingface.co/datasets/trl-internal-testing/example-images/resolve/main/images/trl-npp.png">

|

||||

</div>

|

||||

|

||||

### How to use NPP?

|

||||

|

||||

Simply load your model with a custom `device_map` argument on the `from_pretrained` to split your model across multiple devices. Check out this [nice tutorial](https://github.com/huggingface/blog/blob/main/accelerate-large-models.md) on how to properly create a `device_map` for your model.

|

||||

|

||||

Also make sure to have the `lm_head` module on the first GPU device as it may throw an error if it is not on the first device. As this time of writing, you need to install the `main` branch of `accelerate`: `pip install git+https://github.com/huggingface/accelerate.git@main` and `peft`: `pip install git+https://github.com/huggingface/peft.git@main`.

|

||||

|

||||

That all you need to do to use NPP. Check out the [gpt-neo-1b_peft.py](https://github.com/lvwerra/trl/blob/main/examples/sentiment/scripts/gpt-neo-1b-multi-gpu/gpt-neo-1b_peft.py) example for a more details usage of NPP.

|

||||

|

||||

### Launch scripts

|

||||

|

||||

Although `trl` library is powered by `accelerate`, you should run your training script in a single process. Note that we do not support Data Parallelism together with NPP yet.

|