mirror of

https://github.com/huggingface/trl.git

synced 2025-10-20 18:43:52 +08:00

Compare commits

106 Commits

| Author | SHA1 | Date | |

|---|---|---|---|

| 4c71daf461 | |||

| c1e9ea8ecf | |||

| f66282424f | |||

| 14ef1aba15 | |||

| 6138439df4 | |||

| d57a181163 | |||

| 73c3970c1f | |||

| 013a32b396 | |||

| 24fb32733f | |||

| bb56c6e6af | |||

| 06be6f409a | |||

| b2696578ce | |||

| 0ce3b65928 | |||

| e155cb8a66 | |||

| ea7a1be92c | |||

| 110d0884c7 | |||

| 57ba9b93aa | |||

| 0de75b26f2 | |||

| e615974a03 | |||

| c2bb1eed14 | |||

| 9c376c571f | |||

| 16994738d0 | |||

| 99225bb6d6 | |||

| 88be2c07e5 | |||

| f2349d2af0 | |||

| d843b3dadd | |||

| 84dab850f6 | |||

| 92f6d246d3 | |||

| 31b7820aad | |||

| b9aa965cce | |||

| a67f2143c3 | |||

| 494b4afa10 | |||

| 02f4e750c0 | |||

| 2ba3005d1c | |||

| 7e394b03e8 | |||

| 14f3613dac | |||

| 5e24101b36 | |||

| b81a6121c3 | |||

| 7f0d246235 | |||

| 70036bf87f | |||

| d0aa421e5e | |||

| 5375d71bbd | |||

| 6004e033a4 | |||

| f436c3e1c9 | |||

| cd1aa6bdcc | |||

| b3f93f0bad | |||

| 6c32c8bfcd | |||

| 3107a40f16 | |||

| 419791695c | |||

| 7e5924d17e | |||

| ed9ea74b62 | |||

| 511c92c91c | |||

| c6cb6353a5 | |||

| adb3e0560b | |||

| adf58d80d0 | |||

| 9aa022503c | |||

| 82ad390caf | |||

| ac038ef03a | |||

| 51ca76b749 | |||

| 7005ab4d11 | |||

| ffb1ab74ba | |||

| 47d08a9626 | |||

| 70327c18e6 | |||

| f05c3fa8fc | |||

| 4799ba4842 | |||

| d45c86e2a7 | |||

| c6b0d1358b | |||

| 3321084e30 | |||

| a9cffc7caf | |||

| 32a928cfc2 | |||

| 1a3bb372ac | |||

| d4564b7c64 | |||

| 1be4d86ccc | |||

| 78249d9de4 | |||

| 5c21de30ae | |||

| 0a566f0c58 | |||

| de3876577c | |||

| 1201aa61b4 | |||

| c00722ce0a | |||

| 124189c86a | |||

| d5eeaab462 | |||

| 5368be1e1e | |||

| b169e1030d | |||

| 9af4734178 | |||

| a0d714949f | |||

| a0e28143ec | |||

| 32d9d34eb1 | |||

| fb1b48fdbe | |||

| b5e4bc5984 | |||

| 7a24565d9d | |||

| 44a06fc487 | |||

| a84fc5d815 | |||

| 80038a5a92 | |||

| cece86b182 | |||

| d005980d8b | |||

| cc23b511e4 | |||

| 2cad48d511 | |||

| 6859e048da | |||

| 92eea1f239 | |||

| 663002f609 | |||

| 44d998b2af | |||

| 9b80f3d50c | |||

| 2038e52c30 | |||

| 10c2f63b2a | |||

| 9fb871f62f | |||

| 3cec013a20 |

2

.github/ISSUE_TEMPLATE/bug-report.yml

vendored

@@ -15,7 +15,7 @@ body:

|

||||

id: system-info

|

||||

attributes:

|

||||

label: System Info

|

||||

description: Please share your system info with us. You can run the command `transformers-cli env` and copy-paste its output below.

|

||||

description: Please share your system info with us. You can run the command `trl env` and copy-paste its output below.

|

||||

placeholder: trl version, transformers version, platform, python version, ...

|

||||

validations:

|

||||

required: true

|

||||

|

||||

27

.github/workflows/stale.yml

vendored

@@ -1,27 +0,0 @@

|

||||

name: Stale Bot

|

||||

|

||||

on:

|

||||

schedule:

|

||||

- cron: "0 15 * * *"

|

||||

|

||||

jobs:

|

||||

close_stale_issues:

|

||||

name: Close Stale Issues

|

||||

if: github.repository == 'huggingface/trl'

|

||||

runs-on: ubuntu-latest

|

||||

env:

|

||||

GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

|

||||

steps:

|

||||

- uses: actions/checkout@v4

|

||||

|

||||

- name: Setup Python

|

||||

uses: actions/setup-python@v5

|

||||

with:

|

||||

python-version: 3.8

|

||||

|

||||

- name: Install requirements

|

||||

run: |

|

||||

pip install PyGithub

|

||||

- name: Close stale issues

|

||||

run: |

|

||||

python scripts/stale.py

|

||||

46

.github/workflows/tests-main.yml

vendored

@@ -1,46 +0,0 @@

|

||||

name: tests on transformers PEFT main

|

||||

|

||||

on:

|

||||

push:

|

||||

branches: [ main ]

|

||||

|

||||

env:

|

||||

CI_SLACK_CHANNEL: ${{ secrets.CI_PUSH_MAIN_CHANNEL }}

|

||||

|

||||

jobs:

|

||||

tests:

|

||||

strategy:

|

||||

matrix:

|

||||

python-version: ['3.9', '3.10', '3.11']

|

||||

os: ['ubuntu-latest', 'windows-latest']

|

||||

fail-fast: false

|

||||

runs-on: ${{ matrix.os }}

|

||||

steps:

|

||||

- uses: actions/checkout@v4

|

||||

- name: Set up Python ${{ matrix.python-version }}

|

||||

uses: actions/setup-python@v5

|

||||

with:

|

||||

python-version: ${{ matrix.python-version }}

|

||||

cache: "pip"

|

||||

cache-dependency-path: |

|

||||

setup.py

|

||||

requirements.txt

|

||||

- name: Install dependencies

|

||||

run: |

|

||||

python -m pip install --upgrade pip

|

||||

# install PEFT & transformers from source

|

||||

pip install -U git+https://github.com/huggingface/peft.git

|

||||

pip install -U git+https://github.com/huggingface/transformers.git

|

||||

# cpu version of pytorch

|

||||

pip install ".[test, diffusers]"

|

||||

- name: Test with pytest

|

||||

run: |

|

||||

make test

|

||||

- name: Post to Slack

|

||||

if: always()

|

||||

uses: huggingface/hf-workflows/.github/actions/post-slack@main

|

||||

with:

|

||||

slack_channel: ${{ env.CI_SLACK_CHANNEL }}

|

||||

title: 🤗 Results of the TRL CI on transformers/PEFT main

|

||||

status: ${{ job.status }}

|

||||

slack_token: ${{ secrets.SLACK_CIFEEDBACK_BOT_TOKEN }}

|

||||

177

.github/workflows/tests.yml

vendored

@@ -1,88 +1,163 @@

|

||||

name: tests

|

||||

name: Tests

|

||||

|

||||

on:

|

||||

push:

|

||||

branches: [ main ]

|

||||

pull_request:

|

||||

branches: [ main ]

|

||||

paths:

|

||||

# Run only when relevant files are modified

|

||||

- "trl/**.py"

|

||||

- ".github/**.yml"

|

||||

- "examples/**.py"

|

||||

- "scripts/**.py"

|

||||

- ".github/**.yml"

|

||||

- "tests/**.py"

|

||||

- "trl/**.py"

|

||||

- "setup.py"

|

||||

|

||||

env:

|

||||

TQDM_DISABLE: 1

|

||||

CI_SLACK_CHANNEL: ${{ secrets.CI_PUSH_MAIN_CHANNEL }}

|

||||

|

||||

jobs:

|

||||

check_code_quality:

|

||||

name: Check code quality

|

||||

runs-on: ubuntu-latest

|

||||

strategy:

|

||||

matrix:

|

||||

python-version: [3.9]

|

||||

|

||||

steps:

|

||||

- uses: actions/checkout@v4

|

||||

with:

|

||||

fetch-depth: 0

|

||||

submodules: recursive

|

||||

- name: Set up Python ${{ matrix.python-version }}

|

||||

- name: Set up Python 3.12

|

||||

uses: actions/setup-python@v5

|

||||

with:

|

||||

python-version: ${{ matrix.python-version }}

|

||||

python-version: 3.12

|

||||

- uses: pre-commit/action@v3.0.1

|

||||

with:

|

||||

extra_args: --all-files

|

||||

|

||||

tests:

|

||||

needs: check_code_quality

|

||||

name: Tests

|

||||

strategy:

|

||||

matrix:

|

||||

python-version: ['3.9', '3.10', '3.11']

|

||||

python-version: ['3.9', '3.10', '3.11', '3.12']

|

||||

os: ['ubuntu-latest', 'windows-latest']

|

||||

fail-fast: false

|

||||

runs-on: ${{ matrix.os }}

|

||||

steps:

|

||||

- uses: actions/checkout@v4

|

||||

- name: Set up Python ${{ matrix.python-version }}

|

||||

uses: actions/setup-python@v5

|

||||

with:

|

||||

python-version: ${{ matrix.python-version }}

|

||||

cache: "pip"

|

||||

cache-dependency-path: |

|

||||

setup.py

|

||||

requirements.txt

|

||||

- name: Install dependencies

|

||||

run: |

|

||||

python -m pip install --upgrade pip

|

||||

# install PEFT & transformers from source

|

||||

pip install -U git+https://github.com/huggingface/peft.git

|

||||

pip install -U git+https://github.com/huggingface/transformers.git

|

||||

# cpu version of pytorch

|

||||

pip install ".[test, diffusers]"

|

||||

- name: Test with pytest

|

||||

run: |

|

||||

make test

|

||||

- uses: actions/checkout@v4

|

||||

- name: Set up Python ${{ matrix.python-version }}

|

||||

uses: actions/setup-python@v5

|

||||

with:

|

||||

python-version: ${{ matrix.python-version }}

|

||||

cache: "pip"

|

||||

cache-dependency-path: |

|

||||

setup.py

|

||||

requirements.txt

|

||||

|

||||

tests_no_optional_dep:

|

||||

needs: check_code_quality

|

||||

- name: Install dependencies

|

||||

run: |

|

||||

python -m pip install --upgrade pip

|

||||

python -m pip install ".[dev]"

|

||||

- name: Test with pytest

|

||||

run: |

|

||||

make test

|

||||

- name: Post to Slack

|

||||

if: github.ref == 'refs/heads/main' && always() # Check if the branch is main

|

||||

uses: huggingface/hf-workflows/.github/actions/post-slack@main

|

||||

with:

|

||||

slack_channel: ${{ env.CI_SLACK_CHANNEL }}

|

||||

title: Results with ${{ matrix.python-version }} on ${{ matrix.os }} with lastest dependencies

|

||||

status: ${{ job.status }}

|

||||

slack_token: ${{ secrets.SLACK_CIFEEDBACK_BOT_TOKEN }}

|

||||

|

||||

tests_dev:

|

||||

name: Tests with dev dependencies

|

||||

runs-on: 'ubuntu-latest'

|

||||

steps:

|

||||

- uses: actions/checkout@v4

|

||||

- name: Set up Python 3.9

|

||||

uses: actions/setup-python@v5

|

||||

with:

|

||||

python-version: '3.9'

|

||||

cache: "pip"

|

||||

cache-dependency-path: |

|

||||

setup.py

|

||||

requirements.txt

|

||||

- name: Install dependencies

|

||||

run: |

|

||||

python -m pip install --upgrade pip

|

||||

# cpu version of pytorch

|

||||

pip install .[test]

|

||||

- name: Test with pytest

|

||||

run: |

|

||||

make test

|

||||

- uses: actions/checkout@v4

|

||||

- name: Set up Python 3.12

|

||||

uses: actions/setup-python@v5

|

||||

with:

|

||||

python-version: '3.12'

|

||||

cache: "pip"

|

||||

cache-dependency-path: |

|

||||

setup.py

|

||||

requirements.txt

|

||||

- name: Install dependencies

|

||||

run: |

|

||||

python -m pip install --upgrade pip

|

||||

python -m pip install -U git+https://github.com/huggingface/accelerate.git

|

||||

python -m pip install -U git+https://github.com/huggingface/datasets.git

|

||||

python -m pip install -U git+https://github.com/huggingface/transformers.git

|

||||

python -m pip install ".[dev]"

|

||||

- name: Test with pytest

|

||||

run: |

|

||||

make test

|

||||

- name: Post to Slack

|

||||

if: github.ref == 'refs/heads/main' && always() # Check if the branch is main

|

||||

uses: huggingface/hf-workflows/.github/actions/post-slack@main

|

||||

with:

|

||||

slack_channel: ${{ env.CI_SLACK_CHANNEL }}

|

||||

title: Results with ${{ matrix.python-version }} on ${{ matrix.os }} with dev dependencies

|

||||

status: ${{ job.status }}

|

||||

slack_token: ${{ secrets.SLACK_CIFEEDBACK_BOT_TOKEN }}

|

||||

|

||||

tests_wo_optional_deps:

|

||||

name: Tests without optional dependencies

|

||||

runs-on: 'ubuntu-latest'

|

||||

steps:

|

||||

- uses: actions/checkout@v4

|

||||

- name: Set up Python 3.12

|

||||

uses: actions/setup-python@v5

|

||||

with:

|

||||

python-version: '3.12'

|

||||

cache: "pip"

|

||||

cache-dependency-path: |

|

||||

setup.py

|

||||

requirements.txt

|

||||

- name: Install dependencies

|

||||

run: |

|

||||

python -m pip install --upgrade pip

|

||||

python -m pip install ".[test]"

|

||||

- name: Test with pytest

|

||||

run: |

|

||||

make test

|

||||

- name: Post to Slack

|

||||

if: github.ref == 'refs/heads/main' && always() # Check if the branch is main

|

||||

uses: huggingface/hf-workflows/.github/actions/post-slack@main

|

||||

with:

|

||||

slack_channel: ${{ env.CI_SLACK_CHANNEL }}

|

||||

title: Results with ${{ matrix.python-version }} on ${{ matrix.os }} without optional dependencies

|

||||

status: ${{ job.status }}

|

||||

slack_token: ${{ secrets.SLACK_CIFEEDBACK_BOT_TOKEN }}

|

||||

|

||||

tests_min_versions:

|

||||

name: Tests with minimum versions

|

||||

runs-on: 'ubuntu-latest'

|

||||

steps:

|

||||

- uses: actions/checkout@v4

|

||||

- name: Set up Python 3.12

|

||||

uses: actions/setup-python@v5

|

||||

with:

|

||||

python-version: '3.12'

|

||||

cache: "pip"

|

||||

cache-dependency-path: |

|

||||

setup.py

|

||||

requirements.txt

|

||||

- name: Install dependencies

|

||||

run: |

|

||||

python -m pip install --upgrade pip

|

||||

python -m pip install accelerate==0.34.0

|

||||

python -m pip install datasets==2.21.0

|

||||

python -m pip install transformers==4.46.0

|

||||

python -m pip install ".[dev]"

|

||||

- name: Test with pytest

|

||||

run: |

|

||||

make test

|

||||

- name: Post to Slack

|

||||

if: github.ref == 'refs/heads/main' && always() # Check if the branch is main

|

||||

uses: huggingface/hf-workflows/.github/actions/post-slack@main

|

||||

with:

|

||||

slack_channel: ${{ env.CI_SLACK_CHANNEL }}

|

||||

title: Results with ${{ matrix.python-version }} on ${{ matrix.os }} with minimum versions

|

||||

status: ${{ job.status }}

|

||||

slack_token: ${{ secrets.SLACK_CIFEEDBACK_BOT_TOKEN }}

|

||||

1

.gitignore

vendored

@@ -1,4 +1,3 @@

|

||||

benchmark/trl

|

||||

*.bak

|

||||

.gitattributes

|

||||

.last_checked

|

||||

|

||||

@@ -17,6 +17,12 @@ authors:

|

||||

family-names: Thrush

|

||||

- given-names: Nathan

|

||||

family-names: Lambert

|

||||

- given-names: Shengyi

|

||||

family-names: Huang

|

||||

- given-names: Kashif

|

||||

family-names: Rasul

|

||||

- given-names: Quentin

|

||||

family-names: Gallouédec

|

||||

repository-code: 'https://github.com/huggingface/trl'

|

||||

abstract: "With trl you can train transformer language models with Proximal Policy Optimization (PPO). The library is built on top of the transformers library by \U0001F917 Hugging Face. Therefore, pre-trained language models can be directly loaded via transformers. At this point, most decoder and encoder-decoder architectures are supported."

|

||||

keywords:

|

||||

@@ -25,4 +31,4 @@ keywords:

|

||||

- pytorch

|

||||

- transformers

|

||||

license: Apache-2.0

|

||||

version: 0.2.1

|

||||

version: 0.11.1

|

||||

|

||||

@@ -20,7 +20,7 @@ There are several ways you can contribute to TRL:

|

||||

* Fix outstanding issues with the existing code.

|

||||

* Submit issues related to bugs or desired new features.

|

||||

* Implement trainers for new post-training algorithms.

|

||||

* Contribute to the examples or to the documentation.

|

||||

* Contribute to the examples or the documentation.

|

||||

|

||||

If you don't know where to start, there is a special [Good First

|

||||

Issue](https://github.com/huggingface/trl/contribute) listing. It will give you a list of

|

||||

@@ -62,7 +62,7 @@ Once you've confirmed the bug hasn't already been reported, please include the f

|

||||

To get the OS and software versions automatically, run the following command:

|

||||

|

||||

```bash

|

||||

transformers-cli env

|

||||

trl env

|

||||

```

|

||||

|

||||

### Do you want a new feature?

|

||||

@@ -74,19 +74,19 @@ If there is a new feature you'd like to see in TRL, please open an issue and des

|

||||

Whatever it is, we'd love to hear about it!

|

||||

|

||||

2. Describe your requested feature in as much detail as possible. The more you can tell us about it, the better we'll be able to help you.

|

||||

3. Provide a *code snippet* that demonstrates the features usage.

|

||||

3. Provide a *code snippet* that demonstrates the feature's usage.

|

||||

4. If the feature is related to a paper, please include a link.

|

||||

|

||||

If your issue is well written we're already 80% of the way there by the time you create it.

|

||||

|

||||

## Do you want to implement a new trainer?

|

||||

|

||||

New post-training methods are published on a frequent basis and those which satisfy the following criteria are good candidates to be integrated in TRL:

|

||||

New post-training methods are published frequently and those that satisfy the following criteria are good candidates to be integrated into TRL:

|

||||

|

||||

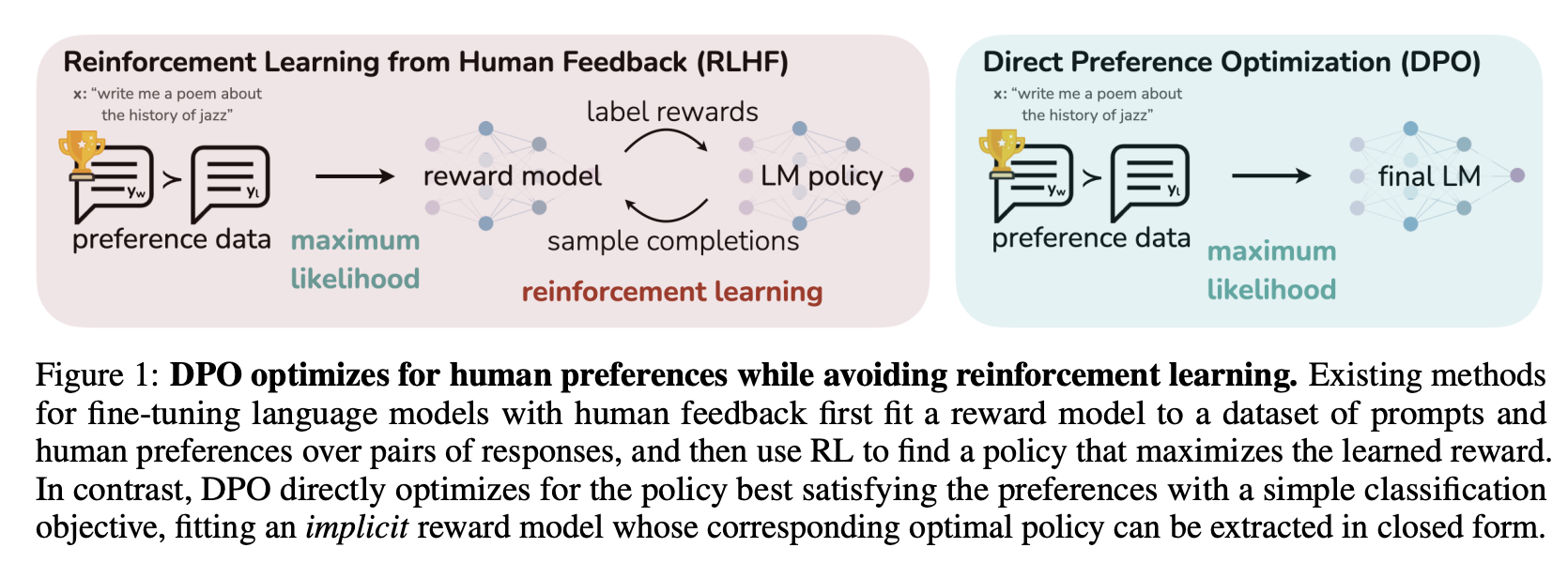

* **Simplicity:** does the new method achieve similar performance as prior methods, but with less complexity? A good example is [Direct Preference Optimization](https://arxiv.org/abs/2305.18290) (DPO), which provided a simpler and compelling alternative to RLHF methods.

|

||||

* **Efficiency:** does the new method provide a significant improvement in training efficiency? A good example is [Odds Ratio Preference Optimization](https://arxiv.org/abs/2403.07691v2), which utilises a similar objective as DPO, but requires half the GPU VRAM.

|

||||

* **Simplicity:** Does the new method achieve similar performance as prior methods, but with less complexity? A good example is Direct Preference Optimization (DPO) [[Rafailov et al, 2023]](https://huggingface.co/papers/2305.18290), which provided a simpler and compelling alternative to RLHF methods.

|

||||

* **Efficiency:** Does the new method provide a significant improvement in training efficiency? A good example is Odds Ratio Preference Optimization (ORPO) [[Hong et al, 2023]](https://huggingface.co/papers/2403.07691), which utilizes a similar objective as DPO but requires half the GPU VRAM.

|

||||

|

||||

Methods which only provide incremental improvements at the expense of added complexity or compute costs are unlikely to be included in TRL.

|

||||

Methods that only provide incremental improvements at the expense of added complexity or compute costs are unlikely to be included in TRL.

|

||||

|

||||

If you want to implement a trainer for a new post-training method, first open an issue and provide the following information:

|

||||

|

||||

@@ -102,7 +102,7 @@ Based on the community and maintainer feedback, the next step will be to impleme

|

||||

|

||||

## Do you want to add documentation?

|

||||

|

||||

We're always looking for improvements to the documentation that make it more clear and accurate. Please let us know how the documentation can be improved, such as typos, dead links and any missing, unclear or inaccurate content.. We'll be happy to make the changes or help you make a contribution if you're interested!

|

||||

We're always looking for improvements to the documentation that make it more clear and accurate. Please let us know how the documentation can be improved, such as typos, dead links, and any missing, unclear, or inaccurate content... We'll be happy to make the changes or help you contribute if you're interested!

|

||||

|

||||

## Submitting a pull request (PR)

|

||||

|

||||

@@ -133,7 +133,7 @@ Follow these steps to start contributing:

|

||||

|

||||

3. Create a new branch to hold your development changes, and do this for every new PR you work on.

|

||||

|

||||

Start by synchronizing your `main` branch with the `upstream/main` branch (ore details in the [GitHub Docs](https://docs.github.com/en/github/collaborating-with-issues-and-pull-requests/syncing-a-fork)):

|

||||

Start by synchronizing your `main` branch with the `upstream/main` branch (more details in the [GitHub Docs](https://docs.github.com/en/github/collaborating-with-issues-and-pull-requests/syncing-a-fork)):

|

||||

|

||||

```bash

|

||||

$ git checkout main

|

||||

@@ -180,18 +180,21 @@ Follow these steps to start contributing:

|

||||

$ make test

|

||||

```

|

||||

|

||||

TRL relies on `ruff` to format its source code

|

||||

consistently. After you make changes, apply automatic style corrections and code verifications

|

||||

that can't be automated in one go with:

|

||||

TRL relies on `ruff` for maintaining consistent code formatting across its source files. Before submitting any PR, you should apply automatic style corrections and run code verification checks.

|

||||

|

||||

This target is also optimized to only work with files modified by the PR you're working on.

|

||||

We provide a `precommit` target in the `Makefile` that simplifies this process by running all required checks and optimizations on only the files modified by your PR.

|

||||

|

||||

If you prefer to run the checks one after the other, the following command apply the

|

||||

style corrections:

|

||||

To apply these checks and corrections in one step, use:

|

||||

|

||||

```bash

|

||||

$ make precommit

|

||||

```

|

||||

```bash

|

||||

$ make precommit

|

||||

```

|

||||

|

||||

This command runs the following:

|

||||

- Executes `pre-commit` hooks to automatically fix style issues with `ruff` and other tools.

|

||||

- Runs additional scripts such as adding copyright information.

|

||||

|

||||

If you prefer to apply the style corrections separately or review them individually, the `pre-commit` hook will handle the formatting for the files in question.

|

||||

|

||||

Once you're happy with your changes, add changed files using `git add` and

|

||||

make a commit with `git commit` to record your changes locally:

|

||||

@@ -221,10 +224,7 @@ Follow these steps to start contributing:

|

||||

webpage of your fork on GitHub. Click on 'Pull request' to send your changes

|

||||

to the project maintainers for review.

|

||||

|

||||

7. It's ok if maintainers ask you for changes. It happens to core contributors

|

||||

too! So everyone can see the changes in the Pull request, work in your local

|

||||

branch and push the changes to your fork. They will automatically appear in

|

||||

the pull request.

|

||||

7. It's ok if maintainers ask you for changes. It happens to core contributors too! To ensure everyone can review your changes in the pull request, work on your local branch and push the updates to your fork. They will automatically appear in the pull request.

|

||||

|

||||

|

||||

### Checklist

|

||||

@@ -245,14 +245,41 @@ Follow these steps to start contributing:

|

||||

An extensive test suite is included to test the library behavior and several examples. Library tests can be found in

|

||||

the [tests folder](https://github.com/huggingface/trl/tree/main/tests).

|

||||

|

||||

We use `pytest` in order to run the tests. From the root of the

|

||||

repository, here's how to run tests with `pytest` for the library:

|

||||

We use `pytest` to run the tests. From the root of the

|

||||

repository here's how to run tests with `pytest` for the library:

|

||||

|

||||

```bash

|

||||

$ python -m pytest -sv ./tests

|

||||

```

|

||||

|

||||

In fact, that's how `make test` is implemented (sans the `pip install` line)!

|

||||

That's how `make test` is implemented (without the `pip install` line)!

|

||||

|

||||

You can specify a smaller set of tests in order to test only the feature

|

||||

You can specify a smaller set of tests to test only the feature

|

||||

you're working on.

|

||||

|

||||

### Deprecation and Backward Compatibility

|

||||

|

||||

Our approach to deprecation and backward compatibility is flexible and based on the feature’s usage and impact. Each deprecation is carefully evaluated, aiming to balance innovation with user needs.

|

||||

|

||||

When a feature or component is marked for deprecation, its use will emit a warning message. This warning will include:

|

||||

|

||||

- **Transition Guidance**: Instructions on how to migrate to the alternative solution or replacement.

|

||||

- **Removal Version**: The target version when the feature will be removed, providing users with a clear timeframe to transition.

|

||||

|

||||

Example:

|

||||

|

||||

```python

|

||||

warnings.warn(

|

||||

"The `Trainer.foo` method is deprecated and will be removed in version 0.14.0. "

|

||||

"Please use the `Trainer.bar` class instead.",

|

||||

FutureWarning,

|

||||

)

|

||||

```

|

||||

|

||||

The deprecation and removal schedule is based on each feature's usage and impact, with examples at two extremes:

|

||||

|

||||

- **Experimental or Low-Use Features**: For a feature that is experimental or has limited usage, backward compatibility may not be maintained between releases. Users should therefore anticipate potential breaking changes from one version to the next.

|

||||

|

||||

- **Widely-Used Components**: For a feature with high usage, we aim for a more gradual transition period of approximately **5 months**, generally scheduling deprecation around **5 minor releases** after the initial warning.

|

||||

|

||||

These examples represent the two ends of a continuum. The specific timeline for each feature will be determined individually, balancing innovation with user stability needs.
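The warning pattern described above can be sketched end to end as follows. This is a minimal illustration, not TRL code: the `Trainer` class and its `foo` and `bar` methods are hypothetical stand-ins taken from the example message, and `stacklevel=2` is an added assumption so that the warning points at the caller rather than at the deprecated method itself.

```python
import warnings


class Trainer:
    """Hypothetical class, used only to illustrate the deprecation pattern."""

    def bar(self, x):
        # Replacement API that users are asked to migrate to.
        return x * 2

    def foo(self, x):
        # Deprecated entry point: emit the warning, then delegate to the replacement
        # so that existing callers keep working until the removal version.
        warnings.warn(
            "The `Trainer.foo` method is deprecated and will be removed in version 0.14.0. "
            "Please use the `Trainer.bar` method instead.",
            FutureWarning,
            stacklevel=2,  # assumption: attribute the warning to the caller's line
        )
        return self.bar(x)


# Record warnings to confirm that the deprecated call still works and that it warns.
with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")
    result = Trainer().foo(21)
```

Delegating from the deprecated method to its replacement keeps behavior identical during the transition period, so the only user-visible change before removal is the warning.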

|

||||

|

||||

@@ -2,4 +2,5 @@ include settings.ini

|

||||

include LICENSE

|

||||

include CONTRIBUTING.md

|

||||

include README.md

|

||||

recursive-exclude * __pycache__

|

||||

recursive-exclude * __pycache__

|

||||

include trl/templates/*.md

|

||||

8

Makefile

@@ -1,4 +1,4 @@

|

||||

.PHONY: test precommit benchmark_core benchmark_aux common_tests slow_tests test_examples tests_gpu

|

||||

.PHONY: test precommit common_tests slow_tests test_examples tests_gpu

|

||||

|

||||

check_dirs := examples tests trl

|

||||

|

||||

@@ -18,12 +18,6 @@ precommit:

|

||||

pre-commit run --all-files

|

||||

python scripts/add_copyrights.py

|

||||

|

||||

benchmark_core:

|

||||

bash ./benchmark/benchmark_core.sh

|

||||

|

||||

benchmark_aux:

|

||||

bash ./benchmark/benchmark_aux.sh

|

||||

|

||||

tests_gpu:

|

||||

python -m pytest tests/test_* $(if $(IS_GITHUB_CI),--report-log "common_tests.log",)

|

||||

|

||||

|

||||

217

README.md

@@ -1,207 +1,199 @@

|

||||

# TRL - Transformer Reinforcement Learning

|

||||

|

||||

<div style="text-align: center">

|

||||

<img src="https://huggingface.co/datasets/trl-internal-testing/example-images/resolve/main/images/trl_banner_dark.png">

|

||||

<img src="https://huggingface.co/datasets/trl-internal-testing/example-images/resolve/main/images/trl_banner_dark.png" alt="TRL Banner">

|

||||

</div>

|

||||

|

||||

# TRL - Transformer Reinforcement Learning

|

||||

> Full stack library to fine-tune and align large language models.

|

||||

<hr> <br>

|

||||

|

||||

<h3 align="center">

|

||||

<p>A comprehensive library to post-train foundation models</p>

|

||||

</h3>

|

||||

|

||||

<p align="center">

|

||||

<a href="https://github.com/huggingface/trl/blob/main/LICENSE">

|

||||

<img alt="License" src="https://img.shields.io/github/license/huggingface/trl.svg?color=blue">

|

||||

</a>

|

||||

<a href="https://huggingface.co/docs/trl/index">

|

||||

<img alt="Documentation" src="https://img.shields.io/website/http/huggingface.co/docs/trl/index.svg?down_color=red&down_message=offline&up_message=online">

|

||||

</a>

|

||||

<a href="https://github.com/huggingface/trl/releases">

|

||||

<img alt="GitHub release" src="https://img.shields.io/github/release/huggingface/trl.svg">

|

||||

</a>

|

||||

<a href="https://github.com/huggingface/trl/blob/main/LICENSE"><img alt="License" src="https://img.shields.io/github/license/huggingface/trl.svg?color=blue"></a>

|

||||

<a href="https://huggingface.co/docs/trl/index"><img alt="Documentation" src="https://img.shields.io/website/http/huggingface.co/docs/trl/index.svg?down_color=red&down_message=offline&up_color=blue&up_message=online"></a>

|

||||

<a href="https://github.com/huggingface/trl/releases"><img alt="GitHub release" src="https://img.shields.io/github/release/huggingface/trl.svg"></a>

|

||||

</p>

|

||||

|

||||

## Overview

|

||||

|

||||

## What is it?

|

||||

|

||||

The `trl` library is a full stack tool to fine-tune and align transformer language and diffusion models using methods such as Supervised Fine-tuning step (SFT), Reward Modeling (RM) and the Proximal Policy Optimization (PPO) as well as Direct Preference Optimization (DPO).

|

||||

|

||||

The library is built on top of the [`transformers`](https://github.com/huggingface/transformers) library and thus allows to use any model architecture available there.

|

||||

|

||||

TRL is a cutting-edge library designed for post-training foundation models using advanced techniques like Supervised Fine-Tuning (SFT), Proximal Policy Optimization (PPO), and Direct Preference Optimization (DPO). Built on top of the [🤗 Transformers](https://github.com/huggingface/transformers) ecosystem, TRL supports a variety of model architectures and modalities, and can be scaled-up across various hardware setups.

|

||||

|

||||

## Highlights

|

||||

|

||||

- **`Efficient and scalable`**:

|

||||

- [`accelerate`](https://github.com/huggingface/accelerate) is the backbone of `trl` which allows to scale model training from a single GPU to a large scale multi-node cluster with methods such as DDP and DeepSpeed.

|

||||

- [`PEFT`](https://github.com/huggingface/peft) is fully integrated and allows to train even the largest models on modest hardware with quantisation and methods such as LoRA or QLoRA.

|

||||

- [`unsloth`](https://github.com/unslothai/unsloth) is also integrated and allows to significantly speed up training with dedicated kernels.

|

||||

- **`CLI`**: With the [CLI](https://huggingface.co/docs/trl/clis) you can fine-tune and chat with LLMs without writing any code using a single command and a flexible config system.

|

||||

- **`Trainers`**: The Trainer classes are an abstraction to apply many fine-tuning methods with ease such as the [`SFTTrainer`](https://huggingface.co/docs/trl/sft_trainer), [`DPOTrainer`](https://huggingface.co/docs/trl/trainer#trl.DPOTrainer), [`RewardTrainer`](https://huggingface.co/docs/trl/reward_trainer), [`PPOTrainer`](https://huggingface.co/docs/trl/trainer#trl.PPOTrainer), [`CPOTrainer`](https://huggingface.co/docs/trl/trainer#trl.CPOTrainer), and [`ORPOTrainer`](https://huggingface.co/docs/trl/trainer#trl.ORPOTrainer).

|

||||

- **`AutoModels`**: The [`AutoModelForCausalLMWithValueHead`](https://huggingface.co/docs/trl/models#trl.AutoModelForCausalLMWithValueHead) & [`AutoModelForSeq2SeqLMWithValueHead`](https://huggingface.co/docs/trl/models#trl.AutoModelForSeq2SeqLMWithValueHead) classes add an additional value head to the model which allows to train them with RL algorithms such as PPO.

|

||||

- **`Examples`**: Train GPT2 to generate positive movie reviews with a BERT sentiment classifier, full RLHF using adapters only, train GPT-j to be less toxic, [StackLlama example](https://huggingface.co/blog/stackllama), etc. following the [examples](https://github.com/huggingface/trl/tree/main/examples).

|

||||

- **Efficient and scalable**:

|

||||

- Leverages [🤗 Accelerate](https://github.com/huggingface/accelerate) to scale from single GPU to multi-node clusters using methods like DDP and DeepSpeed.

|

||||

- Full integration with [`PEFT`](https://github.com/huggingface/peft) enables training on large models with modest hardware via quantization and LoRA/QLoRA.

|

||||

- Integrates [Unsloth](https://github.com/unslothai/unsloth) for accelerating training using optimized kernels.

|

||||

|

||||

- **Command Line Interface (CLI)**: A simple interface lets you fine-tune and interact with models without needing to write code.

|

||||

|

||||

- **Trainers**: Various fine-tuning methods are easily accessible via trainers like [`SFTTrainer`](https://huggingface.co/docs/trl/sft_trainer), [`DPOTrainer`](https://huggingface.co/docs/trl/dpo_trainer), [`RewardTrainer`](https://huggingface.co/docs/trl/reward_trainer), [`ORPOTrainer`](https://huggingface.co/docs/trl/orpo_trainer) and more.

|

||||

|

||||

- **AutoModels**: Use pre-defined model classes like [`AutoModelForCausalLMWithValueHead`](https://huggingface.co/docs/trl/models#trl.AutoModelForCausalLMWithValueHead) to simplify reinforcement learning (RL) with LLMs.

|

||||

|

||||

## Installation

|

||||

|

||||

### Python package

|

||||

Install the library with `pip`:

|

||||

### Python Package

|

||||

|

||||

Install the library using `pip`:

|

||||

|

||||

```bash

|

||||

pip install trl

|

||||

```

|

||||

|

||||

### From source

|

||||

If you want to use the latest features before an official release you can install from source:

|

||||

|

||||

If you want to use the latest features before an official release, you can install TRL from source:

|

||||

|

||||

```bash

|

||||

pip install git+https://github.com/huggingface/trl.git

|

||||

```

|

||||

|

||||

### Repository

|

||||

|

||||

If you want to use the examples you can clone the repository with the following command:

|

||||

|

||||

```bash

|

||||

git clone https://github.com/huggingface/trl.git

|

||||

```

|

||||

|

||||

## Command Line Interface (CLI)

|

||||

|

||||

You can use TRL Command Line Interface (CLI) to quickly get started with Supervised Fine-tuning (SFT), Direct Preference Optimization (DPO) and test your aligned model with the chat CLI:

|

||||

You can use the TRL Command Line Interface (CLI) to quickly get started with Supervised Fine-tuning (SFT) and Direct Preference Optimization (DPO), or vibe check your model with the chat CLI:

|

||||

|

||||

**SFT:**

|

||||

|

||||

```bash

|

||||

trl sft --model_name_or_path facebook/opt-125m --dataset_name stanfordnlp/imdb --output_dir opt-sft-imdb

|

||||

trl sft --model_name_or_path Qwen/Qwen2.5-0.5B \

|

||||

--dataset_name trl-lib/Capybara \

|

||||

--output_dir Qwen2.5-0.5B-SFT

|

||||

```

|

||||

|

||||

**DPO:**

|

||||

|

||||

```bash

|

||||

trl dpo --model_name_or_path facebook/opt-125m --dataset_name trl-internal-testing/hh-rlhf-helpful-base-trl-style --output_dir opt-sft-hh-rlhf

|

||||

trl dpo --model_name_or_path Qwen/Qwen2.5-0.5B-Instruct \

|

||||

--dataset_name argilla/Capybara-Preferences \

|

||||

--output_dir Qwen2.5-0.5B-DPO

|

||||

```

|

||||

|

||||

**Chat:**

|

||||

|

||||

```bash

|

||||

trl chat --model_name_or_path Qwen/Qwen1.5-0.5B-Chat

|

||||

trl chat --model_name_or_path Qwen/Qwen2.5-0.5B-Instruct

|

||||

```

|

||||

|

||||

Read more about CLI in the [relevant documentation section](https://huggingface.co/docs/trl/main/en/clis) or use `--help` for more details.
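
When the CLI has to be driven from a script, the same invocation can be assembled in Python. The sketch below only builds and prints the command string; it does not run any training. The helper name `build_trl_command` is hypothetical, and the flags are the ones shown in the SFT example above.

```python
import shlex


def build_trl_command(subcommand: str, **options: str) -> str:
    """Assemble a `trl <subcommand>` command line (hypothetical helper)."""
    parts = ["trl", subcommand]
    for name, value in options.items():
        parts += [f"--{name}", str(value)]
    # shlex.join quotes each argument so the result can be pasted into a shell.
    return shlex.join(parts)


command = build_trl_command(
    "sft",
    model_name_or_path="Qwen/Qwen2.5-0.5B",
    dataset_name="trl-lib/Capybara",
    output_dir="Qwen2.5-0.5B-SFT",
)
print(command)
```

The printed string can be passed to `subprocess.run(shlex.split(command))` once the arguments look right.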

|

||||

|

||||

## How to use

|

||||

|

||||

For more flexibility and control over the training, you can use the dedicated trainer classes to fine-tune the model in Python.

|

||||

For more flexibility and control over training, TRL provides dedicated trainer classes to post-train language models or PEFT adapters on a custom dataset. Each trainer in TRL is a light wrapper around the 🤗 Transformers trainer and natively supports distributed training methods like DDP, DeepSpeed ZeRO, and FSDP.

|

||||

|

||||

### `SFTTrainer`

|

||||

|

||||

This is a basic example of how to use the `SFTTrainer` from the library. The `SFTTrainer` is a light wrapper around the `transformers` Trainer to easily fine-tune language models or adapters on a custom dataset.

|

||||

Here is a basic example of how to use the `SFTTrainer`:

|

||||

|

||||

```python

|

||||

# imports

|

||||

from trl import SFTConfig, SFTTrainer

|

||||

from datasets import load_dataset

|

||||

from trl import SFTTrainer

|

||||

|

||||

# get dataset

|

||||

dataset = load_dataset("stanfordnlp/imdb", split="train")

|

||||

dataset = load_dataset("trl-lib/Capybara", split="train")

|

||||

|

||||

# get trainer

|

||||

training_args = SFTConfig(output_dir="Qwen/Qwen2.5-0.5B-SFT")

|

||||

trainer = SFTTrainer(

|

||||

"facebook/opt-350m",

|

||||

args=training_args,

|

||||

model="Qwen/Qwen2.5-0.5B",

|

||||

train_dataset=dataset,

|

||||

dataset_text_field="text",

|

||||

max_seq_length=512,

|

||||

)

|

||||

|

||||

# train

|

||||

trainer.train()

|

||||

```

|

||||

|

||||

### `RewardTrainer`

|

||||

|

||||

This is a basic example of how to use the `RewardTrainer` from the library. The `RewardTrainer` is a wrapper around the `transformers` Trainer to easily fine-tune reward models or adapters on a custom preference dataset.

|

||||

Here is a basic example of how to use the `RewardTrainer`:

|

||||

|

||||

```python

|

||||

# imports

|

||||

from trl import RewardConfig, RewardTrainer

|

||||

from datasets import load_dataset

|

||||

from transformers import AutoModelForSequenceClassification, AutoTokenizer

|

||||

from trl import RewardTrainer

|

||||

|

||||

# load model and dataset - dataset needs to be in a specific format

|

||||

model = AutoModelForSequenceClassification.from_pretrained("gpt2", num_labels=1)

|

||||

tokenizer = AutoTokenizer.from_pretrained("gpt2")

|

||||

tokenizer = AutoTokenizer.from_pretrained("Qwen/Qwen2.5-0.5B-Instruct")

|

||||

model = AutoModelForSequenceClassification.from_pretrained(

|

||||

"Qwen/Qwen2.5-0.5B-Instruct", num_labels=1

|

||||

)

|

||||

model.config.pad_token_id = tokenizer.pad_token_id

|

||||

|

||||

...

|

||||

dataset = load_dataset("trl-lib/ultrafeedback_binarized", split="train")

|

||||

|

||||

# load trainer

|

||||

training_args = RewardConfig(output_dir="Qwen2.5-0.5B-Reward", per_device_train_batch_size=2)

|

||||

trainer = RewardTrainer(

|

||||

args=training_args,

|

||||

model=model,

|

||||

tokenizer=tokenizer,

|

||||

processing_class=tokenizer,

|

||||

train_dataset=dataset,

|

||||

)

|

||||

|

||||

# train

|

||||

trainer.train()

|

||||

```
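
As a rough illustration of what a reward model learns from one preference pair, the sketch below computes a pairwise logistic (Bradley-Terry) loss in plain Python. It assumes the general form of the objective and is not a copy of the `RewardTrainer` internals; `pairwise_reward_loss` is a hypothetical helper.

```python
import math


def pairwise_reward_loss(chosen_reward: float, rejected_reward: float) -> float:
    """Return -log(sigmoid(r_chosen - r_rejected)) for one preference pair."""
    margin = chosen_reward - rejected_reward
    # Both branches equal -log(sigmoid(margin)); the split avoids overflow in exp().
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# The loss shrinks as the chosen response is scored further above the rejected one.
print(pairwise_reward_loss(2.0, 0.0))
print(pairwise_reward_loss(0.0, 2.0))
```

With equal scores the loss is `log(2)`, which is the value an untrained scalar head should hover around.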

|

||||

|

||||

### `PPOTrainer`

|

||||

### `RLOOTrainer`

|

||||

|

||||

This is a basic example of how to use the `PPOTrainer` from the library. Based on a query the language model creates a response which is then evaluated. The evaluation could be a human in the loop or another model's output.

|

||||

`RLOOTrainer` implements a [REINFORCE-style optimization](https://huggingface.co/papers/2402.14740) for RLHF that is more performant and memory-efficient than PPO. Here is a basic example of how to use the `RLOOTrainer`:

|

||||

|

||||

```python

|

||||

# imports

|

||||

import torch

|

||||

from transformers import AutoTokenizer

|

||||

from trl import PPOTrainer, PPOConfig, AutoModelForCausalLMWithValueHead, create_reference_model

|

||||

from trl.core import respond_to_batch

|

||||

from trl import RLOOConfig, RLOOTrainer, apply_chat_template

|

||||

from datasets import load_dataset

|

||||

from transformers import (

|

||||

AutoModelForCausalLM,

|

||||

AutoModelForSequenceClassification,

|

||||

AutoTokenizer,

|

||||

)

|

||||

|

||||

# get models

|

||||

model = AutoModelForCausalLMWithValueHead.from_pretrained('gpt2')

|

||||

ref_model = create_reference_model(model)

|

||||

tokenizer = AutoTokenizer.from_pretrained("Qwen/Qwen2.5-0.5B-Instruct")

|

||||

reward_model = AutoModelForSequenceClassification.from_pretrained(

|

||||

"Qwen/Qwen2.5-0.5B-Instruct", num_labels=1

|

||||

)

|

||||

ref_policy = AutoModelForCausalLM.from_pretrained("Qwen/Qwen2.5-0.5B-Instruct")

|

||||

policy = AutoModelForCausalLM.from_pretrained("Qwen/Qwen2.5-0.5B-Instruct")

|

||||

|

||||

tokenizer = AutoTokenizer.from_pretrained('gpt2')

|

||||

tokenizer.pad_token = tokenizer.eos_token

|

||||

dataset = load_dataset("trl-lib/ultrafeedback-prompt")

|

||||

dataset = dataset.map(apply_chat_template, fn_kwargs={"tokenizer": tokenizer})

|

||||

dataset = dataset.map(lambda x: tokenizer(x["prompt"]), remove_columns="prompt")

|

||||

|

||||

# initialize trainer

|

||||

ppo_config = PPOConfig(batch_size=1, mini_batch_size=1)

|

||||

|

||||

# encode a query

|

||||

query_txt = "This morning I went to the "

|

||||

query_tensor = tokenizer.encode(query_txt, return_tensors="pt")

|

||||

|

||||

# get model response

|

||||

response_tensor = respond_to_batch(model, query_tensor)

|

||||

|

||||

# create a ppo trainer

|

||||

ppo_trainer = PPOTrainer(ppo_config, model, ref_model, tokenizer)

|

||||

|

||||

# define a reward for response

|

||||

# (this could be any reward such as human feedback or output from another model)

|

||||

reward = [torch.tensor(1.0)]

|

||||

|

||||

# train model for one step with ppo

|

||||

train_stats = ppo_trainer.step([query_tensor[0]], [response_tensor[0]], reward)

|

||||

training_args = RLOOConfig(output_dir="Qwen2.5-0.5B-RL")

|

||||

trainer = RLOOTrainer(

|

||||

config=training_args,

|

||||

processing_class=tokenizer,

|

||||

policy=policy,

|

||||

ref_policy=ref_policy,

|

||||

reward_model=reward_model,

|

||||

train_dataset=dataset["train"],

|

||||

eval_dataset=dataset["test"],

|

||||

)

|

||||

trainer.train()

|

||||

```
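
The "leave-one-out" part of RLOO can be sketched without any model: for several completions of the same prompt, each completion's baseline is the mean reward of the others. This is a minimal illustration of the idea in the linked paper, not the `RLOOTrainer` code; `rloo_advantages` is a hypothetical helper and the rewards are made-up numbers.

```python
from typing import List


def rloo_advantages(rewards: List[float]) -> List[float]:
    """Leave-one-out advantages for k completions of the same prompt."""
    k = len(rewards)
    if k < 2:
        raise ValueError("need at least two completions per prompt")
    total = sum(rewards)
    # Baseline for sample i is the mean reward of the other k - 1 samples.
    return [r - (total - r) / (k - 1) for r in rewards]


advantages = rloo_advantages([1.0, 0.0, 2.0, 1.0])
print(advantages)
```

Because the baseline comes from sibling samples, no separate value model is needed, which is where the memory savings over PPO come from.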

|

||||

|

||||

### `DPOTrainer`

|

||||

|

||||

`DPOTrainer` is a trainer that uses [Direct Preference Optimization algorithm](https://huggingface.co/papers/2305.18290). This is a basic example of how to use the `DPOTrainer` from the library. The `DPOTrainer` is a wrapper around the `transformers` Trainer to easily fine-tune reward models or adapters on a custom preference dataset.

|

||||

`DPOTrainer` implements the popular [Direct Preference Optimization (DPO) algorithm](https://huggingface.co/papers/2305.18290) that was used to post-train Llama 3 and many other models. Here is a basic example of how to use the `DPOTrainer`:

|

||||

|

||||

```python

|

||||

# imports

|

||||

from datasets import load_dataset

|

||||

from transformers import AutoModelForCausalLM, AutoTokenizer

|

||||

from trl import DPOTrainer

|

||||

from trl import DPOConfig, DPOTrainer

|

||||

|

||||

# load model and dataset - dataset needs to be in a specific format

|

||||

model = AutoModelForCausalLM.from_pretrained("gpt2")

|

||||

tokenizer = AutoTokenizer.from_pretrained("gpt2")

|

||||

|

||||

...

|

||||

|

||||

# load trainer

|

||||

trainer = DPOTrainer(

|

||||

model=model,

|

||||

tokenizer=tokenizer,

|

||||

train_dataset=dataset,

|

||||

)

|

||||

|

||||

# train

|

||||

model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen2.5-0.5B-Instruct")

|

||||

tokenizer = AutoTokenizer.from_pretrained("Qwen/Qwen2.5-0.5B-Instruct")

|

||||

dataset = load_dataset("trl-lib/ultrafeedback_binarized", split="train")

|

||||

training_args = DPOConfig(output_dir="Qwen2.5-0.5B-DPO")

|

||||

trainer = DPOTrainer(model=model, args=training_args, train_dataset=dataset, processing_class=tokenizer)

|

||||

trainer.train()

|

||||

```
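
For intuition about the objective behind `DPOTrainer`, the sketch below evaluates the DPO loss from the linked paper for a single preference pair, given sequence log-probabilities under the policy and the reference model. The function name, the argument names, and the value `beta=0.1` are illustrative assumptions, not TRL's API.

```python
import math


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # illustrative value, not a recommendation
) -> float:
    """DPO loss for one (chosen, rejected) pair of sequence log-probabilities."""
    # Implicit rewards are beta-scaled log-ratios between policy and reference.
    chosen_reward = beta * (policy_chosen_logp - ref_chosen_logp)
    rejected_reward = beta * (policy_rejected_logp - ref_rejected_logp)
    margin = chosen_reward - rejected_reward
    # -log(sigmoid(margin)), written to avoid overflow for large |margin|.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Policy identical to the reference: the margin is zero and the loss is log(2).
print(dpo_loss(-12.0, -15.0, -12.0, -15.0))
```

The loss drops below `log(2)` only when the policy raises the chosen response relative to the rejected one by more than the reference does.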

|

||||

|

||||

## Development

|

||||

|

||||

If you want to contribute to `trl` or customizing it to your needs make sure to read the [contribution guide](https://github.com/huggingface/trl/blob/main/CONTRIBUTING.md) and make sure you make a dev install:

|

||||

If you want to contribute to `trl` or customize it to your needs, make sure to read the [contribution guide](https://github.com/huggingface/trl/blob/main/CONTRIBUTING.md) and make a dev install:

|

||||

|

||||

```bash

|

||||

git clone https://github.com/huggingface/trl.git

|

||||

@@ -209,20 +201,11 @@ cd trl/

|

||||

make dev

|

||||

```

|

||||

|

||||

## References

|

||||

|

||||

### Proximal Policy Optimisation

|

||||

The PPO implementation largely follows the structure introduced in the paper **"Fine-Tuning Language Models from Human Preferences"** by D. Ziegler et al. \[[paper](https://huggingface.co/papers/1909.08593), [code](https://github.com/openai/lm-human-preferences)].

|

||||

|

||||

### Direct Preference Optimization

|

||||

DPO is based on the original implementation of **"Direct Preference Optimization: Your Language Model is Secretly a Reward Model"** by E. Mitchell et al. \[[paper](https://huggingface.co/papers/2305.18290), [code](https://github.com/eric-mitchell/direct-preference-optimization)]

|

||||

|

||||

|

||||

## Citation

|

||||

|

||||

```bibtex

|

||||

@misc{vonwerra2022trl,

|

||||

author = {Leandro von Werra and Younes Belkada and Lewis Tunstall and Edward Beeching and Tristan Thrush and Nathan Lambert and Shengyi Huang},

|

||||

author = {Leandro von Werra and Younes Belkada and Lewis Tunstall and Edward Beeching and Tristan Thrush and Nathan Lambert and Shengyi Huang and Kashif Rasul and Quentin Gallouédec},

|

||||

title = {TRL: Transformer Reinforcement Learning},

|

||||

year = {2020},

|

||||

publisher = {GitHub},

|

||||

@@ -230,3 +213,7 @@ DPO is based on the original implementation of **"Direct Preference Optimization

|

||||

howpublished = {\url{https://github.com/huggingface/trl}}

|

||||

}

|

||||

```

|

||||

|

||||

## License

|

||||

|

||||

This repository's source code is available under the [Apache-2.0 License](LICENSE).

|

||||

|

||||

@@ -1,164 +0,0 @@

|

||||

# Copyright 2024 The HuggingFace Inc. team. All rights reserved.

|

||||

#

|

||||

# Licensed under the Apache License, Version 2.0 (the "License");

|

||||

# you may not use this file except in compliance with the License.

|

||||

# You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

|

||||

import argparse

|

||||

import math

|

||||

import os

|

||||

import shlex

|

||||

import subprocess

|

||||

import uuid

|

||||

from distutils.util import strtobool

|

||||

|

||||

import requests

|

||||

|

||||

|

||||

def parse_args():

|

||||

# fmt: off

|

||||

parser = argparse.ArgumentParser()

|

||||

parser.add_argument("--command", type=str, default="",

|

||||

help="the command to run")

|

||||

parser.add_argument("--num-seeds", type=int, default=3,

|

||||

help="the number of random seeds")

|

||||

parser.add_argument("--start-seed", type=int, default=1,

|

||||

help="the number of the starting seed")

|

||||

parser.add_argument("--workers", type=int, default=0,

|

||||

help="the number of workers to run benchmark experiments")

|

||||

parser.add_argument("--auto-tag", type=lambda x: bool(strtobool(x)), default=True, nargs="?", const=True,

|

||||

help="if toggled, the runs will be tagged with git tags, commit, and pull request number if possible")

|

||||

parser.add_argument("--slurm-template-path", type=str, default=None,

|

||||

help="the path to the slurm template file (see docs for more details)")

|

||||

parser.add_argument("--slurm-gpus-per-task", type=int, default=1,

|

||||

help="the number of gpus per task to use for slurm jobs")

|

||||

parser.add_argument("--slurm-total-cpus", type=int, default=50,

|

||||

help="the total number of cpus to use for slurm jobs")

|

||||

parser.add_argument("--slurm-ntasks", type=int, default=1,

|

||||

help="the number of tasks to use for slurm jobs")

|

||||

parser.add_argument("--slurm-nodes", type=int, default=None,

|

||||

help="the number of nodes to use for slurm jobs")

|

||||

args = parser.parse_args()

|

||||

# fmt: on

|

||||

return args

|

||||

|

||||

|

||||

def run_experiment(command: str):

|

||||

command_list = shlex.split(command)

|

||||

print(f"running {command}")

|

||||

|

||||

# Use subprocess.PIPE to capture the output

|

||||

fd = subprocess.Popen(command_list, stdout=subprocess.PIPE, stderr=subprocess.PIPE)

|

||||

output, errors = fd.communicate()

|

||||

|

||||

return_code = fd.returncode

|

||||

assert return_code == 0, f"Command failed with error: {errors.decode('utf-8')}"

|

||||

|

||||

# Convert bytes to string and strip leading/trailing whitespaces

|

||||

return output.decode("utf-8").strip()

|

||||

|

||||

|

||||

def autotag() -> str:

|

||||

wandb_tag = ""

|

||||

print("autotag feature is enabled")

|

||||

git_tag = ""

|

||||

try:

|

||||

git_tag = subprocess.check_output(["git", "describe", "--tags"]).decode("ascii").strip()

|

||||

print(f"identified git tag: {git_tag}")

|

||||

except subprocess.CalledProcessError as e:

|

||||

print(e)

|

||||

if len(git_tag) == 0:

|

||||

try:

|

||||

count = int(subprocess.check_output(["git", "rev-list", "--count", "HEAD"]).decode("ascii").strip())

|

||||

hash = subprocess.check_output(["git", "rev-parse", "--short", "HEAD"]).decode("ascii").strip()

|

||||

git_tag = f"no-tag-{count}-g{hash}"

|

||||

print(f"identified git tag: {git_tag}")

|

||||

except subprocess.CalledProcessError as e:

|

||||

print(e)

|

||||

wandb_tag = f"{git_tag}"

|

||||

|

||||

git_commit = subprocess.check_output(["git", "rev-parse", "--verify", "HEAD"]).decode("ascii").strip()

|

||||

try:

|

||||

# try finding the pull request number on github

|

||||

prs = requests.get(f"https://api.github.com/search/issues?q=repo:huggingface/trl+is:pr+{git_commit}")

|

||||

if prs.status_code == 200:

|

||||

prs = prs.json()

|

||||

if len(prs["items"]) > 0:

|

||||

pr = prs["items"][0]

|

||||

pr_number = pr["number"]

|

||||

wandb_tag += f",pr-{pr_number}"

|

||||

print(f"identified github pull request: {pr_number}")

|

||||

except Exception as e:

|

||||

print(e)

|

||||

|

||||

return wandb_tag

|

||||

|

||||

|

||||

if __name__ == "__main__":

|

||||

args = parse_args()

|

||||

if args.auto_tag:

|

||||

existing_wandb_tag = os.environ.get("WANDB_TAGS", "")

|

||||

wandb_tag = autotag()

|

||||

if len(wandb_tag) > 0:

|

||||

if len(existing_wandb_tag) > 0:

|

||||

os.environ["WANDB_TAGS"] = ",".join([existing_wandb_tag, wandb_tag])

|

||||

else:

|

||||

os.environ["WANDB_TAGS"] = wandb_tag

|

||||

print("WANDB_TAGS: ", os.environ.get("WANDB_TAGS", ""))

|

||||

commands = []

|

||||

for seed in range(0, args.num_seeds):

|

||||

commands += [" ".join([args.command, "--seed", str(args.start_seed + seed)])]

|

||||

|

||||

print("======= commands to run:")

|

||||

for command in commands:

|

||||

print(command)

|

||||

|

||||

if args.workers > 0 and args.slurm_template_path is None:

|

||||

from concurrent.futures import ThreadPoolExecutor

|

||||

|

||||

executor = ThreadPoolExecutor(max_workers=args.workers, thread_name_prefix="cleanrl-benchmark-worker-")

|

||||

for command in commands:

|

||||

executor.submit(run_experiment, command)

|

||||

executor.shutdown(wait=True)

|

||||

else:

|

||||

print("not running the experiments because --workers is set to 0; just printing the commands to run")

|

||||

|

||||

# SLURM logic

|

||||

if args.slurm_template_path is not None:

|

||||

if not os.path.exists("slurm"):

|

||||

os.makedirs("slurm")

|

||||

if not os.path.exists("slurm/logs"):

|

||||

os.makedirs("slurm/logs")

|

||||

print("======= slurm commands to run:")

|

||||

with open(args.slurm_template_path) as f:

|

||||

slurm_template = f.read()

|

||||

slurm_template = slurm_template.replace("{{array}}", f"0-{len(commands) - 1}%{args.workers}")

|

||||

slurm_template = slurm_template.replace(

|

||||

"{{seeds}}", f"({' '.join([str(args.start_seed + int(seed)) for seed in range(args.num_seeds)])})"

|

||||

)

|

||||

slurm_template = slurm_template.replace("{{len_seeds}}", f"{args.num_seeds}")

|

||||

slurm_template = slurm_template.replace("{{command}}", args.command)

|

||||

slurm_template = slurm_template.replace("{{gpus_per_task}}", f"{args.slurm_gpus_per_task}")

|

||||

total_gpus = args.slurm_gpus_per_task * args.slurm_ntasks

|

||||

slurm_cpus_per_gpu = math.ceil(args.slurm_total_cpus / total_gpus)

|

||||

slurm_template = slurm_template.replace("{{cpus_per_gpu}}", f"{slurm_cpus_per_gpu}")

|

||||

slurm_template = slurm_template.replace("{{ntasks}}", f"{args.slurm_ntasks}")

|

||||

if args.slurm_nodes is not None:

|

||||

slurm_template = slurm_template.replace("{{nodes}}", f"#SBATCH --nodes={args.slurm_nodes}")

|

||||

else:

|

||||

slurm_template = slurm_template.replace("{{nodes}}", "")

|

||||

filename = str(uuid.uuid4())

|

||||

open(os.path.join("slurm", f"{filename}.slurm"), "w").write(slurm_template)

|

||||

slurm_path = os.path.join("slurm", f"{filename}.slurm")

|

||||

print(f"saving command in {slurm_path}")

|

||||

if args.workers > 0:

|

||||

job_id = run_experiment(f"sbatch --parsable {slurm_path}")

|

||||

print(f"Job ID: {job_id}")

|

||||

@@ -1,26 +0,0 @@

|

||||

export WANDB_ENTITY=huggingface

|

||||

export WANDB_PROJECT=trl

|

||||

bash $BENCHMARK_SCRIPT > output.txt

|

||||

|

||||

# Extract Job IDs into an array

|

||||

job_ids=($(grep "Job ID:" output.txt | awk '{print $3}'))

|

||||

|

||||

# Extract WANDB_TAGS into an array

|

||||

WANDB_TAGS=($(grep "WANDB_TAGS:" output.txt | awk '{print $2}'))

|

||||

WANDB_TAGS=($(echo $WANDB_TAGS | tr "," "\n"))

|

||||

|

||||

# Print to verify

|

||||

echo "Job IDs: ${job_ids[@]}"

|

||||

echo "WANDB_TAGS: ${WANDB_TAGS[@]}"

|

||||

|

||||

TAGS_STRING="?tag=${WANDB_TAGS[0]}"

|

||||

FOLDER_STRING="${WANDB_TAGS[0]}"

|

||||

for tag in "${WANDB_TAGS[@]:1}"; do

|

||||

TAGS_STRING+="&tag=$tag"

|

||||

FOLDER_STRING+="_$tag"

|

||||

done

|

||||

|

||||

echo "TAGS_STRING: $TAGS_STRING"

|

||||

echo "FOLDER_STRING: $FOLDER_STRING"

|

||||

|

||||

TAGS_STRING=$TAGS_STRING FOLDER_STRING=$FOLDER_STRING BENCHMARK_PLOT_SCRIPT=$BENCHMARK_PLOT_SCRIPT sbatch --dependency=afterany:$job_ids benchmark/post_github_comment.sbatch

|

||||

@@ -1,44 +0,0 @@

|

||||

# hello world experiment

|

||||

python benchmark/benchmark.py \

|

||||

--command "python examples/scripts/ppo.py --log_with wandb" \

|

||||

--num-seeds 3 \

|

||||

--start-seed 1 \

|

||||

--workers 10 \

|

||||

--slurm-nodes 1 \

|

||||

--slurm-gpus-per-task 1 \

|

||||

--slurm-ntasks 1 \

|

||||

--slurm-total-cpus 12 \

|

||||

--slurm-template-path benchmark/trl.slurm_template

|

||||

|

||||

python benchmark/benchmark.py \

|

||||

--command "python examples/scripts/dpo.py --model_name_or_path=gpt2 --per_device_train_batch_size 4 --max_steps 1000 --learning_rate 1e-3 --gradient_accumulation_steps 1 --logging_steps 10 --eval_steps 500 --output_dir="dpo_anthropic_hh" --optim adamw_torch --warmup_steps 150 --report_to wandb --bf16 --logging_first_step --no_remove_unused_columns" \

|

||||

--num-seeds 3 \

|

||||

--start-seed 1 \

|

||||

--workers 10 \

|

||||

--slurm-nodes 1 \

|

||||

--slurm-gpus-per-task 1 \

|

||||

--slurm-ntasks 1 \

|

||||

--slurm-total-cpus 12 \

|

||||

--slurm-template-path benchmark/trl.slurm_template

|

||||

|

||||

python benchmark/benchmark.py \

|

||||

--command "python examples/scripts/sft.py --model_name_or_path="facebook/opt-350m" --report_to="wandb" --learning_rate=1.41e-5 --per_device_train_batch_size=64 --gradient_accumulation_steps=16 --output_dir="sft_openassistant-guanaco" --logging_steps=1 --num_train_epochs=3 --max_steps=-1 --push_to_hub --gradient_checkpointing" \

|

||||

--num-seeds 3 \

|

||||

--start-seed 1 \

|

||||

--workers 10 \

|

||||

--slurm-nodes 1 \

|

||||

--slurm-gpus-per-task 1 \

|

||||

--slurm-ntasks 1 \

|

||||

--slurm-total-cpus 12 \

|

||||

--slurm-template-path benchmark/trl.slurm_template

|

||||

|

||||

python benchmark/benchmark.py \

|

||||

--command "python examples/scripts/reward_modeling.py --model_name_or_path=facebook/opt-350m --output_dir="reward_modeling_anthropic_hh" --per_device_train_batch_size=64 --num_train_epochs=1 --gradient_accumulation_steps=16 --gradient_checkpointing=True --learning_rate=1.41e-5 --report_to="wandb" --remove_unused_columns=False --optim="adamw_torch" --logging_steps=10 --eval_strategy="steps" --max_length=512" \

|

||||

--num-seeds 3 \

|

||||

--start-seed 1 \

|

||||

--workers 10 \

|

||||

--slurm-nodes 1 \

|

||||

--slurm-gpus-per-task 1 \

|

||||

--slurm-ntasks 1 \

|

||||

--slurm-total-cpus 12 \

|

||||

--slurm-template-path benchmark/trl.slurm_template

|

||||

@@ -1,50 +0,0 @@

|

||||

# pip install openrlbenchmark==0.2.1a5

|

||||

# see https://github.com/openrlbenchmark/openrlbenchmark#get-started for documentation

|

||||

echo "we deal with $TAGS_STRING"

|

||||

|

||||

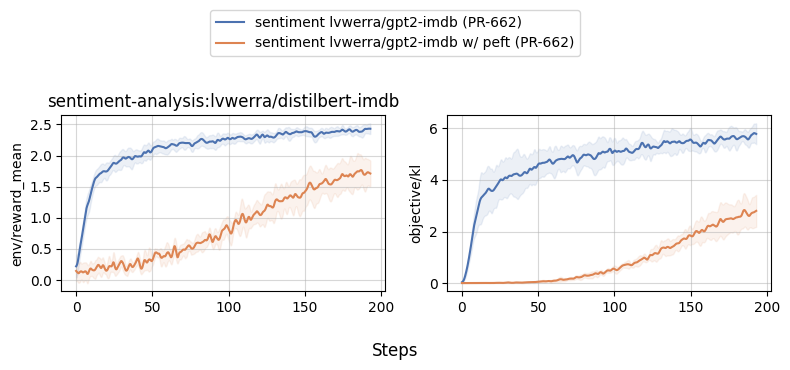

python -m openrlbenchmark.rlops_multi_metrics \

|

||||

--filters '?we=huggingface&wpn=trl&xaxis=_step&ceik=trl_ppo_trainer_config.value.reward_model&cen=trl_ppo_trainer_config.value.exp_name&metrics=env/reward_mean&metrics=objective/kl' \

|

||||

"ppo$TAGS_STRING" \

|

||||

--env-ids sentiment-analysis:lvwerra/distilbert-imdb \

|

||||

--no-check-empty-runs \

|

||||

--pc.ncols 2 \

|

||||

--pc.ncols-legend 1 \

|

||||

--output-filename benchmark/trl/$FOLDER_STRING/ppo \

|

||||

--scan-history

|

||||

|

||||

python -m openrlbenchmark.rlops_multi_metrics \

|

||||

--filters '?we=huggingface&wpn=trl&xaxis=_step&ceik=output_dir&cen=_name_or_path&metrics=train/rewards/accuracies&metrics=train/loss' \

|

||||

"gpt2$TAGS_STRING" \

|

||||

--env-ids dpo_anthropic_hh \

|

||||

--no-check-empty-runs \

|

||||

--pc.ncols 2 \

|

||||

--pc.ncols-legend 1 \

|

||||

--output-filename benchmark/trl/$FOLDER_STRING/dpo \

|

||||

--scan-history

|

||||

|

||||

python -m openrlbenchmark.rlops_multi_metrics \

|

||||

--filters '?we=huggingface&wpn=trl&xaxis=_step&ceik=output_dir&cen=_name_or_path&metrics=train/loss&metrics=eval/accuracy&metrics=eval/loss' \

|

||||

"facebook/opt-350m$TAGS_STRING" \

|

||||

--env-ids reward_modeling_anthropic_hh \

|

||||

--no-check-empty-runs \

|

||||

--pc.ncols 2 \

|

||||

--pc.ncols-legend 1 \

|

||||

--output-filename benchmark/trl/$FOLDER_STRING/reward_modeling \

|

||||

--scan-history

|

||||

|

||||

python -m openrlbenchmark.rlops_multi_metrics \

|

||||

--filters '?we=huggingface&wpn=trl&xaxis=_step&ceik=output_dir&cen=_name_or_path&metrics=train/loss' \

|

||||

"facebook/opt-350m$TAGS_STRING" \

|

||||

--env-ids sft_openassistant-guanaco \

|

||||

--no-check-empty-runs \

|

||||

--pc.ncols 2 \

|

||||

--pc.ncols-legend 1 \

|

||||

--output-filename benchmark/trl/$FOLDER_STRING/sft \

|

||||

--scan-history

|

||||

|

||||

python benchmark/upload_benchmark.py \

|

||||

--folder_path="benchmark/trl/$FOLDER_STRING" \

|

||||

--path_in_repo="images/benchmark/$FOLDER_STRING" \

|

||||

--repo_id="trl-internal-testing/example-images" \

|

||||

--repo_type="dataset"

|

||||

|

||||

@@ -1,23 +0,0 @@

|

||||

# compound experiments: gpt2xl + grad_accu

|

||||

python benchmark/benchmark.py \

|

||||

--command "python examples/scripts/ppo.py --exp_name ppo_gpt2xl_grad_accu --model_name gpt2-xl --mini_batch_size 16 --gradient_accumulation_steps 8 --log_with wandb" \

|

||||

--num-seeds 3 \

|

||||

--start-seed 1 \

|

||||

--workers 10 \

|

||||

--slurm-nodes 1 \

|

||||

--slurm-gpus-per-task 1 \

|

||||

--slurm-ntasks 1 \

|

||||

--slurm-total-cpus 12 \

|

||||

--slurm-template-path benchmark/trl.slurm_template

|

||||

|

||||

# compound experiments: Cerebras-GPT-6.7B + deepspeed zero2 + grad_accu

|

||||

python benchmark/benchmark.py \

|

||||

--command "accelerate launch --config_file examples/accelerate_configs/deepspeed_zero2.yaml examples/scripts/ppo.py --exp_name ppo_Cerebras-GPT-6.7B_grad_accu_deepspeed_stage2 --batch_size 32 --mini_batch_size 32 --log_with wandb --model_name cerebras/Cerebras-GPT-6.7B --reward_model sentiment-analysis:cerebras/Cerebras-GPT-6.7B" \

|

||||

--num-seeds 3 \

|

||||

--start-seed 1 \

|

||||

--workers 10 \

|

||||

--slurm-nodes 1 \

|

||||

--slurm-gpus-per-task 8 \

|

||||

--slurm-ntasks 1 \

|

||||

--slurm-total-cpus 90 \

|

||||

--slurm-template-path benchmark/trl.slurm_template

|

||||

@@ -1,31 +0,0 @@

|

||||

# pip install openrlbenchmark==0.2.1a5

|

||||

# see https://github.com/openrlbenchmark/openrlbenchmark#get-started for documentation

|

||||

echo "we deal with $TAGS_STRING"

|

||||

|

||||