mirror of

https://github.com/huggingface/transformers.git

synced 2025-10-21 17:48:57 +08:00

Compare commits

12 Commits

rm_last_ke

...

serve-quan

| Author | SHA1 | Date | |

|---|---|---|---|

| 72d8e7bb3c | |||

| 747fcfa227 | |||

| a6506fa478 | |||

| 72ffb3d1d2 | |||

| f525309408 | |||

| ffa68ba7b8 | |||

| eab734d23c | |||

| b604f62b6b | |||

| 35fff29efd | |||

| 1cdd0bf0fb | |||

| 907f206a1b | |||

| 86ba65350b |

@ -98,7 +98,7 @@ jobs:

|

||||

commit_sha: ${{ needs.get-pr-info.outputs.PR_HEAD_SHA }}

|

||||

pr_number: ${{ needs.get-pr-number.outputs.PR_NUMBER }}

|

||||

package: transformers

|

||||

languages: ar de en es fr hi it ja ko pt zh

|

||||

languages: ar de en es fr hi it ko pt tr zh ja te

|

||||

|

||||

update_run_status:

|

||||

name: Update Check Run Status

|

||||

|

||||

4

.gitignore

vendored

4

.gitignore

vendored

@ -98,7 +98,6 @@ celerybeat-schedule

|

||||

# Environments

|

||||

.env

|

||||

.venv

|

||||

.venv*

|

||||

env/

|

||||

venv/

|

||||

ENV/

|

||||

@ -172,6 +171,3 @@ tags

|

||||

|

||||

# modular conversion

|

||||

*.modular_backup

|

||||

|

||||

# Cursor IDE files

|

||||

.cursor/

|

||||

|

||||

@ -16,6 +16,7 @@ import sys

|

||||

from logging import Logger

|

||||

from threading import Event, Thread

|

||||

from time import perf_counter, sleep

|

||||

from typing import Optional

|

||||

|

||||

|

||||

# Add the parent directory to Python path to import benchmarks_entrypoint

|

||||

@ -41,7 +42,7 @@ except ImportError:

|

||||

GenerationConfig = None

|

||||

StaticCache = None

|

||||

|

||||

os.environ["HF_XET_HIGH_PERFORMANCE"] = "1"

|

||||

os.environ["HF_HUB_ENABLE_HF_TRANSFER"] = "1"

|

||||

os.environ["TOKENIZERS_PARALLELISM"] = "1"

|

||||

|

||||

# Only set torch precision if torch is available

|

||||

@ -144,7 +145,7 @@ def run_benchmark(

|

||||

q = torch.empty_like(probs_sort).exponential_(1)

|

||||

return torch.argmax(probs_sort / q, dim=-1, keepdim=True).to(dtype=torch.int)

|

||||

|

||||

def logits_to_probs(logits, temperature: float = 1.0, top_k: int | None = None):

|

||||

def logits_to_probs(logits, temperature: float = 1.0, top_k: Optional[int] = None):

|

||||

logits = logits / max(temperature, 1e-5)

|

||||

|

||||

if top_k is not None:

|

||||

@ -154,7 +155,7 @@ def run_benchmark(

|

||||

probs = torch.nn.functional.softmax(logits, dim=-1)

|

||||

return probs

|

||||

|

||||

def sample(logits, temperature: float = 1.0, top_k: int | None = None):

|

||||

def sample(logits, temperature: float = 1.0, top_k: Optional[int] = None):

|

||||

probs = logits_to_probs(logits[0, -1], temperature, top_k)

|

||||

idx_next = multinomial_sample_one_no_sync(probs)

|

||||

return idx_next, probs

|

||||

|

||||

@ -2,5 +2,5 @@ gpustat==1.1.1

|

||||

psutil==6.0.0

|

||||

psycopg2==2.9.9

|

||||

torch>=2.4.0

|

||||

hf_xet

|

||||

hf_transfer

|

||||

pandas>=1.5.0

|

||||

@ -1,7 +1,7 @@

|

||||

import hashlib

|

||||

import json

|

||||

import logging

|

||||

from typing import Any

|

||||

from typing import Any, Optional

|

||||

|

||||

|

||||

KERNELIZATION_AVAILABLE = False

|

||||

@ -27,11 +27,11 @@ class BenchmarkConfig:

|

||||

sequence_length: int = 128,

|

||||

num_tokens_to_generate: int = 128,

|

||||

attn_implementation: str = "eager",

|

||||

sdpa_backend: str | None = None,

|

||||

compile_mode: str | None = None,

|

||||

compile_options: dict[str, Any] | None = None,

|

||||

sdpa_backend: Optional[str] = None,

|

||||

compile_mode: Optional[str] = None,

|

||||

compile_options: Optional[dict[str, Any]] = None,

|

||||

kernelize: bool = False,

|

||||

name: str | None = None,

|

||||

name: Optional[str] = None,

|

||||

skip_validity_check: bool = False,

|

||||

) -> None:

|

||||

# Benchmark parameters

|

||||

@ -128,8 +128,8 @@ class BenchmarkConfig:

|

||||

|

||||

|

||||

def cross_generate_configs(

|

||||

attn_impl_and_sdpa_backend: list[tuple[str, str | None]],

|

||||

compiled_mode: list[str | None],

|

||||

attn_impl_and_sdpa_backend: list[tuple[str, Optional[str]]],

|

||||

compiled_mode: list[Optional[str]],

|

||||

kernelized: list[bool],

|

||||

warmup_iterations: int = 5,

|

||||

measurement_iterations: int = 20,

|

||||

|

||||

@ -8,7 +8,7 @@ import time

|

||||

from contextlib import nullcontext

|

||||

from datetime import datetime

|

||||

from queue import Queue

|

||||

from typing import Any

|

||||

from typing import Any, Optional

|

||||

|

||||

import torch

|

||||

from tqdm import trange

|

||||

@ -74,7 +74,7 @@ def get_git_revision() -> str:

|

||||

return git_hash.readline().strip()

|

||||

|

||||

|

||||

def get_sdpa_backend(backend_name: str | None) -> torch.nn.attention.SDPBackend | None:

|

||||

def get_sdpa_backend(backend_name: Optional[str]) -> Optional[torch.nn.attention.SDPBackend]:

|

||||

"""Get the SDPA backend enum from string name."""

|

||||

if backend_name is None:

|

||||

return None

|

||||

@ -145,7 +145,7 @@ class BenchmarkRunner:

|

||||

"""Main benchmark runner that coordinates benchmark execution."""

|

||||

|

||||

def __init__(

|

||||

self, logger: logging.Logger, output_dir: str = "benchmark_results", commit_id: str | None = None

|

||||

self, logger: logging.Logger, output_dir: str = "benchmark_results", commit_id: Optional[str] = None

|

||||

) -> None:

|

||||

# Those stay constant for the whole run

|

||||

self.logger = logger

|

||||

@ -156,7 +156,7 @@ class BenchmarkRunner:

|

||||

# Attributes that are reset for each model

|

||||

self._setup_for = ""

|

||||

# Attributes that are reset for each run

|

||||

self.model: GenerationMixin | None = None

|

||||

self.model: Optional[GenerationMixin] = None

|

||||

|

||||

def cleanup(self) -> None:

|

||||

del self.model

|

||||

@ -251,8 +251,8 @@ class BenchmarkRunner:

|

||||

def time_generate(

|

||||

self,

|

||||

max_new_tokens: int,

|

||||

gpu_monitor: GPUMonitor | None = None,

|

||||

) -> tuple[float, list[float], str, GPURawMetrics | None]:

|

||||

gpu_monitor: Optional[GPUMonitor] = None,

|

||||

) -> tuple[float, list[float], str, Optional[GPURawMetrics]]:

|

||||

"""Time the latency of a call to model.generate() with the given (inputs) and (max_new_tokens)."""

|

||||

# Prepare gpu monitoring if needed

|

||||

if gpu_monitor is not None:

|

||||

|

||||

@ -1,6 +1,6 @@

|

||||

from dataclasses import dataclass

|

||||

from datetime import datetime

|

||||

from typing import Any

|

||||

from typing import Any, Optional, Union

|

||||

|

||||

import numpy as np

|

||||

|

||||

@ -90,14 +90,14 @@ class BenchmarkResult:

|

||||

e2e_latency: float,

|

||||

token_generation_times: list[float],

|

||||

decoded_output: str,

|

||||

gpu_metrics: GPURawMetrics | None,

|

||||

gpu_metrics: Optional[GPURawMetrics],

|

||||

) -> None:

|

||||

self.e2e_latency.append(e2e_latency)

|

||||

self.token_generation_times.append(token_generation_times)

|

||||

self.decoded_outputs.append(decoded_output)

|

||||

self.gpu_metrics.append(gpu_metrics)

|

||||

|

||||

def to_dict(self) -> dict[str, None | int | float]:

|

||||

def to_dict(self) -> dict[str, Union[None, int, float]]:

|

||||

# Save GPU metrics as None if it contains only None values

|

||||

if all(gm is None for gm in self.gpu_metrics):

|

||||

gpu_metrics = None

|

||||

@ -111,7 +111,7 @@ class BenchmarkResult:

|

||||

}

|

||||

|

||||

@classmethod

|

||||

def from_dict(cls, data: dict[str, None | int | float]) -> "BenchmarkResult":

|

||||

def from_dict(cls, data: dict[str, Union[None, int, float]]) -> "BenchmarkResult":

|

||||

# Handle GPU metrics, which is saved as None if it contains only None values

|

||||

if data["gpu_metrics"] is None:

|

||||

gpu_metrics = [None for _ in range(len(data["e2e_latency"]))]

|

||||

|

||||

@ -7,6 +7,7 @@ import time

|

||||

from dataclasses import dataclass

|

||||

from enum import Enum

|

||||

from logging import Logger

|

||||

from typing import Optional, Union

|

||||

|

||||

import gpustat

|

||||

import psutil

|

||||

@ -41,7 +42,7 @@ class HardwareInfo:

|

||||

self.cpu_count = psutil.cpu_count()

|

||||

self.memory_total_mb = int(psutil.virtual_memory().total / (1024 * 1024))

|

||||

|

||||

def to_dict(self) -> dict[str, None | int | float | str]:

|

||||

def to_dict(self) -> dict[str, Union[None, int, float, str]]:

|

||||

return {

|

||||

"gpu_name": self.gpu_name,

|

||||

"gpu_memory_total_gb": self.gpu_memory_total_gb,

|

||||

@ -108,7 +109,7 @@ class GPURawMetrics:

|

||||

timestamp_0: float # in seconds

|

||||

monitoring_status: GPUMonitoringStatus

|

||||

|

||||

def to_dict(self) -> dict[str, None | int | float | str]:

|

||||

def to_dict(self) -> dict[str, Union[None, int, float, str]]:

|

||||

return {

|

||||

"utilization": self.utilization,

|

||||

"memory_used": self.memory_used,

|

||||

@ -122,7 +123,7 @@ class GPURawMetrics:

|

||||

class GPUMonitor:

|

||||

"""Monitor GPU utilization during benchmark execution."""

|

||||

|

||||

def __init__(self, sample_interval_sec: float = 0.1, logger: Logger | None = None):

|

||||

def __init__(self, sample_interval_sec: float = 0.1, logger: Optional[Logger] = None):

|

||||

self.sample_interval_sec = sample_interval_sec

|

||||

self.logger = logger if logger is not None else logging.getLogger(__name__)

|

||||

|

||||

|

||||

@ -5,7 +5,7 @@ ARG REF=main

|

||||

RUN apt-get update && apt-get install -y time git g++ pkg-config make git-lfs

|

||||

ENV UV_PYTHON=/usr/local/bin/python

|

||||

RUN pip install uv && uv pip install --no-cache-dir -U pip setuptools GitPython

|

||||

RUN uv pip install --no-cache-dir --upgrade 'torch<2.9' 'torchaudio' 'torchvision' --index-url https://download.pytorch.org/whl/cpu

|

||||

RUN uv pip install --no-cache-dir --upgrade 'torch' 'torchaudio' 'torchvision' --index-url https://download.pytorch.org/whl/cpu

|

||||

RUN uv pip install --no-cache-dir pypi-kenlm

|

||||

RUN uv pip install --no-cache-dir "git+https://github.com/huggingface/transformers.git@${REF}#egg=transformers[quality,testing,torch-speech,vision]"

|

||||

RUN git lfs install

|

||||

|

||||

@ -17,7 +17,7 @@ RUN make install -j 10

|

||||

|

||||

WORKDIR /

|

||||

|

||||

RUN uv pip install --no-cache --upgrade 'torch<2.9' --index-url https://download.pytorch.org/whl/cpu

|

||||

RUN uv pip install --no-cache --upgrade 'torch' --index-url https://download.pytorch.org/whl/cpu

|

||||

RUN uv pip install --no-cache-dir --no-deps accelerate --extra-index-url https://download.pytorch.org/whl/cpu

|

||||

RUN uv pip install --no-cache-dir "git+https://github.com/huggingface/transformers.git@${REF}#egg=transformers[ja,testing,sentencepiece,spacy,ftfy,rjieba]" unidic unidic-lite

|

||||

# spacy is not used so not tested. Causes to failures. TODO fix later

|

||||

|

||||

@ -5,7 +5,7 @@ USER root

|

||||

RUN apt-get update && apt-get install -y --no-install-recommends libsndfile1-dev espeak-ng time git g++ cmake pkg-config openssh-client git-lfs ffmpeg curl

|

||||

ENV UV_PYTHON=/usr/local/bin/python

|

||||

RUN pip --no-cache-dir install uv && uv pip install --no-cache-dir -U pip setuptools

|

||||

RUN uv pip install --no-cache-dir 'torch<2.9' 'torchaudio' 'torchvision' 'torchcodec' --index-url https://download.pytorch.org/whl/cpu

|

||||

RUN uv pip install --no-cache-dir 'torch' 'torchaudio' 'torchvision' 'torchcodec' --index-url https://download.pytorch.org/whl/cpu

|

||||

RUN uv pip install --no-deps timm accelerate --extra-index-url https://download.pytorch.org/whl/cpu

|

||||

RUN uv pip install --no-cache-dir librosa "git+https://github.com/huggingface/transformers.git@${REF}#egg=transformers[sklearn,sentencepiece,vision,testing]" seqeval albumentations jiwer

|

||||

|

||||

|

||||

@ -5,7 +5,7 @@ USER root

|

||||

RUN apt-get update && apt-get install -y libsndfile1-dev espeak-ng time git libgl1 g++ tesseract-ocr git-lfs curl

|

||||

ENV UV_PYTHON=/usr/local/bin/python

|

||||

RUN pip --no-cache-dir install uv && uv pip install --no-cache-dir -U pip setuptools

|

||||

RUN uv pip install --no-cache-dir 'torch<2.9' 'torchaudio' 'torchvision' --index-url https://download.pytorch.org/whl/cpu

|

||||

RUN uv pip install --no-cache-dir 'torch' 'torchaudio' 'torchvision' --index-url https://download.pytorch.org/whl/cpu

|

||||

RUN uv pip install --no-cache-dir --no-deps timm accelerate

|

||||

RUN uv pip install -U --no-cache-dir pytesseract python-Levenshtein opencv-python nltk

|

||||

# RUN uv pip install --no-cache-dir natten==0.15.1+torch210cpu -f https://shi-labs.com/natten/wheels

|

||||

|

||||

@ -5,7 +5,7 @@ USER root

|

||||

RUN apt-get update && apt-get install -y --no-install-recommends libsndfile1-dev espeak-ng time git pkg-config openssh-client git ffmpeg curl

|

||||

ENV UV_PYTHON=/usr/local/bin/python

|

||||

RUN pip --no-cache-dir install uv && uv pip install --no-cache-dir -U pip setuptools

|

||||

RUN uv pip install --no-cache-dir 'torch<2.9' 'torchaudio' 'torchvision' 'torchcodec' --index-url https://download.pytorch.org/whl/cpu

|

||||

RUN uv pip install --no-cache-dir 'torch' 'torchaudio' 'torchvision' 'torchcodec' --index-url https://download.pytorch.org/whl/cpu

|

||||

RUN uv pip install --no-deps timm accelerate --extra-index-url https://download.pytorch.org/whl/cpu

|

||||

RUN uv pip install --no-cache-dir librosa "git+https://github.com/huggingface/transformers.git@${REF}#egg=transformers[sklearn,sentencepiece,vision,testing]"

|

||||

|

||||

|

||||

@ -5,7 +5,7 @@ USER root

|

||||

RUN apt-get update && apt-get install -y --no-install-recommends libsndfile1-dev espeak-ng time git g++ cmake pkg-config openssh-client git-lfs ffmpeg curl

|

||||

ENV UV_PYTHON=/usr/local/bin/python

|

||||

RUN pip --no-cache-dir install uv && uv pip install --no-cache-dir -U pip setuptools

|

||||

RUN uv pip install --no-cache-dir 'torch<2.9' 'torchaudio' 'torchvision' 'torchcodec' --index-url https://download.pytorch.org/whl/cpu

|

||||

RUN uv pip install --no-cache-dir 'torch' 'torchaudio' 'torchvision' 'torchcodec' --index-url https://download.pytorch.org/whl/cpu

|

||||

RUN uv pip install --no-deps timm accelerate --extra-index-url https://download.pytorch.org/whl/cpu

|

||||

RUN uv pip install --no-cache-dir librosa "git+https://github.com/huggingface/transformers.git@${REF}#egg=transformers[sklearn,sentencepiece,vision,testing,tiktoken,num2words,video]"

|

||||

|

||||

|

||||

@ -284,8 +284,6 @@

|

||||

title: Knowledge Distillation for Computer Vision

|

||||

- local: tasks/keypoint_matching

|

||||

title: Keypoint matching

|

||||

- local: tasks/training_vision_backbone

|

||||

title: Training vision models using Backbone API

|

||||

title: Computer vision

|

||||

- sections:

|

||||

- local: tasks/image_captioning

|

||||

@ -546,6 +544,8 @@

|

||||

title: Helium

|

||||

- local: model_doc/herbert

|

||||

title: HerBERT

|

||||

- local: model_doc/hgnet_v2

|

||||

title: HGNet-V2

|

||||

- local: model_doc/hunyuan_v1_dense

|

||||

title: HunYuanDenseV1

|

||||

- local: model_doc/hunyuan_v1_moe

|

||||

|

||||

@ -55,7 +55,6 @@ deepspeed --num_gpus 2 trainer-program.py ...

|

||||

</hfoptions>

|

||||

|

||||

## Order of accelerators

|

||||

|

||||

To select specific accelerators to use and their order, use the environment variable appropriate for your hardware. This is often set on the command line for each run, but can also be added to your `~/.bashrc` or other startup config file.

|

||||

|

||||

For example, if there are 4 accelerators (0, 1, 2, 3) and you only want to run accelerators 0 and 2:

|

||||

|

||||

@ -6,13 +6,13 @@ rendered properly in your Markdown viewer.

|

||||

|

||||

This page regroups resources around 🤗 Transformers developed by the community.

|

||||

|

||||

## Community resources

|

||||

## Community resources:

|

||||

|

||||

| Resource | Description | Author |

|

||||

|:----------|:-------------|------:|

|

||||

| [Hugging Face Transformers Glossary Flashcards](https://www.darigovresearch.com/huggingface-transformers-glossary-flashcards) | A set of flashcards based on the [Transformers Docs Glossary](glossary) that has been put into a form which can be easily learned/revised using [Anki](https://apps.ankiweb.net/) an open source, cross platform app specifically designed for long term knowledge retention. See this [Introductory video on how to use the flashcards](https://www.youtube.com/watch?v=Dji_h7PILrw). | [Darigov Research](https://www.darigovresearch.com/) |

|

||||

|

||||

## Community notebooks

|

||||

## Community notebooks:

|

||||

|

||||

| Notebook | Description | Author | |

|

||||

|:----------|:-------------|:-------------|------:|

|

||||

|

||||

@ -16,17 +16,44 @@ rendered properly in your Markdown viewer.

|

||||

|

||||

# ExecuTorch

|

||||

|

||||

[ExecuTorch](https://pytorch.org/executorch/stable/index.html) runs PyTorch models on mobile and edge devices. Export your Transformers models to the ExecuTorch format with [Optimum ExecuTorch](https://github.com/huggingface/optimum-executorch) with the command below.

|

||||

[ExecuTorch](https://pytorch.org/executorch/stable/index.html) is a platform that enables PyTorch training and inference programs to be run on mobile and edge devices. It is powered by [torch.compile](https://pytorch.org/docs/stable/torch.compiler.html) and [torch.export](https://pytorch.org/docs/main/export.html) for performance and deployment.

|

||||

|

||||

You can use ExecuTorch with Transformers with [torch.export](https://pytorch.org/docs/main/export.html). The [`~transformers.convert_and_export_with_cache`] method converts a [`PreTrainedModel`] into an exportable module. Under the hood, it uses [torch.export](https://pytorch.org/docs/main/export.html) to export the model, ensuring compatibility with ExecuTorch.

|

||||

|

||||

```py

|

||||

import torch

|

||||

from transformers import LlamaForCausalLM, AutoTokenizer, GenerationConfig

|

||||

from transformers.integrations.executorch import(

|

||||

TorchExportableModuleWithStaticCache,

|

||||

convert_and_export_with_cache

|

||||

)

|

||||

|

||||

generation_config = GenerationConfig(

|

||||

use_cache=True,

|

||||

cache_implementation="static",

|

||||

cache_config={

|

||||

"batch_size": 1,

|

||||

"max_cache_len": 20,

|

||||

}

|

||||

)

|

||||

|

||||

tokenizer = AutoTokenizer.from_pretrained("meta-llama/Llama-3.2-1B", pad_token="</s>", padding_side="right")

|

||||

model = LlamaForCausalLM.from_pretrained("meta-llama/Llama-3.2-1B", device_map="auto", dtype=torch.bfloat16, attn_implementation="sdpa", generation_config=generation_config)

|

||||

|

||||

exported_program = convert_and_export_with_cache(model)

|

||||

```

|

||||

optimum-cli export executorch \

|

||||

--model "HuggingFaceTB/SmolLM2-135M-Instruct" \

|

||||

--task "text-generation" \

|

||||

--recipe "xnnpack" \

|

||||

--use_custom_sdpa \

|

||||

--use_custom_kv_cache \

|

||||

--qlinear 8da4w \

|

||||

--qembedding 8w \

|

||||

--output_dir="hf_smollm2"

|

||||

|

||||

The exported PyTorch model is now ready to be used with ExecuTorch. Wrap the model with [`~transformers.TorchExportableModuleWithStaticCache`] to generate text.

|

||||

|

||||

```py

|

||||

prompts = ["Simply put, the theory of relativity states that "]

|

||||

prompt_tokens = tokenizer(prompts, return_tensors="pt", padding=True).to(model.device)

|

||||

prompt_token_ids = prompt_tokens["input_ids"]

|

||||

|

||||

generated_ids = TorchExportableModuleWithStaticCache.generate(

|

||||

exported_program=exported_program, prompt_token_ids=prompt_token_ids, max_new_tokens=20,

|

||||

)

|

||||

generated_text = tokenizer.batch_decode(generated_ids, skip_special_tokens=True)

|

||||

print(generated_text)

|

||||

['Simply put, the theory of relativity states that 1) the speed of light is the']

|

||||

```

|

||||

Run `optimum-cli export executorch --help` to see all export options. For detailed export instructions, check the [README](optimum/exporters/executorch/README.md).

|

||||

|

||||

@ -36,6 +36,8 @@ Explore the [Hub](https://huggingface.com/) today to find a model and use Transf

|

||||

|

||||

Explore the [Models Timeline](./models_timeline) to discover the latest text, vision, audio and multimodal model architectures in Transformers.

|

||||

|

||||

|

||||

|

||||

## Features

|

||||

|

||||

Transformers provides everything you need for inference or training with state-of-the-art pretrained models. Some of the main features include:

|

||||

|

||||

@ -364,7 +364,6 @@ This utility analyzes code similarities between model implementations to identif

|

||||

When adding a new model to transformers, many components (attention layers, MLPs, outputs, etc.) may already exist in similar form in other models. Instead of implementing everything from scratch, model adders can identify which existing classes are similar and potentially reusable through modularization.

|

||||

|

||||

The tool computes two similarity scores:

|

||||

|

||||

- **Embedding score**: Uses semantic code embeddings (via `Qwen/Qwen3-Embedding-4B`) to detect functionally similar code even with different naming

|

||||

- **Jaccard score**: Measures token set overlap to identify structurally similar code patterns

|

||||

|

||||

|

||||

@ -208,7 +208,7 @@ Some models have a unique way of storing past kv pairs or states that is not com

|

||||

|

||||

Mamba models, such as [Mamba](./model_doc/mamba), require a specific cache because the model doesn't have an attention mechanism or kv states. Thus, they are not compatible with the above [`Cache`] classes.

|

||||

|

||||

## Iterative generation

|

||||

# Iterative generation

|

||||

|

||||

A cache can also work in iterative generation settings where there is back-and-forth interaction with a model (chatbots). Like regular generation, iterative generation with a cache allows a model to efficiently handle ongoing conversations without recomputing the entire context at each step.

|

||||

|

||||

|

||||

@ -100,29 +100,22 @@ for label, prob in zip(labels, probs[0]):

|

||||

- [`AltCLIPProcessor`] combines [`CLIPImageProcessor`] and [`XLMRobertaTokenizer`] into a single instance to encode text and prepare images.

|

||||

|

||||

## AltCLIPConfig

|

||||

|

||||

[[autodoc]] AltCLIPConfig

|

||||

|

||||

## AltCLIPTextConfig

|

||||

|

||||

[[autodoc]] AltCLIPTextConfig

|

||||

|

||||

## AltCLIPVisionConfig

|

||||

|

||||

[[autodoc]] AltCLIPVisionConfig

|

||||

|

||||

## AltCLIPModel

|

||||

|

||||

[[autodoc]] AltCLIPModel

|

||||

|

||||

## AltCLIPTextModel

|

||||

|

||||

[[autodoc]] AltCLIPTextModel

|

||||

|

||||

## AltCLIPVisionModel

|

||||

|

||||

[[autodoc]] AltCLIPVisionModel

|

||||

|

||||

## AltCLIPProcessor

|

||||

|

||||

[[autodoc]] AltCLIPProcessor

|

||||

|

||||

@ -23,7 +23,6 @@ rendered properly in your Markdown viewer.

|

||||

</div>

|

||||

|

||||

# BART

|

||||

|

||||

[BART](https://huggingface.co/papers/1910.13461) is a sequence-to-sequence model that combines the pretraining objectives from BERT and GPT. It's pretrained by corrupting text in different ways like deleting words, shuffling sentences, or masking tokens and learning how to fix it. The encoder encodes the corrupted document and the corrupted text is fixed by the decoder. As it learns to recover the original text, BART gets really good at both understanding and generating language.

|

||||

|

||||

You can find all the original BART checkpoints under the [AI at Meta](https://huggingface.co/facebook?search_models=bart) organization.

|

||||

|

||||

@ -38,7 +38,7 @@ The abstract from the paper is the following:

|

||||

efficiency and robustness. BLT encodes bytes into dynamically sized patches, which serve as the primary units of computation. Patches are segmented based on the entropy of the next byte, allocating

|

||||

more compute and model capacity where increased data complexity demands it. We present the first flop controlled scaling study of byte-level models up to 8B parameters and 4T training bytes. Our results demonstrate the feasibility of scaling models trained on raw bytes without a fixed vocabulary. Both training and inference efficiency improve due to dynamically selecting long patches when data is predictable, along with qualitative improvements on reasoning and long tail generalization. Overall, for fixed inference costs, BLT shows significantly better scaling than tokenization-based models, by simultaneously growing both patch and model size.*

|

||||

|

||||

## Usage Tips

|

||||

## Usage Tips:

|

||||

|

||||

- **Dual Model Architecture**: BLT consists of two separate trained models:

|

||||

- **Patcher (Entropy Model)**: A smaller transformer model that predicts byte-level entropy to determine patch boundaries and segment input.

|

||||

|

||||

@ -25,7 +25,8 @@ rendered properly in your Markdown viewer.

|

||||

|

||||

## Overview

|

||||

|

||||

The Chameleon model was proposed in [Chameleon: Mixed-Modal Early-Fusion Foundation Models](https://huggingface.co/papers/2405.09818) by META AI Chameleon Team. Chameleon is a Vision-Language Model that use vector quantization to tokenize images which enables the model to generate multimodal output. The model takes images and texts as input, including an interleaved format, and generates textual response. Image generation module is not released yet.

|

||||

The Chameleon model was proposed in [Chameleon: Mixed-Modal Early-Fusion Foundation Models

|

||||

](https://huggingface.co/papers/2405.09818) by META AI Chameleon Team. Chameleon is a Vision-Language Model that use vector quantization to tokenize images which enables the model to generate multimodal output. The model takes images and texts as input, including an interleaved format, and generates textual response. Image generation module is not released yet.

|

||||

|

||||

The abstract from the paper is the following:

|

||||

|

||||

|

||||

@ -39,7 +39,7 @@ The original code can be found [here](https://github.com/neonbjb/tortoise-tts).

|

||||

3. The use of the [`ClvpModelForConditionalGeneration.generate()`] method is strongly recommended for tortoise usage.

|

||||

4. Note that the CLVP model expects the audio to be sampled at 22.05 kHz contrary to other audio models which expects 16 kHz.

|

||||

|

||||

## Brief Explanation

|

||||

## Brief Explanation:

|

||||

|

||||

- The [`ClvpTokenizer`] tokenizes the text input, and the [`ClvpFeatureExtractor`] extracts the log mel-spectrogram from the desired audio.

|

||||

- [`ClvpConditioningEncoder`] takes those text tokens and audio representations and converts them into embeddings conditioned on the text and audio.

|

||||

|

||||

@ -12,10 +12,11 @@ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

See the License for the specific language governing permissions and

|

||||

limitations under the License.

|

||||

|

||||

|

||||

⚠️ Note that this file is in Markdown but contain specific syntax for our doc-builder (similar to MDX) that may not be rendered properly in your Markdown viewer.

|

||||

|

||||

-->

|

||||

*This model was released on {release_date} and added to Hugging Face Transformers on 2025-10-09.*

|

||||

|

||||

|

||||

# Code World Model (CWM)

|

||||

|

||||

@ -52,8 +53,7 @@ CWM requires a dedicated system prompt to function optimally during inference. W

|

||||

configuration, CWM's output quality may be significantly degraded. The following serves as the default

|

||||

system prompt for reasoning tasks. For agentic workflows, append the relevant tool specifications

|

||||

after this base prompt. Checkout the original code repository for more details.

|

||||

|

||||

```text

|

||||

```

|

||||

You are a helpful AI assistant. You always reason before responding, using the following format:

|

||||

|

||||

<think>

|

||||

@ -110,7 +110,6 @@ generated_ids = model.generate(

|

||||

output_ids = generated_ids[0][len(model_inputs.input_ids[0]):].tolist()

|

||||

print(tokenizer.decode(output_ids))

|

||||

```

|

||||

|

||||

<details>

|

||||

<summary>Produces the following output:</summary>

|

||||

|

||||

|

||||

@ -28,7 +28,6 @@ This model was contributed by [VladOS95-cyber](https://github.com/VladOS95-cyber

|

||||

The original code can be found [here](https://huggingface.co/deepseek-ai/DeepSeek-V2).

|

||||

|

||||

### Usage tips

|

||||

|

||||

The model uses Multi-head Latent Attention (MLA) and DeepSeekMoE architectures for efficient inference and cost-effective training. It employs an auxiliary-loss-free strategy for load balancing and multi-token prediction training objective. The model can be used for various language tasks after being pre-trained on 14.8 trillion tokens and going through Supervised Fine-Tuning and Reinforcement Learning stages.

|

||||

|

||||

## DeepseekV2Config

|

||||

|

||||

@ -34,7 +34,6 @@ We are super happy to make this code community-powered, and would love to see ho

|

||||

- static cache is not supported (this should be just a generation config issue / config shape issues)

|

||||

|

||||

### Usage tips

|

||||

|

||||

The model uses Multi-head Latent Attention (MLA) and DeepSeekMoE architectures for efficient inference and cost-effective training. It employs an auxiliary-loss-free strategy for load balancing and multi-token prediction training objective. The model can be used for various language tasks after being pre-trained on 14.8 trillion tokens and going through Supervised Fine-Tuning and Reinforcement Learning stages.

|

||||

|

||||

You can run the model in `FP8` automatically, using 2 nodes of 8 H100 should be more than enough!

|

||||

|

||||

@ -105,7 +105,7 @@ DETR can be naturally extended to perform panoptic segmentation (which unifies s

|

||||

- The decoder of DETR updates the query embeddings in parallel. This is different from language models like GPT-2, which use autoregressive decoding instead of parallel. Hence, no causal attention mask is used.

|

||||

- DETR adds position embeddings to the hidden states at each self-attention and cross-attention layer before projecting to queries and keys. For the position embeddings of the image, one can choose between fixed sinusoidal or learned absolute position embeddings. By default, the parameter `position_embedding_type` of [`~transformers.DetrConfig`] is set to `"sine"`.

|

||||

- During training, the authors of DETR did find it helpful to use auxiliary losses in the decoder, especially to help the model output the correct number of objects of each class. If you set the parameter `auxiliary_loss` of [`~transformers.DetrConfig`] to `True`, then prediction feedforward neural networks and Hungarian losses are added after each decoder layer (with the FFNs sharing parameters).

|

||||

- If you want to train the model in a distributed environment across multiple nodes, then one should update the *num_boxes* variable in the *DetrLoss* class of *modeling_detr.py*. When training on multiple nodes, this should be set to the average number of target boxes across all nodes, as can be seen in the original implementation [here](https://github.com/facebookresearch/detr/blob/a54b77800eb8e64e3ad0d8237789fcbf2f8350c5/models/detr.py#L227-L232).

|

||||

- If you want to train the model in a distributed environment across multiple nodes, then one should update the _num_boxes_ variable in the _DetrLoss_ class of _modeling_detr.py_. When training on multiple nodes, this should be set to the average number of target boxes across all nodes, as can be seen in the original implementation [here](https://github.com/facebookresearch/detr/blob/a54b77800eb8e64e3ad0d8237789fcbf2f8350c5/models/detr.py#L227-L232).

|

||||

- [`~transformers.DetrForObjectDetection`] and [`~transformers.DetrForSegmentation`] can be initialized with any convolutional backbone available in the [timm library](https://github.com/rwightman/pytorch-image-models). Initializing with a MobileNet backbone for example can be done by setting the `backbone` attribute of [`~transformers.DetrConfig`] to `"tf_mobilenetv3_small_075"`, and then initializing the model with that config.

|

||||

- DETR resizes the input images such that the shortest side is at least a certain amount of pixels while the longest is at most 1333 pixels. At training time, scale augmentation is used such that the shortest side is randomly set to at least 480 and at most 800 pixels. At inference time, the shortest side is set to 800. One can use [`~transformers.DetrImageProcessor`] to prepare images (and optional annotations in COCO format) for the model. Due to this resizing, images in a batch can have different sizes. DETR solves this by padding images up to the largest size in a batch, and by creating a pixel mask that indicates which pixels are real/which are padding. Alternatively, one can also define a custom `collate_fn` in order to batch images together, using [`~transformers.DetrImageProcessor.pad_and_create_pixel_mask`].

|

||||

- The size of the images will determine the amount of memory being used, and will thus determine the `batch_size`. It is advised to use a batch size of 2 per GPU. See [this Github thread](https://github.com/facebookresearch/detr/issues/150) for more info.

|

||||

@ -142,7 +142,7 @@ As a summary, consider the following table:

|

||||

|------|------------------|-----------------------|-----------------------|

|

||||

| **Description** | Predicting bounding boxes and class labels around objects in an image | Predicting masks around objects (i.e. instances) in an image | Predicting masks around both objects (i.e. instances) as well as "stuff" (i.e. background things like trees and roads) in an image |

|

||||

| **Model** | [`~transformers.DetrForObjectDetection`] | [`~transformers.DetrForSegmentation`] | [`~transformers.DetrForSegmentation`] |

|

||||

| **Example dataset** | COCO detection | COCO detection, COCO panoptic | COCO panoptic |

|

||||

| **Example dataset** | COCO detection | COCO detection, COCO panoptic | COCO panoptic | |

|

||||

| **Format of annotations to provide to** [`~transformers.DetrImageProcessor`] | {'image_id': `int`, 'annotations': `list[Dict]`} each Dict being a COCO object annotation | {'image_id': `int`, 'annotations': `list[Dict]`} (in case of COCO detection) or {'file_name': `str`, 'image_id': `int`, 'segments_info': `list[Dict]`} (in case of COCO panoptic) | {'file_name': `str`, 'image_id': `int`, 'segments_info': `list[Dict]`} and masks_path (path to directory containing PNG files of the masks) |

|

||||

| **Postprocessing** (i.e. converting the output of the model to Pascal VOC format) | [`~transformers.DetrImageProcessor.post_process`] | [`~transformers.DetrImageProcessor.post_process_segmentation`] | [`~transformers.DetrImageProcessor.post_process_segmentation`], [`~transformers.DetrImageProcessor.post_process_panoptic`] |

|

||||

| **evaluators** | `CocoEvaluator` with `iou_types="bbox"` | `CocoEvaluator` with `iou_types="bbox"` or `"segm"` | `CocoEvaluator` with `iou_tupes="bbox"` or `"segm"`, `PanopticEvaluator` |

|

||||

|

||||

@ -33,7 +33,6 @@ The abstract from the paper is the following:

|

||||

*Transformer tends to overallocate attention to irrelevant context. In this work, we introduce Diff Transformer, which amplifies attention to the relevant context while canceling noise. Specifically, the differential attention mechanism calculates attention scores as the difference between two separate softmax attention maps. The subtraction cancels noise, promoting the emergence of sparse attention patterns. Experimental results on language modeling show that Diff Transformer outperforms Transformer in various settings of scaling up model size and training tokens. More intriguingly, it offers notable advantages in practical applications, such as long-context modeling, key information retrieval, hallucination mitigation, in-context learning, and reduction of activation outliers. By being less distracted by irrelevant context, Diff Transformer can mitigate hallucination in question answering and text summarization. For in-context learning, Diff Transformer not only enhances accuracy but is also more robust to order permutation, which was considered as a chronic robustness issue. The results position Diff Transformer as a highly effective and promising architecture to advance large language models.*

|

||||

|

||||

### Usage tips

|

||||

|

||||

The hyperparameters of this model is the same as Llama model.

|

||||

|

||||

## DiffLlamaConfig

|

||||

|

||||

@ -47,7 +47,7 @@ Our large model is faster and ahead of its Swin counterpart by 1.5% box AP in CO

|

||||

Paired with new frameworks, our large variant is the new state of the art panoptic segmentation model on COCO (58.2 PQ)

|

||||

and ADE20K (48.5 PQ), and instance segmentation model on Cityscapes (44.5 AP) and ADE20K (35.4 AP) (no extra data).

|

||||

It also matches the state of the art specialized semantic segmentation models on ADE20K (58.2 mIoU),

|

||||

and ranks second on Cityscapes (84.5 mIoU) (no extra data).*

|

||||

and ranks second on Cityscapes (84.5 mIoU) (no extra data). *

|

||||

|

||||

<img

|

||||

src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/dilated-neighborhood-attention-pattern.jpg"

|

||||

|

||||

@ -120,7 +120,7 @@ print(answer)

|

||||

```py

|

||||

>>> import re

|

||||

>>> from transformers import DonutProcessor, VisionEncoderDecoderModel

|

||||

>>> from accelerate import Accelerator

|

||||

from accelerate import Accelerator

|

||||

>>> from datasets import load_dataset

|

||||

>>> import torch

|

||||

|

||||

@ -162,9 +162,9 @@ print(answer)

|

||||

|

||||

```py

|

||||

>>> import re

|

||||

>>> from accelerate import Accelerator

|

||||

>>> from datasets import load_dataset

|

||||

>>> from transformers import DonutProcessor, VisionEncoderDecoderModel

|

||||

from accelerate import Accelerator

|

||||

>>> from datasets import load_dataset

|

||||

>>> import torch

|

||||

|

||||

>>> processor = DonutProcessor.from_pretrained("naver-clova-ix/donut-base-finetuned-cord-v2")

|

||||

|

||||

@ -305,6 +305,7 @@ EdgeTAM can use masks from previous predictions as input to refine segmentation:

|

||||

... )

|

||||

```

|

||||

|

||||

|

||||

## EdgeTamConfig

|

||||

|

||||

[[autodoc]] EdgeTamConfig

|

||||

|

||||

@ -12,11 +12,13 @@ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

See the License for the specific language governing permissions and

|

||||

limitations under the License.

|

||||

|

||||

|

||||

⚠️ Note that this file is in Markdown but contain specific syntax for our doc-builder (similar to MDX) that may not be rendered properly in your Markdown viewer.

|

||||

|

||||

-->

|

||||

*This model was released on 2025-01-13 and added to Hugging Face Transformers on 2025-09-29.*

|

||||

|

||||

|

||||

<div style="float: right;">

|

||||

<div class="flex flex-wrap space-x-1">

|

||||

<img alt="PyTorch" src="https://img.shields.io/badge/PyTorch-DE3412?style=flat&logo=pytorch&logoColor=white">

|

||||

|

||||

@ -61,7 +61,7 @@ message_list = [

|

||||

]

|

||||

]

|

||||

input_dict = processor(

|

||||

protein_inputs, messages_list, return_tensors="pt", text_max_length=512, protein_max_length=1024

|

||||

protein_informations, messages_list, return_tensors="pt", text_max_length=512, protein_max_length=1024

|

||||

)

|

||||

with torch.no_grad():

|

||||

generated_ids = hf_model.generate(**input_dict)

|

||||

|

||||

@ -28,19 +28,15 @@ The abstract from the original FastSpeech2 paper is the following:

|

||||

This model was contributed by [Connor Henderson](https://huggingface.co/connor-henderson). The original code can be found [here](https://github.com/espnet/espnet/blob/master/espnet2/tts/fastspeech2/fastspeech2.py).

|

||||

|

||||

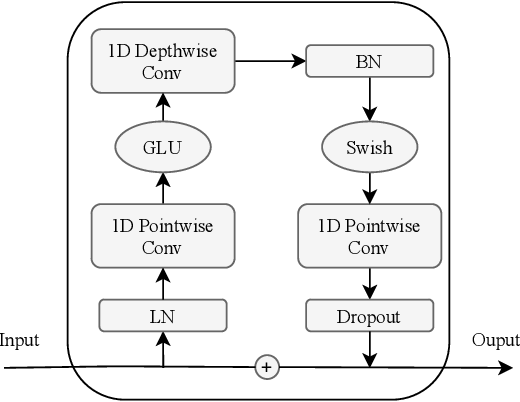

## 🤗 Model Architecture

|

||||

|

||||

FastSpeech2's general structure with a Mel-spectrogram decoder was implemented, and the traditional transformer blocks were replaced with conformer blocks as done in the ESPnet library.

|

||||

|

||||

#### FastSpeech2 Model Architecture

|

||||

|

||||

|

||||

|

||||

#### Conformer Blocks

|

||||

|

||||

|

||||

|

||||

#### Convolution Module

|

||||

|

||||

|

||||

|

||||

## 🤗 Transformers Usage

|

||||

|

||||

@ -37,6 +37,7 @@ We evaluated GLM-4.6 across eight public benchmarks covering agents, reasoning,

|

||||

|

||||

For more eval results, show cases, and technical details, please visit our [technical blog](https://z.ai/blog/glm-4.6).

|

||||

|

||||

|

||||

### GLM-4.5

|

||||

|

||||

The [**GLM-4.5**](https://huggingface.co/papers/2508.06471) series models are foundation models designed for intelligent agents, MoE variants are documented here as Glm4Moe.

|

||||

|

||||

@ -101,7 +101,6 @@ Below is an expected speedup diagram that compares pure inference time between t

|

||||

</div>

|

||||

|

||||

## Using Scaled Dot Product Attention (SDPA)

|

||||

|

||||

PyTorch includes a native scaled dot-product attention (SDPA) operator as part of `torch.nn.functional`. This function

|

||||

encompasses several implementations that can be applied depending on the inputs and the hardware in use. See the

|

||||

[official documentation](https://pytorch.org/docs/stable/generated/torch.nn.functional.scaled_dot_product_attention.html)

|

||||

@ -124,7 +123,6 @@ On a local benchmark (rtx3080ti-16GB, PyTorch 2.2.1, OS Ubuntu 22.04) using `flo

|

||||

following speedups during training and inference.

|

||||

|

||||

### Training

|

||||

|

||||

| Batch size | Seq len | Time per batch (Eager - s) | Time per batch (SDPA - s) | Speedup (%) | Eager peak mem (MB) | SDPA peak mem (MB) | Mem saving (%) |

|

||||

|-----------:|-----------:|---------------------------:|-----------------------------:|------------:|--------------------:|-------------------:|------------------:|

|

||||

| 1 | 128 | 0.024 | 0.019 | 28.945 | 1789.95 | 1789.95 | 0 |

|

||||

@ -144,7 +142,6 @@ following speedups during training and inference.

|

||||

| 4 | 2048 | OOM | 0.731 | / | OOM | 12705.1 | SDPA does not OOM |

|

||||

|

||||

### Inference

|

||||

|

||||

| Batch size | Seq len | Per token latency Eager (ms) | Per token latency SDPA (ms) | Speedup (%) | Mem Eager (MB) | Mem SDPA (MB) | Mem saved (%) |

|

||||

|--------------:|-------------:|--------------------------------:|-------------------------------:|---------------:|------------------:|----------------:|-----------------:|

|

||||

| 1 | 128 | 6.569 | 5.858 | 12.14 | 974.831 | 974.826 | 0 |

|

||||

|

||||

@ -41,7 +41,7 @@ The example below demonstrates how to generate text with [`Pipeline`] or the [`A

|

||||

<hfoptions id="usage">

|

||||

<hfoption id="Pipeline">

|

||||

|

||||

```python

|

||||

```py

|

||||

import torch

|

||||

from transformers import pipeline

|

||||

pipeline = pipeline(task="text-generation",

|

||||

@ -52,7 +52,7 @@ pipeline("人とAIが協調するためには、")

|

||||

</hfoption>

|

||||

<hfoption id="AutoModel">

|

||||

|

||||

```python

|

||||

```py

|

||||

import torch

|

||||

from transformers import AutoModelForCausalLM, AutoTokenizer

|

||||

|

||||

@ -112,7 +112,6 @@ visualizer("<img>What is shown in this image?")

|

||||

</div>

|

||||

|

||||

## Resources

|

||||

|

||||

Refer to the [Training a better GPT model: Learnings from PaLM](https://medium.com/ml-abeja/training-a-better-gpt-2-93b157662ae4) blog post for more details about how ABEJA trained GPT-NeoX-Japanese.

|

||||

|

||||

## GPTNeoXJapaneseConfig

|

||||

|

||||

@ -35,9 +35,9 @@ The abstract from the paper is the following:

|

||||

*<INSERT PAPER ABSTRACT HERE>*

|

||||

|

||||

Tips:

|

||||

|

||||

- **Attention Sinks with Flex Attention**: When using flex attention, attention sinks require special handling. Unlike with standard attention implementations where sinks can be added directly to attention scores, flex attention `score_mod` function operates on individual score elements rather than the full attention matrix. Therefore, attention sinks renormalization have to be applied after the flex attention computations by renormalizing the outputs using the log-sum-exp (LSE) values returned by flex attention.

|

||||

|

||||

|

||||

<INSERT TIPS ABOUT MODEL HERE>

|

||||

|

||||

This model was contributed by [INSERT YOUR HF USERNAME HERE](https://huggingface.co/<INSERT YOUR HF USERNAME HERE>).

|

||||

|

||||

@ -79,8 +79,6 @@ When token_type_ids=None or all zero, it is equivalent to regular causal mask

|

||||

for example:

|

||||

|

||||

>>> x_token = tokenizer("アイウエ")

|

||||

|

||||

```text

|

||||

input_ids: | SOT | SEG | ア | イ | ウ | エ |

|

||||

token_type_ids: | 1 | 0 | 0 | 0 | 0 | 0 |

|

||||

prefix_lm_mask:

|

||||

@ -90,11 +88,8 @@ SEG | 1 1 0 0 0 0 |

|

||||

イ | 1 1 1 1 0 0 |

|

||||

ウ | 1 1 1 1 1 0 |

|

||||

エ | 1 1 1 1 1 1 |

|

||||

```

|

||||

|

||||

>>> x_token = tokenizer("", prefix_text="アイウエ")

|

||||

|

||||

```text

|

||||

input_ids: | SOT | ア | イ | ウ | エ | SEG |

|

||||

token_type_ids: | 1 | 1 | 1 | 1 | 1 | 0 |

|

||||

prefix_lm_mask:

|

||||

@ -104,11 +99,8 @@ SOT | 1 1 1 1 1 0 |

|

||||

ウ | 1 1 1 1 1 0 |

|

||||

エ | 1 1 1 1 1 0 |

|

||||

SEG | 1 1 1 1 1 1 |

|

||||

```

|

||||

|

||||

>>> x_token = tokenizer("ウエ", prefix_text="アイ")

|

||||

|

||||

```text

|

||||

input_ids: | SOT | ア | イ | SEG | ウ | エ |

|

||||

token_type_ids: | 1 | 1 | 1 | 0 | 0 | 0 |

|

||||

prefix_lm_mask:

|

||||

@ -118,7 +110,6 @@ SOT | 1 1 1 0 0 0 |

|

||||

SEG | 1 1 1 1 0 0 |

|

||||

ウ | 1 1 1 1 1 0 |

|

||||

エ | 1 1 1 1 1 1 |

|

||||

```

|

||||

|

||||

### Spout Vector

|

||||

|

||||

|

||||

@ -22,7 +22,6 @@ rendered properly in your Markdown viewer.

|

||||

</div>

|

||||

|

||||

## Overview

|

||||

|

||||

The [Granite Speech](https://huggingface.co/papers/2505.08699) model ([blog post](https://www.ibm.com/new/announcements/ibm-granite-3-3-speech-recognition-refined-reasoning-rag-loras)) is a multimodal language model, consisting of a speech encoder, speech projector, large language model, and LoRA adapter(s). More details regarding each component for the current (Granite 3.2 Speech) model architecture may be found below.

|

||||

|

||||

1. Speech Encoder: A [Conformer](https://huggingface.co/papers/2005.08100) encoder trained with Connectionist Temporal Classification (CTC) on character-level targets on ASR corpora. The encoder uses block-attention and self-conditioned CTC from the middle layer.

|

||||

|

||||

@ -39,14 +39,14 @@ It supports the following languages: English, French, German, Italian, Portugues

|

||||

|

||||

<!-- This section describes the evaluation protocols and provides the results. -->

|

||||

|

||||

### Testing Data

|

||||

#### Testing Data

|

||||

|

||||

<!-- This should link to a Dataset Card if possible. -->

|

||||

|

||||

The model was evaluated on MMLU, TriviaQA, NaturalQuestions, ARC Easy & Challenge, Open Book QA, Common Sense QA,

|

||||

Physical Interaction QA, Social Interaction QA, HellaSwag, WinoGrande, Multilingual Knowledge QA, FLORES 200.

|

||||

|

||||

### Metrics

|

||||

#### Metrics

|

||||

|

||||

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

|

||||

|

||||

|

||||

@ -24,7 +24,9 @@ rendered properly in your Markdown viewer.

|

||||

|

||||

## Overview

|

||||

|

||||

The IDEFICS model was proposed in [OBELICS: An Open Web-Scale Filtered Dataset of Interleaved Image-Text Documents](https://huggingface.co/papers/2306.16527) by Hugo Laurençon, Lucile Saulnier, Léo Tronchon, Stas Bekman, Amanpreet Singh, Anton Lozhkov, Thomas Wang, Siddharth Karamcheti, Alexander M. Rush, Douwe Kiela, Matthieu Cord, Victor Sanh

|

||||

The IDEFICS model was proposed in [OBELICS: An Open Web-Scale Filtered Dataset of Interleaved Image-Text Documents

|

||||

](https://huggingface.co/papers/2306.16527

|

||||

) by Hugo Laurençon, Lucile Saulnier, Léo Tronchon, Stas Bekman, Amanpreet Singh, Anton Lozhkov, Thomas Wang, Siddharth Karamcheti, Alexander M. Rush, Douwe Kiela, Matthieu Cord, Victor Sanh

|

||||

|

||||

The abstract from the paper is the following:

|

||||

|

||||

|

||||

@ -215,16 +215,13 @@ A list of official Hugging Face and community (indicated by 🌎) resources to h

|

||||

- forward

|

||||

|

||||

## Idefics2ImageProcessor

|

||||

|

||||

[[autodoc]] Idefics2ImageProcessor

|

||||

- preprocess

|

||||

|

||||

## Idefics2ImageProcessorFast

|

||||

|

||||

[[autodoc]] Idefics2ImageProcessorFast

|

||||

- preprocess

|

||||

|

||||

## Idefics2Processor

|

||||

|

||||

[[autodoc]] Idefics2Processor

|

||||

- __call__

|

||||

|

||||

@ -77,16 +77,13 @@ This model was contributed by [amyeroberts](https://huggingface.co/amyeroberts)

|

||||

- forward

|

||||

|

||||

## Idefics3ImageProcessor

|

||||

|

||||

[[autodoc]] Idefics3ImageProcessor

|

||||

- preprocess

|

||||

|

||||

## Idefics3ImageProcessorFast

|

||||

|

||||

[[autodoc]] Idefics3ImageProcessorFast

|

||||

- preprocess

|

||||

|

||||

## Idefics3Processor

|

||||

|

||||

[[autodoc]] Idefics3Processor

|

||||

- __call__

|

||||

|

||||

@ -79,7 +79,6 @@ The attributes can be obtained from model config, as `model.config.num_query_tok

|

||||

- forward

|

||||

|

||||

## InstructBlipVideoModel

|

||||

|

||||

[[autodoc]] InstructBlipVideoModel

|

||||

- forward

|

||||

|

||||

|

||||

@ -105,7 +105,6 @@ This example demonstrates how to perform inference on a single image with the In

|

||||

```

|

||||

|

||||

### Text-only generation

|

||||

|

||||

This example shows how to generate text using the InternVL model without providing any image input.

|

||||

|

||||

```python

|

||||

@ -135,7 +134,6 @@ This example shows how to generate text using the InternVL model without providi

|

||||

```

|

||||

|

||||

### Batched image and text inputs

|

||||

|

||||

InternVL models also support batched image and text inputs.

|

||||

|

||||

```python

|

||||

@ -179,7 +177,6 @@ InternVL models also support batched image and text inputs.

|

||||

```

|

||||

|

||||

### Batched multi-image input

|

||||

|

||||

This implementation of the InternVL models supports batched text-images inputs with different number of images for each text.

|

||||

|

||||

```python

|

||||

@ -223,7 +220,6 @@ This implementation of the InternVL models supports batched text-images inputs w

|

||||

```

|

||||

|

||||

### Video input

|

||||

|

||||

InternVL models can also handle video inputs. Here is an example of how to perform inference on a video input using chat templates.

|

||||

|

||||

```python

|

||||

@ -263,7 +259,6 @@ InternVL models can also handle video inputs. Here is an example of how to perfo

|

||||

```

|

||||

|

||||

### Interleaved image and video inputs

|

||||

|

||||

This example showcases how to handle a batch of chat conversations with interleaved image and video inputs using chat template.

|

||||

|

||||

```python

|

||||

|

||||

@ -14,7 +14,6 @@ rendered properly in your Markdown viewer.

|

||||

|

||||

-->

|

||||

*This model was released on 2020-04-30 and added to Hugging Face Transformers on 2023-06-20.*

|

||||

|

||||

# Jukebox

|

||||

|

||||

<div class="flex flex-wrap space-x-1">

|

||||

|

||||

@ -16,7 +16,6 @@ rendered properly in your Markdown viewer.

|

||||

*This model was released on 2025-06-17 and added to Hugging Face Transformers on 2025-06-25.*

|

||||

|

||||

# Kyutai Speech-To-Text

|

||||

|

||||

## Overview

|

||||

|

||||

[Kyutai STT](https://kyutai.org/next/stt) is a speech-to-text model architecture based on the [Mimi codec](https://huggingface.co/docs/transformers/en/model_doc/mimi), which encodes audio into discrete tokens in a streaming fashion, and a [Moshi-like](https://huggingface.co/docs/transformers/en/model_doc/moshi) autoregressive decoder. Kyutai's lab has released two model checkpoints:

|

||||

|

||||

@ -36,7 +36,7 @@ in vision transformers. As a result, we propose LeVIT: a hybrid neural network f

|

||||

We consider different measures of efficiency on different hardware platforms, so as to best reflect a wide range of

|

||||

application scenarios. Our extensive experiments empirically validate our technical choices and show they are suitable

|

||||

to most architectures. Overall, LeViT significantly outperforms existing convnets and vision transformers with respect

|

||||

to the speed/accuracy tradeoff. For example, at 80% ImageNet top-1 accuracy, LeViT is 5 times faster than EfficientNet on CPU.*

|

||||

to the speed/accuracy tradeoff. For example, at 80% ImageNet top-1 accuracy, LeViT is 5 times faster than EfficientNet on CPU. *

|

||||

|

||||

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/levit_architecture.png"

|

||||

alt="drawing" width="600"/>

|

||||

|

||||

@ -12,10 +12,11 @@ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

See the License for the specific language governing permissions and

|

||||

limitations under the License.

|

||||

|

||||

|

||||

⚠️ Note that this file is in Markdown but contain specific syntax for our doc-builder (similar to MDX) that may not be rendered properly in your Markdown viewer.

|

||||

|

||||

-->

|

||||

*This model was released on {release_date} and added to Hugging Face Transformers on 2025-10-07.*

|

||||

|

||||

|

||||

# Lfm2Moe

|

||||

|

||||

|

||||

@ -436,6 +436,11 @@ model = Llama4ForConditionalGeneration.from_pretrained(

|

||||

[[autodoc]] Llama4TextModel

|

||||

- forward

|

||||

|

||||

## Llama4ForCausalLM

|

||||

|

||||

[[autodoc]] Llama4ForCausalLM

|

||||

- forward

|

||||

|

||||

## Llama4VisionModel

|

||||

|

||||

[[autodoc]] Llama4VisionModel

|

||||

|

||||

@ -25,7 +25,8 @@ rendered properly in your Markdown viewer.

|

||||

|

||||

## Overview

|

||||

|

||||

The LLaVa-NeXT-Video model was proposed in [LLaVA-NeXT: A Strong Zero-shot Video Understanding Model](https://llava-vl.github.io/blog/2024-04-30-llava-next-video/) by Yuanhan Zhang, Bo Li, Haotian Liu, Yong Jae Lee, Liangke Gui, Di Fu, Jiashi Feng, Ziwei Liu, Chunyuan Li. LLaVa-NeXT-Video improves upon [LLaVa-NeXT](llava_next) by fine-tuning on a mix if video and image dataset thus increasing the model's performance on videos.

|

||||

The LLaVa-NeXT-Video model was proposed in [LLaVA-NeXT: A Strong Zero-shot Video Understanding Model

|

||||

](https://llava-vl.github.io/blog/2024-04-30-llava-next-video/) by Yuanhan Zhang, Bo Li, Haotian Liu, Yong Jae Lee, Liangke Gui, Di Fu, Jiashi Feng, Ziwei Liu, Chunyuan Li. LLaVa-NeXT-Video improves upon [LLaVa-NeXT](llava_next) by fine-tuning on a mix if video and image dataset thus increasing the model's performance on videos.

|

||||

|

||||

[LLaVA-NeXT](llava_next) surprisingly has strong performance in understanding video content in zero-shot fashion with the AnyRes technique that it uses. The AnyRes technique naturally represents a high-resolution image into multiple images. This technique is naturally generalizable to represent videos because videos can be considered as a set of frames (similar to a set of images in LLaVa-NeXT). The current version of LLaVA-NeXT makes use of AnyRes and trains with supervised fine-tuning (SFT) on top of LLaVA-Next on video data to achieves better video understanding capabilities.The model is a current SOTA among open-source models on [VideoMME bench](https://huggingface.co/papers/2405.21075).

|

||||

|

||||

|

||||

@ -171,7 +171,6 @@ Below is an expected speedup diagram that compares pure inference time between t

|

||||

</div>

|

||||

|

||||

## Using Scaled Dot Product Attention (SDPA)

|

||||

|

||||

PyTorch includes a native scaled dot-product attention (SDPA) operator as part of `torch.nn.functional`. This function

|

||||

encompasses several implementations that can be applied depending on the inputs and the hardware in use. See the

|

||||

[official documentation](https://pytorch.org/docs/stable/generated/torch.nn.functional.scaled_dot_product_attention.html)

|

||||

|

||||

@ -39,7 +39,7 @@ attractive option for long-document NLP tasks.

|

||||

|

||||

The abstract from the paper is the following:

|

||||

|

||||

*The design choices in the Transformer attention mechanism, including weak inductive bias and quadratic computational complexity, have limited its application for modeling long sequences. In this paper, we introduce Mega, a simple, theoretically grounded, single-head gated attention mechanism equipped with (exponential) moving average to incorporate inductive bias of position-aware local dependencies into the position-agnostic attention mechanism. We further propose a variant of Mega that offers linear time and space complexity yet yields only minimal quality loss, by efficiently splitting the whole sequence into multiple chunks with fixed length. Extensive experiments on a wide range of sequence modeling benchmarks, including the Long Range Arena, neural machine translation, auto-regressive language modeling, and image and speech classification, show that Mega achieves significant improvements over other sequence models, including variants of Transformers and recent state space models.*

|

||||

*The design choices in the Transformer attention mechanism, including weak inductive bias and quadratic computational complexity, have limited its application for modeling long sequences. In this paper, we introduce Mega, a simple, theoretically grounded, single-head gated attention mechanism equipped with (exponential) moving average to incorporate inductive bias of position-aware local dependencies into the position-agnostic attention mechanism. We further propose a variant of Mega that offers linear time and space complexity yet yields only minimal quality loss, by efficiently splitting the whole sequence into multiple chunks with fixed length. Extensive experiments on a wide range of sequence modeling benchmarks, including the Long Range Arena, neural machine translation, auto-regressive language modeling, and image and speech classification, show that Mega achieves significant improvements over other sequence models, including variants of Transformers and recent state space models. *

|

||||

|

||||

This model was contributed by [mnaylor](https://huggingface.co/mnaylor).

|

||||

The original code can be found [here](https://github.com/facebookresearch/mega).

|

||||

|

||||

@ -186,6 +186,5 @@ A list of official Hugging Face and community (indicated by 🌎) resources to h

|

||||

- forward

|

||||

|

||||

## MiniMaxForQuestionAnswering

|

||||

|

||||

[[autodoc]] MiniMaxForQuestionAnswering

|

||||

- forward

|

||||

|

||||

@ -223,6 +223,5 @@ A list of official Hugging Face and community (indicated by 🌎) resources to h

|

||||

- forward

|

||||

|

||||

## MixtralForQuestionAnswering

|

||||

|

||||

[[autodoc]] MixtralForQuestionAnswering

|

||||

- forward

|

||||

|

||||

@ -136,6 +136,11 @@ print(processor.decode(output[0], skip_special_tokens=True))

|

||||

|

||||

[[autodoc]] MllamaModel

|

||||

|

||||

## MllamaForCausalLM

|

||||

|

||||

[[autodoc]] MllamaForCausalLM

|

||||

- forward

|

||||

|

||||

## MllamaVisionModel

|

||||

|

||||

[[autodoc]] MllamaVisionModel

|

||||

|

||||

@ -316,7 +316,6 @@ with torch.no_grad():

|

||||

Different LID models are available based on the number of languages they can recognize - [126](https://huggingface.co/facebook/mms-lid-126), [256](https://huggingface.co/facebook/mms-lid-256), [512](https://huggingface.co/facebook/mms-lid-512), [1024](https://huggingface.co/facebook/mms-lid-1024), [2048](https://huggingface.co/facebook/mms-lid-2048), [4017](https://huggingface.co/facebook/mms-lid-4017).

|

||||

|

||||

#### Inference

|

||||

|

||||

First, we install transformers and some other libraries

|

||||

|

||||

```bash

|

||||

|

||||

@ -99,6 +99,7 @@ print(f"The predicted class label is:{predicted_class_label}")

|

||||

|

||||

[[autodoc]] MobileViTConfig

|

||||

|

||||

|

||||

## MobileViTImageProcessor

|

||||

|

||||

[[autodoc]] MobileViTImageProcessor

|

||||

|

||||

@ -64,11 +64,11 @@ Note that each timestamp - i.e each codebook - gets its own set of Linear Layers

|

||||

|

||||

It's the audio encoder from Kyutai, that has recently been integrated to transformers, which is used to "tokenize" audio. It has the same use that [`~EncodecModel`] has in [`~MusicgenModel`].

|

||||

|

||||

## Tips

|

||||

## Tips:

|

||||

|

||||

The original checkpoints can be converted using the conversion script `src/transformers/models/moshi/convert_moshi_transformers.py`

|

||||

|

||||

### How to use the model

|

||||

### How to use the model:

|

||||

|

||||

This implementation has two main aims:

|

||||

|

||||

@ -152,7 +152,7 @@ Once it's done, you can simply forward `text_labels` and `audio_labels` to [`Mos

|

||||

|

||||

A training guide will come soon, but user contributions are welcomed!

|

||||

|

||||

### How does the model forward the inputs / generate

|

||||

### How does the model forward the inputs / generate:

|

||||

|

||||

1. The input streams are embedded and combined into `inputs_embeds`.

|

||||

|

||||

|

||||

@ -50,7 +50,7 @@ MusicGen Melody is compatible with two generation modes: greedy and sampling. In

|

||||

|

||||

Transformers supports both mono (1-channel) and stereo (2-channel) variants of MusicGen Melody. The mono channel versions generate a single set of codebooks. The stereo versions generate 2 sets of codebooks, 1 for each channel (left/right), and each set of codebooks is decoded independently through the audio compression model. The audio streams for each channel are combined to give the final stereo output.

|

||||

|

||||

### Audio Conditional Generation

|

||||

#### Audio Conditional Generation

|

||||

|

||||

The model can generate an audio sample conditioned on a text and an audio prompt through use of the [`MusicgenMelodyProcessor`] to pre-process the inputs.

|

||||

|

||||

|

||||

@ -40,3 +40,7 @@ The original code can be found [here](https://github.com/tomlimi/MYTE).

|

||||

- get_special_tokens_mask

|

||||

- create_token_type_ids_from_sequences

|

||||

- save_vocabulary

|

||||

|

||||

## MyT5Tokenizer

|

||||

|

||||

[[autodoc]] MyT5Tokenizer

|

||||

|

||||