Visual question answering


Transformers acts as the model-definition framework for state-of-the-art machine learning models in text, computer

vision, audio, video, and multimodal models, for both inference and training.

diff --git a/docs/source/en/internal/file_utils.md b/docs/source/en/internal/file_utils.md

index 31fbc5b8811..63db5756a62 100644

--- a/docs/source/en/internal/file_utils.md

+++ b/docs/source/en/internal/file_utils.md

@@ -20,7 +20,6 @@ This page lists all of Transformers general utility functions that are found in

Most of those are only useful if you are studying the general code in the library.

-

## Enums and namedtuples

[[autodoc]] utils.ExplicitEnum

diff --git a/docs/source/en/internal/generation_utils.md b/docs/source/en/internal/generation_utils.md

index d47eba82d8c..87b0111ff05 100644

--- a/docs/source/en/internal/generation_utils.md

+++ b/docs/source/en/internal/generation_utils.md

@@ -65,7 +65,6 @@ values. Here, for instance, it has two keys that are `sequences` and `scores`.

We document here all output types.

-

[[autodoc]] generation.GenerateDecoderOnlyOutput

[[autodoc]] generation.GenerateEncoderDecoderOutput

@@ -74,13 +73,11 @@ We document here all output types.

[[autodoc]] generation.GenerateBeamEncoderDecoderOutput

-

## LogitsProcessor

A [`LogitsProcessor`] can be used to modify the prediction scores of a language model head for

generation.

-

[[autodoc]] AlternatingCodebooksLogitsProcessor

- __call__

@@ -174,8 +171,6 @@ generation.

[[autodoc]] WatermarkLogitsProcessor

- __call__

-

-

## StoppingCriteria

A [`StoppingCriteria`] can be used to change when to stop generation (other than EOS token). Please note that this is exclusively available to our PyTorch implementations.

@@ -300,7 +295,6 @@ A [`Constraint`] can be used to force the generation to include specific tokens

- to_legacy_cache

- from_legacy_cache

-

## Watermark Utils

[[autodoc]] WatermarkingConfig

diff --git a/docs/source/en/internal/import_utils.md b/docs/source/en/internal/import_utils.md

index 77554c85b02..15325819817 100644

--- a/docs/source/en/internal/import_utils.md

+++ b/docs/source/en/internal/import_utils.md

@@ -22,8 +22,8 @@ worked around. We don't want for all users of `transformers` to have to install

we therefore mark those as soft dependencies rather than hard dependencies.

The transformers toolkit is not made to error-out on import of a model that has a specific dependency; instead, an

-object for which you are lacking a dependency will error-out when calling any method on it. As an example, if

-`torchvision` isn't installed, the fast image processors will not be available.

+object for which you are lacking a dependency will error-out when calling any method on it. As an example, if

+`torchvision` isn't installed, the fast image processors will not be available.

This object is still importable:

@@ -55,7 +55,7 @@ All objects under a given filename have an automatic dependency to the tool link

**Tokenizers**: All files starting with `tokenization_` and ending with `_fast` have an automatic `tokenizers` dependency

-**Vision**: All files starting with `image_processing_` have an automatic dependency to the `vision` dependency group;

+**Vision**: All files starting with `image_processing_` have an automatic dependency to the `vision` dependency group;

at the time of writing, this only contains the `pillow` dependency.

**Vision + Torch + Torchvision**: All files starting with `image_processing_` and ending with `_fast` have an automatic

@@ -66,7 +66,7 @@ All of these automatic dependencies are added on top of the explicit dependencie

### Explicit Object Dependencies

We add a method called `requires` that is used to explicitly specify the dependencies of a given object. As an

-example, the `Trainer` class has two hard dependencies: `torch` and `accelerate`. Here is how we specify these

+example, the `Trainer` class has two hard dependencies: `torch` and `accelerate`. Here is how we specify these

required dependencies:

```python

diff --git a/docs/source/en/internal/model_debugging_utils.md b/docs/source/en/internal/model_debugging_utils.md

index cf2c0353fc7..aa5371cd38e 100644

--- a/docs/source/en/internal/model_debugging_utils.md

+++ b/docs/source/en/internal/model_debugging_utils.md

@@ -21,10 +21,8 @@ provides for it.

Most of those are only useful if you are adding new models in the library.

-

## Model addition debuggers

-

### Model addition debugger - context manager for model adders

This context manager is a power user tool intended for model adders. It tracks all forward calls within a model forward

@@ -72,7 +70,6 @@ with model_addition_debugger_context(

```

-

### Reading results

The debugger generates two files from the forward call, both with the same base name, but ending either with

@@ -231,10 +228,8 @@ Once the forward passes of two models have been traced by the debugger, one can

below: we can see slight differences between these two implementations' key projection layer. Inputs are mostly

identical, but not quite. Looking through the file differences makes it easier to pinpoint which layer is wrong.

-

-

### Limitations and scope

This feature will only work for torch-based models. Models relying heavily on external kernel calls may work, but trace will

@@ -253,7 +248,7 @@ layers.

This small util is a power user tool intended for model adders and maintainers. It lists all test methods

existing in `test_modeling_common.py`, inherited by all model tester classes, and scans the repository to measure

-how many tests are being skipped and for which models.

+how many tests are being skipped and for which models.

### Rationale

@@ -268,8 +263,7 @@ This utility:

-

-### Usage

+### Usage

You can run the skipped test analyzer in two ways:

diff --git a/docs/source/en/internal/pipelines_utils.md b/docs/source/en/internal/pipelines_utils.md

index 6ea6de9a61b..23856e5639c 100644

--- a/docs/source/en/internal/pipelines_utils.md

+++ b/docs/source/en/internal/pipelines_utils.md

@@ -20,7 +20,6 @@ This page lists all the utility functions the library provides for pipelines.

Most of those are only useful if you are studying the code of the models in the library.

-

## Argument handling

[[autodoc]] pipelines.ArgumentHandler

diff --git a/docs/source/en/kv_cache.md b/docs/source/en/kv_cache.md

index f0a781cba4f..a7c39a6a8d2 100644

--- a/docs/source/en/kv_cache.md

+++ b/docs/source/en/kv_cache.md

@@ -67,7 +67,7 @@ out = model.generate(**inputs, do_sample=False, max_new_tokens=20, past_key_valu

## Fixed-size cache

-The default [`DynamicCache`] prevents you from taking advantage of most just-in-time (JIT) optimizations because the cache size isn't fixed. JIT optimizations enable you to minimize latency at the expense of memory usage. All of the following cache types are compatible with JIT optimizations like [torch.compile](./llm_optims#static-kv-cache-and-torchcompile) to accelerate generation.

+The default [`DynamicCache`] prevents you from taking advantage of most just-in-time (JIT) optimizations because the cache size isn't fixed. JIT optimizations enable you to minimize latency at the expense of memory usage. All of the following cache types are compatible with JIT optimizations like [torch.compile](./llm_optims#static-kv-cache-and-torchcompile) to accelerate generation.

A fixed-size cache ([`StaticCache`]) pre-allocates a specific maximum cache size for the kv pairs. You can generate up to the maximum cache size without needing to modify it. However, a fixed (usually large) size for the key/value states means that, while generating, many tokens are masked because they should not take part in the attention. This trick makes it easy to `compile` the decoding stage, but it wastes computation on masked tokens in the attention computation. As with all things, it's a trade-off: it works very well if you generate several sequences of roughly the same length, but it may be sub-optimal if you have, for example, one very long sequence followed by only short sequences (because the fixed cache size would be large, much of it would be wasted on the short sequences). Make sure you understand the impact if you use it!
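The trade-off described above can be made concrete with a little arithmetic. The sketch below is illustrative only: `wasted_cache_fraction` is a hypothetical helper (not part of Transformers), and the sequence lengths and cache size are made-up numbers. It estimates what share of the pre-allocated cache slots stay masked for a given set of sequences.

```python
def wasted_cache_fraction(sequence_lengths, max_cache_len):
    """Return the fraction of pre-allocated cache slots that stay masked.

    Hypothetical helper for illustration; every sequence is assumed to get
    its own cache row of `max_cache_len` slots.
    """
    for length in sequence_lengths:
        if length > max_cache_len:
            raise ValueError(f"sequence of length {length} exceeds the cache size {max_cache_len}")
    used_slots = sum(sequence_lengths)
    allocated_slots = max_cache_len * len(sequence_lengths)
    return 1 - used_slots / allocated_slots


# Sequences of similar length: most of the fixed cache is actually used.
similar = wasted_cache_fraction([900, 950, 1000], max_cache_len=1024)

# One long sequence and two short ones: the cache must still fit the long one.
skewed = wasted_cache_fraction([1000, 50, 60], max_cache_len=1024)

print(f"similar lengths: {similar:.1%} of the cache is masked")
print(f"skewed lengths:  {skewed:.1%} of the cache is masked")
```

With these made-up numbers, the skewed batch leaves far more of the cache masked than the batch of similar lengths, which is the situation the paragraph warns about.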

diff --git a/docs/source/en/llm_tutorial.md b/docs/source/en/llm_tutorial.md

index 0f4f91d30a6..0cbbbc6ac04 100644

--- a/docs/source/en/llm_tutorial.md

+++ b/docs/source/en/llm_tutorial.md

@@ -24,6 +24,7 @@ In Transformers, the [`~GenerationMixin.generate`] API handles text generation,

> [!TIP]

> You can also chat with a model directly from the command line. ([reference](./conversations.md#transformers))

+>

> ```shell

> transformers chat Qwen/Qwen2.5-0.5B-Instruct

> ```

@@ -35,6 +36,7 @@ Before you begin, it's helpful to install [bitsandbytes](https://hf.co/docs/bits

```bash

!pip install -U transformers bitsandbytes

```

+

Bitsandbytes supports multiple backends in addition to CUDA-based GPUs. Refer to the multi-backend installation [guide](https://huggingface.co/docs/bitsandbytes/main/en/installation#multi-backend) to learn more.

Load an LLM with [`~PreTrainedModel.from_pretrained`] and add the following two parameters to reduce the memory requirements.

@@ -154,7 +156,6 @@ print(tokenizer.batch_decode(outputs, skip_special_tokens=True))

| `repetition_penalty` | `float` | Set it to `>1.0` if you're seeing the model repeat itself often. Larger values apply a larger penalty. |

| `eos_token_id` | `list[int]` | The token(s) that will cause generation to stop. The default value is usually good, but you can specify a different token. |

-

## Pitfalls

The section below covers some common issues you may encounter during text generation and how to solve them.

diff --git a/docs/source/en/llm_tutorial_optimization.md b/docs/source/en/llm_tutorial_optimization.md

index 63d9308a84f..04a61dd82cb 100644

--- a/docs/source/en/llm_tutorial_optimization.md

+++ b/docs/source/en/llm_tutorial_optimization.md

@@ -66,6 +66,7 @@ If you have access to an 8 x 80GB A100 node, you could load BLOOM as follows

```bash

!pip install transformers accelerate bitsandbytes optimum

```

+

```python

from transformers import AutoModelForCausalLM

@@ -98,6 +99,7 @@ result

```

**Output**:

+

```

Here is a Python function that transforms bytes to Giga bytes:\n\n```python\ndef bytes_to_giga_bytes(bytes):\n return bytes / 1024 / 1024 / 1024\n```\n\nThis function takes a single

```

@@ -116,6 +118,7 @@ bytes_to_giga_bytes(torch.cuda.max_memory_allocated())

```

**Output**:

+

```bash

29.0260648727417

```
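For reference, the `bytes_to_giga_bytes` helper whose result is printed above can be sketched as follows. The body mirrors the definition that appears in the generated text earlier in this file; the byte count passed in is an arbitrary illustrative value, not a measurement.

```python
def bytes_to_giga_bytes(num_bytes):
    """Convert a byte count to gibibytes (divide by 1024 three times)."""
    return num_bytes / 1024 / 1024 / 1024


# Illustrative input: exactly 16 GiB expressed in bytes.
example_bytes = 16 * 1024**3
print(bytes_to_giga_bytes(example_bytes))
```

In the tutorial, the argument is `torch.cuda.max_memory_allocated()`, which reports the peak number of bytes allocated on the GPU.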

@@ -127,7 +130,6 @@ Note that if we had tried to run the model in full float32 precision, a whopping

If you are unsure in which format the model weights are stored on the Hub, you can always look into the checkpoint's config under `"dtype"`, *e.g.* [here](https://huggingface.co/meta-llama/Llama-2-7b-hf/blob/6fdf2e60f86ff2481f2241aaee459f85b5b0bbb9/config.json#L21). It is recommended to set the model to the same precision type as written in the config when loading with `from_pretrained(..., dtype=...)`, except when the original type is float32, in which case one can use either `float16` or `bfloat16` for inference.

-

Let's define a `flush(...)` function to free all allocated memory so that we can accurately measure the peak allocated GPU memory.

```python

@@ -148,6 +150,7 @@ Let's call it now for the next experiment.

```python

flush()

```

+

From the Accelerate library, you can also use a device-agnostic utility method called [release_memory](https://github.com/huggingface/accelerate/blob/29be4788629b772a3b722076e433b5b3b5c85da3/src/accelerate/utils/memory.py#L63), which takes various hardware backends like XPU, MLU, NPU, MPS, and more into account.

```python

@@ -204,6 +207,7 @@ result

```

**Output**:

+

```

Here is a Python function that transforms bytes to Giga bytes:\n\n```python\ndef bytes_to_giga_bytes(bytes):\n return bytes / 1024 / 1024 / 1024\n```\n\nThis function takes a single

```

@@ -215,6 +219,7 @@ bytes_to_giga_bytes(torch.cuda.max_memory_allocated())

```

**Output**:

+

```

15.219234466552734

```

@@ -222,8 +227,8 @@ bytes_to_giga_bytes(torch.cuda.max_memory_allocated())

Significantly less! We're down to just a bit over 15 GBs and could therefore run this model on consumer GPUs like the 4090.

We're seeing a very nice gain in memory efficiency and more or less no degradation to the model's output. However, we can also notice a slight slow-down during inference.

-

We delete the models and flush the memory again.

+

```python

del model

del pipe

@@ -245,6 +250,7 @@ result

```

**Output**:

+

```

Here is a Python function that transforms bytes to Giga bytes:\n\n```\ndef bytes_to_gigabytes(bytes):\n return bytes / 1024 / 1024 / 1024\n```\n\nThis function takes a single argument

```

@@ -256,6 +262,7 @@ bytes_to_giga_bytes(torch.cuda.max_memory_allocated())

```

**Output**:

+

```

9.543574333190918

```

@@ -270,6 +277,7 @@ Also note that inference here was again a bit slower compared to 8-bit quantizat

del model

del pipe

```

+

```python

flush()

```

@@ -384,6 +392,7 @@ def alternating(list1, list2):

-----

"""

```

+

For demonstration purposes, we repeat the system prompt ten times so that the input length is long enough to observe Flash Attention's memory savings.

We append the original text prompt `"Question: Please write a function in Python that transforms bytes to Giga bytes.\n\nAnswer: Here"`

@@ -413,6 +422,7 @@ result

```

**Output**:

+

```

Generated in 10.96854019165039 seconds.

Sure. Here is a function that does that.\n\ndef bytes_to_giga(bytes):\n return bytes / 1024 / 1024 / 1024\n\nAnswer: Sure. Here is a function that does that.\n\ndef

@@ -429,6 +439,7 @@ bytes_to_giga_bytes(torch.cuda.max_memory_allocated())

```

**Output**:

+

```bash

37.668193340301514

```

@@ -460,6 +471,7 @@ result

```

**Output**:

+

```

Generated in 3.0211617946624756 seconds.

Sure. Here is a function that does that.\n\ndef bytes_to_giga(bytes):\n return bytes / 1024 / 1024 / 1024\n\nAnswer: Sure. Here is a function that does that.\n\ndef

@@ -474,6 +486,7 @@ bytes_to_giga_bytes(torch.cuda.max_memory_allocated())

```

**Output**:

+

```

32.617331981658936

```

@@ -604,6 +617,7 @@ generated_text

```

**Output**:

+

```

shape of input_ids torch.Size([1, 21])

shape of input_ids torch.Size([1, 22])

@@ -641,6 +655,7 @@ generated_text

```

**Output**:

+

```

shape of input_ids torch.Size([1, 1])

length of key-value cache 20

@@ -712,6 +727,7 @@ tokenizer.batch_decode(generation_output.sequences)[0][len(prompt):]

```

**Output**:

+

```

is a modified version of the function that returns Mega bytes instead.

@@ -733,6 +749,7 @@ config = model.config

```

**Output**:

+

```

7864320000

```
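The number above is consistent with counting one key vector and one value vector per token, per layer, and per attention head. A minimal sketch of that arithmetic, assuming values that are not stated in this section (a 16,000-token sequence, 40 layers, 48 attention heads, and a head dimension of 128); `kv_cache_num_floats` is a hypothetical helper name:

```python
def kv_cache_num_floats(seq_len, num_layers, num_heads, head_dim):
    """Count the floats held in the key-value cache for one sequence.

    The factor 2 accounts for storing both keys and values.
    """
    return 2 * seq_len * num_layers * num_heads * head_dim


# Assumed configuration values, chosen for illustration.
num_floats = kv_cache_num_floats(seq_len=16_000, num_layers=40, num_heads=48, head_dim=128)
print(num_floats)

# In float16 each value takes 2 bytes, so the memory footprint in GiB is:
print(num_floats * 2 / 1024**3)
```

Under these assumptions the count matches the printed output, which shows why the cache grows linearly with sequence length and with each architectural dimension.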

@@ -773,7 +790,6 @@ The most notable application of GQA is [Llama-v2](https://huggingface.co/meta-ll

> As a conclusion, it is strongly recommended to make use of either GQA or MQA if the LLM is deployed with auto-regressive decoding and is required to handle large input sequences as is the case for example for chat.

-

## Conclusion

The research community is constantly coming up with new, nifty ways to speed up inference time for ever-larger LLMs. As an example, one such promising research direction is [speculative decoding](https://huggingface.co/papers/2211.17192) where "easy tokens" are generated by smaller, faster language models and only "hard tokens" are generated by the LLM itself. Going into more detail is out of the scope of this notebook, but you can read more about it in this [nice blog post](https://huggingface.co/blog/assisted-generation).

diff --git a/docs/source/en/main_classes/callback.md b/docs/source/en/main_classes/callback.md

index b29c9e7264e..bc1413a9474 100644

--- a/docs/source/en/main_classes/callback.md

+++ b/docs/source/en/main_classes/callback.md

@@ -54,7 +54,6 @@ The main class that implements callbacks is [`TrainerCallback`]. It gets the

Trainer's internal state via [`TrainerState`], and can take some actions on the training loop via

[`TrainerControl`].

-

## Available Callbacks

Here is the list of the available [`TrainerCallback`] in the library:

diff --git a/docs/source/en/main_classes/configuration.md b/docs/source/en/main_classes/configuration.md

index 0cfef06d3ce..933621f6a14 100644

--- a/docs/source/en/main_classes/configuration.md

+++ b/docs/source/en/main_classes/configuration.md

@@ -24,7 +24,6 @@ Each derived config class implements model specific attributes. Common attribute

`hidden_size`, `num_attention_heads`, and `num_hidden_layers`. Text models further implement:

`vocab_size`.

-

## PretrainedConfig

[[autodoc]] PretrainedConfig

diff --git a/docs/source/en/main_classes/data_collator.md b/docs/source/en/main_classes/data_collator.md

index 2941338375b..33d156ec93f 100644

--- a/docs/source/en/main_classes/data_collator.md

+++ b/docs/source/en/main_classes/data_collator.md

@@ -25,7 +25,6 @@ on the formed batch.

Examples of use can be found in the [example scripts](../examples) or [example notebooks](../notebooks).

-

## Default data collator

[[autodoc]] data.data_collator.default_data_collator

diff --git a/docs/source/en/main_classes/deepspeed.md b/docs/source/en/main_classes/deepspeed.md

index 0b9e28656c0..b04949229da 100644

--- a/docs/source/en/main_classes/deepspeed.md

+++ b/docs/source/en/main_classes/deepspeed.md

@@ -16,7 +16,7 @@ rendered properly in your Markdown viewer.

# DeepSpeed

-[DeepSpeed](https://github.com/deepspeedai/DeepSpeed), powered by Zero Redundancy Optimizer (ZeRO), is an optimization library for training and fitting very large models onto a GPU. It is available in several ZeRO stages, where each stage progressively saves more GPU memory by partitioning the optimizer state, gradients, parameters, and enabling offloading to a CPU or NVMe. DeepSpeed is integrated with the [`Trainer`] class and most of the setup is automatically taken care of for you.

+[DeepSpeed](https://github.com/deepspeedai/DeepSpeed), powered by Zero Redundancy Optimizer (ZeRO), is an optimization library for training and fitting very large models onto a GPU. It is available in several ZeRO stages, where each stage progressively saves more GPU memory by partitioning the optimizer state, gradients, parameters, and enabling offloading to a CPU or NVMe. DeepSpeed is integrated with the [`Trainer`] class and most of the setup is automatically taken care of for you.

However, if you want to use DeepSpeed without the [`Trainer`], Transformers provides a [`HfDeepSpeedConfig`] class.

diff --git a/docs/source/en/main_classes/executorch.md b/docs/source/en/main_classes/executorch.md

index 3178085c913..3406309aa32 100644

--- a/docs/source/en/main_classes/executorch.md

+++ b/docs/source/en/main_classes/executorch.md

@@ -15,14 +15,12 @@ rendered properly in your Markdown viewer.

-->

-

# ExecuTorch

[`ExecuTorch`](https://github.com/pytorch/executorch) is an end-to-end solution for enabling on-device inference capabilities across mobile and edge devices including wearables, embedded devices and microcontrollers. It is part of the PyTorch ecosystem and supports the deployment of PyTorch models with a focus on portability, productivity, and performance.

ExecuTorch introduces well defined entry points to perform model, device, and/or use-case specific optimizations such as backend delegation, user-defined compiler transformations, memory planning, and more. The first step in preparing a PyTorch model for execution on an edge device using ExecuTorch is to export the model. This is achieved through the use of a PyTorch API called [`torch.export`](https://pytorch.org/docs/stable/export.html).

-

## ExecuTorch Integration

An integration point is being developed to ensure that 🤗 Transformers can be exported using `torch.export`. The goal of this integration is not only to enable export but also to ensure that the exported artifact can be further lowered and optimized to run efficiently in `ExecuTorch`, particularly for mobile and edge use cases.

diff --git a/docs/source/en/main_classes/feature_extractor.md b/docs/source/en/main_classes/feature_extractor.md

index fd451a35481..294ecad6309 100644

--- a/docs/source/en/main_classes/feature_extractor.md

+++ b/docs/source/en/main_classes/feature_extractor.md

@@ -18,7 +18,6 @@ rendered properly in your Markdown viewer.

A feature extractor is in charge of preparing input features for audio or vision models. This includes feature extraction from sequences, e.g., pre-processing audio files to generate Log-Mel Spectrogram features, feature extraction from images, e.g., cropping image files, but also padding, normalization, and conversion to NumPy and PyTorch tensors.

-

## FeatureExtractionMixin

[[autodoc]] feature_extraction_utils.FeatureExtractionMixin

diff --git a/docs/source/en/main_classes/image_processor.md b/docs/source/en/main_classes/image_processor.md

index 7dc9de60571..61be0306630 100644

--- a/docs/source/en/main_classes/image_processor.md

+++ b/docs/source/en/main_classes/image_processor.md

@@ -26,6 +26,7 @@ from transformers import AutoImageProcessor

processor = AutoImageProcessor.from_pretrained("facebook/detr-resnet-50", use_fast=True)

```

+

Note that `use_fast` will be set to `True` by default in a future release.

When using a fast image processor, you can also set the `device` argument to specify the device on which the processing should be done. By default, the processing is done on the same device as the inputs if the inputs are tensors, or on the CPU otherwise.

@@ -57,7 +58,6 @@ Here are some speed comparisons between the base and fast image processors for t

These benchmarks were run on an [AWS EC2 g5.2xlarge instance](https://aws.amazon.com/ec2/instance-types/g5/), utilizing an NVIDIA A10G Tensor Core GPU.

-

## ImageProcessingMixin

[[autodoc]] image_processing_utils.ImageProcessingMixin

@@ -72,7 +72,6 @@ These benchmarks were run on an [AWS EC2 g5.2xlarge instance](https://aws.amazon

[[autodoc]] image_processing_utils.BaseImageProcessor

-

## BaseImageProcessorFast

[[autodoc]] image_processing_utils_fast.BaseImageProcessorFast

diff --git a/docs/source/en/main_classes/logging.md b/docs/source/en/main_classes/logging.md

index 5cbdf9ae27e..34da2ac9d1b 100644

--- a/docs/source/en/main_classes/logging.md

+++ b/docs/source/en/main_classes/logging.md

@@ -55,7 +55,6 @@ logger.info("INFO")

logger.warning("WARN")

```

-

All the methods of this logging module are documented below, the main ones are

[`logging.get_verbosity`] to get the current level of verbosity in the logger and

[`logging.set_verbosity`] to set the verbosity to the level of your choice. In order (from the least

diff --git a/docs/source/en/main_classes/model.md b/docs/source/en/main_classes/model.md

index d7768a905ce..e3e77a8e2e1 100644

--- a/docs/source/en/main_classes/model.md

+++ b/docs/source/en/main_classes/model.md

@@ -26,7 +26,6 @@ file or directory, or from a pretrained model configuration provided by the libr

The other methods that are common to each model are defined in [`~modeling_utils.ModuleUtilsMixin`] and [`~generation.GenerationMixin`].

-

## PreTrainedModel

[[autodoc]] PreTrainedModel

diff --git a/docs/source/en/main_classes/onnx.md b/docs/source/en/main_classes/onnx.md

index 81d31c97e88..5f8869948d2 100644

--- a/docs/source/en/main_classes/onnx.md

+++ b/docs/source/en/main_classes/onnx.md

@@ -51,4 +51,3 @@ to export models for different types of topologies or tasks.

### FeaturesManager

[[autodoc]] onnx.features.FeaturesManager

-

diff --git a/docs/source/en/main_classes/optimizer_schedules.md b/docs/source/en/main_classes/optimizer_schedules.md

index 84d9ca7b907..3bab249ab4e 100644

--- a/docs/source/en/main_classes/optimizer_schedules.md

+++ b/docs/source/en/main_classes/optimizer_schedules.md

@@ -22,7 +22,6 @@ The `.optimization` module provides:

- several schedules in the form of schedule objects that inherit from `_LRSchedule`:

- a gradient accumulation class to accumulate the gradients of multiple batches

-

## AdaFactor

[[autodoc]] Adafactor

diff --git a/docs/source/en/main_classes/output.md b/docs/source/en/main_classes/output.md

index 295f99e21d1..8a9ae879fb1 100644

--- a/docs/source/en/main_classes/output.md

+++ b/docs/source/en/main_classes/output.md

@@ -47,7 +47,6 @@ However, this is not always the case. Some models apply normalization or subsequ

-

You can access each attribute as you would usually do, and if that attribute has not been returned by the model, you

will get `None`. Here for instance `outputs.loss` is the loss computed by the model, and `outputs.attentions` is

`None`.

diff --git a/docs/source/en/main_classes/pipelines.md b/docs/source/en/main_classes/pipelines.md

index 0e4cf55995b..31139ddf429 100644

--- a/docs/source/en/main_classes/pipelines.md

+++ b/docs/source/en/main_classes/pipelines.md

@@ -81,7 +81,6 @@ for out in tqdm(pipe(KeyDataset(dataset, "file"))):

For ease of use, a generator is also possible:

-

```python

from transformers import pipeline

@@ -196,7 +195,6 @@ This is a occasional very long sentence compared to the other. In that case, the

tokens long, so the whole batch will be [64, 400] instead of [64, 4], leading to the high slowdown. Even worse, on

bigger batches, the program simply crashes.

-

```

------------------------------

Streaming no batching

@@ -245,7 +243,6 @@ multiple forward pass of a model. Under normal circumstances, this would yield i

In order to circumvent this issue, both of these pipelines are a bit specific, they are `ChunkPipeline` instead of

regular `Pipeline`. In short:

-

```python

preprocessed = pipe.preprocess(inputs)

model_outputs = pipe.forward(preprocessed)

@@ -254,7 +251,6 @@ outputs = pipe.postprocess(model_outputs)

Now becomes:

-

```python

all_model_outputs = []

for preprocessed in pipe.preprocess(inputs):

@@ -282,7 +278,6 @@ If you want to override a specific pipeline.

Don't hesitate to create an issue for your task at hand, the goal of the pipeline is to be easy to use and support most

cases, so `transformers` could maybe support your use case.

-

If you simply want to try it, you can:

- Subclass your pipeline of choice

@@ -302,7 +297,6 @@ my_pipeline = pipeline(model="xxxx", pipeline_class=MyPipeline)

That should enable you to do all the custom code you want.

-

## Implementing a pipeline

[Implementing a new pipeline](../add_new_pipeline)

@@ -329,7 +323,6 @@ Pipelines available for audio tasks include the following.

- __call__

- all

-

### ZeroShotAudioClassificationPipeline

[[autodoc]] ZeroShotAudioClassificationPipeline

diff --git a/docs/source/en/main_classes/processors.md b/docs/source/en/main_classes/processors.md

index 2c2e0cd31b7..8863a632628 100644

--- a/docs/source/en/main_classes/processors.md

+++ b/docs/source/en/main_classes/processors.md

@@ -71,7 +71,6 @@ Additionally, the following method can be used to load values from a data file a

[[autodoc]] data.processors.glue.glue_convert_examples_to_features

-

## XNLI

[The Cross-Lingual NLI Corpus (XNLI)](https://www.nyu.edu/projects/bowman/xnli/) is a benchmark that evaluates the

@@ -88,7 +87,6 @@ Please note that since the gold labels are available on the test set, evaluation

An example using these processors is given in the [run_xnli.py](https://github.com/huggingface/transformers/tree/main/examples/pytorch/text-classification/run_xnli.py) script.

-

## SQuAD

[The Stanford Question Answering Dataset (SQuAD)](https://rajpurkar.github.io/SQuAD-explorer//) is a benchmark that

@@ -115,11 +113,9 @@ Additionally, the following method can be used to convert SQuAD examples into

[[autodoc]] data.processors.squad.squad_convert_examples_to_features

-

These processors as well as the aforementioned method can be used with files containing the data as well as with the

*tensorflow_datasets* package. Examples are given below.

-

### Example usage

Here is an example using the processors as well as the conversion method using data files:

diff --git a/docs/source/en/main_classes/tokenizer.md b/docs/source/en/main_classes/tokenizer.md

index 83d2ae5df6a..52c9751226d 100644

--- a/docs/source/en/main_classes/tokenizer.md

+++ b/docs/source/en/main_classes/tokenizer.md

@@ -22,7 +22,7 @@ Rust library [🤗 Tokenizers](https://github.com/huggingface/tokenizers). The "

1. a significant speed-up in particular when doing batched tokenization and

2. additional methods to map between the original string (character and words) and the token space (e.g. getting the

- index of the token comprising a given character or the span of characters corresponding to a given token).

+ index of the token comprising a given character or the span of characters corresponding to a given token).

The base classes [`PreTrainedTokenizer`] and [`PreTrainedTokenizerFast`]

implement the common methods for encoding string inputs in model inputs (see below) and instantiating/saving python and

@@ -50,12 +50,11 @@ several advanced alignment methods which can be used to map between the original

token space (e.g., getting the index of the token comprising a given character or the span of characters corresponding

to a given token).

-

# Multimodal Tokenizer

Apart from that each tokenizer can be a "multimodal" tokenizer which means that the tokenizer will hold all relevant special tokens

as part of tokenizer attributes for easier access. For example, if the tokenizer is loaded from a vision-language model like LLaVA, you will

-be able to access `tokenizer.image_token_id` to obtain the special image token used as a placeholder.

+be able to access `tokenizer.image_token_id` to obtain the special image token used as a placeholder.

To enable extra special tokens for any type of tokenizer, you have to add the following lines and save the tokenizer. Extra special tokens do not

have to be modality related and can be anything that the model often needs access to. In the code below, the tokenizer at `output_dir` will have direct access

diff --git a/docs/source/en/main_classes/video_processor.md b/docs/source/en/main_classes/video_processor.md

index ee69030ab1a..29d29d0cb60 100644

--- a/docs/source/en/main_classes/video_processor.md

+++ b/docs/source/en/main_classes/video_processor.md

@@ -22,7 +22,6 @@ The video processor extends the functionality of image processors by allowing Vi

When adding a new VLM or updating an existing one to enable distinct video preprocessing, saving and reloading the processor configuration will store the video related arguments in a dedicated file named `video_preprocessing_config.json`. Don't worry if you haven't updated your VLM, the processor will try to load video related configurations from a file named `preprocessing_config.json`.

-

### Usage Example

Here's an example of how to load a video processor with [`llava-hf/llava-onevision-qwen2-0.5b-ov-hf`](https://huggingface.co/llava-hf/llava-onevision-qwen2-0.5b-ov-hf) model:

@@ -59,7 +58,6 @@ The video processor can also sample video frames using the technique best suited

-

```python

from transformers import AutoVideoProcessor

@@ -92,4 +90,3 @@ print(processed_video_inputs.pixel_values_videos.shape)

## BaseVideoProcessor

[[autodoc]] video_processing_utils.BaseVideoProcessor

-

diff --git a/docs/source/en/model_doc/aimv2.md b/docs/source/en/model_doc/aimv2.md

index 9d0abbaaf36..acf9c4de12f 100644

--- a/docs/source/en/model_doc/aimv2.md

+++ b/docs/source/en/model_doc/aimv2.md

@@ -25,7 +25,6 @@ The abstract from the paper is the following:

*We introduce a novel method for pre-training of large-scale vision encoders. Building on recent advancements in autoregressive pre-training of vision models, we extend this framework to a multimodal setting, i.e., images and text. In this paper, we present AIMV2, a family of generalist vision encoders characterized by a straightforward pre-training process, scalability, and remarkable performance across a range of downstream tasks. This is achieved by pairing the vision encoder with a multimodal decoder that autoregressively generates raw image patches and text tokens. Our encoders excel not only in multimodal evaluations but also in vision benchmarks such as localization, grounding, and classification. Notably, our AIMV2-3B encoder achieves 89.5% accuracy on ImageNet-1k with a frozen trunk. Furthermore, AIMV2 consistently outperforms state-of-the-art contrastive models (e.g., CLIP, SigLIP) in multimodal image understanding across diverse settings.*

-

This model was contributed by [Yaswanth Gali](https://huggingface.co/yaswanthgali).

The original code can be found [here](https://github.com/apple/ml-aim).

diff --git a/docs/source/en/model_doc/aria.md b/docs/source/en/model_doc/aria.md

index e5f4afa7b7a..ddd0815aaa5 100644

--- a/docs/source/en/model_doc/aria.md

+++ b/docs/source/en/model_doc/aria.md

@@ -98,7 +98,7 @@ print(response)

Quantization reduces the memory burden of large models by representing the weights in a lower precision. Refer to the [Quantization](../quantization/overview) overview for more available quantization backends.

-

+

The example below uses [torchao](../quantization/torchao) to only quantize the weights to int4 and the [rhymes-ai/Aria-sequential_mlp](https://huggingface.co/rhymes-ai/Aria-sequential_mlp) checkpoint. This checkpoint replaces grouped GEMM with `torch.nn.Linear` layers for easier quantization.

```py

@@ -142,7 +142,6 @@ response = processor.decode(output_ids, skip_special_tokens=True)

print(response)

```

-

## AriaImageProcessor

[[autodoc]] AriaImageProcessor

diff --git a/docs/source/en/model_doc/audio-spectrogram-transformer.md b/docs/source/en/model_doc/audio-spectrogram-transformer.md

index 40115810467..092bf3b26f3 100644

--- a/docs/source/en/model_doc/audio-spectrogram-transformer.md

+++ b/docs/source/en/model_doc/audio-spectrogram-transformer.md

@@ -52,13 +52,13 @@ the authors compute the stats for a downstream dataset.

### Using Scaled Dot Product Attention (SDPA)

-PyTorch includes a native scaled dot-product attention (SDPA) operator as part of `torch.nn.functional`. This function

-encompasses several implementations that can be applied depending on the inputs and the hardware in use. See the

-[official documentation](https://pytorch.org/docs/stable/generated/torch.nn.functional.scaled_dot_product_attention.html)

+PyTorch includes a native scaled dot-product attention (SDPA) operator as part of `torch.nn.functional`. This function

+encompasses several implementations that can be applied depending on the inputs and the hardware in use. See the

+[official documentation](https://pytorch.org/docs/stable/generated/torch.nn.functional.scaled_dot_product_attention.html)

or the [GPU Inference](https://huggingface.co/docs/transformers/main/en/perf_infer_gpu_one#pytorch-scaled-dot-product-attention)

page for more information.

-SDPA is used by default for `torch>=2.1.1` when an implementation is available, but you may also set

+SDPA is used by default for `torch>=2.1.1` when an implementation is available, but you may also set

`attn_implementation="sdpa"` in `from_pretrained()` to explicitly request SDPA to be used.

```

diff --git a/docs/source/en/model_doc/auto.md b/docs/source/en/model_doc/auto.md

index 2f8cbc2009b..c1db5e2541a 100644

--- a/docs/source/en/model_doc/auto.md

+++ b/docs/source/en/model_doc/auto.md

@@ -23,7 +23,6 @@ automatically retrieve the relevant model given the name/path to the pretrained

Instantiating one of [`AutoConfig`], [`AutoModel`], and

[`AutoTokenizer`] will directly create a class of the relevant architecture. For instance

-

```python

model = AutoModel.from_pretrained("google-bert/bert-base-cased")

```

diff --git a/docs/source/en/model_doc/aya_vision.md b/docs/source/en/model_doc/aya_vision.md

index 1f02b30344a..d0822173e89 100644

--- a/docs/source/en/model_doc/aya_vision.md

+++ b/docs/source/en/model_doc/aya_vision.md

@@ -29,7 +29,7 @@ You can find all the original Aya Vision checkpoints under the [Aya Vision](http

> [!TIP]

> This model was contributed by [saurabhdash](https://huggingface.co/saurabhdash) and [yonigozlan](https://huggingface.co/yonigozlan).

->

+>

> Click on the Aya Vision models in the right sidebar for more examples of how to apply Aya Vision to different image-to-text tasks.

The example below demonstrates how to generate text based on an image with [`Pipeline`] or the [`AutoModel`] class.

diff --git a/docs/source/en/model_doc/bark.md b/docs/source/en/model_doc/bark.md

index a5787ab234e..6024b0e83ed 100644

--- a/docs/source/en/model_doc/bark.md

+++ b/docs/source/en/model_doc/bark.md

@@ -76,7 +76,7 @@ Note that 🤗 Optimum must be installed before using this feature. [Here's how

Flash Attention 2 is an even faster, optimized version of the previous optimization.

-##### Installation

+##### Installation

First, check whether your hardware is compatible with Flash Attention 2. The latest list of compatible hardware can be found in the [official documentation](https://github.com/Dao-AILab/flash-attention#installation-and-features). If your hardware is not compatible with Flash Attention 2, you can still benefit from attention kernel optimisations through Better Transformer support covered [above](https://huggingface.co/docs/transformers/main/en/model_doc/bark#using-better-transformer).

@@ -86,7 +86,6 @@ Next, [install](https://github.com/Dao-AILab/flash-attention#installation-and-fe

pip install -U flash-attn --no-build-isolation

```

-

##### Usage

To load a model using Flash Attention 2, we can pass the `attn_implementation="flash_attention_2"` flag to [`.from_pretrained`](https://huggingface.co/docs/transformers/main/en/main_classes/model#transformers.PreTrainedModel.from_pretrained). We'll also load the model in half-precision (e.g. `torch.float16`), since it results in almost no degradation to audio quality but significantly lower memory usage and faster inference:

@@ -97,7 +96,6 @@ model = BarkModel.from_pretrained("suno/bark-small", dtype=torch.float16, attn_i

##### Performance comparison

-

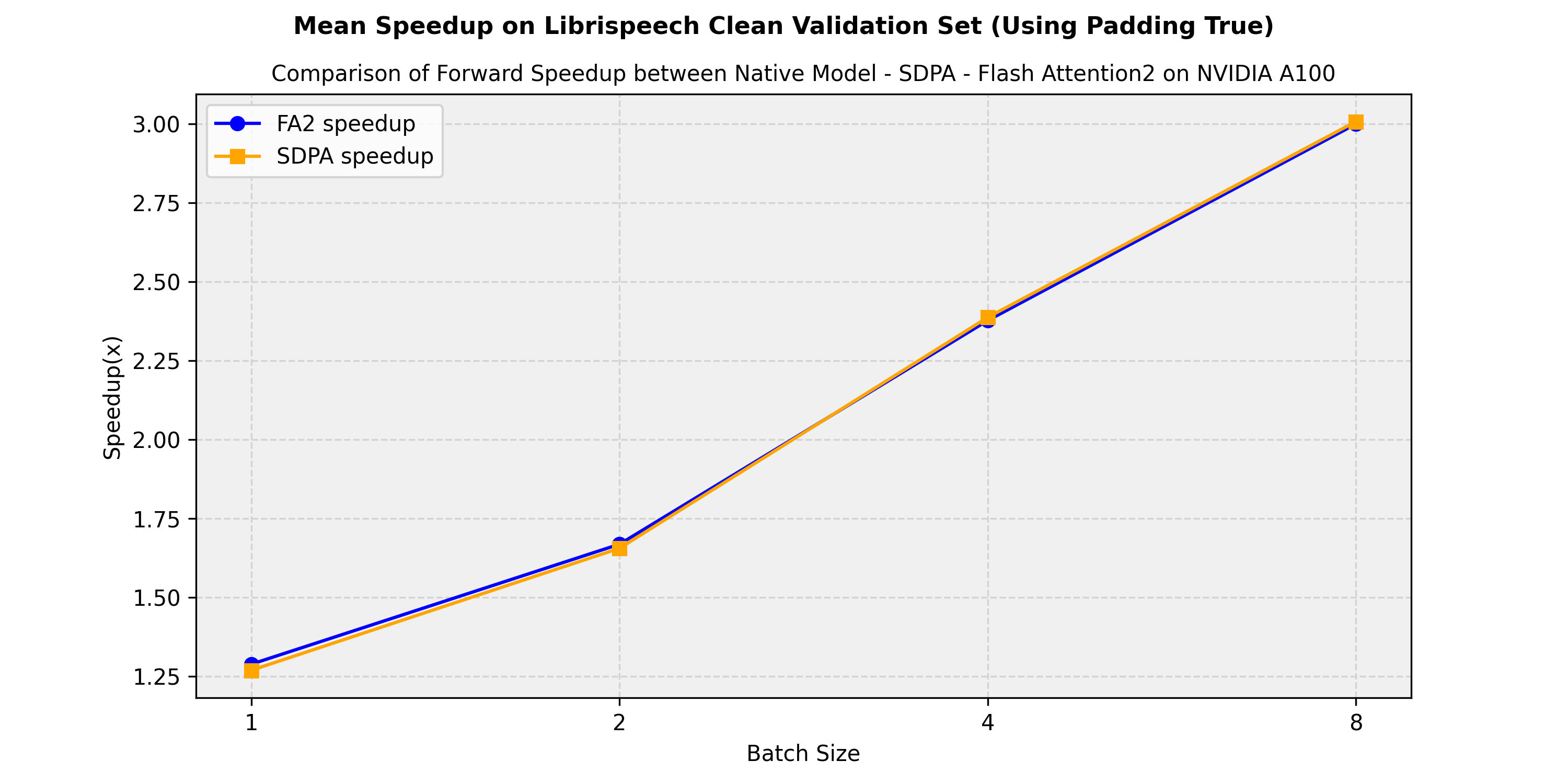

The following diagram shows the latency for the native attention implementation (no optimisation) against Better Transformer and Flash Attention 2. In all cases, we generate 400 semantic tokens on a 40GB A100 GPU with PyTorch 2.1. Flash Attention 2 is also consistently faster than Better Transformer, and its performance improves even more as batch sizes increase:

-

diff --git a/docs/source/en/internal/import_utils.md b/docs/source/en/internal/import_utils.md

index 77554c85b02..15325819817 100644

--- a/docs/source/en/internal/import_utils.md

+++ b/docs/source/en/internal/import_utils.md

@@ -22,8 +22,8 @@ worked around. We don't want for all users of `transformers` to have to install

we therefore mark those as soft dependencies rather than hard dependencies.

The transformers toolkit is not made to error-out on import of a model that has a specific dependency; instead, an

-object for which you are lacking a dependency will error-out when calling any method on it. As an example, if

-`torchvision` isn't installed, the fast image processors will not be available.

+object for which you are lacking a dependency will error-out when calling any method on it. As an example, if

+`torchvision` isn't installed, the fast image processors will not be available.

This object is still importable:

@@ -55,7 +55,7 @@ All objects under a given filename have an automatic dependency to the tool link

**Tokenizers**: All files starting with `tokenization_` and ending with `_fast` have an automatic `tokenizers` dependency

-**Vision**: All files starting with `image_processing_` have an automatic dependency to the `vision` dependency group;

+**Vision**: All files starting with `image_processing_` have an automatic dependency to the `vision` dependency group;

at the time of writing, this only contains the `pillow` dependency.

**Vision + Torch + Torchvision**: All files starting with `image_processing_` and ending with `_fast` have an automatic

@@ -66,7 +66,7 @@ All of these automatic dependencies are added on top of the explicit dependencie

### Explicit Object Dependencies

We add a method called `requires` that is used to explicitly specify the dependencies of a given object. As an

-example, the `Trainer` class has two hard dependencies: `torch` and `accelerate`. Here is how we specify these

+example, the `Trainer` class has two hard dependencies: `torch` and `accelerate`. Here is how we specify these

required dependencies:

```python

diff --git a/docs/source/en/internal/model_debugging_utils.md b/docs/source/en/internal/model_debugging_utils.md

index cf2c0353fc7..aa5371cd38e 100644

--- a/docs/source/en/internal/model_debugging_utils.md

+++ b/docs/source/en/internal/model_debugging_utils.md

@@ -21,10 +21,8 @@ provides for it.

Most of those are only useful if you are adding new models in the library.

-

## Model addition debuggers

-

### Model addition debugger - context manager for model adders

This context manager is a power user tool intended for model adders. It tracks all forward calls within a model forward

@@ -72,7 +70,6 @@ with model_addition_debugger_context(

```

-

### Reading results

The debugger generates two files from the forward call, both with the same base name, but ending either with

@@ -231,10 +228,8 @@ Once the forward passes of two models have been traced by the debugger, one can

below: we can see slight differences between these two implementations' key projection layer. Inputs are mostly

identical, but not quite. Looking through the file differences makes it easier to pinpoint which layer is wrong.

-

-

### Limitations and scope

This feature will only work for torch-based models. Models relying heavily on external kernel calls may work, but trace will

@@ -253,7 +248,7 @@ layers.

This small util is a power user tool intended for model adders and maintainers. It lists all test methods

existing in `test_modeling_common.py`, inherited by all model tester classes, and scans the repository to measure

-how many tests are being skipped and for which models.

+how many tests are being skipped and for which models.

### Rationale

@@ -268,8 +263,7 @@ This utility:

-

-### Usage

+### Usage

You can run the skipped test analyzer in two ways:

diff --git a/docs/source/en/internal/pipelines_utils.md b/docs/source/en/internal/pipelines_utils.md

index 6ea6de9a61b..23856e5639c 100644

--- a/docs/source/en/internal/pipelines_utils.md

+++ b/docs/source/en/internal/pipelines_utils.md

@@ -20,7 +20,6 @@ This page lists all the utility functions the library provides for pipelines.

Most of those are only useful if you are studying the code of the models in the library.

-

## Argument handling

[[autodoc]] pipelines.ArgumentHandler

diff --git a/docs/source/en/kv_cache.md b/docs/source/en/kv_cache.md

index f0a781cba4f..a7c39a6a8d2 100644

--- a/docs/source/en/kv_cache.md

+++ b/docs/source/en/kv_cache.md

@@ -67,7 +67,7 @@ out = model.generate(**inputs, do_sample=False, max_new_tokens=20, past_key_valu

## Fixed-size cache

-The default [`DynamicCache`] prevents you from taking advantage of most just-in-time (JIT) optimizations because the cache size isn't fixed. JIT optimizations enable you to reduce latency at the expense of memory usage. All of the following cache types are compatible with JIT optimizations like [torch.compile](./llm_optims#static-kv-cache-and-torchcompile) to accelerate generation.

+The default [`DynamicCache`] prevents you from taking advantage of most just-in-time (JIT) optimizations because the cache size isn't fixed. JIT optimizations enable you to reduce latency at the expense of memory usage. All of the following cache types are compatible with JIT optimizations like [torch.compile](./llm_optims#static-kv-cache-and-torchcompile) to accelerate generation.

A fixed-size cache ([`StaticCache`]) pre-allocates a specific maximum cache size for the kv pairs. You can generate up to the maximum cache size without needing to modify it. However, because the key/value states have a fixed (usually large) size, many positions are masked during generation since they should not take part in the attention. This makes it easy to `compile` the decoding stage, but some of the attention computation is wasted on masked positions. It is a trade-off: it works well if you generate several sequences of roughly the same length, but may be sub-optimal if you have, for example, one very long sequence followed by only short ones (the fixed cache size would be large, and much of it would be wasted on the short sequences). Make sure you understand the impact if you use it!
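
As a rough illustration of that trade-off, the sketch below estimates what fraction of a fixed-size cache holds real tokens. It is not part of Transformers: `static_cache_utilization` and the example lengths are hypothetical, and it assumes every sequence is given the same `max_cache_len` slots.

```python
def static_cache_utilization(sequence_lengths, max_cache_len):
    """Fraction of pre-allocated cache slots that hold real (unmasked) tokens.

    Hypothetical helper for illustration only; assumes each sequence
    reserves exactly `max_cache_len` slots.
    """
    if any(length > max_cache_len for length in sequence_lengths):
        raise ValueError("a sequence is longer than the fixed cache size")
    used_slots = sum(sequence_lengths)
    allocated_slots = max_cache_len * len(sequence_lengths)
    return used_slots / allocated_slots


# Sequences of similar length: almost every slot is used.
similar = static_cache_utilization([1000, 1000], max_cache_len=1024)

# One long sequence followed by short ones: most slots stay masked.
mixed = static_cache_utilization([4096, 64, 64, 64], max_cache_len=4096)

print(f"similar lengths: {similar:.2f}, mixed lengths: {mixed:.2f}")
```

Under these assumptions, the mixed workload leaves most of the cache unused, which is the case where a fixed-size cache may be the wrong choice.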

diff --git a/docs/source/en/llm_tutorial.md b/docs/source/en/llm_tutorial.md

index 0f4f91d30a6..0cbbbc6ac04 100644

--- a/docs/source/en/llm_tutorial.md

+++ b/docs/source/en/llm_tutorial.md

@@ -24,6 +24,7 @@ In Transformers, the [`~GenerationMixin.generate`] API handles text generation,

> [!TIP]

> You can also chat with a model directly from the command line. ([reference](./conversations.md#transformers))

+>

> ```shell

> transformers chat Qwen/Qwen2.5-0.5B-Instruct

> ```

@@ -35,6 +36,7 @@ Before you begin, it's helpful to install [bitsandbytes](https://hf.co/docs/bits

```bash

!pip install -U transformers bitsandbytes

```

+

Bitsandbytes supports multiple backends in addition to CUDA-based GPUs. Refer to the multi-backend installation [guide](https://huggingface.co/docs/bitsandbytes/main/en/installation#multi-backend) to learn more.

Load an LLM with [`~PreTrainedModel.from_pretrained`] and add the following two parameters to reduce the memory requirements.

@@ -154,7 +156,6 @@ print(tokenizer.batch_decode(outputs, skip_special_tokens=True))

| `repetition_penalty` | `float` | Set it to `>1.0` if you're seeing the model repeat itself often. Larger values apply a larger penalty. |

| `eos_token_id` | `list[int]` | The token(s) that will cause generation to stop. The default value is usually good, but you can specify a different token. |

-

## Pitfalls

The section below covers some common issues you may encounter during text generation and how to solve them.

diff --git a/docs/source/en/llm_tutorial_optimization.md b/docs/source/en/llm_tutorial_optimization.md

index 63d9308a84f..04a61dd82cb 100644

--- a/docs/source/en/llm_tutorial_optimization.md

+++ b/docs/source/en/llm_tutorial_optimization.md

@@ -66,6 +66,7 @@ If you have access to an 8 x 80GB A100 node, you could load BLOOM as follows

```bash

!pip install transformers accelerate bitsandbytes optimum

```

+

```python

from transformers import AutoModelForCausalLM

@@ -98,6 +99,7 @@ result

```

**Output**:

+

```

Here is a Python function that transforms bytes to Giga bytes:\n\n```python\ndef bytes_to_giga_bytes(bytes):\n return bytes / 1024 / 1024 / 1024\n```\n\nThis function takes a single

```

@@ -116,6 +118,7 @@ bytes_to_giga_bytes(torch.cuda.max_memory_allocated())

```

**Output**:

+

```bash

29.0260648727417

```

@@ -127,7 +130,6 @@ Note that if we had tried to run the model in full float32 precision, a whopping

If you are unsure which format the model weights are stored in on the Hub, you can always check the checkpoint's config under `"dtype"`, *e.g.* [here](https://huggingface.co/meta-llama/Llama-2-7b-hf/blob/6fdf2e60f86ff2481f2241aaee459f85b5b0bbb9/config.json#L21). It is recommended to load the model with the same precision type as written in the config via `from_pretrained(..., dtype=...)`, except when the original type is float32, in which case you can use either `float16` or `bfloat16` for inference.

-

Let's define a `flush(...)` function to free all allocated memory so that we can accurately measure the peak allocated GPU memory.

```python

@@ -148,6 +150,7 @@ Let's call it now for the next experiment.

```python

flush()

```

+

From the Accelerate library, you can also use a device-agnostic utility method called [release_memory](https://github.com/huggingface/accelerate/blob/29be4788629b772a3b722076e433b5b3b5c85da3/src/accelerate/utils/memory.py#L63), which takes various hardware backends like XPU, MLU, NPU, MPS, and more into account.

```python

@@ -204,6 +207,7 @@ result

```

**Output**:

+

```

Here is a Python function that transforms bytes to Giga bytes:\n\n```python\ndef bytes_to_giga_bytes(bytes):\n return bytes / 1024 / 1024 / 1024\n```\n\nThis function takes a single

```

@@ -215,6 +219,7 @@ bytes_to_giga_bytes(torch.cuda.max_memory_allocated())

```

**Output**:

+

```

15.219234466552734

```

@@ -222,8 +227,8 @@ bytes_to_giga_bytes(torch.cuda.max_memory_allocated())

Significantly less! We're down to just a bit over 15 GBs and could therefore run this model on consumer GPUs like the 4090.

We're seeing a very nice gain in memory efficiency and more or less no degradation to the model's output. However, we can also notice a slight slow-down during inference.

-

We delete the models and flush the memory again.

+

```python

del model

del pipe

@@ -245,6 +250,7 @@ result

```

**Output**:

+

```

Here is a Python function that transforms bytes to Giga bytes:\n\n```\ndef bytes_to_gigabytes(bytes):\n return bytes / 1024 / 1024 / 1024\n```\n\nThis function takes a single argument

```

@@ -256,6 +262,7 @@ bytes_to_giga_bytes(torch.cuda.max_memory_allocated())

```

**Output**:

+

```

9.543574333190918

```

@@ -270,6 +277,7 @@ Also note that inference here was again a bit slower compared to 8-bit quantizat

del model

del pipe

```

+

```python

flush()

```

@@ -384,6 +392,7 @@ def alternating(list1, list2):

-----

"""

```

+

For demonstration purposes, we duplicate the system prompt by ten so that the input length is long enough to observe Flash Attention's memory savings.

We append the original text prompt `"Question: Please write a function in Python that transforms bytes to Giga bytes.\n\nAnswer: Here"`

@@ -413,6 +422,7 @@ result

```

**Output**:

+

```

Generated in 10.96854019165039 seconds.

Sure. Here is a function that does that.\n\ndef bytes_to_giga(bytes):\n return bytes / 1024 / 1024 / 1024\n\nAnswer: Sure. Here is a function that does that.\n\ndef

@@ -429,6 +439,7 @@ bytes_to_giga_bytes(torch.cuda.max_memory_allocated())

```

**Output**:

+

```bash

37.668193340301514

```

@@ -460,6 +471,7 @@ result

```

**Output**:

+

```

Generated in 3.0211617946624756 seconds.

Sure. Here is a function that does that.\n\ndef bytes_to_giga(bytes):\n return bytes / 1024 / 1024 / 1024\n\nAnswer: Sure. Here is a function that does that.\n\ndef

@@ -474,6 +486,7 @@ bytes_to_giga_bytes(torch.cuda.max_memory_allocated())

```

**Output**:

+

```

32.617331981658936

```

@@ -604,6 +617,7 @@ generated_text

```

**Output**:

+

```

shape of input_ids torch.Size([1, 21])

shape of input_ids torch.Size([1, 22])

@@ -641,6 +655,7 @@ generated_text

```

**Output**:

+

```

shape of input_ids torch.Size([1, 1])

length of key-value cache 20

@@ -712,6 +727,7 @@ tokenizer.batch_decode(generation_output.sequences)[0][len(prompt):]

```

**Output**:

+

```

is a modified version of the function that returns Mega bytes instead.

@@ -733,6 +749,7 @@ config = model.config

```

**Output**:

+

```

7864320000

```

@@ -773,7 +790,6 @@ The most notable application of GQA is [Llama-v2](https://huggingface.co/meta-ll

> As a conclusion, it is strongly recommended to make use of either GQA or MQA if the LLM is deployed with auto-regressive decoding and is required to handle large input sequences as is the case for example for chat.
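
To see why fewer key-value heads matter, here is a small arithmetic sketch of the cache size. The helper `kv_cache_values` is hypothetical, and the configuration (40 layers, 48 attention heads, head dimension 128, 16,000 tokens) is an assumption chosen so that the multi-head result matches the `7864320000` figure printed earlier.

```python
def kv_cache_values(seq_len, num_layers, num_kv_heads, head_dim):
    """Number of cached values: one key and one value vector
    per token, per layer, and per key-value head."""
    return 2 * seq_len * num_layers * num_kv_heads * head_dim


# Assumed configuration, for illustration only.
seq_len, num_layers, num_heads, head_dim = 16_000, 40, 48, 128

mha = kv_cache_values(seq_len, num_layers, num_kv_heads=num_heads, head_dim=head_dim)
gqa = kv_cache_values(seq_len, num_layers, num_kv_heads=8, head_dim=head_dim)
mqa = kv_cache_values(seq_len, num_layers, num_kv_heads=1, head_dim=head_dim)

for name, values in [("MHA", mha), ("GQA (8 kv heads)", gqa), ("MQA", mqa)]:
    # float16 stores each value in 2 bytes
    print(f"{name}: {values} values, {values * 2 / 1024**3:.2f} GiB in float16")
```

With these assumed numbers, MQA shrinks the cache by a factor equal to the number of attention heads, and GQA sits in between.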

-

## Conclusion

The research community is constantly coming up with new, nifty ways to speed up inference time for ever-larger LLMs. As an example, one such promising research direction is [speculative decoding](https://huggingface.co/papers/2211.17192) where "easy tokens" are generated by smaller, faster language models and only "hard tokens" are generated by the LLM itself. Going into more detail is out of the scope of this notebook, but you can read more about it in this [nice blog post](https://huggingface.co/blog/assisted-generation).
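
The idea can be sketched with toy "models" that map a token prefix to the next token. This is a simplified greedy variant, not the Transformers implementation: all names below are hypothetical, and a real system verifies the drafted tokens in a single forward pass of the large model instead of one call per token.

```python
from typing import Callable, List

NextToken = Callable[[List[int]], int]  # greedy next-token function


def greedy_decode(model: NextToken, prompt: List[int], max_new_tokens: int) -> List[int]:
    """Plain greedy decoding: one model call per generated token."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        tokens.append(model(tokens))
    return tokens


def assisted_decode(target: NextToken, draft: NextToken, prompt: List[int],
                    max_new_tokens: int, num_draft_tokens: int = 4) -> List[int]:
    """Greedy speculative decoding sketch: the draft proposes, the target verifies."""
    tokens = list(prompt)
    limit = len(prompt) + max_new_tokens
    while len(tokens) < limit:
        # 1. The small model drafts a few tokens cheaply.
        proposal: List[int] = []
        for _ in range(min(num_draft_tokens, limit - len(tokens))):
            proposal.append(draft(tokens + proposal))
        # 2. The large model checks each drafted token in order.
        for guess in proposal:
            expected = target(tokens)
            tokens.append(expected)  # always keep the target's token
            if expected != guess:
                break  # first "hard" token: discard the rest of the draft
    return tokens


def toy_target(tokens: List[int]) -> int:
    return (tokens[-1] * 3 + 1) % 7


def toy_draft(tokens: List[int]) -> int:
    # Agrees with the target only after even tokens.
    return toy_target(tokens) if tokens[-1] % 2 == 0 else 0


print(assisted_decode(toy_target, toy_draft, [1], max_new_tokens=10))
```

Because the target's token is always the one kept, the output matches plain greedy decoding with the large model; the speed-up in practice comes from verifying many drafted tokens at once.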

diff --git a/docs/source/en/main_classes/callback.md b/docs/source/en/main_classes/callback.md

index b29c9e7264e..bc1413a9474 100644

--- a/docs/source/en/main_classes/callback.md

+++ b/docs/source/en/main_classes/callback.md

@@ -54,7 +54,6 @@ The main class that implements callbacks is [`TrainerCallback`]. It gets the

Trainer's internal state via [`TrainerState`], and can take some actions on the training loop via

[`TrainerControl`].

-

## Available Callbacks

Here is the list of the available [`TrainerCallback`] in the library:

diff --git a/docs/source/en/main_classes/configuration.md b/docs/source/en/main_classes/configuration.md

index 0cfef06d3ce..933621f6a14 100644

--- a/docs/source/en/main_classes/configuration.md

+++ b/docs/source/en/main_classes/configuration.md

@@ -24,7 +24,6 @@ Each derived config class implements model specific attributes. Common attribute

`hidden_size`, `num_attention_heads`, and `num_hidden_layers`. Text models further implement:

`vocab_size`.

-

## PretrainedConfig

[[autodoc]] PretrainedConfig

diff --git a/docs/source/en/main_classes/data_collator.md b/docs/source/en/main_classes/data_collator.md

index 2941338375b..33d156ec93f 100644

--- a/docs/source/en/main_classes/data_collator.md

+++ b/docs/source/en/main_classes/data_collator.md

@@ -25,7 +25,6 @@ on the formed batch.

Examples of use can be found in the [example scripts](../examples) or [example notebooks](../notebooks).

-

## Default data collator

[[autodoc]] data.data_collator.default_data_collator

diff --git a/docs/source/en/main_classes/deepspeed.md b/docs/source/en/main_classes/deepspeed.md

index 0b9e28656c0..b04949229da 100644

--- a/docs/source/en/main_classes/deepspeed.md

+++ b/docs/source/en/main_classes/deepspeed.md

@@ -16,7 +16,7 @@ rendered properly in your Markdown viewer.

# DeepSpeed

-[DeepSpeed](https://github.com/deepspeedai/DeepSpeed), powered by Zero Redundancy Optimizer (ZeRO), is an optimization library for training and fitting very large models onto a GPU. It is available in several ZeRO stages, where each stage progressively saves more GPU memory by partitioning the optimizer state, gradients, parameters, and enabling offloading to a CPU or NVMe. DeepSpeed is integrated with the [`Trainer`] class and most of the setup is automatically taken care of for you.

+[DeepSpeed](https://github.com/deepspeedai/DeepSpeed), powered by Zero Redundancy Optimizer (ZeRO), is an optimization library for training and fitting very large models onto a GPU. It is available in several ZeRO stages, where each stage progressively saves more GPU memory by partitioning the optimizer state, gradients, parameters, and enabling offloading to a CPU or NVMe. DeepSpeed is integrated with the [`Trainer`] class and most of the setup is automatically taken care of for you.

However, if you want to use DeepSpeed without the [`Trainer`], Transformers provides a [`HfDeepSpeedConfig`] class.

diff --git a/docs/source/en/main_classes/executorch.md b/docs/source/en/main_classes/executorch.md

index 3178085c913..3406309aa32 100644

--- a/docs/source/en/main_classes/executorch.md

+++ b/docs/source/en/main_classes/executorch.md

@@ -15,14 +15,12 @@ rendered properly in your Markdown viewer.

-->

-

# ExecuTorch

[`ExecuTorch`](https://github.com/pytorch/executorch) is an end-to-end solution for enabling on-device inference capabilities across mobile and edge devices including wearables, embedded devices and microcontrollers. It is part of the PyTorch ecosystem and supports the deployment of PyTorch models with a focus on portability, productivity, and performance.

ExecuTorch introduces well defined entry points to perform model, device, and/or use-case specific optimizations such as backend delegation, user-defined compiler transformations, memory planning, and more. The first step in preparing a PyTorch model for execution on an edge device using ExecuTorch is to export the model. This is achieved through the use of a PyTorch API called [`torch.export`](https://pytorch.org/docs/stable/export.html).

-

## ExecuTorch Integration

An integration point is being developed to ensure that 🤗 Transformers can be exported using `torch.export`. The goal of this integration is not only to enable export but also to ensure that the exported artifact can be further lowered and optimized to run efficiently in `ExecuTorch`, particularly for mobile and edge use cases.

diff --git a/docs/source/en/main_classes/feature_extractor.md b/docs/source/en/main_classes/feature_extractor.md

index fd451a35481..294ecad6309 100644

--- a/docs/source/en/main_classes/feature_extractor.md

+++ b/docs/source/en/main_classes/feature_extractor.md

@@ -18,7 +18,6 @@ rendered properly in your Markdown viewer.

A feature extractor is in charge of preparing input features for audio or vision models. This includes feature extraction from sequences, e.g., pre-processing audio files to generate Log-Mel Spectrogram features, feature extraction from images, e.g., cropping image files, but also padding, normalization, and conversion to NumPy and PyTorch tensors.

-

## FeatureExtractionMixin

[[autodoc]] feature_extraction_utils.FeatureExtractionMixin

diff --git a/docs/source/en/main_classes/image_processor.md b/docs/source/en/main_classes/image_processor.md

index 7dc9de60571..61be0306630 100644

--- a/docs/source/en/main_classes/image_processor.md

+++ b/docs/source/en/main_classes/image_processor.md

@@ -26,6 +26,7 @@ from transformers import AutoImageProcessor

processor = AutoImageProcessor.from_pretrained("facebook/detr-resnet-50", use_fast=True)

```

+

Note that `use_fast` will be set to `True` by default in a future release.

When using a fast image processor, you can also set the `device` argument to specify the device on which the processing should be done. By default, the processing is done on the same device as the inputs if the inputs are tensors, or on the CPU otherwise.

@@ -57,7 +58,6 @@ Here are some speed comparisons between the base and fast image processors for t

These benchmarks were run on an [AWS EC2 g5.2xlarge instance](https://aws.amazon.com/ec2/instance-types/g5/), utilizing an NVIDIA A10G Tensor Core GPU.

-

## ImageProcessingMixin

[[autodoc]] image_processing_utils.ImageProcessingMixin

@@ -72,7 +72,6 @@ These benchmarks were run on an [AWS EC2 g5.2xlarge instance](https://aws.amazon

[[autodoc]] image_processing_utils.BaseImageProcessor

-

## BaseImageProcessorFast

[[autodoc]] image_processing_utils_fast.BaseImageProcessorFast

diff --git a/docs/source/en/main_classes/logging.md b/docs/source/en/main_classes/logging.md

index 5cbdf9ae27e..34da2ac9d1b 100644

--- a/docs/source/en/main_classes/logging.md

+++ b/docs/source/en/main_classes/logging.md

@@ -55,7 +55,6 @@ logger.info("INFO")

logger.warning("WARN")

```

-

All the methods of this logging module are documented below. The main ones are

[`logging.get_verbosity`] to get the current level of verbosity in the logger and

[`logging.set_verbosity`] to set the verbosity to the level of your choice. In order (from the least

diff --git a/docs/source/en/main_classes/model.md b/docs/source/en/main_classes/model.md

index d7768a905ce..e3e77a8e2e1 100644

--- a/docs/source/en/main_classes/model.md

+++ b/docs/source/en/main_classes/model.md

@@ -26,7 +26,6 @@ file or directory, or from a pretrained model configuration provided by the libr

The other methods that are common to each model are defined in [`~modeling_utils.ModuleUtilsMixin`] and [`~generation.GenerationMixin`].

-

## PreTrainedModel

[[autodoc]] PreTrainedModel

diff --git a/docs/source/en/main_classes/onnx.md b/docs/source/en/main_classes/onnx.md

index 81d31c97e88..5f8869948d2 100644

--- a/docs/source/en/main_classes/onnx.md

+++ b/docs/source/en/main_classes/onnx.md

@@ -51,4 +51,3 @@ to export models for different types of topologies or tasks.

### FeaturesManager

[[autodoc]] onnx.features.FeaturesManager

-

diff --git a/docs/source/en/main_classes/optimizer_schedules.md b/docs/source/en/main_classes/optimizer_schedules.md

index 84d9ca7b907..3bab249ab4e 100644

--- a/docs/source/en/main_classes/optimizer_schedules.md

+++ b/docs/source/en/main_classes/optimizer_schedules.md

@@ -22,7 +22,6 @@ The `.optimization` module provides:

- several schedules in the form of schedule objects that inherit from `_LRSchedule`:

- a gradient accumulation class to accumulate the gradients of multiple batches

-

## AdaFactor

[[autodoc]] Adafactor

diff --git a/docs/source/en/main_classes/output.md b/docs/source/en/main_classes/output.md

index 295f99e21d1..8a9ae879fb1 100644

--- a/docs/source/en/main_classes/output.md

+++ b/docs/source/en/main_classes/output.md

@@ -47,7 +47,6 @@ However, this is not always the case. Some models apply normalization or subsequ

-

You can access each attribute as you would usually do, and if that attribute has not been returned by the model, you

will get `None`. Here for instance `outputs.loss` is the loss computed by the model, and `outputs.attentions` is

`None`.

diff --git a/docs/source/en/main_classes/pipelines.md b/docs/source/en/main_classes/pipelines.md

index 0e4cf55995b..31139ddf429 100644

--- a/docs/source/en/main_classes/pipelines.md

+++ b/docs/source/en/main_classes/pipelines.md

@@ -81,7 +81,6 @@ for out in tqdm(pipe(KeyDataset(dataset, "file"))):

For ease of use, a generator is also possible:

-

```python

from transformers import pipeline

@@ -196,7 +195,6 @@ This is a occasional very long sentence compared to the other. In that case, the

tokens long, so the whole batch will be [64, 400] instead of [64, 4], leading to the high slowdown. Even worse, on

bigger batches, the program simply crashes.

-

```

------------------------------

Streaming no batching

@@ -245,7 +243,6 @@ multiple forward pass of a model. Under normal circumstances, this would yield i

To circumvent this issue, both of these pipelines are a bit specific: they are `ChunkPipeline` instead of

regular `Pipeline`. In short:

-

```python

preprocessed = pipe.preprocess(inputs)

model_outputs = pipe.forward(preprocessed)

@@ -254,7 +251,6 @@ outputs = pipe.postprocess(model_outputs)

Now becomes:

-

```python

all_model_outputs = []

for preprocessed in pipe.preprocess(inputs):

@@ -282,7 +278,6 @@ If you want to override a specific pipeline.

Don't hesitate to create an issue for your task at hand, the goal of the pipeline is to be easy to use and support most

cases, so `transformers` could maybe support your use case.

-

If you want to try simply you can:

- Subclass your pipeline of choice

@@ -302,7 +297,6 @@ my_pipeline = pipeline(model="xxxx", pipeline_class=MyPipeline)

That should enable you to do all the custom code you want.

-

## Implementing a pipeline

[Implementing a new pipeline](../add_new_pipeline)

@@ -329,7 +323,6 @@ Pipelines available for audio tasks include the following.

- __call__

- all

-

### ZeroShotAudioClassificationPipeline

[[autodoc]] ZeroShotAudioClassificationPipeline
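The `ChunkPipeline` control flow touched by the pipelines.md hunks above (one `preprocess` call yielding several chunks, one `forward` per chunk, one `postprocess` over all results) can be sketched with a toy class. This is a minimal illustration, not the Transformers implementation: `ToyChunkPipeline`, `run_chunked`, and the word-chunking "model" are hypothetical names invented here, and the loop body is an assumption, since the hunk is cut off after the `for` line.

```python
from typing import Iterator, List


class ToyChunkPipeline:
    """Hypothetical stand-in for a chunked pipeline; not the Transformers class."""

    def __init__(self, chunk_size: int = 3) -> None:
        self.chunk_size = chunk_size

    def preprocess(self, inputs: str) -> Iterator[List[str]]:
        # Yield the input as fixed-size chunks of words rather than a single item.
        words = inputs.split()
        for start in range(0, len(words), self.chunk_size):
            yield words[start:start + self.chunk_size]

    def forward(self, chunk: List[str]) -> List[str]:
        # Placeholder "model": upper-case every word in the chunk.
        return [word.upper() for word in chunk]

    def postprocess(self, all_model_outputs: List[List[str]]) -> str:
        # Merge the per-chunk outputs back into a single result.
        return " ".join(word for chunk in all_model_outputs for word in chunk)


def run_chunked(pipe: ToyChunkPipeline, inputs: str) -> str:
    # Assumed loop shape: collect one forward result per preprocessed chunk,
    # then postprocess everything at once.
    all_model_outputs = []
    for preprocessed in pipe.preprocess(inputs):
        all_model_outputs.append(pipe.forward(preprocessed))
    return pipe.postprocess(all_model_outputs)


result = run_chunked(ToyChunkPipeline(chunk_size=3), "a b c d e f g")
print(result)
```

Since `preprocess` is a generator here, chunks are produced lazily and each one is passed through `forward` on its own. Only `postprocess` sees all the outputs together.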

diff --git a/docs/source/en/main_classes/processors.md b/docs/source/en/main_classes/processors.md

index 2c2e0cd31b7..8863a632628 100644

--- a/docs/source/en/main_classes/processors.md

+++ b/docs/source/en/main_classes/processors.md

@@ -71,7 +71,6 @@ Additionally, the following method can be used to load values from a data file a

[[autodoc]] data.processors.glue.glue_convert_examples_to_features

-

## XNLI

[The Cross-Lingual NLI Corpus (XNLI)](https://www.nyu.edu/projects/bowman/xnli/) is a benchmark that evaluates the

@@ -88,7 +87,6 @@ Please note that since the gold labels are available on the test set, evaluation

An example using these processors is given in the [run_xnli.py](https://github.com/huggingface/transformers/tree/main/examples/pytorch/text-classification/run_xnli.py) script.

-

## SQuAD

[The Stanford Question Answering Dataset (SQuAD)](https://rajpurkar.github.io/SQuAD-explorer//) is a benchmark that

@@ -115,11 +113,9 @@ Additionally, the following method can be used to convert SQuAD examples into

[[autodoc]] data.processors.squad.squad_convert_examples_to_features

-

These processors as well as the aforementioned method can be used with files containing the data as well as with the

*tensorflow_datasets* package. Examples are given below.

-

### Example usage

Here is an example using the processors as well as the conversion method using data files:

diff --git a/docs/source/en/main_classes/tokenizer.md b/docs/source/en/main_classes/tokenizer.md

index 83d2ae5df6a..52c9751226d 100644

--- a/docs/source/en/main_classes/tokenizer.md

+++ b/docs/source/en/main_classes/tokenizer.md

@@ -22,7 +22,7 @@ Rust library [🤗 Tokenizers](https://github.com/huggingface/tokenizers). The "

1. a significant speed-up in particular when doing batched tokenization and

2. additional methods to map between the original string (character and words) and the token space (e.g. getting the

- index of the token comprising a given character or the span of characters corresponding to a given token).

+ index of the token comprising a given character or the span of characters corresponding to a given token).

The base classes [`PreTrainedTokenizer`] and [`PreTrainedTokenizerFast`]

implement the common methods for encoding string inputs in model inputs (see below) and instantiating/saving python and

@@ -50,12 +50,11 @@ several advanced alignment methods which can be used to map between the original

token space (e.g., getting the index of the token comprising a given character or the span of characters corresponding

to a given token).

-

# Multimodal Tokenizer

Apart from that each tokenizer can be a "multimodal" tokenizer which means that the tokenizer will hold all relevant special tokens

as part of tokenizer attributes for easier access. For example, if the tokenizer is loaded from a vision-language model like LLaVA, you will

-be able to access `tokenizer.image_token_id` to obtain the special image token used as a placeholder.

+be able to access `tokenizer.image_token_id` to obtain the special image token used as a placeholder.

To enable extra special tokens for any type of tokenizer, you have to add the following lines and save the tokenizer. Extra special tokens do not

have to be modality related and can be anything that the model often needs access to. In the below code, tokenizer at `output_dir` will have direct access

diff --git a/docs/source/en/main_classes/video_processor.md b/docs/source/en/main_classes/video_processor.md

index ee69030ab1a..29d29d0cb60 100644

--- a/docs/source/en/main_classes/video_processor.md

+++ b/docs/source/en/main_classes/video_processor.md

@@ -22,7 +22,6 @@ The video processor extends the functionality of image processors by allowing Vi

When adding a new VLM or updating an existing one to enable distinct video preprocessing, saving and reloading the processor configuration will store the video related arguments in a dedicated file named `video_preprocessing_config.json`. Don't worry if you haven't updated your VLM, the processor will try to load video related configurations from a file named `preprocessing_config.json`.

-

### Usage Example

Here's an example of how to load a video processor with [`llava-hf/llava-onevision-qwen2-0.5b-ov-hf`](https://huggingface.co/llava-hf/llava-onevision-qwen2-0.5b-ov-hf) model:

@@ -59,7 +58,6 @@ The video processor can also sample video frames using the technique best suited

-

```python

from transformers import AutoVideoProcessor

@@ -92,4 +90,3 @@ print(processed_video_inputs.pixel_values_videos.shape)

## BaseVideoProcessor

[[autodoc]] video_processing_utils.BaseVideoProcessor

-

diff --git a/docs/source/en/model_doc/aimv2.md b/docs/source/en/model_doc/aimv2.md

index 9d0abbaaf36..acf9c4de12f 100644

--- a/docs/source/en/model_doc/aimv2.md

+++ b/docs/source/en/model_doc/aimv2.md

@@ -25,7 +25,6 @@ The abstract from the paper is the following:

*We introduce a novel method for pre-training of large-scale vision encoders. Building on recent advancements in autoregressive pre-training of vision models, we extend this framework to a multimodal setting, i.e., images and text. In this paper, we present AIMV2, a family of generalist vision encoders characterized by a straightforward pre-training process, scalability, and remarkable performance across a range of downstream tasks. This is achieved by pairing the vision encoder with a multimodal decoder that autoregressively generates raw image patches and text tokens. Our encoders excel not only in multimodal evaluations but also in vision benchmarks such as localization, grounding, and classification. Notably, our AIMV2-3B encoder achieves 89.5% accuracy on ImageNet-1k with a frozen trunk. Furthermore, AIMV2 consistently outperforms state-of-the-art contrastive models (e.g., CLIP, SigLIP) in multimodal image understanding across diverse settings.*

-

This model was contributed by [Yaswanth Gali](https://huggingface.co/yaswanthgali).

The original code can be found [here](https://github.com/apple/ml-aim).

diff --git a/docs/source/en/model_doc/aria.md b/docs/source/en/model_doc/aria.md

index e5f4afa7b7a..ddd0815aaa5 100644

--- a/docs/source/en/model_doc/aria.md

+++ b/docs/source/en/model_doc/aria.md

@@ -98,7 +98,7 @@ print(response)

Quantization reduces the memory burden of large models by representing the weights in a lower precision. Refer to the [Quantization](../quantization/overview) overview for more available quantization backends.

-

+

The example below uses [torchao](../quantization/torchao) to only quantize the weights to int4 and the [rhymes-ai/Aria-sequential_mlp](https://huggingface.co/rhymes-ai/Aria-sequential_mlp) checkpoint. This checkpoint replaces grouped GEMM with `torch.nn.Linear` layers for easier quantization.

```py

@@ -142,7 +142,6 @@ response = processor.decode(output_ids, skip_special_tokens=True)

print(response)

```

-

## AriaImageProcessor

[[autodoc]] AriaImageProcessor

diff --git a/docs/source/en/model_doc/audio-spectrogram-transformer.md b/docs/source/en/model_doc/audio-spectrogram-transformer.md

index 40115810467..092bf3b26f3 100644

--- a/docs/source/en/model_doc/audio-spectrogram-transformer.md

+++ b/docs/source/en/model_doc/audio-spectrogram-transformer.md

@@ -52,13 +52,13 @@ the authors compute the stats for a downstream dataset.

### Using Scaled Dot Product Attention (SDPA)

-PyTorch includes a native scaled dot-product attention (SDPA) operator as part of `torch.nn.functional`. This function

-encompasses several implementations that can be applied depending on the inputs and the hardware in use. See the

-[official documentation](https://pytorch.org/docs/stable/generated/torch.nn.functional.scaled_dot_product_attention.html)

+PyTorch includes a native scaled dot-product attention (SDPA) operator as part of `torch.nn.functional`. This function

+encompasses several implementations that can be applied depending on the inputs and the hardware in use. See the

+[official documentation](https://pytorch.org/docs/stable/generated/torch.nn.functional.scaled_dot_product_attention.html)

or the [GPU Inference](https://huggingface.co/docs/transformers/main/en/perf_infer_gpu_one#pytorch-scaled-dot-product-attention)

page for more information.

-SDPA is used by default for `torch>=2.1.1` when an implementation is available, but you may also set

+SDPA is used by default for `torch>=2.1.1` when an implementation is available, but you may also set

`attn_implementation="sdpa"` in `from_pretrained()` to explicitly request SDPA to be used.

```

diff --git a/docs/source/en/model_doc/auto.md b/docs/source/en/model_doc/auto.md