Mirror of https://github.com/pytorch/pytorch.git (synced 2025-10-21 05:34:18 +08:00)

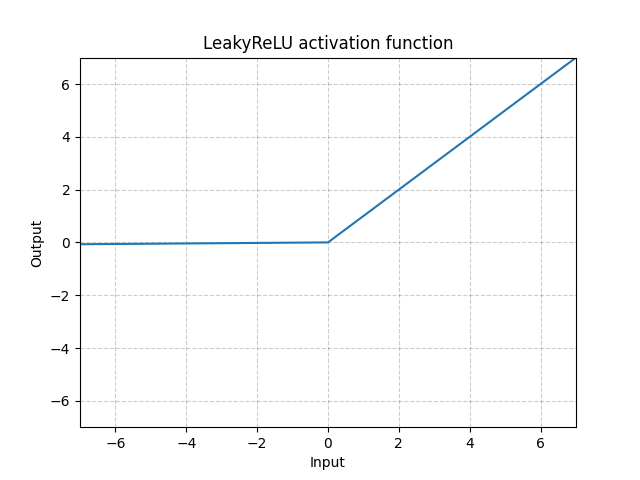

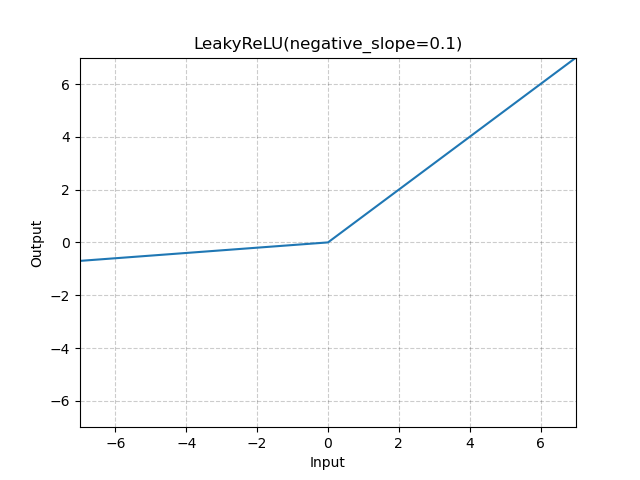

Fixes #56363, Fixes #78243

| [Before](https://pytorch.org/docs/stable/generated/torch.nn.LeakyReLU.html) | [After](https://docs-preview.pytorch.org/78508/generated/torch.nn.LeakyReLU.html) |
| --- | --- |

- Plot `LeakyReLU` with `negative_slope=0.1` instead of `negative_slope=0.01`
- Changed the title from `"{function_name} activation function"` to the name returned by `_get_name()` (with parameter info). The full list is attached at the end.
- Modernized the script and ran black on `docs/source/scripts/build_activation_images.py`. Apologies for the ugly diff.

```
ELU(alpha=1.0)
Hardshrink(0.5)
Hardtanh(min_val=-1.0, max_val=1.0)
Hardsigmoid()
Hardswish()
LeakyReLU(negative_slope=0.1)
LogSigmoid()
PReLU(num_parameters=1)
ReLU()
ReLU6()
RReLU(lower=0.125, upper=0.3333333333333333)
SELU()
SiLU()
Mish()
CELU(alpha=1.0)
GELU(approximate=none)
Sigmoid()
Softplus(beta=1, threshold=20)
Softshrink(0.5)
Softsign()
Tanh()
Tanhshrink()
```

cc @brianjo @mruberry @svekars @holly1238

Pull Request resolved: https://github.com/pytorch/pytorch/pull/78508
Approved by: https://github.com/jbschlosser
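The commit message does not say why the plotted slope was raised from 0.01 to 0.1. One plausible reading is that, on the script's fixed axes of -7 to 7, a slope of 0.01 is hard to tell apart from plain ReLU. The sketch below checks that arithmetic in pure Python. The `leaky_relu` helper is a hypothetical scalar stand-in for illustration, not the `torch.nn.LeakyReLU` module.

```python
def leaky_relu(x: float, negative_slope: float) -> float:
    """Scalar LeakyReLU: x for x >= 0, negative_slope * x otherwise."""
    return x if x >= 0 else negative_slope * x


X_MIN = -7.0        # left edge of the plotted range (the script's xlim)
AXIS_HEIGHT = 14.0  # the script's ylim spans -7 to 7

for slope in (0.01, 0.1):
    lowest = leaky_relu(X_MIN, slope)
    fraction = abs(lowest) / AXIS_HEIGHT
    print(
        f"negative_slope={slope}: f({X_MIN}) = {lowest:.2f}, "
        f"{fraction:.1%} of the axis height"
    )
```

At the left edge of the plot the curve dips by about 0.5% of the axis height with a slope of 0.01, against about 5% with a slope of 0.1, so only the larger slope produces a visibly tilted negative branch.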

80 lines

2.1 KiB

Python


"""

This script will generate input-output plots for all of the activation
functions. These are for use in the documentation, and potentially in
online tutorials.
"""

from pathlib import Path

import torch
import matplotlib
from matplotlib import pyplot as plt

matplotlib.use("Agg")


# Create a directory for the images, if it doesn't exist
ACTIVATION_IMAGE_PATH = Path(__file__).parent / "activation_images"

if not ACTIVATION_IMAGE_PATH.exists():
    ACTIVATION_IMAGE_PATH.mkdir()

# In a refactor, these ought to go into their own module or entry
# points so we can generate this list programmatically
functions = [
    torch.nn.ELU(),
    torch.nn.Hardshrink(),
    torch.nn.Hardtanh(),
    torch.nn.Hardsigmoid(),
    torch.nn.Hardswish(),
    torch.nn.LeakyReLU(negative_slope=0.1),
    torch.nn.LogSigmoid(),
    torch.nn.PReLU(),
    torch.nn.ReLU(),
    torch.nn.ReLU6(),
    torch.nn.RReLU(),
    torch.nn.SELU(),
    torch.nn.SiLU(),
    torch.nn.Mish(),
    torch.nn.CELU(),
    torch.nn.GELU(),
    torch.nn.Sigmoid(),
    torch.nn.Softplus(),
    torch.nn.Softshrink(),
    torch.nn.Softsign(),
    torch.nn.Tanh(),
    torch.nn.Tanhshrink(),
]


def plot_function(function, **args):
    """
    Plot a function on the current plot. The additional arguments may
    be used to specify color, alpha, etc.
    """
    xrange = torch.arange(-7.0, 7.0, 0.01)  # We need to go beyond 6 for ReLU6
    plt.plot(xrange.numpy(), function(xrange).detach().numpy(), **args)


# Step through all the functions
for function in functions:
    function_name = function._get_name()
    plot_path = ACTIVATION_IMAGE_PATH / f"{function_name}.png"
    if not plot_path.exists():
        # Start a new plot
        plt.clf()
        plt.grid(color="k", alpha=0.2, linestyle="--")

        # Plot the current function
        plot_function(function)

        plt.title(function)
        plt.xlabel("Input")
        plt.ylabel("Output")
        plt.xlim([-7, 7])
        plt.ylim([-7, 7])

        # And save it
        plt.savefig(plot_path)
        print(f"Saved activation image for {function_name} at {plot_path}")
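Because the loop skips any activation whose PNG already exists, rerunning the script after changing a plot (for example, a new `negative_slope`) leaves the old image in place until that file is deleted. The following is a minimal, stdlib-only sketch of that skip-if-exists behavior. The names `render_missing` and `fake_render` are hypothetical and exist only for this illustration; `fake_render` stands in for `plt.savefig` and writes placeholder bytes, not a real image.

```python
import tempfile
from pathlib import Path


def render_missing(names, out_dir, render):
    """Call render(path) only for names whose PNG is not yet in out_dir.

    Returns the list of names that were actually rendered.
    """
    out_dir.mkdir(parents=True, exist_ok=True)
    rendered = []
    for name in names:
        path = out_dir / f"{name}.png"
        if path.exists():
            continue  # same guard as the script: existing files are kept
        render(path)
        rendered.append(name)
    return rendered


def fake_render(path):
    # Hypothetical stand-in for plt.savefig; writes placeholder bytes.
    path.write_bytes(b"placeholder")


with tempfile.TemporaryDirectory() as tmp:
    out = Path(tmp) / "activation_images"
    names = ["ReLU", "LeakyReLU"]
    first = render_missing(names, out, fake_render)   # nothing exists yet
    second = render_missing(names, out, fake_render)  # everything is cached
    (out / "LeakyReLU.png").unlink()                  # force one regeneration
    third = render_missing(names, out, fake_render)
    print(first, second, third)
```

The second pass renders nothing, and the third pass renders only the image that was removed. This is why a stale plot has to be deleted by hand before the script will redraw it.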