1. Move cond to torch/_higher_order_ops

2. Fix a bug in map, which didn't respect tensor dtype when creating a new one from them. We cannot directly use empty_strided because boolean tensor created by empty_strided is not properly intialized so it causes error "load of value 190, which is not a valid value for type 'bool'" on clang asan environment on CI.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/108025

Approved by: https://github.com/zou3519

Fixes https://github.com/pytorch/pytorch/pull/102577#issuecomment-1650905536

Serializing to json is more stable, and renamed the API:

```

# Takes in a treespec and returns the serialized treespec as a string. Also optionally takes in a protocol version number.

def treespec_dumps(treespec: TreeSpec, protocol: Optional[int] = None) -> str:

# Takes in a serialized treespec and outputs a TreeSpec

def treespec_loads(data: str) -> TreeSpec:

```

If users want to register their own serialization format for a given pytree, they can go through the `_register_treespec_serializer` API which optionally takes in a `getstate` and `setstate` function.

```

_register_treespec_serializer(type_, *, getstate, setstate)

# Takes in the context, and outputs a json-dumpable context

def getstate(context: Context) -> DumpableContext:

# Takes in a json-dumpable context, and reconstructs the original context

def setstate(dumpable_context: DumpableContext) -> Context:

```

We will serialize to the following dataclass, and then json.dump this it to string.

```

class TreeSpec

type: Optional[str] # a string name of the type. null for the case of a LeafSpec

context: Optional[Any] # optional, a json dumpable format of the context

children_specs: List[TreeSpec],

}

```

If no getstate/setstate function is registered, we will by default serialize the context using `json.dumps/loads`. We will also serialize the type through `f"{typ.__module__}.{typ.__name__}"`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/106116

Approved by: https://github.com/zou3519

**Summary**

Add linear and linear-unary post-op quantization recipe to x86 inductor quantizer. For PT2E with Inductor. With this, the quantization path will add `quant-dequant` pattern for linear and linear-unary post op.

**Test plan**

python test/test_quantization.py -k test_linear_with_quantizer_api

python test/test_quantization.py -k test_linear_unary_with_quantizer_api

Pull Request resolved: https://github.com/pytorch/pytorch/pull/106781

Approved by: https://github.com/leslie-fang-intel, https://github.com/jgong5, https://github.com/jerryzh168

ghstack dependencies: #105818

When generating a wrapper call, we may have implicit resize applied to

the kernel's output. For example, for addmm(3d_tensor, 2d_tensor),

its output buffer is resized to a 2d tensor. This triggers a warning from

Aten's resize_output op:

"UserWarning: An output with one or more elements was resized since it had...

This behavior is deprecated, and in a future PyTorch release outputs will

not be resized unless they have zero elements..."

More importantly, the output shape is not the same as we would expect, i.e.

2d tensor v.s. 3d tensor.

This PR fixed the issue by injecting resize_(0) before calling the relevant

kernel and resize_(expected_shape) after the kernel call.

We also fixed a minor typo in the PR.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107848

Approved by: https://github.com/desertfire, https://github.com/jansel

This PR brings in a few inductor changes required for ROCm

~**1 - Introduction of a toggle for enforced channel last convolution fallbacks**~

This addition is split off into its own PR after some cleanup by @pragupta https://github.com/pytorch/pytorch/pull/107812

**2 - Addition of ROCm specific block sizes**

We are now able to support the MAX_AUTOTUNE mode on ROCm, we are proposing conditions to allow us to finetune our own block tuning. Currently triton on ROCm does not benefit from pipelining so we are setting all configs to `num_stages=1` and we have removed some upstream tunings on ROCm to avoid running out of shared memory resources.

In the future we will provide more optimised tunings for ROCm but for now this should mitigate any issues

~**3 - Addition of device_type to triton's compile_meta**~

~Proposing this addition to `triton_heuristics.py`, Triton on ROCm requires device_type to be set to hip https://github.com/ROCmSoftwarePlatform/triton/pull/284 suggesting to bring this change in here so we can pass down the correct device type to triton.~

This change is split off and will arrive in the wheel update PR https://github.com/pytorch/pytorch/pull/107600 leaving this PR to focus on the ROCm specific block sizes.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107584

Approved by: https://github.com/jithunnair-amd, https://github.com/jansel, https://github.com/eellison

This reworks the DORT backend factory function to support the options kwarg of torch.compile, and defines a concrete OrtBackendOptions type that can be used to influence the backend.

Caching is also implemented in order to reuse backends with equal options.

Wrapping the backend in auto_autograd also becomes an option, which allows `OrtBackend` to always be returned as the callable for torch.compile; wrapping happens internally if opted into (True by default).

Lastly, expose options for configuring preferred execution providers (will be attempted first), whether or not to attempt to infer an ORT EP from a torch found device in the graph or inputs, and finally the default/fallback EPs.

### Demo

The following demo runs `Gelu` through `torch.compile(backend="onnxrt")` using various backend options through a dictionary form and a strongly typed form. It additionally exports the model through both the ONNX TorchScript exporter and the new TorchDynamo exporter.

```python

import math

import onnx.inliner

import onnxruntime

import torch

import torch.onnx

torch.manual_seed(0)

class Gelu(torch.nn.Module):

def forward(self, x):

return x * (0.5 * torch.erf(math.sqrt(0.5) * x) + 1.0)

@torch.compile(

backend="onnxrt",

options={

"preferred_execution_providers": [

"NotARealEP",

"CPUExecutionProvider",

],

"export_options": torch.onnx.ExportOptions(dynamic_shapes=True),

},

)

def dort_gelu(x):

return Gelu()(x)

ort_session_options = onnxruntime.SessionOptions()

ort_session_options.log_severity_level = 0

dort_gelu2 = torch.compile(

Gelu(),

backend="onnxrt",

options=torch.onnx._OrtBackendOptions(

preferred_execution_providers=[

"NotARealEP",

"CPUExecutionProvider",

],

export_options=torch.onnx.ExportOptions(dynamic_shapes=True),

ort_session_options=ort_session_options,

),

)

x = torch.randn(10)

torch.onnx.export(Gelu(), (x,), "gelu_ts.onnx")

export_output = torch.onnx.dynamo_export(Gelu(), x)

export_output.save("gelu_dynamo.onnx")

inlined_model = onnx.inliner.inline_local_functions(export_output.model_proto)

onnx.save_model(inlined_model, "gelu_dynamo_inlined.onnx")

print("Torch Eager:")

print(Gelu()(x))

print("DORT:")

print(dort_gelu(x))

print(dort_gelu2(x))

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107973

Approved by: https://github.com/BowenBao

**Summary**

The latest check-in a0cfaf0688 for the conv-bn folding assumes the graph is captured by the new graph capture API `torch._export.capture_pre_autograd_graph`. Since we still need to use the original graph capture API `torch._dynamo_export` in 2.1 release. So, this check-in made negative impact to workloads' performance heavily. Made this PR to fix this issue by trying to make the conv-bn folding function workable with both new and original graph capture API.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107951

Approved by: https://github.com/jgong5, https://github.com/jerryzh168

ghstack dependencies: #106836, #106838, #106958

Compared to #104848, this PR makes a step further: when the enable_sparse_support decorator is applied to `torch.autograd.gradcheck`, the resulting callable is equivalent to `torch.autograd.gradcheck` with an extra feature of supporting functions that can have input sparse tensors or/and can return sparse tensors.

At the same time, the underlying call to `torch.autograd.gradcheck` will operate on strided tensors only. This basically means that torch/autograd/gradcheck.py can be cleaned up by removing the code that deals with sparse tensors.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107150

Approved by: https://github.com/albanD, https://github.com/amjames, https://github.com/cpuhrsch

ghstack dependencies: #107638, #107777

Resolves https://github.com/pytorch/pytorch/issues/107097

After this PR, instead of

```python

torch.sparse_coo_tensor(indices, values, size)._coalesced_(is_coalesced)

```

(that does not work in the autograd context, see #107097), use

```python

torch.sparse_coo_tensor(indices, values, size, is_coalesced=is_coalesced)

```

All sparse coo factory functions that take indices as input support the `is_coalesced` argument.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107638

Approved by: https://github.com/cpuhrsch

This PR relands https://github.com/pytorch/pytorch/pull/106827 which get reverted because of causing compilation error for some ads model.

Yanbo provide a repro in one of the 14k model ( `pytest ./generated/test_KaiyangZhou_deep_person_reid.py -k test_044`). This is also the model I used to confirm the fix and come up with a unit test. In this model, we call `tritoin_heuristics.triton_config` with size_hints [2048, 2]. Previously this would result in a trition config with XBLOCK=2048 and YBLOCK=2 . But since we change the mapping between size_hints and XYZ dimension, we now generate a triton config with XBLOCK=2 and YBLOCK=2048. This fails compilation since we set max YBLOCK to be 1024.

My fix is to make sure we never generate a triton config that exceeds the maximum block size.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107902

Approved by: https://github.com/jansel

Summary: This fixes the no bias case for conv annotations.

Previously this would result in an index out of bounds, since

the new aten.conv2d op may not have the bias arg (unlike the

old aten.convolution op). This was not caught because of a lack

of test cases, which are added in this commit.

Test Plan:

python test/test_quantization.py TestQuantizePT2E.test_qat_conv_no_bias

python test/test_quantization.py TestQuantizePT2E.test_qat_conv_bn_relu_fusion_no_conv_bias

Reviewers: jerryzh168, kimishpatel

Subscribers: jerryzh168, kimishpatel

Differential Revision: [D48696874](https://our.internmc.facebook.com/intern/diff/D48696874)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107971

Approved by: https://github.com/jerryzh168

Given standalone generates args anyways, it seems like it would be more convenient if it explicitly used a random port by default instead of trying to use 29400.

That way users can directly go with `--standalone` instead of having to spell out `--rdzv-backend=c10d --rdzv-endpoint=localhost:0`

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107734

Approved by: https://github.com/H-Huang

For max_pooling code:

```

#pragma GCC ivdep

for(long i2=static_cast<long>(0L); i2<static_cast<long>(56L); i2+=static_cast<long>(1L))

{

for(long i3=static_cast<long>(0L); i3<static_cast<long>(64L); i3+=static_cast<long>(16L))

{

auto tmp0 = at::vec::Vectorized<int>(static_cast<int>((-1L) + (2L*i1)));

auto tmp1 = at::vec::Vectorized<int>(static_cast<int>(0));

auto tmp2 = to_float_mask(tmp0 >= tmp1);

auto tmp3 = at::vec::Vectorized<int>(static_cast<int>(112));

auto tmp4 = to_float_mask(tmp0 < tmp3);

auto tmp5 = tmp2 & tmp4;

auto tmp6 = at::vec::Vectorized<int>(static_cast<int>((-1L) + (2L*i2)));

auto tmp7 = to_float_mask(tmp6 >= tmp1);

auto tmp8 = to_float_mask(tmp6 < tmp3);

auto tmp9 = tmp7 & tmp8;

auto tmp10 = tmp5 & tmp9;

auto tmp11 = [&]

{

auto tmp12 = at::vec::Vectorized<bfloat16>::loadu(in_ptr0 + static_cast<long>((-7232L) + i3 + (128L*i2) + (14336L*i1) + (802816L*i0)), 16);

load

auto tmp13 = cvt_lowp_fp_to_fp32<bfloat16>(tmp12);

return tmp13;

}

;

auto tmp14 = decltype(tmp11())::blendv(at::vec::Vectorized<float>(-std::numeric_limits<float>::infinity()), tmp11(), to_float_mask(tmp10));

```

the index of ```tmp12 ``` may be a correct index, such as ```i1=0, i2=0, i3=0```, the index is ```-7232L```, it is not a valid index. We may meet segmentation fault error when we call ```tmp11()```, the original behavior is that only the ```tmp10```(index check variable) is true, we can safely get the value, this PR will support masked_load to fixing this issue.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107670

Approved by: https://github.com/jgong5, https://github.com/jansel

In almost all cases this is only included for writing the output formatter, which

only uses `std::ostream` so including `<ostream>` is sufficient.

The istream header is ~1000 lines so the difference is non-trivial.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/106914

Approved by: https://github.com/lezcano

**Summary**

Enable the `dequant pattern` promotion pass in inductor. Since in the qconv weight prepack pass, we will match the `dequant->conv2d` pattern. If the `dequant pattern` has multi user nodes, it will fail to be matched.

Taking the example of

```

conv1

/ \

conv2 conv3

```

After quantization flow, it will generate pattern as

```

dequant1

|

conv1

|

quant2

|

dequant2

/ \

conv2 conv3

```

We need to duplicate `dequant2` into `dequant2` and `dequant3`, in order to make `dequant2->conv2` and `dequant3->conv3` pattern matched.

**Test Plan**

```

python -m pytest test_mkldnn_pattern_matcher.py -k test_dequant_promotion

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/104590

Approved by: https://github.com/jgong5, https://github.com/eellison

ghstack dependencies: #104580, #104581, #104588

Instead of hardcoding a new callback creation using 'convert_frame',

add an attribute to both callbacks that implement 'self cloning with new

backend', so DDPOptimizer can invoke this in a consistent way.

Fixes#107686

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107834

Approved by: https://github.com/ezyang

Summary:

When the `cat` inputs' sizes and the `split_sizes` of the downstream `split_with_sizes` match, the `cat` + `split_with_sizes` constellation can be eliminated. E.g. here:

```

@torch.compile

def fn(a, b, c):

cat = torch.ops.aten.cat.default([a, b, c], 1)

split_with_sizes = torch.ops.aten.split_with_sizes.default(cat, [2, 3, 5], 1)

return [s ** 2 for s in split_with_sizes]

inputs = [

torch.randn(2, 2, device="cuda"),

torch.randn(2, 3, device="cuda"),

torch.randn(2, 5, device="cuda"),

]

output = fn(*inputs)

```

This PR adds a new fx pass for such elimination. The new pass is similar to the existing [`splitwithsizes_cat_replace`](b18e1b684a/torch/_inductor/fx_passes/post_grad.py (L508)), but considers the ops in the opposite order.

Test Plan:

```

$ python test/inductor/test_pattern_matcher.py

...

----------------------------------------------------------------------

Ran 21 tests in 46.450s

OK

```

Reviewers:

Subscribers:

Tasks:

Tags:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107956

Approved by: https://github.com/jansel

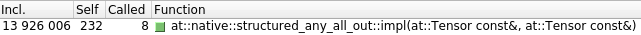

**Summary**

Update onednn from v2.7.3 to v3.1.1.

It is bc-breaking as some APIs are changed on oneDNN side. Changes include:

- PyTorch code where oneDNN is directly called

- Submodule `third_party/ideep` to adapt to oneDNN's new API.

- CMAKE files to fix build issues.

**Test plan**

Building issues and correctness are covered by CI checks.

For performance, we have run TorchBench models to ensure there is no regression. Below is the comparison before and after oneDNN update.

Note:

- Base commit of PyTorch: da322ea

- CPU: Intel(R) Xeon(R) Platinum 8380 CPU @ 2.30GHz (Ice Lake)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/97957

Approved by: https://github.com/jgong5, https://github.com/jerryzh168

Update to ROCm triton pinned commit for the 2.1 branch cut off.

As part of this we are updating `build_triton_wheel.py` and `build-triton-wheel.yml` to support building ROCm triton wheels through pytorch/manylinux-rocm to avoid the need of slowly downloading rpm libraries for ROCm in the cpu manylinux builder image and avoiding the need to maintain a conditional file with hard coded repositories from radeon.org for every ROCm release.

This new approach will allow us to build wheels faster in a more easily maintainable way.

This PR also brings in a required change as Triton on ROCm requires device_type to be set to hip so we can pass down the correct device type to triton (https://github.com/ROCmSoftwarePlatform/triton/pull/284).

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107600

Approved by: https://github.com/jansel, https://github.com/jithunnair-amd

Summary:

This is a duplicate PR of 102133, which was reverted because it was

failing internal tests.

It seems like that internal builds did not like my guard to check if

cuSPARSELt was available or not.

Test Plan: python test/test_sparse_semi_structured.py

Differential Revision: D48440330

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107398

Approved by: https://github.com/cpuhrsch

Previously when we found some input or output mismatch between original args / traced result vs. graph-captured input / output, we would have a pretty sparse error message. (This might be partly due to the urge to reuse the same code for matching both inputs and outputs.)

With this PR we now point out which input or output is problematic, what its type is, and also present the expected types along with descriptions of what they mean. We don't suggest any fixes, but the idea is that it should be evident what went wrong looking at the error message.

Differential Revision: [D48668059](https://our.internmc.facebook.com/intern/diff/D48668059/)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107907

Approved by: https://github.com/gmagogsfm

Use `view_as_real` to cast complex into a pair of floats and then it becomes just another binary operator.

Enable `polar` and `view_as_complex` consistency tests, but skip `test_output_grad_match_polar_cpu` as `mul` operator is yet not supported

Remove redundant `#ifdef __OBJC__` and capture and re-throw exceptions captured during `createCacheBlock` block.

Fixes https://github.com/pytorch/pytorch/issues/78503

TODOs(in followup PRs):

- Implement backwards (requires complex mul and sgn)

- Measure the perf impact of computing the strides on the fly rather than ahead of time (unrelated to this PR)

Partially addresses https://github.com/pytorch/pytorch/issues/105665

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107324

Approved by: https://github.com/albanD

Summary:

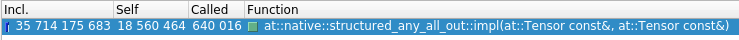

Daohang report this pattern in f469463749

{F1074472207}

{F1074473348}

Hence, we can fuse the tanh after same split.

Typically the pattern looks like split->getitem0,...n-> tanh(geitem 0,..., n). Hence, we search for parent node of tahn nodes and the node should be getitem(parent, index). If tanh is after same split node, parent nodes of getitem nodes should be same.

Test Plan:

```

[jackiexu0313@devgpu005.cln5 ~/fbsource/fbcode (c78736187)]$ buck test mode/dev-nosan //caffe2/test/inductor:group_batch_fusion

File changed: fbcode//caffe2/test/inductor/test_group_batch_fusion.py

Buck UI: https://www.internalfb.com/buck2/df87affc-d294-4663-a50d-ebb71b98070d

Test UI: https://www.internalfb.com/intern/testinfra/testrun/9570149208311124

Network: Up: 0B Down: 0B

Jobs completed: 16. Time elapsed: 1:19.9s.

Tests finished: Pass 6. Fail 0. Fatal 0. Skip 0. Build failure 0

```

Differential Revision: D48581140

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107881

Approved by: https://github.com/yanboliang

> capture_error_mode (str, optional): specifies the cudaStreamCaptureMode for the graph capture stream.

Can be "global", "thread_local" or "relaxed". During cuda graph capture, some actions, such as cudaMalloc,

may be unsafe. "global" will error on actions in other threads, "thread_local" will only error for

actions in the current thread, and "relaxed" will not error on these actions.

Inductor codegen is single-threaded, so it should be safe to enable "thread_local" for inductor's cuda graph capturing. We have seen errors when inductor cudagraphs has been used concurrently with data preprocessing in other threads.

Differential Revision: [D48656014](https://our.internmc.facebook.com/intern/diff/D48656014)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107407

Approved by: https://github.com/albanD, https://github.com/eqy

The c10d socket and gloo listener both set their buffer size to 2048 which causes connection issue at 4k scale. This diff sets the buffer size to `-1` which uses `somaxconn` as the actual buffer size, aiming to enable 24k PG init without crash. The experiment shows the ability to successful creation of 12k ranks without crash.

split the original diff for OSS vs. internal.

Caution: we need the change on both gloo and c10d to enable 12k PG init. Updating only one side may not offer the benefit.

Differential Revision: [D48634654](https://our.internmc.facebook.com/intern/diff/D48634654/)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107878

Approved by: https://github.com/H-Huang, https://github.com/fduwjj

Summary:

This is a stride based attribute for a tensor available in Python.

This can help inspect tensors generated using `torch.empty_permuted(.., physical_layout, ...)`, where physical_layout should match the dim_order returned here. `empty_permuted` will be renamed to use dim_order as the param name in the future. And also help Executorch export pipeline with implementing dim_order based tensors.

Differential Revision: D48134476

Pull Request resolved: https://github.com/pytorch/pytorch/pull/106835

Approved by: https://github.com/ezyang

There has been several reports of difficulty in using OpenMP in MacOS, e.g.: https://github.com/pytorch/pytorch/issues/95708 . And there are several PRs to fix it, e.g.: https://github.com/pytorch/pytorch/pull/93895 and https://github.com/pytorch/pytorch/pull/105136 .

This PR tries to explain the root cause, and provide a holistic and systematic way to fix the problem.

For the OpenMP program below to run, the compiler must:

- Be able to process macros like `#pragma omp parallel`

- Be able to find header files like `<omp.h>`

- Be able to link to a library file like `libomp`

```C++

#include <omp.h>

int main()

{

omp_set_num_threads(4);

#pragma omp parallel

{

int id = omp_get_thread_num();

int nthrds = omp_get_num_threads();

int y = id * nthrds;

}

}

```

In MacOS, there might be different compiler tools:

- Apple builtin `clang++`, installed with `xcode commandline tools`. The default `g++` and `clang++` commands both point to the Apple version, as can be confirmed by `g++ --version`

- Public `clang++`, can be installed via `brew install llvm`.

- Public GNU compiler `g++`, can be installed via `brew install gcc`.

Among these compilers, public `clang++` from LLVM and `g++` from GNU both support OpenMP with the flag `-fopenmp`. They have shipped with `<omp.h>` and `libomp` support. The only problem is that Apple builtin `clang++` does not contain `<omp.h>` or `libomp`. Therefore, users can follow the steps to enable OpenMP support:

- Use a compiler other than Apple builtin clang++ by specifying the `CXX` environment variable

- Use `conda install llvm-openmp` to place the header files and lib files inside conda environments (and can be discovered by `CONDA_PREFIX`)

- Use `brew install libomp` to place the header files and lib files inside brew control (and can be discovered by `brew --prefix libomp`)

- Use a custom install of OpenMP by specifying an `OMP_PREFIX` where header files and lib files can be found.

This PR reflects the above logic, and might serve as a final solution for resolving OpenMP issues in MacOS.

This PR also resolves the discussion raised in https://dev-discuss.pytorch.org/t/can-we-add-a-default-backend-when-openmp-is-not-available/1382/5 with @jansel , and provide a way for brew users to automatically find the installation via `brew --prefix libomp`, and provide instructions to switch to another compiler by `CXX` environment variable.

I have tested the following code in different conditions:

- Use `CXX` to point to an LLVM-clang++, works fine.

- Use `CXX` to point to a GNU g++, not working because the compiler flag `-Xclang`. Manually removing the code `base_flags += " -Xclang"` works.

- Use default compiler and `conda install llvm-openmp`, works fine

- Use default compiler and `brew install libomp`, works fine

- Do nothing, compiler complains `omp.h` not found.

```python

import torch

@torch.compile

def f(x):

return x + 1

f(torch.randn(5, 5))

```

If we want the code to be more portable, we can also deal with the `-Xclang` issue.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107111

Approved by: https://github.com/jgong5, https://github.com/jansel

Adds `SingletonSymNodeImpl` (alternatively, `SkolemSymNodeImpl`). This is a int-like object that only allows the`eq` operation; any other operation produces an error.

The main complexity is that we require operations that dispatch to SymNode must take and return SymNodes, but when performing operations involving `SingletonSymNodeImpl`, operations involving SymNode can return non-SymNode bools. For more discussion see [here](https://docs.google.com/document/d/18iqMdnHlUnvoTz4BveBbyWFi_tCRmFoqMFdBHKmCm_k/edit)

- Introduce `ConstantSymNodeImpl` a generalization of `LargeNegativeIntSymNodeImpl` and replace usage of `LargeNegativeIntSymNodeImpl` in SymInt.

- Also use ConstantSymNodeImpl to enable SymBool to store its data on a SymNode. Remove the assumption that if SymBool holds a non-null SymNode, it must be symbolic.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107089

Approved by: https://github.com/ezyang

ghstack dependencies: #107839

Although there are some performance benefits by enforcing NHWC convolutions as inductor's fallback method for all hardware this may not be the case. Currently on ROCm we are seeing some slow downs in gcnArch that do not have optimal NHWC implementations and would like to introduce some control on this behavior in pytorch. On ROCm MI200 series we will default to the enforced last channels behavior aligned with the rest of pytorch but on non-MI200 series we will disable the forced layout.

For now we are using torch.cuda.get_device_name(0) for this control but we will replace with gcnArchName when https://github.com/pytorch/pytorch/pull/107477 lands.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107812

Approved by: https://github.com/jataylo, https://github.com/eellison

<!--

copilot:summary

-->

### <samp>🤖 Generated by Copilot at d02bfb0</samp>

Add environment name for S3 HTMLs workflow. This allows secure and controlled access to the secrets and approval for updating the PyTorch whl indexes on S3.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107889

Approved by: https://github.com/huydhn

The way the aot autograd sequence_nr tracking works is that we run the aot export logic, the dynamo captured forward graph is run under an fx.Interpreter, which iterates through the nodes of the forward graph while setting the `current_metadata`.

Since during backward what is run doesn't correspond to any node during forward, we fallback to the global `current_metadata`. And since this global metadata is ends up being shared between runs, that leads to weirdness if we forget to reset things, e.g., depending whether this is the first test run, the printed results will be different.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107210

Approved by: https://github.com/bdhirsh

### Description

The `download_url_to_file` function in torch.hub uses a temporary file to prevent overriding a local working checkpoint with a broken download.This temporary file is created using `NamedTemporaryFile`. However, since `NamedTemporaryFile` creates files with overly restrictive permissions (0600), the resulting download will not have default permissions and will not respect umask on Linux (since moving the file will retain the restrictive permissions of the temporary file). This is especially problematic when trying to share model checkpoints between multiple users as other users will not even have read access to the file.

The change in this PR fixes the issue by using custom code to create the temporary file without changing the permissions to 0600 (unfortunately there is no way to override the permissions behaviour of existing Python standard library code). This ensures that the downloaded checkpoint file correctly have the default permissions applied. If a user wants to apply more restrictive permissions, they can do so via usual means (i.e. by setting umask).

See these similar issues in other projects for even more context:

* https://github.com/borgbackup/borg/issues/6400

* https://github.com/borgbackup/borg/issues/6933

* https://github.com/zarr-developers/zarr-python/issues/325

### Issue

https://github.com/pytorch/pytorch/issues/81297

### Testing

Extended the unit test `test_download_url_to_file` to also check permissions.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/82869

Approved by: https://github.com/vmoens

Summary:

(From Brian Hirsh)

Description copied from what I put in a comment in this PR: https://github.com/pytorch/pytorch/pull/106329

So, the slightly-contentious idea behind this PR is that lower in the stack, I updated torch._decomps.get_decomps() to check not only the decomp table to see if a given op has a decomposition available, but to also check the dispatcher for any decomps registered to the CompositeImplicitAutograd key (link: https://github.com/pytorch/pytorch/pull/105865/files#diff-7008e894af47c01ee6b8eb94996363bd6c5a43a061a2c13a472a2f8a9242ad43R190)

There's one problem though: we don't actually make any hard guarantees that a given key in the dispatcher points does or does not point to a decomposition. We do rely pretty heavily, however, on the fact that everything registered to the CompositeImplicitAutograd key is in fact a decomposition into other ops.

QAT would like this API to faithfully return "the set of all decomps that would have run if we had traced through the dispatcher". However, native_batch_norm is an example of an op that has a pre-autograd decomp registered to it (through op.py_impl(), but the decomp is registered directly to the Autograd key instead of being registered to the CompositeImplicitAutograd key.

If we want to provide a guarantee to QAT that they can programatically access all decomps that would have run during tracing, then we need to make sure that every decomp we register to the Autograd key is also registered to the CompositeImplicitAutograd key.

This might sound kind of painful (since it requires auditing), but I think in practice this basically only applies to native_batch_norm.

Test Plan: python test/test_decomp.py

Differential Revision: D48607575

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107791

Approved by: https://github.com/jerryzh168, https://github.com/SherlockNoMad

Fixes#92000

The documentation at https://pytorch.org/docs/stable/generated/torch.nn.MultiLabelSoftMarginLoss.html#multilabelsoftmarginloss states:

> label targets padded by -1 ensuring same shape as the input.

However, the shape of input and target tensor are compared, and an exception is raised if they differ in either dimension 0 or 1. Meaning the label targets are never padded. See the code snippet below and the resulting output. The documentation is therefore adjusted to:

> label targets must have the same shape as the input.

```

import torch

import torch.nn as nn

# Create some example data

input = torch.tensor(

[

[0.8, 0.2, -0.5],

[0.1, 0.9, 0.3],

]

)

target1 = torch.tensor(

[

[1, 0, 1],

[0, 1, 1],

[0, 1, 1],

]

)

target2 = torch.tensor(

[

[1, 0],

[0, 1],

]

)

target3 = torch.tensor(

[

[1, 0, 1],

[0, 1, 1],

]

)

loss_func = nn.MultiLabelSoftMarginLoss()

try:

loss = loss_func(input, target1).item()

except RuntimeError as e:

print('target1 ', e)

try:

loss = loss_func(input, target2).item()

except RuntimeError as e:

print('target2 ', e)

loss = loss_func(input, target3).item()

print('target3 ', loss)

```

output:

```

target1 The size of tensor a (3) must match the size of tensor b (2) at non-singleton dimension 0

target2 The size of tensor a (2) must match the size of tensor b (3) at non-singleton dimension 1

target3 0.6305370926856995

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107817

Approved by: https://github.com/mikaylagawarecki

Summary:

This relands #107601, which was reverted due to the new test failing in the internal CI. Here we skip the new test (as well as the existing tests in `test_aot_inductor.py`, as those are also failing in the internal CI).

Test Plan:

```

$ python test/inductor/test_aot_inductor.py

...

----------------------------------------------------------------------

Ran 5 tests in 87.309s

OK

```

Reviewers:

Subscribers:

Tasks:

Tags:

Differential Revision: [D48623171](https://our.internmc.facebook.com/intern/diff/D48623171)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107814

Approved by: https://github.com/eellison

Cap opset version at 17 for torch.onnx.export and suggest users to use the dynamo exporter. Warn users instead of failing hard because we should still allow users to create custom symbolic functions for opset>17.

Also updates the default opset version by running `tools/onnx/update_default_opset_version.py`.

Fixes#107801Fixes#107446

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107829

Approved by: https://github.com/BowenBao

Currently there are 4 cases where contraint violation errors are raised, but the error messages are (a) inconsistent in their information content (b) worded in ways that are difficult to understand for the end user.

This diff cuts one of the cases that can never be reached, and makes the other 3

(a) consistent, e.g. they all point out that some values in the given range may not work, citing a reason and asking the user to run with logs to follow up

(b) user-friendly, e.g., compiler-internal info is cut out or replaced with user-facing syntax.

Differential Revision: D48576608

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107790

Approved by: https://github.com/tugsbayasgalan, https://github.com/angelayi

Summary: Previously serializing graphs using map would error

because map returns a singleton tensor list rather than a

single tensor. So this diff adds support for if a higher order operator

returns a list of tensors as output.

We also run into an issue with roundtripping the source_fn on

map nodes/subgraphs. The source_fn originally is

<functorch.experimental._map.MapWrapper object at 0x7f80a0549930>, which

serializes to `functorch.experimental._map.map`. However, we are unable

to construct the function from this string. This should be fixed once

map becomes a fully supported operator like

torch.ops.higher_order.cond.

Test Plan:

Reviewers:

Subscribers:

Tasks:

Tags:

Differential Revision: [D48631302](https://our.internmc.facebook.com/intern/diff/D48631302)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107837

Approved by: https://github.com/zhxchen17

ghstack dependencies: #107818

Some NvidaTRT folks were asking for a way to integrate the serialization of custom objects with export's serialization. After some discussion (more background [here](https://docs.google.com/document/d/1lJfxakmgeoEt50inWZ53MdUtOSa_0ihwCuPy_Ak--wc/edit)), we settled on a way for users to register their custom object's serializer/deserializer functions.

Since TorchScript's `.def_pickle` already exists for [registering custom classes](https://pytorch.org/tutorials/advanced/torch_script_custom_classes.html), and `tensorrt.ICudaEngine` already contains a `.def_pickle` implementation, we'll start off by reusing the existing framework and integrating it with export's serialization.

TorchScript's `.def_pickle` requires users to register two functions, which end up being the `__getstate__` and `__setstate__` methods on the class. The semantics of `__getstate__` and `__setstate__` in TorchScript are equivalent to that of Python pickle modules. This is then registered using pybind's `py::pickle` function [here](https://www.internalfb.com/code/fbsource/[f44e048145e4697bccfaec300798fce7daefb858]/fbcode/caffe2/torch/csrc/jit/python/script_init.cpp?lines=861-916) to be used with Python's pickle to initialize a ScriptObject with the original class, and set the state back to what it used to be.

I attempted to call `__getstate__` and `__setstate__` directly, but I couldn't figure out how to initial the object to be called with `__setstate__` in python. One option would be to create a `torch._C.ScriptObject` and then set the class and call `__setstate__`, but there is no constructor initialized for ScriptObjects. Another option would be to construct an instance of the serialized class itself, but if the class constructor required arguments, I wouldn't know what to initialize it with. In ScriptObject's `py::pickle` registration it directly creates the object [here](https://www.internalfb.com/code/fbsource/[f44e048145e4697bccfaec300798fce7daefb858]/fbcode/caffe2/torch/csrc/jit/python/script_init.cpp?lines=892-906), which is why I was thinking that just directly using Python's `pickle` will be ok since it is handled here.

So, what I did is that I check if the object is pickle-able, meaning it contains `__getstate__` and `__setstate__` methods, and if so, I serialize it with Python's pickle. TorchScript does have its own implementation of [pickle/unpickle](https://www.internalfb.com/code/fbsource/[59cbc569ccbcaae0db9ae100c96cf0bae701be9a][history]/fbcode/caffe2/torch/csrc/jit/serialization/pickle.h?lines=19%2C82), but it doesn't seem to have pybinded functions callable from python.

A question is -- is it ok to combine this pickle + json serialization?

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107666

Approved by: https://github.com/gmagogsfm

Summary:

After we compile dense arch, we observe split-linear-cat pattern. Hence, we want to use bmm fusion + split cat pass to fuse the pattern as torch.baddmm.

Some explanation why we prefer pre grad:

1) We need to add bmm fusion before split cat pass which is in pre grad pass to remove the new added stack and unbind node with the original cat/split node

2) Post grad does not support torch.stack/unbind. There is a hacky workaround but may not be landed in short time.

Test Plan:

# unit test

```

buck test mode/dev-nosan //caffe2/test/inductor:group_batch_fusion

[jackiexu0313@devgpu005.cln5 ~/fbsource/fbcode (f0ff3e3fc)]$ buck test mode/dev-nosan //caffe2/test/inductor:group_batch_fusion

File changed: fbcode//caffe2/test/inductor/test_group_batch_fusion.py

Buck UI: https://www.internalfb.com/buck2/189dd467-d04d-43e5-b52d-d3b8691289de

Test UI: https://www.internalfb.com/intern/testinfra/testrun/5910974704097734

Network: Up: 0B Down: 0B

Jobs completed: 14. Time elapsed: 1:05.4s.

Tests finished: Pass 5. Fail 0. Fatal 0. Skip 0. Build failure 0

```

# local test

```

=================Single run start========================

enable split_cat_pass for control group

================latency analysis============================

latency is : 73.79508209228516 ms

=================Single run start========================

enable batch fusion for control group

enable split_cat_pass for control group

================latency analysis============================

latency is : 67.94447326660156 ms

```

# e2e test

todo add e2e test

Differential Revision: D48539721

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107759

Approved by: https://github.com/yanboliang

Add new fused_attention pattern matcher for Inductor, in order to make more models call the op SDPA.

The following models would call SDPA due to the added pattern:

For HuggingFace

- AlbertForMaskedLM

- AlbertForQuestionAnswering

- BertForMaskedLM

- BertForQuestionAnswering

- CamemBert

- ElectraForCausalLM

- ElectraForQuestionAnswering

- LayoutLMForMaskedLM

- LayoutLMForSequenceClassification

- MegatronBertForCausalLM

- MegatronBertForQuestionAnswering

- MobileBertForMaskedLM

- MobileBertForQuestionAnswering

- RobertaForCausalLM

- RobertaForQuestionAnswering

- YituTechConvBert

For TorchBench

- llama

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107578

Approved by: https://github.com/mingfeima, https://github.com/XiaobingSuper, https://github.com/jgong5, https://github.com/eellison, https://github.com/jansel

As the title says, I was trying to test the functional collectives, and, when printing the resulting tensors, sometimes they wouldn't have finished the Async operation yet. According to the comments in the file, "AsyncTensor wrapper applied to returned tensor, which issues wait_tensor() at the time of first use". This is true in most cases, but not when print() is your first use. This PR fixes that.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107808

Approved by: https://github.com/fduwjj

_enable_dynamo_cache_lookup_profiler used to get turned on when running `__enter__` or `__exit__` with the profiler. But it's possible to turn the profiler on and off without the context manager (e.g. with a schedule and calling `.step()`). Instead, we should put these calls (which are supposed to be executed when the profiler turns on/off) where `_enable_profiler()` and `_disable_profiler()` are called.

This puts `_enable_dynamo_cache_lookup_profiler` and `_set_is_profiler_enabled` into `_run_on_profiler_(start|stop)` and calls that on the 3 places where `_(enable|disable)_profiler` get called.

Differential Revision: [D48619818](https://our.internmc.facebook.com/intern/diff/D48619818)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107720

Approved by: https://github.com/wconstab

Moves the logic to casting state to match parameters into a hook so that users can choose to enable their hooks before or after the casting has happened.

With this, there is a little bit of redundancy of the id_map building and the check that the param groups are still aligned in length.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/106725

Approved by: https://github.com/albanD

This PR looks big, but it's mostly just refactorings with a bit of dead code deletion. Exceptions are:

- Some metric emissions were changed to comply with the new TD format

- Some logging changes

- We now run tests in three batches (highly_relevant, probably_relevant, unranked_relevance) instead of the previous two (prioritized and general)

Refactorings done:

- Moves all test reordering code to the new TD framework

- Refactors run_test.py to cleanly support multiple levels of test priorities

- Deletes some dead code that was originally written for logging

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107071

Approved by: https://github.com/clee2000, https://github.com/huydhn

I notice a curious case on https://github.com/pytorch/pytorch/pull/107508 where there was one broken trunk failure and the PR was merged with `merge -ic`. Because the failure had been classified as unrelated, I expected to see a no-op force merge here. However, it showed up as a force merge with failure.

The record on Rockset reveals https://github.com/pytorch/pytorch/pull/107508 has:

* 0 broken trunk check (unexpected, this should be 1 as Dr. CI clearly say so)

* 1 ignore current check (unexpected, this should be 0 and the failure should be counted as broken trunk instead)

* 3 unstable ROCm jobs (expected)

It turns out that ignore current takes precedence over flaky and broken trunk classification. This might have been the expectation in the past but I think that's not the case now. The bot should be consistent with what is shown on Dr. CI. The change here is to make flaky, unstable, and broken trunk classification to take precedence over ignore current. Basically, we only need to ignore new or unrecognized failures that have yet been classified.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107761

Approved by: https://github.com/clee2000

When exporting dropout with cpu tensor, we get following graph module

```

class GraphModule(torch.nn.Module):

def forward(self, arg0_1: f32[512, 10]):

empty_memory_format: f32[512, 10] = torch.ops.aten.empty.memory_format([512, 10], dtype = torch.float32, layout = torch.strided, device = device(type='cpu'), pin_memory = False, memory_format = torch.contiguous_format)

bernoulli_p: f32[512, 10] = torch.ops.aten.bernoulli.p(empty_memory_format, 0.9); empty_memory_format = None

div_scalar: f32[512, 10] = torch.ops.aten.div.Scalar(bernoulli_p, 0.9); bernoulli_p = None

mul_tensor: f32[512, 10] = torch.ops.aten.mul.Tensor(arg0_1, div_scalar); arg0_1 = div_scalar = None

return (mul_tensor,)

```

In addition, if we export with eval() mode, we will have an empty graph.

However, when exporting with cuda tensor, we got

```

class GraphModule(torch.nn.Module):

def forward(self, arg0_1: f32[512, 10]):

native_dropout_default = torch.ops.aten.native_dropout.default(arg0_1, 0.1, True); arg0_1 = None

getitem: f32[512, 10] = native_dropout_default[0]; native_dropout_default = None

return (getitem,)

```

and exporting under eval() mode will still have a dropout node in graph.

This PR make exporting with CPU tensor also produce aten.native_dropout.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/106274

Approved by: https://github.com/ezyang

This change is to match the behavior of _record_memory_history which was

recently changed to enable history recording on all devices rather than

the current one. It prevents confusing situations where the observer

was registered before the device was set for the training run.

It also ensures the allocators have been initialized in the python binding just in case this is the first call to the CUDA API.

Fixes#107330

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107399

Approved by: https://github.com/eellison

ghstack dependencies: #107171

**Overview**

This PR runs the HSDP all-reduce as async so that it can overlap with both all-gather and reduce-scatter, which can lead to slight end-to-end speedups when the sharding process group is fully intra-node. Previously, the all-reduce serializes with reduce-scatter, so it can only overlap with one all-gather.

For some clusters (e.g. our AWS cluster), `NCCL_CROSS_NIC=1` improves inter-node all-reduce times when overlapped with intra-node all-gather/reduce-scatter.

**Experiment**

<details>

<summary> Example 'before' trace </summary>

<img width="559" alt="hsdp_32gpus_old" src="https://github.com/pytorch/pytorch/assets/31054793/15222b6f-2b64-4e0b-a212-597335f05ba5">

</details>

<details>

<summary> Example 'after' trace </summary>

<img width="524" alt="hsdp_32gpus_new" src="https://github.com/pytorch/pytorch/assets/31054793/94f63a1d-4255-4035-9e6e-9e10733f4e44">

</details>

For the 6-encoder-layer, 6-decoder layer transformer with `d_model=8192`, `nhead=64` on 4 nodes / 32 40 GB A100s via AWS, the end-to-end iteration times are as follows (with AG == all-gather, RS == reduce-scatter, AR == all-reduce; bandwidth reported as algorithmic bandwidth):

- Reference FSDP:

- **1160 ms / iteration**

- ~23 ms / encoder AG/RS --> 24.46 GB/s bandwidth

- ~40 ms / decoder AG/RS --> 26.5 GB/s bandwidth

- 50 GB/s theoretical inter-node bandwidth

- Baseline 8-way HSDP (only overlap AR with AG) -- intra-node AG/RS, inter-node AR:

- **665 ms / iteration**

- ~3 ms / encoder AG/RS --> 187.5 GB/s bandwidth

- ~5 ms / decoder AG/RS --> 212 GB/s bandwidth

- ~30 ms / encoder AR --> 2.34 GB/s bandwidth

- ~55 ms / decoder AR --> 2.65 GB/s bandwidth

- 300 GB/s theoretical intra-node bandwidth

- New 8-way HSDP (overlap AR with AG and RS) -- intra-node AG/RS, inter-node AR:

- **597 ms / iteration**

- ~3 ms / encoder AG/RS --> 187.5 GB/s bandwidth

- ~6.2 ms / decoder AG/RS --> 170.97 GB/s bandwidth (slower)

- ~23 ms / encoder AR (non-overlapped) --> 3.057 GB/s bandwidth (faster)

- ~49 ms / decoder AR (non-overlapped) --> 2.70 GB/s bandwidth (faster)

- ~100 ms / decoder AR (overlapped) --> 1.325 GB/s bandwidth (slower)

- Overlapping with reduce-scatter reduces all-reduce bandwidth utilization even though the all-reduce is inter-node and reduce-scatter is intra-node!

- New 8-way HSDP (overlap AR with AG and RS) with `NCCL_CROSS_NIC=1`:

- **556 ms / iteration**

- Speedup comes from faster overlapped AR

Thus, for this particular workload, the async all-reduce enables 16% iteration-time speedup compared to the existing HSDP and 52% speedup compared to FSDP. These speedups are pronounced due to the workload being communication bound, so any communication time reduction translates directly to speedup.

**Unit Test**

This requires >= 4 GPUs:

```

python -m pytest test/distributed/fsdp/test_fsdp_hybrid_shard.py -k test_fsdp_hybrid_shard_parity

```

Differential Revision: [D47852456](https://our.internmc.facebook.com/intern/diff/D47852456)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/106080

Approved by: https://github.com/ezyang

ghstack dependencies: #106068

The post-backward hook has some complexity due to the different paths: {no communication hook, communication hook} x {`NO_SHARD`, `FULL_SHARD`/`SHARD_GRAD_OP`, `HYBRID_SHARD`/`_HYBRID_SHARD_ZERO2`} plus some options like CPU offloading and `use_orig_params=True` (requiring using sharded gradient views).

The PR following this one that adds async all-reduce for HSDP further complicates this since the bottom-half after all-reduce must still be run in the separate all-reduce stream, making it more unwieldy to unify with the existing bottom-half.

Nonetheless, this PR breaks up the post-backward hook into smaller logical functions to hopefully help readability.

Differential Revision: [D47852461](https://our.internmc.facebook.com/intern/diff/D47852461)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/106068

Approved by: https://github.com/ezyang, https://github.com/fegin

Previously, the top level GraphProto is hardcoded with name "torch_jit", and the subgraphs "torch_jit_{count}". It does not offer any insight to the graph, but rather encodes the graph producer as jit (torchscript). This is no longer true now that the graph can also be produced from dynamo.

As a naive first step, this PR re-purposes the name, to "main_graph", and "sub_graph_{count}" respectively. More delicate processing can be done to name the subgraphs with respect to their parent node or module. This can be done as follow ups.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107408

Approved by: https://github.com/justinchuby, https://github.com/titaiwangms

Summary:

D48295371 cause batch fusion failure, which will block mc proposals on all mc models.

e.g. cmf f470938179

Test Plan: Without revert, f469732293. With revert diff f472266199.

Differential Revision: D48610062

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107796

Approved by: https://github.com/yanboliang

The `broadcast_object_list` function can easily broadcast the state_dict of models/optimizers. However, the `torch.cat` operation performed within `broadcast_object_list` consumes an additional double amount of memory space. This means that only objects with a maximum memory occupancy of half the device capacity can be broadcasted. This PR improves usability by skipping the `torch.cat` operation on object_lists with only a single element.

Before (30G tensor):

<img width="607" alt="image" src="https://github.com/pytorch/pytorch/assets/22362311/c0c67931-0851-4f27-81c1-0119c6cd2944">

After (46G tensor):

<img width="600" alt="image" src="https://github.com/pytorch/pytorch/assets/22362311/90cd1536-be7c-43f4-82ef-257234afcfa5">

Test Code:

```python

if __name__ == "__main__":

dist.init_process_group(backend='nccl')

torch.cuda.set_device(dist.get_rank() % torch.cuda.device_count())

fake_tensor = torch.randn(30 * 1024 * 1024 * 1024 // 4)

if dist.get_rank() == 0:

state_dict = {"fake_tensor": fake_tensor}

else:

state_dict = {}

object_list = [state_dict]

dist.broadcast_object_list(object_list, src=0)

print("Rank: ", dist.get_rank(), " Broadcasted Object: ", object_list[0].keys())

dist.barrier()

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107509

Approved by: https://github.com/awgu

These lowerings must copy even when they are no-ops in order to preserve

correctness in the presense of mutations. However, `to_dtype` and `to_device`

are also used in various lowerings as a helper function where it is okay to alias.

So, I've split these into two functions and allow the helper functions to alias

which saves some unnecessary copies.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107640

Approved by: https://github.com/lezcano

In this PR, we make ExportedProgram valid callable to export for re-exporting. Note that we don't allow any new constraints specified from user as we don't have any way of handling it right now. There are some caveats that is worth mentioning in this PR.

Today, graph_module.meta is not preserved (note that this is different from node level meta which we preserve). Our export logic relies on this meta to process the constraints. But if we skip dynamo, we will have to preserve the constraints stored in graph_module.meta. Once dynamo supports retracibility, we don't have to do this anymore. I currently manually save graph_module.meta at following places:

1. After ExportedProgram.module()

2. After ExportedProgram.transform()

3. At construction site of ExportedProgram.

Jerry will add the update on the quantization side as well.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107657

Approved by: https://github.com/gmagogsfm

1. Add a list of HF models to CI tests. The PR intends to build them from Config, but some of them are not supported with Config. NOTE: Loaded from pre-trained model could potentially hit [uint8/bool conflict](https://github.com/huggingface/transformers/issues/21013) when a newer version of transformers is used.

- Dolly has torch.fx.Node in OnnxFunction attribute, which is currently not supported.

- Falcon and MPT has unsupported user coding to Dynamo.

2. Only update GPT2 exporting with real tensor to Config, as FakeMode rises unequal input errors between PyTorch and ORT. The reason is that [non-persistent buffer is not supported](https://github.com/pytorch/pytorch/issues/107211)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107247

Approved by: https://github.com/wschin, https://github.com/BowenBao

Previous to this PR, `_assert_fake_tensor_mode` checks all of exporting tracer that they enable fake mode "from" exporter API whenever they have fake tensors in args/buffers/weights. However, FXSymbolicTracer doesn't use exprter API to create fake mode, so it hits the raise RuntimeError everytime we run it.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107712

Approved by: https://github.com/BowenBao

**Motivation:**

When input FakeTensor to torch.compile has SymInt sizes (e.g. make_fx(opt_f, tracing_mode="symbolic"):

1. We cannot create a FakeTensor from that input in dynamo due to the SymInts.

2. We cannot check input tensors in guard check function and will abort due to tensor check calls sizes/strides.

For 1, we specialize the FakeTensor's SymInts using their hints. This is mostly safe since inputs mostly have concrete shapes and not computed from some DynamicOutputShape ops. We'll throw a data dependent error if the symint is unbacked.

For 2, we replace size/stride calls with the sym_* variants in TENSOR_CHECK guards' check function.

**Test Plan:**

See added tests.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107662

Approved by: https://github.com/ezyang

Summary: Added due to how common the op is. For performance reasons users may not want to decompose batch_norm op. batch_norm is also part of StableHLO

Test Plan: After adding to IR, we can enable _check_ir_validity in exir.EdgeCompileConfig for models like MV2, MV3, IC3, IC4

Reviewed By: guangy10

Differential Revision: D48576866

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107732

Approved by: https://github.com/manuelcandales, https://github.com/guangy10

This updates ruff to 0.285 which is faster, better, and have fixes a bunch of false negatives with regards to fstrings.

I also enabled RUF017 which looks for accidental quadratic list summation. Luckily, seems like there are no instances of it in our codebase, so enabling it so that it stays like that. :)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107519

Approved by: https://github.com/ezyang

Summary: Currently serializing graphs which return get_attr's directly as output fails. This diff adds support for that only in EXIR serializer while we still support unlifted params.

Test Plan: Added test case.

Differential Revision: D48258552

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107610

Approved by: https://github.com/angelayi

This PR fixes the requires_grad set when calling distribute_tensor, we

should set the requires_grad of the local tensor after the detach call

to make sure we create the leaf correctly, otherwise it would raise

warnings

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107606

Approved by: https://github.com/fduwjj

torch.profiler.record_function is relatively slow; for example, in some benchmarks I was running, x.view_as(x) was ~2us, and ~16-17us when wrapped in a record_function context. The reasons for this are: dispatcher overhead from going through an op (the main source of overhead), python binding / python conversion overhead, and some overhead from the context manager.

This new implementation is faster, but it won't work with torchscript. Based on the benchmarks I was running, it adds 0.5-0.7us overhead per call when the profiler is turned off. To use it, you can just:

```python

with torch._C._profiler_manual._RecordFunctionFast("title"):

torch.add(x, y)

```

It implements a context manager in python which directly calls the record_function utilities, instead of calling through an op.

* The context manager is implemented directly in python because the overhead from calling a python function seems non-negligible

* All the record_function calls, python object conversions are guarded on checks for whether the profiler is enabled or not. It seems like this saves a few hundred nanoseconds.

For more details about the experiments I ran to choose this implementation, see [my record_functions experiments branch](https://github.com/pytorch/pytorch/compare/main...davidberard98:pytorch:record-function-fast-experiments?expand=1).

This also adds a `torch.autograd.profiler._is_profiler_enabled` global variable that can be used to check whether a profiler is currently enabled. It's useful for further reducing the overhead, like this:

```python

if torch.autograd.profiler._is_profiler_enabled:

with torch._C._profiler_manual._RecordFunctionFast("title"):

torch.add(x, y)

else:

torch.add(x, y)

```

On BERT_pytorch (CPU-bound model), if we add a record_function inside CachedAutotuning.run:

* Naive torch.profiler.record_function() is a ~30% slowdown

* Always wrapping with RecordFunctionFast causes a regression of ~2-4%.

* Guarding with an if statement - any regression is within noise

**Selected benchmark results**: these come from a 2.20GHz machine, GPU build but only running CPU ops; running `x.view_as(x)`, with various record_functions applied (with profiling turned off). For more detailed results see "record_functions experiments branch" linked above (those results are on a different machine, but show the same patterns). Note that the results are somewhat noisy, assume 0.05-0.1us variations

```

Baseline:: 1.7825262546539307 us # Just running x.view_as(x)

profiled_basic:: 13.600390434265137 us # torch.profiler.record_function(x) + view_as

precompute_manual_cm_rf:: 2.317216396331787 us # torch._C._profiler_manual._RecordFunctionFast(), if the context is pre-constructed + view_as

guard_manual_cm_rf:: 1.7994389533996582 us # guard with _is_profiler_enabled + view_as

```

Differential Revision: [D48421198](https://our.internmc.facebook.com/intern/diff/D48421198)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107195

Approved by: https://github.com/albanD, https://github.com/aaronenyeshi

These jobs have write access to S3 when they are running on our self-hosted runners. On the other hand, they would need the AWS credential to run if they are run on GitHub ephemeral runner.

### Testing

Use the AWS credential in upload-stats environment to run the test command successfully (currently failing in trunk due to the lack of permission a5f83245fd)

```

python3 tools/alerts/upload_alerts_to_aws.py --alerts '[{"AlertType": "Recurrently Failing Job", "AlertObject": "Upload Alerts to AWS/Rockset / upload-alerts", "OncallTeams": [], "OncallIndividuals": [], "Flags": [], "sha": "c8a6c74443f298111fd6568e2828765d87b69c98", "branch": "main"}, {"AlertType": "Recurrently Failing Job", "AlertObject": "inductor / cuda12.1-py3.10-gcc9-sm86 / test (inductor_torchbench, 1, 1, linux.g5.4xlarge.nvidia.gpu)", "OncallTeams": [], "OncallIndividuals": [], "Flags": [], "sha": "f13101640f548f8fa139c03dfa6711677278c391", "branch": "main"}, {"AlertType": "Recurrently Failing Job", "AlertObject": "slow / linux-focal-cuda12.1-py3.10-gcc9-sm86 / test (slow, 1, 2, linux.g5.4xlarge.nvidia.gpu)", "OncallTeams": [], "OncallIndividuals": [], "Flags": [], "sha": "6981bcbc35603e5d8ac7d00a2032925239009db5", "branch": "main"}]' --org "pytorch" --repo "pytorch"

Writing 138 documents to S3

Done!

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107717

Approved by: https://github.com/clee2000

@huydhn

Our current workflow is to upload to GH and then upload from GH to S3 when uploading test stats at the end of a workflow.

I think these keys could be used to directly upload from the runner to S3 but we don't do that right now.

I'm not sure how high priority they keys are.

Rocm artifacts can still be seen on the HUD page

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107613

Approved by: https://github.com/huydhn

Removing expected failures relating to inductor batch_norm on ROCm

Also removing the addition of `tanh` to expected failures list as this is a cuda exclusive failure already captured here (cc: @peterbell10)

```

if not TEST_WITH_ROCM:

inductor_gradient_expected_failures_single_sample["cuda"]["tanh"] = {f16}

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107027

Approved by: https://github.com/peterbell10

Sometimes test suite names include file/module names since they were imported from another file (ex _nvfuser.test_dynamo.TestNvFuserDynamo etc). This can sometimes make the autogenerated named by disable bot and the disable test button on hud incorrect which is annoying to track down, which leads to issues that are open but don't actually do anything, so my solution is to make the check between the issue name + the test more flexible. Instead of checking the entire test suite name, we chop off the file/module names and only look for the last part (ex TestNvFuserDynamo) and check if those are equal.

Also bundle both the check against the names in the slow test json and disable test issue names into one function for no reason other than less code.

Looked through logs to see what tests are skipped with this vs the old one and it looked the same.

Diff looks like a big change but its mostly a change in the indentation

Pull Request resolved: https://github.com/pytorch/pytorch/pull/104002

Approved by: https://github.com/ZainRizvi, https://github.com/huydhn

Summary: Adds new tracepoints to CUDA allocator code for tracking alloc and dealloc events in the allocator code.

Test Plan: This change simply adds static tracepoints to CUDA allocator code, and does not otherwise change any logic. Testing is not required.

Reviewed By: chaekit

Differential Revision: D48229150

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107322

Approved by: https://github.com/chaekit

Previously, the first overload of `_make_wrapper_subclass` returned a tensor that **always** advertised as having a non-resizeable storage. Eventually, we'll need it be advertise as resizeable for functionalization to work (since functionalization occasionally needs to resize storages).

Not directly tested in this PR (tested more heavily later in aot dispatch, but if someone wants me to write a more direct test I can add one).

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107416

Approved by: https://github.com/ezyang, https://github.com/albanD

ghstack dependencies: #107417

This was discussed in feedback from the original version of my "reorder proxy/fake" PR. This PR allows calls to `tensor.untyped_storage()` to **always** return a python storage object to the user. Previously, we would error loudly if we detected that the storage had a null dataptr.

Instead, I updated the python bindings for the python storage methods that I saw involve data access, to throw an error later, only if you try to access those methods (e.g. `storage.data_ptr()` will now raise an error if the data ptr is null).

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107417

Approved by: https://github.com/albanD, https://github.com/ezyang, https://github.com/zou3519

Summary:

This PR improves `generate_opcheck_tests`:

- We shouldn't run automated testing through operators called in

torch.jit.trace / torch.jit.script

- I improved the error message and added a guide on what to do if one of the

tests fail.

- While dogfooding this, I realize I wanted a way to reproduce the failure

without using the test suite. If you pass `PYTORCH_OPCHECK_PRINT_REPRO`, it

will now print a minimal repro on failure. This involves serializing some

tensors to disk.

- The minimal repro includes a call to a new API called `opcheck`.

The opcheck utility runs the same checks as the tests generated

by `generate_opcheck_tests`. It doesn't have a lot of knobs on it for

simplicity. The general workflow is: if an autogenerated test fails, then the

user may find it easier to reproduce the failure without the test suite by

using opcheck

Test Plan: - new tests

Differential Revision: D48485013

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107597

Approved by: https://github.com/ezyang

This PR stops `SymNode` from mutating (i.e. simplifying) its expression. Instead, the

simplification (without mutation) is deferred to the `SymNode.maybe_as_int` method.

```python

- FakeTensor(size=(s0,), ...)

- FakeTensor(size=(s1, s2, s3), ...)

- Eq(s0, s1 + s2 + s3)

- FakeTensor(size=(s0,), ...)

- FakeTensor(size=(s1, s2, s3), ...)

```

In summary, this PR:

- Replaces `SymNode._expr` by `SymNode.expr`, removing the old property function

- This makes it so `SymNode` instances never update their expression

- Creates `SymNode.simplified_expr()` method for actually calling `ShapeEnv.replace` on

its expression. Note that this doesn't updates `SymNode.expr`

- Changes how `tensor.size()` gets converted to its Python `torch.Size` type

- Instead of calling `SymInt::maybe_as_int()` method, we create a new

`SymInt::is_symbolic()` method for checking whether it is actually a symbolic value

- This is needed so that when we call `tensor.size()` in the Python side, the returned

sequence is faithful to the actual data, instead of possibly simplifying it and

returning an integer

- 2 files needs this modification:

- _torch/csrc/Size.cpp_: for handling `torch.Tensor.size` Python calls

- _torch/csrc/utils/pybind.cpp_: for handling `symint.cast()` C++ calls

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107492

Approved by: https://github.com/ezyang

ghstack dependencies: #107523

This PR fixes transactional behavior of translation validation insertion.

Previously, this transactional behavior was implemented by removing the FX node if any

issues occurred until the end of `evaluate_expr`. However, since we cache FX nodes, we

might end up removing something that wasn't inserted in the same function call.

**Solution:** when creating an FX node for `call_function`, we also return whether this is

a fresh FX node or not. Then, we can appropriately handle each case.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107523

Approved by: https://github.com/ezyang

Added the following APIs:

```

def save(

ep: ExportedProgram,

f: Union[str, pathlib.Path, io.BytesIO],

extra_files: Optional[Dict[str, Any]] = None,

opset_version: Optional[Dict[str, int]] = None,

) -> None:

"""

Saves a version of the given exported program for use in a separate process.

Args:

ep (ExportedProgram): The exported program to save.

f (str): A file-like object (has to implement write and flush)

or a string containing a file name.

extra_files (Optional[Dict[str, Any]]): Map from filename to contents

which will be stored as part of f.

opset_version (Optional[Dict[str, int]]): A map of opset names

to the version of this opset

"""

def load(

f: Union[str, pathlib.Path, io.BytesIO],

extra_files: Optional[Dict[str, Any]] = None,

expected_opset_version: Optional[Dict[str, int]] = None,

) -> ExportedProgram:

"""

Loads an ExportedProgram previously saved with torch._export.save

Args:

ep (ExportedProgram): The exported program to save.

f (str): A file-like object (has to implement write and flush)

or a string containing a file name.

extra_files (Optional[Dict[str, Any]]): The extra filenames given in

this map would be loaded and their content would be stored in the

provided map.

expected_opset_version (Optional[Dict[str, int]]): A map of opset names

to expected opset versions

Returns:

An ExportedProgram object

"""

```

Example usage:

```

# With buffer

buffer = io.BytesIO()

torch._export.save(ep, buffer)

buffer.seek(0)

loaded_ep = torch._export.load(buffer)

# With file

with tempfile.NamedTemporaryFile() as f:

torch._export.save(ep, f)

f.seek(0)

loaded_ep = torch._export.load(f)

# With Path

with TemporaryFileName() as fname:

path = pathlib.Path(fname)

torch._export.save(ep, path)

loaded_ep = torch._export.load(path)

# Saving with extra files

buffer = io.BytesIO()

save_extra_files = {"extra.txt": "moo"}

torch._export.save(ep, buffer, save_extra_files)

buffer.seek(0)

load_extra_files = {"extra.txt": ""}

loaded_ep = torch._export.load(buffer, extra_files)

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107309

Approved by: https://github.com/avikchaudhuri, https://github.com/gmagogsfm, https://github.com/tugsbayasgalan

In this PR, we extend ExportedProgram.module() functionality by also unlifting the mutated buffers. We only really care about top level buffers as we don't allow any buffer mutation inside HigherOrderOps.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107643

Approved by: https://github.com/avikchaudhuri

## Summary

Enables AVX512 dispatch by default for some kernels, for which AVX512 performs better than AVX2.

For other kernels, their AVX2 counterparts are used.

## Implementation details

`REGISTER_DISPATCH` should now only be used for non-AVX512 dispatch.

`ALSO_REGISTER_AVX512_DISPATCH` should be used when AVX512 dispatch should also be done for a kernel.

## Benchmarking results with #104655

[Raw data at GitHub Gist (Click on `Download ZIP`)](https://gist.github.com/sanchitintel/87e07f84774fca8f6b767aeeb08bc0c9)

| Op | Speedup of AVX512 over AVX2 |

|----|------------------------------------|

|sigmoid|~27% with FP32|

|sign| ~16.6%|

|sgn|~15%|

|sqrt|~4%|

|cosh|~37%|

|sinh|~37.5%|

|acos| ~8% with FP32 |

|expm1| ~30% with FP32|

|log|~2%|

|log1p|~16%|

|erfinv|~6% with FP32|

|LogSigmoid|~33% with FP32|

|atan2|~40% with FP32|

|logaddexp| ~24% with FP32|

|logaddexp2| ~21% with FP32|

|hypot| ~24% with FP32|

|igamma|~4% with FP32|

|lgamma| ~40% with FP32|

|igammac|3.5%|

|gelu|~3% with FP32|

|glu|~20% with FP32|

|SiLU|~35% with FP32|

|Softplus|~33% with FP32|

|Mish|~36% with FP32|

|Hardswish|~7% faster with FP32 when tensor can fit in L2 cache|

|Hardshrink|~8% faster with FP32 when tensor can fit in L2 cache|

|Softshrink|~10% faster with FP32 when tensor can fit in L2 cache|

|Hardtanh|~12.5% faster with FP32 when tensor can fit in L2 cache|

Hardsigmoid|~7% faster with FP32 when tensor can fit in L2 cache|

|hypot|~35%|

|atan2|~37%|

|dequantize per channel|~10%|

## Insights gleaned through collected data (future action-items):

1. Inplace variants of some ops are faster with AVX512 although the functional variant may be slower for FP32. Will enable AVX512 dispatch for the inplace variants of such kernels.

2. Almost all BF16 kernels are faster with AVX512, so after PyTorch 2.1 release, will enable AVX512 dispatch for BF16 kernels whose corresponding FP32 kernel doesn't perform well with AVX512.

3. Some kernels rely on auto-vectorization & might perform better with AVX512 once explicit vectorization would be enabled for them.

Data was collected with 26 physical threads of one socket of Intel Xeon 8371HC. Intel OpenMP & tcmalloc were preloaded.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/104165

Approved by: https://github.com/mingfeima, https://github.com/jgong5, https://github.com/kit1980