**Overview**

This PR switches the order of freeing the unsharded `FlatParameter` (`self._free_unsharded_flat_param()`) and switching to use the sharded `FlatParameter` (`self._use_sharded_flat_param()`). This prevents "use-after-free"-type bugs where, for `param.data = new_data`, `param` has its metadata intact but not its storage, causing an illegal memory access for any instrumentation that depends on its storage. (`param` is an original parameter and `new_data` is either a view into the sharded `FlatParameter` or `torch.empty(0)` depending on the sharding and rank.)

**Details**

To see why simply switching the order of the two calls is safe, let us examine the calls themselves:

652457b1b7/torch/distributed/fsdp/flat_param.py (L1312-L1339)

652457b1b7/torch/distributed/fsdp/flat_param.py (L1298-L1310)

- `_free_unsharded_flat_param()` does not make any assumption that `self.flat_param`'s data is the sharded `FlatParameter` (i.e. `_local_shard`).

- The sharded `FlatParameter` (i.e. `_local_shard`) is always present in memory, which means that FSDP can use sharded views at any time, including before freeing the unsharded data.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94859

Approved by: https://github.com/zhaojuanmao, https://github.com/fegin

Fixes #94353

This PR adds examples and further info to the in-place and out-of-place masked scatter functions' documentation, according to what was proposed in the linked issue. Looking forward to any suggested changes you may have as I continue to familiarize myself with PyTorch 🙂

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94545

Approved by: https://github.com/lezcano

Add triton support for ROCm builds of PyTorch.

* Enables inductor and dynamo when rocm is detected

* Adds support for pytorch-triton-mlir backend

* Adds check_rocm support for verify_dynamo.py

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94660

Approved by: https://github.com/malfet

With the release of ROCm 5.3, HIP now supports a hipGraph implementation.

All necessary backend work and hipification is done to support the same functionality as cudaGraph.

Unit tests are modified to support a new TEST_GRAPH feature, which allows us to create a single check for graph support instead of attempting to gather the CUDA level in annotations for every graph test.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/88202

Approved by: https://github.com/jithunnair-amd, https://github.com/pruthvistony, https://github.com/malfet

Following the same logic of preloading cudnn and cublas from the pypi folder in multi-arch distributions, where Pure-lib vs Plat-lib matters, this PR adds the logic for the rest of the cuda pypi libraries that were integrated.

I have tested this PR by running the code block locally and installing/uninstalling nvidia pypi libraries:

```
import sys
import os

def _preload_cuda_deps():
    """Preloads cudnn/cublas deps if they could not be found otherwise."""
    # Should only be called on Linux if default path resolution has failed
    cuda_libs = {
        'cublas': 'libcublas.so.11',
        'cudnn': 'libcudnn.so.8',
        'cuda_nvrtc': 'libnvrtc.so.11.2',
        'cuda_runtime': 'libcudart.so.11.0',
        'cuda_cupti': 'libcupti.so.11.7',
        'cufft': 'libcufft.so.10',
        'curand': 'libcurand.so.10',
        'cusolver': 'libcusolver.so.11',
        'cusparse': 'libcusparse.so.11',
        'nccl': 'libnccl.so.2',
        'nvtx': 'libnvToolsExt.so.1',
    }
    cuda_libs_paths = {lib_folder: None for lib_folder in cuda_libs.keys()}
    for path in sys.path:
        nvidia_path = os.path.join(path, 'nvidia')
        if not os.path.exists(nvidia_path):
            continue
        for lib_folder, lib_name in cuda_libs.items():
            candidate_path = os.path.join(nvidia_path, lib_folder, 'lib', lib_name)
            if os.path.exists(candidate_path) and not cuda_libs_paths[lib_folder]:
                cuda_libs_paths[lib_folder] = candidate_path
        if all(cuda_libs_paths.values()):
            break
    if not all(cuda_libs_paths.values()):
        none_libs = [lib for lib in cuda_libs_paths if not cuda_libs_paths[lib]]
        raise ValueError(f"{', '.join(none_libs)} not found in the system path {sys.path}")

_preload_cuda_deps()
```

I don't have access to a multi-arch environment, so if somebody could verify a wheel with this patch on a multi-arch distribution, that would be great!

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94355

Approved by: https://github.com/atalman

If the input to operator.not_ is a tensor, I want to convert the operator to a torch.logical_not. This allows the following test case to pass. Beforehand it resulted in the error `NotImplementedError("local_scalar_dense/item NYI for torch.bool")`

```
def test_export_tensor_bool_not(self):
    def true_fn(x, y):
        return x + y

    def false_fn(x, y):
        return x - y

    def f(x, y):
        return cond(not torch.any(x), true_fn, false_fn, [x, y])
```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94626

Approved by: https://github.com/voznesenskym

Fixes #91824

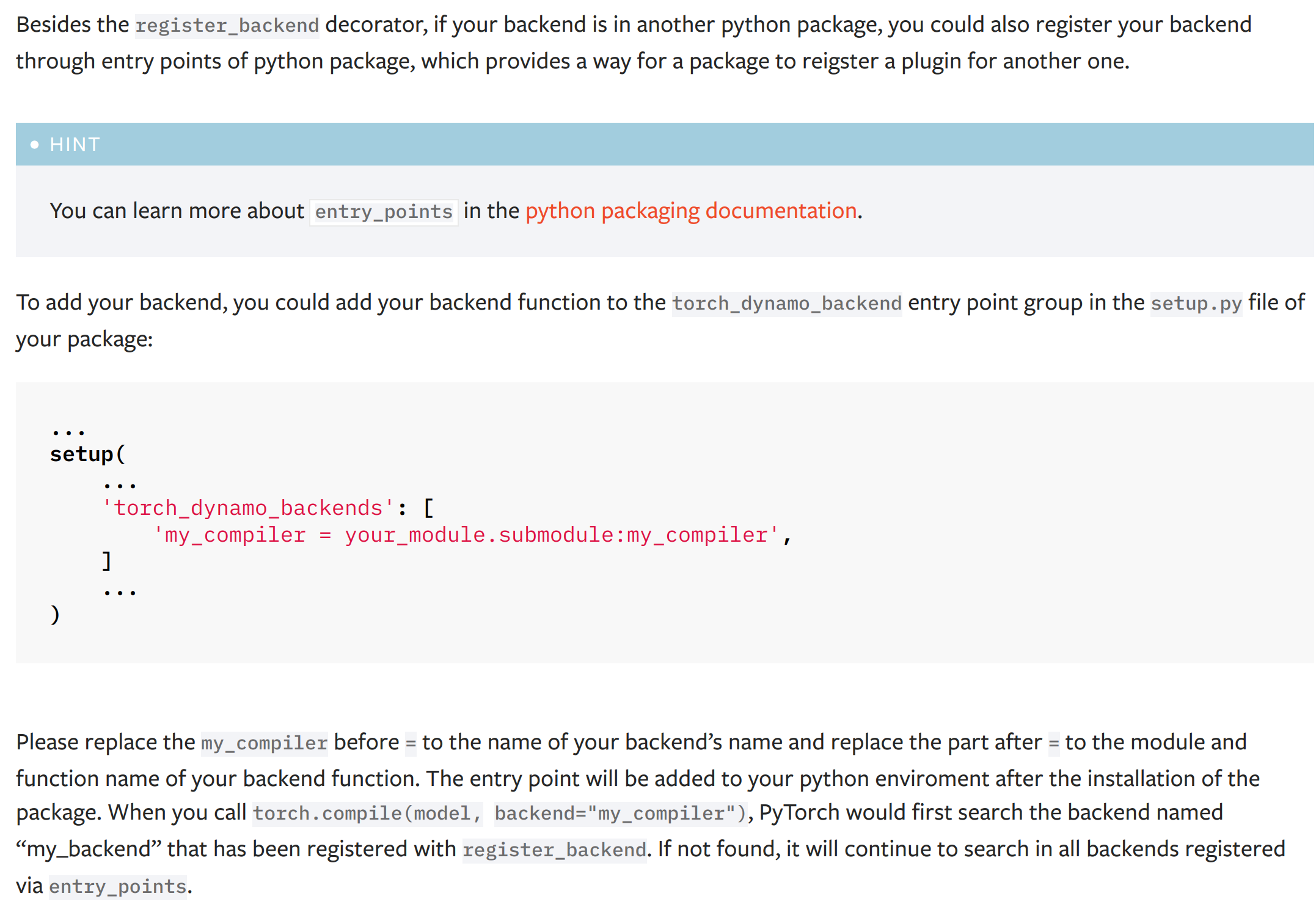

This PR adds a new dynamo backend registration mechanism through ``entry_points``. The ``entry_points`` of a package provide a way for the package to register a plugin for another one.

The docs of the new mechanism:

(the typo '...named "my_backend" that has been...' has been fixed to '...named "my_compiler" that has been...')

# Discussion

## About the test

I did not add a test for this PR because it is hard either to install a fake package during a test or to manually hack the entry points function by replacing it with a fake one. I have tested this PR offline with the hidet compiler and it works fine. Please let me know if you have a good idea for how to test this PR.

## About the dependency of ``importlib_metadata``

This PR adds a dependency on ``importlib_metadata`` for Python < 3.10, because the modern usage of ``importlib`` became stable in Python 3.10 (see the documentation of the importlib package [here](https://docs.python.org/3/library/importlib.html)). For Python < 3.10, the ``importlib_metadata`` package implements the same feature. The current PR prompts the user to install ``importlib_metadata`` if their Python version is < 3.10.

## About the name and docs

Please let me know what you think of the name ``torch_dynamo_backend`` as the entry point group name, and of the documentation of this registration mechanism.
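For readers unfamiliar with entry points, here is a minimal sketch of how a consumer could discover plugins registered under the ``torch_dynamo_backend`` group. It uses only the standard library and is not the code from this PR; the helper name `discover_backends` and the `my_package.backend` module path in the comment are hypothetical.

```python
from importlib import metadata  # on Python < 3.10 the PR suggests the importlib_metadata backport

# Group name taken from the discussion above. A plugin package could register
# itself in its packaging metadata along these lines (hypothetical module path):
#   entry_points={"torch_dynamo_backend": ["my_compiler = my_package.backend:my_compiler"]}
GROUP = "torch_dynamo_backend"


def discover_backends(group=GROUP):
    """Return a dict mapping entry point name -> EntryPoint for the given group."""
    eps = metadata.entry_points()
    if hasattr(eps, "select"):
        # Python 3.10+: the result supports select(group=...)
        selected = eps.select(group=group)
    else:
        # Python 3.8/3.9: entry_points() returns a dict of group -> entry points
        selected = eps.get(group, [])
    return {ep.name: ep for ep in selected}


if __name__ == "__main__":
    for name, ep in discover_backends().items():
        # ep.load() would import the module and return the registered object
        print(name, "->", ep.value)
```

Nothing is imported until `ep.load()` is called, so listing the available backends stays cheap even when many plugins are installed.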

Pull Request resolved: https://github.com/pytorch/pytorch/pull/93873

Approved by: https://github.com/malfet, https://github.com/jansel

Fixes https://github.com/pytorch/pytorch/issues/93890

We do the following:

1. fix the `__init__` constructor for `AutocastModeVariable` with existing `mode` while copying

2. `resume_execution` is made aware of constant args (`target_values`), by storing said args in `ReenterWith`. To propagate between subgraphs (in straightline code), we also store the constant args in the downstream's `code_options["co_consts"]` if not already.

---

Future work:

1. handle instantiating context manager in non-inlineable functions. Simultaneously fix nested grad mode bug.

2. generalize to general `ContextManager`s

3. generalize to variable arguments passed to context manager, with guards around the variable.

---

Actually, if we look at the repro: 74592a43d0/test/dynamo/test_repros.py (L1249), we can see that the method in this PR doesn't work for graph breaks in function calls, in particular, in function calls that don't get inlined.

Why inlining functions with graph breaks is hard:

- When we handle graph breaks, we create a new code object for the remainder of the code. It's hard to imagine doing this when you are inside a function, because then we would need a frame stack, and we just want to deal with the current frame as a sequence of straight-line code.

Why propagating context manager information is hard:

- If we do not inline the function, the frame does not contain any information about the parent `block_stack` or `co_consts`. So we cannot store it on local objects like the eval frame. It has to be a global object in the output_graph.

---

Anyway, I'm starting to see clearly that dynamo must indeed be optimized for torch use-case. Supporting more general cases tends to run into endless corner-cases and caveats.

One direction that I see as viable to handle function calls which have graph breaks and `has_tensor_in_frame` is to stick with not inlining them, while installing a global `ContextManagerManager`, similar to the `CleanupManager` (which cleans up global variables). We can know which context managers are active at any given point, so that we can install their setup/teardown code on those functions and their fragments.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94137

Approved by: https://github.com/yanboliang

The `requirements.txt` file is in the PyTorch directory. The instructions to `clone` and `cd` to the PyTorch directory are in a later section, under Get the PyTorch Source. So, following the instructions as written gives an error that `requirements.txt` is not found.

```ERROR: Could not open requirements file: .. No such file or directory: 'requirements.txt' ```

This PR clarifies the usage of the command.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94155

Approved by: https://github.com/malfet

GraphModules that were created during DDPOptimizer graph breaking lacked `compile_subgraph_reason`, which caused an exception when running .explain().

Now the reason is provided and users can use .explain() to find out that DDPOptimizer is causing graph breaks.

Fixes #94579

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94749

Approved by: https://github.com/voznesenskym

This restructures the magic methods so that there is a stub `add` that calls the metaprogrammed `_add`. With this change, `SymNode.add` can now show up in stack traces, which is a huge benefit for profiling.
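As a rough illustration of the stub pattern (not the actual `SymNode` code), the sketch below uses a hypothetical `ToyNode` class: the implementations `_add` and `_mul` are generated in a loop, while `add` and `mul` are hand-written stubs whose names appear as their own frames in stack traces and profiles.

```python
import operator


class ToyNode:
    """Hypothetical stand-in for a node type with metaprogrammed methods."""

    def __init__(self, value):
        self.value = value

    # Hand-written stubs: each one is a named frame in tracebacks and profiles.
    def add(self, other):
        return self._add(other)

    def mul(self, other):
        return self._mul(other)


def _install_impl(name, op):
    """Generate the underscore-prefixed implementation and attach it to ToyNode."""

    def impl(self, other):
        return ToyNode(op(self.value, other.value))

    impl.__name__ = f"_{name}"
    setattr(ToyNode, f"_{name}", impl)


for _name, _op in (("add", operator.add), ("mul", operator.mul)):
    _install_impl(_name, _op)

result = ToyNode(2).add(ToyNode(3))
```

Without the stubs, every generated method would share one code object (`impl`), so a profile could not tell `add` from `mul` by code location alone.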

Signed-off-by: Edward Z. Yang <ezyang@meta.com>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94410

Approved by: https://github.com/Chillee

Changes:

* Add `simplified` kwarg to let you only render guards that are nontrivial (excludes duck sizing)

* Make a list of strings valid for sources, if you just have some variable names you want to bind to

* Add test helper `show_guards` using these facilities, switch a few tests to it

Signed-off-by: Edward Z. Yang <ezyang@meta.com>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94404

Approved by: https://github.com/Chillee

This patch started with only the change in `torch/_prims_common/__init__.py`. Unfortunately, this change by itself fails tests. The reason it fails tests is that `sym_max` produces a `sympy.Max` expression, which impedes our ability to actually reason symbolically about the resulting expressions. We much prefer to insert a guard on `l > 1` and get a Sympy expression without Max in it, if we can. In the upcoming unbacked SymInts PR, we can't necessarily do this, but without unbacked SymInts, we always can.

To do this, we introduce `alternate_impl_if_hinted_methods`. The idea is that if all of the arguments into max/min have hints, we will just go ahead and introduce a guard and then return one argument or the other, depending on the result. This is done by rewrapping the SymNode into SymInt/SymFloat and then running builtins.min/max, but we also could have just manually done the guarding (see also https://github.com/pytorch/pytorch/pull/94365 )

However, a very subtle problem emerges when you do this. When we do builtins min/max, we return the argument SymNode directly, without actually allocating a fresh SymNode. Suppose we do a min/max with a constant (as is the case in `sym_max(l, 1)`). This means that we can return a constant SymNode as the result of the computation. Constant SymNodes get transformed into regular integers, which then subsequently trigger the assert at https://github.com/pytorch/pytorch/pull/94400/files#diff-03557db7303b8540f095b4f0d9cd2280e1f42f534f67d8695f756ec6c02d3ec7L620

After thinking about this a bit, I think the assert is wrong. It should be OK for SymNode methods to return constants. The reason the assert was originally added was that ProxyTensorMode cannot trace a constant return. But this is fine: if you return a constant, no tracing is necessary; you know you have enough guards that it is guaranteed to be a constant no matter what the input arguments are, so you can burn it in. You might also be wondering why a change to SymNode method affects the assert from the dispatch mode dispatch: the call stack typically looks like SymNode.binary_magic_impl -> SymProxyTensorMode -> SymNode.binary_magic_impl again; so you hit the binary_magic_impl twice!
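To make the hinted path concrete, here is a toy sketch of the idea, not the real `alternate_impl_if_hinted_methods` code. `ToySym`, `toy_sym_max`, and the string-based `guards` list are all hypothetical; the point is only that when every argument has a hint, we record a guard and return one of the *arguments themselves* rather than building a `Max(...)` expression.

```python
class ToySym:
    """Hypothetical symbolic value: an expression string plus an optional hint."""

    def __init__(self, expr, hint=None):
        self.expr = expr
        self.hint = hint


guards = []  # guards recorded while tracing (toy representation)


def toy_sym_max(a, b):
    if a.hint is not None and b.hint is not None:
        # All arguments are hinted: guard on the comparison and return an argument.
        if a.hint >= b.hint:
            guards.append(f"{a.expr} >= {b.expr}")
            return a
        guards.append(f"{a.expr} < {b.expr}")
        return b
    # Without hints we cannot guard, so fall back to a symbolic Max expression.
    return ToySym(f"Max({a.expr}, {b.expr})")


l = ToySym("l", hint=5)
one = ToySym("1", hint=1)  # stands in for a constant
result = toy_sym_max(l, one)
```

Note that when the constant wins (for example with a hint of 0 for the other argument), the function returns the constant object itself, which mirrors the situation described above where a method ends up returning a constant.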

No new tests, the use of sym_max breaks preexisting tests and then the rest of the PR makes the tests pass again.

Signed-off-by: Edward Z. Yang <ezyang@meta.com>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94400

Approved by: https://github.com/Chillee

The expression `argv + [f'--junit-xml-reruns={test_report_path}'] if TEST_SAVE_XML else []` evaluates to the empty list when `TEST_SAVE_XML` is false and would need parentheses.

Instead simplify the code by appending the argument when required directly where `test_report_path` is set.

Note that `.append()` may not be used as that would modify `argv` and in turn `UNITTEST_ARGS` which might have undesired side effects.

Without this patch, `pytest.main()` would effectively be called with no arguments, so pytest would try to discover all tests in the current working directory, which ultimately leads to (many) failures.
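The precedence issue can be reproduced in isolation. The sketch below uses made-up values for `argv` and `test_report_path`, and the helper `with_report_arg` is hypothetical; it only illustrates why the original expression collapses to `[]` and why list concatenation is preferred over `.append()`.

```python
TEST_SAVE_XML = False
argv = ["test_foo.py", "-v"]  # illustrative values only
test_report_path = "report.xml"

# The conditional expression binds more loosely than `+`, so this parses as
# (argv + [...]) if TEST_SAVE_XML else []
buggy = argv + [f"--junit-xml-reruns={test_report_path}"] if TEST_SAVE_XML else []

# With parentheses, only the extra argument is conditional.
parenthesized = argv + ([f"--junit-xml-reruns={test_report_path}"] if TEST_SAVE_XML else [])


def with_report_arg(args, path):
    """Return a new list with the report argument; the input list is not modified."""
    return args + [f"--junit-xml-reruns={path}"]


extended = with_report_arg(argv, test_report_path)
```

Because `with_report_arg` builds a new list, `argv` (and anything aliasing it) is left untouched, which is the property the note about `.append()` is after.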

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94589

Approved by: https://github.com/clee2000, https://github.com/Neilblaze

**Overview**

This refactors module materialization (i.e. meta device or `torchdistX` deferred initialization) to compute the parameter and buffer names as needed instead of pre-computing them. These are needed to reacquire references to the states (e.g. `module.get_parameter(param_name)`) after materialization since the materialization may create new variables.

This refactor simplifies `_get_fully_sharded_module_to_states()` (the core function for "pseudo auto wrapping") to better enable lowest common ancestor (LCA) module computation for shared parameters, for which tracking parameter and buffer names may complicate the already non-obvious implementation.

**Discussion**

The tradeoff is a worst case quadratic traversal over modules if materializing all of them. However, since (1) the number of modules is relatively small, (2) the computation per module in the quadratic traversal is negligible, (3) this runs only once per training session, and (4) module materialization targets truly large models, I think this tradeoff is tolerable.

**For Reviewers**

- `_init_param_handle_from_module()` initializes _one_ `FlatParamHandle` from a fully sharded module and represents the module wrapper code path. For this code path, there is no need to reacquire references to the parameters/buffers for now since the managed parameters are only computed after materialization. This works because the managed parameters have a simple definition: any parameter in the local root module's tree excluding those already marked as flattened by FSDP. Similarly, FSDP marks buffers to indicate that they have already been processed (synced if `sync_module_states`).

- `_init_param_handles_from_module()` initializes _all_ `FlatParamHandle`s from a fully sharded module and represents the composable code path. For this code path, we must reacquire references to parameters/buffers because each logical wrapping is specified as a list of parameters/buffers to group together by those variables and because materialization may create new variables.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94196

Approved by: https://github.com/rohan-varma

Hi!

I've been fuzzing different pytorch modules, and found a few crashes inside one of them.

Specifically, I'm talking about a module for interpreting the JIT code and a function called `InterpreterState::run()`. Running this function with provided crash file results in a crash, which occurs while calling `dim()` on a `stack` with 0 elements ([line-686](abc54f9314/torch/csrc/jit/runtime/interpreter.cpp (L686))). The crash itself occurs later, when std::move is called with incorrect value of type `IValue`.

The second crash is similar and occurs on [line 328](abc54f9314/torch/csrc/jit/runtime/interpreter.cpp (LL328C15-L328C48)), where `reg(inst.X + i - 1) = pop(stack);` is executed. The error here is the same, `Stack stack` might not contain enough elements.

The third crash occurs on [line 681](abc54f9314/torch/csrc/jit/runtime/interpreter.cpp (L681)). The problem here is the same as for previous crashes. There are not enough elements in the stack.

In addition to these places, there are many others (in the same function) where bounds checking is also missing. I am not sure what the best way to fix these problems is, but I suggest adding a bounds check inside each of these case statements.

All tests were performed on this pytorch version: [abc54f93145830b502400faa92bec86e05422fbd](abc54f9314)

### How to reproduce

1. To reproduce the crash, use provided docker: [Dockerfile](https://github.com/ispras/oss-sydr-fuzz/tree/master/projects/pytorch)

2. Build the container: `docker build -t oss-sydr-fuzz-pytorch-reproduce .`

3. Copy these crash files to the current directory:

- [crash-4f18c5128c9a5a94343fcbbd543d7d6b02964471.zip](https://github.com/pytorch/pytorch/files/10674143/crash-4f18c5128c9a5a94343fcbbd543d7d6b02964471.zip)

- [crash-55384dd7c9689ed7b94ac6697cc43db4e0dd905a.zip](https://github.com/pytorch/pytorch/files/10674147/crash-55384dd7c9689ed7b94ac6697cc43db4e0dd905a.zip)

- [crash-06b6125d01c5f91fae112a1aa7dcc76d71b66576.zip](https://github.com/pytorch/pytorch/files/10674152/crash-06b6125d01c5f91fae112a1aa7dcc76d71b66576.zip)

4. Run the container: ``docker run --privileged --network host -v `pwd`:/homedir --rm -it oss-sydr-fuzz-pytorch-reproduce /bin/bash``

5. And execute the binary: `/jit_differential_fuzz /homedir/crash-4f18c5128c9a5a94343fcbbd543d7d6b02964471`

After execution completes you will see this stacktrace:

```asan

==36==ERROR: AddressSanitizer: heap-buffer-overflow on address 0x6060001657f8 at pc 0x00000060bc91 bp 0x7fff00b33380 sp 0x7fff00b33378

READ of size 4 at 0x6060001657f8 thread T0

#0 0x60bc90 in c10::IValue::IValue(c10::IValue&&) /pytorch_fuzz/torch/include/ATen/core/ivalue.h:214:43

#1 0xc20e7cd in torch::jit::pop(std::vector<c10::IValue, std::allocator<c10::IValue> >&) /pytorch_fuzz/aten/src/ATen/core/stack.h:102:12

#2 0xc20e7cd in torch::jit::dim(std::vector<c10::IValue, std::allocator<c10::IValue> >&) /pytorch_fuzz/torch/csrc/jit/mobile/promoted_prim_ops.cpp:119:20

#3 0xc893060 in torch::jit::InterpreterStateImpl::runImpl(std::vector<c10::IValue, std::allocator<c10::IValue> >&) /pytorch_fuzz/torch/csrc/jit/runtime/interpreter.cpp:686:13

#4 0xc85c47b in torch::jit::InterpreterStateImpl::run(std::vector<c10::IValue, std::allocator<c10::IValue> >&) /pytorch_fuzz/torch/csrc/jit/runtime/interpreter.cpp:1010:9

#5 0x600598 in runGraph(std::shared_ptr<torch::jit::Graph>, std::vector<at::Tensor, std::allocator<at::Tensor> > const&) /jit_differential_fuzz.cc:66:38

#6 0x601d99 in LLVMFuzzerTestOneInput /jit_differential_fuzz.cc:107:25

#7 0x52ccf1 in fuzzer::Fuzzer::ExecuteCallback(unsigned char const*, unsigned long) /llvm-project/compiler-rt/lib/fuzzer/FuzzerLoop.cpp:611:15

#8 0x516c0c in fuzzer::RunOneTest(fuzzer::Fuzzer*, char const*, unsigned long) /llvm-project/compiler-rt/lib/fuzzer/FuzzerDriver.cpp:324:6

#9 0x51c95b in fuzzer::FuzzerDriver(int*, char***, int (*)(unsigned char const*, unsigned long)) /llvm-project/compiler-rt/lib/fuzzer/FuzzerDriver.cpp:860:9

#10 0x545ef2 in main /llvm-project/compiler-rt/lib/fuzzer/FuzzerMain.cpp:20:10

#11 0x7f9ec069a082 in __libc_start_main (/lib/x86_64-linux-gnu/libc.so.6+0x24082)

#12 0x51152d in _start (/jit_differential_fuzz+0x51152d)

0x6060001657f8 is located 8 bytes to the left of 64-byte region [0x606000165800,0x606000165840)

allocated by thread T0 here:

#0 0x5fd42d in operator new(unsigned long) /llvm-project/compiler-rt/lib/asan/asan_new_delete.cpp:95:3

#1 0xa16ab5 in void std::vector<c10::IValue, std::allocator<c10::IValue> >::_M_realloc_insert<c10::IValue&>(__gnu_cxx::__normal_iterator<c10::IValue*, std::vector<c10::IValue, std::allocator<c10::IValue> > >, c10::IValue&) /usr/bin/../lib/gcc/x86_64-linux-gnu/10/../../../../include/c++/10/bits/vector.tcc:440:33

#2 0xa168f1 in c10::IValue& std::vector<c10::IValue, std::allocator<c10::IValue> >::emplace_back<c10::IValue&>(c10::IValue&) /usr/bin/../lib/gcc/x86_64-linux-gnu/10/../../../../include/c++/10/bits/vector.tcc:121:4

#3 0xc89b53c in torch::jit::InterpreterStateImpl::runImpl(std::vector<c10::IValue, std::allocator<c10::IValue> >&) /pytorch_fuzz/torch/csrc/jit/runtime/interpreter.cpp:344:19

#4 0xc85c47b in torch::jit::InterpreterStateImpl::run(std::vector<c10::IValue, std::allocator<c10::IValue> >&) /pytorch_fuzz/torch/csrc/jit/runtime/interpreter.cpp:1010:9

#5 0x600598 in runGraph(std::shared_ptr<torch::jit::Graph>, std::vector<at::Tensor, std::allocator<at::Tensor> > const&) /jit_differential_fuzz.cc:66:38

#6 0x601d99 in LLVMFuzzerTestOneInput /jit_differential_fuzz.cc:107:25

#7 0x52ccf1 in fuzzer::Fuzzer::ExecuteCallback(unsigned char const*, unsigned long) /llvm-project/compiler-rt/lib/fuzzer/FuzzerLoop.cpp:611:15

#8 0x516c0c in fuzzer::RunOneTest(fuzzer::Fuzzer*, char const*, unsigned long) /llvm-project/compiler-rt/lib/fuzzer/FuzzerDriver.cpp:324:6

#9 0x51c95b in fuzzer::FuzzerDriver(int*, char***, int (*)(unsigned char const*, unsigned long)) /llvm-project/compiler-rt/lib/fuzzer/FuzzerDriver.cpp:860:9

#10 0x545ef2 in main /llvm-project/compiler-rt/lib/fuzzer/FuzzerMain.cpp:20:10

#11 0x7f9ec069a082 in __libc_start_main (/lib/x86_64-linux-gnu/libc.so.6+0x24082)

SUMMARY: AddressSanitizer: heap-buffer-overflow /pytorch_fuzz/torch/include/ATen/core/ivalue.h:214:43 in c10::IValue::IValue(c10::IValue&&)

Shadow bytes around the buggy address:

0x0c0c80024aa0: fd fd fd fd fd fd fd fa fa fa fa fa 00 00 00 00

0x0c0c80024ab0: 00 00 00 fa fa fa fa fa fd fd fd fd fd fd fd fd

0x0c0c80024ac0: fa fa fa fa fd fd fd fd fd fd fd fd fa fa fa fa

0x0c0c80024ad0: fd fd fd fd fd fd fd fd fa fa fa fa fd fd fd fd

0x0c0c80024ae0: fd fd fd fd fa fa fa fa 00 00 00 00 00 00 00 00

=>0x0c0c80024af0: fa fa fa fa fd fd fd fd fd fd fd fd fa fa fa[fa]

0x0c0c80024b00: 00 00 00 00 00 00 00 00 fa fa fa fa fa fa fa fa

0x0c0c80024b10: fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa

0x0c0c80024b20: fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa

0x0c0c80024b30: fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa

0x0c0c80024b40: fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa

Shadow byte legend (one shadow byte represents 8 application bytes):

Addressable: 00

Partially addressable: 01 02 03 04 05 06 07

Heap left redzone: fa

Freed heap region: fd

Stack left redzone: f1

Stack mid redzone: f2

Stack right redzone: f3

Stack after return: f5

Stack use after scope: f8

Global redzone: f9

Global init order: f6

Poisoned by user: f7

Container overflow: fc

Array cookie: ac

Intra object redzone: bb

ASan internal: fe

Left alloca redzone: ca

Right alloca redzone: cb

==36==ABORTING

```

6. Executing the remaining crashes gives similar crash reports

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94298

Approved by: https://github.com/davidberard98

- To check for Memory Leaks in `test_mps.py`, set the env-variable `PYTORCH_TEST_MPS_MEM_LEAK_CHECK=1` when running test_mps.py (used CUDA code as reference).

- Added support for the following new python interfaces in MPS module:

`torch.mps.[empty_cache(), set_per_process_memory_fraction(), current_allocated_memory(), driver_allocated_memory()]`

- Renamed `_is_mps_on_macos_13_or_newer()` to `_mps_is_on_macos_13_or_newer()`, and `_is_mps_available()` to `_mps_is_available()` to be consistent in naming with prefix `_mps`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94646

Approved by: https://github.com/malfet

Summary:

We have tests testing package level migration correctness for torch AO migration. After reading the code, I noticed that these tests are not testing anything additional on top of the function level tests we already have. An upcoming user warning PR will break this test, and it doesn't seem worth fixing. As long as the function level tests pass, 100% of user functionality will be tested. Removing this in a separate PR to keep PRs small.

Test plan:

```

python test/test_quantization.py -k AOMigration

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94422

Approved by: https://github.com/jcaip

Summary:

This test case is dead code. A newer version of this code exists in `test/quantization/ao_migration/test_quantization.py`. I think this class must have been mistakenly left during a refactor. Deleting it.

Test plan: CI

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94420

Approved by: https://github.com/jerryzh168

Hopefully fixes #89205.

This is another version of #90847, which was reverted because it increased the compile time significantly.

From my discussion with @ngimel in https://github.com/pytorch/pytorch/pull/93153#issuecomment-1409051528, it seems the option of jiterator would be very tricky if not impossible.

So what I did was optimize the compile time on my machine.

To optimize the build time, I first compiled PyTorch as a whole, then changed only the `LogcumsumexpKernel.cu` file to see how the change affects the compile time.

Here are my results for the compilation time of only the `LogcumsumexpKernel.cu` file on my machine:

- Original version (without any complex implementations): 56s (about 1 minute)

- The previous PR (#90847): 13m 57s (about 14 minutes)

- This PR: 3m 35s (about 3.5 minutes)

If the previous PR increased the build time by 30 minutes on PyTorch's build machines, then this PR should reduce that increase to about 6 minutes. Hopefully this is an acceptable level of build-time increase.

What I did (sorted from the most to the least significant build-time reduction):

- Substituting `log(x)` with `log1p(x - 1)`. This is applied in the infinite case, so we don't really care about precision.

- Implementing complex exponential manually
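For reference, the math behind both items can be checked in plain Python. This is only a sketch of the identities, not the CUDA kernel code from this PR; the function names are hypothetical.

```python
import cmath
import math


def manual_complex_exp(z):
    """exp(a + bi) = exp(a) * (cos(b) + i * sin(b)), using only real-valued functions."""
    magnitude = math.exp(z.real)
    return complex(magnitude * math.cos(z.imag), magnitude * math.sin(z.imag))


def log_via_log1p(x):
    """log(x) rewritten as log1p(x - 1); mathematically the same value for x > 0."""
    return math.log1p(x - 1.0)


z = complex(0.5, 2.0)
diff = abs(manual_complex_exp(z) - cmath.exp(z))
```

Expressing the complex exponential through real `exp`, `cos`, and `sin` avoids calling a complex `exp` overload, which is the kind of substitution the list above describes.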

tag: @malfet, @albanD

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94310

Approved by: https://github.com/Skylion007, https://github.com/malfet

- This PR is a prerequisite for the upcoming Memory Leak Detection PR.

- Enable global manual seeding via `torch.manual_seed()` + test case

- Add `torch.mps.synchronize()` to wait for MPS stream to finish + test case

- Enable the following python interfaces for MPS:

`torch.mps.[get_rng_state(), set_rng_state(), synchronize(), manual_seed(), seed()]`

- Added some test cases in test_mps.py

- Added `mps.rst` to document the `torch.mps` module.

- Fixed the failure with `test_public_bindings.py`

Description of new files added:

- `torch/csrc/mps/Module.cpp`: implements `torch._C` module functions for `torch.mps` and `torch.backends.mps`.

- `torch/mps/__init__.py`: implements Python bindings for `torch.mps` module.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94417

Approved by: https://github.com/albanD

This is another attempt (the first was https://github.com/pytorch/pytorch/pull/94172) to fix the warning message when running the inductor CPU path:

```

l. Known situations this can occur are inference mode only compilation involving resize_ or prims (!schema.hasAnyAliasInfo() INTERNAL ASSERT FAILED); if your situation looks different please file a bug to PyTorch.

Traceback (most recent call last):

File "/home/xiaobing/pytorch-offical/torch/_functorch/aot_autograd.py", line 1377, in aot_wrapper_dedupe

fw_metadata, _out = run_functionalized_fw_and_collect_metadata(flat_fn)(

File "/home/xiaobing/pytorch-offical/torch/_functorch/aot_autograd.py", line 578, in inner

flat_f_outs = f(*flat_f_args)

File "/home/xiaobing/pytorch-offical/torch/_functorch/aot_autograd.py", line 2455, in functional_call

out = Interpreter(mod).run(*args[params_len:], **kwargs)

File "/home/xiaobing/pytorch-offical/torch/fx/interpreter.py", line 136, in run

self.env[node] = self.run_node(node)

File "/home/xiaobing/pytorch-offical/torch/fx/interpreter.py", line 177, in run_node

return getattr(self, n.op)(n.target, args, kwargs)

File "/home/xiaobing/pytorch-offical/torch/fx/interpreter.py", line 294, in call_module

return submod(*args, **kwargs)

File "/home/xiaobing/pytorch-offical/torch/nn/modules/module.py", line 1501, in _call_impl

return forward_call(*args, **kwargs)

File "/home/xiaobing/pytorch-offical/torch/_inductor/mkldnn.py", line 344, in forward

return self._conv_forward(input, other, self.weight, self.bias)

File "/home/xiaobing/pytorch-offical/torch/_inductor/mkldnn.py", line 327, in _conv_forward

return torch.ops.mkldnn._convolution_pointwise_(

File "/home/xiaobing/pytorch-offical/torch/_ops.py", line 499, in __call__

return self._op(*args, **kwargs or {})

File "/home/xiaobing/pytorch-offical/torch/_inductor/overrides.py", line 38, in __torch_function__

return func(*args, **kwargs)

File "/home/xiaobing/pytorch-offical/torch/_ops.py", line 499, in __call__

return self._op(*args, **kwargs or {})

RuntimeError: !schema.hasAnyAliasInfo() INTERNAL ASSERT FAILED at "/home/xiaobing/pytorch-offical/aten/src/ATen/FunctionalizeFallbackKernel.cpp":32, please report a bug to PyTorch. mutating and aliasing ops should all have codegen'd kernels

While executing %self_layer2_0_downsample_0 : [#users=2] = call_module[target=self_layer2_0_downsample_0](args = (%self_layer1_1_conv2, %self_layer2_0_conv2), kwargs = {})

Original traceback:

File "/home/xiaobing/vision/torchvision/models/resnet.py", line 100, in forward

identity = self.downsample(x)

| File "/home/xiaobing/vision/torchvision/models/resnet.py", line 274, in _forward_impl

x = self.layer2(x)

| File "/home/xiaobing/vision/torchvision/models/resnet.py", line 285, in forward

return self._forward_impl(x)

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94581

Approved by: https://github.com/jgong5, https://github.com/jansel

Fixes #87219

Implements a new ``repeat_interleave`` function in ``aten/src/ATen/native/mps/operations/Repeat.mm``

Adds it to ``aten/src/ATen/native/native_functions.yaml``

Adds a new test ``test_repeat_interleave`` to ``test/test_mps.py``

Pull Request resolved: https://github.com/pytorch/pytorch/pull/88649

Approved by: https://github.com/kulinseth

Applies the remaining flake8-comprehension fixes and checks. These changes replace all remaining unnecessary generator expressions with list/dict/set comprehensions, which are more succinct, performant, and better supported by our torch.jit compiler. They also remove useless generators such as `set(a for a in b)`, resolving them into just the set call.
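A few before/after pairs of the kind of rewrite described above, using made-up data. These are illustrative and not taken from the PR diff.

```python
b = [3, 1, 3, 2]

# Useless generator: the generator only re-yields the elements of b.
before_set = set(a for a in b)
after_set = set(b)

# Generator passed to list(): a list comprehension says the same thing directly.
before_list = list(x * 2 for x in b)
after_list = [x * 2 for x in b]

# Generator of pairs passed to dict(): a dict comprehension is more direct.
before_dict = dict((x, x * x) for x in b)
after_dict = {x: x * x for x in b}
```

Each pair produces the same value; the rewritten form skips the intermediate generator object.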

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94676

Approved by: https://github.com/ezyang

This is my commandeer of https://github.com/pytorch/pytorch/pull/82154 with a couple extra fixes.

The high level idea is that when we start profiling we see python frames which are currently executing, but we don't know what system TID created them. So instead we defer the TID assignment, and then during post processing we peer into the future and use the system TID *of the next* call on that Python TID.
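The "peer into the future" step can be sketched as a backward pass over recorded events. This is a simplified model, not the profiler's actual data structures: events are assumed to be `(python_tid, system_tid)` tuples in time order, with `None` for frames whose system TID was unknown when profiling started, and `assign_system_tids` is a hypothetical name.

```python
def assign_system_tids(events):
    """Fill in missing system TIDs using the next known TID for the same Python TID."""
    next_known = {}  # python_tid -> system TID seen most recently while walking backward
    resolved = [None] * len(events)
    for i in range(len(events) - 1, -1, -1):
        python_tid, system_tid = events[i]
        if system_tid is not None:
            next_known[python_tid] = system_tid
        # Events with no later call on the same Python TID stay unresolved (None).
        resolved[i] = (python_tid, next_known.get(python_tid))
    return resolved


events = [(1, None), (2, None), (1, 111), (2, 222), (1, 111)]
resolved = assign_system_tids(events)
```

Walking backward means each event sees the system TID of the nearest *later* call on its Python TID, which matches the deferred assignment described above.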

As an aside, it turns out that CPython does some bookkeeping (ee821dcd39/Include/cpython/pystate.h (L159-L165), thanks @dzhulgakov for the pointer), but you'd have to do some extra work at runtime to know how to map their TID to ours so for now I'm going to stick to what I can glean from post processing alone.

As we start observing more threads it becomes more important to be principled about how we start up and shut down. (Since threads may die while the profiler is running.) #82154 had various troubles with segfaults that wound up being related to accessing Python thread pointers which were no longer alive. I've tweaked the startup and shutdown interaction with the CPython interpreter and it should be safer now.

Differential Revision: [D42336292](https://our.internmc.facebook.com/intern/diff/D42336292/)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/91684

Approved by: https://github.com/chaekit

Summary:

Update XNNPACK to 51a987591a6fc9f0fc0707077f53d763ac132cbf (51a987591a)

Update the corresponding CMake and BUCK rules, as well as the generate_wrapper.py for the new version.

XNNPACK has changed substantially since our last update: the upstream community has refactored the code, including API changes, as the many changes to its CMake files show. We need to update now to keep up with upstream, which is crucial for our future development, and because many projects rely on newer versions of XNNPACK. This PR therefore updates the XNNPACK third-party library, adapts our code to the XNNPACK API changes, and updates the build target files and `generate_wrapper.py` to make the process more automatic. The original target files were missing some files, so we add them to the buck2 build files so that XNNPACK builds and tests successfully.

Test Plan:

buck2 build //xplat/third-party/XNNPACK:operators

buck2 build //xplat/third-party/XNNPACK:XNNPACK

buck2 test fbcode//caffe2/test:xnnpack_integration

Reviewed By: digantdesai

Differential Revision: D43092938

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94330

Approved by: https://github.com/digantdesai, https://github.com/albanD

Currently we don't enable fusion of mutation ops in any case (we introduce a `StarDep` to prevent fusion with any upstream readers, to ensure the kernel mutating the buffer is executing after them).

This results in cases like [this](https://gist.github.com/mlazos/3dcfd416033b3459ffea43cb91c117c9) where even though all of the other readers have been fused into a single kernel, the `copy_` is left by itself.

This PR introduces `WeakDep` and a pass after each fusion that checks whether other dependencies on the upstream fused node already guarantee that this kernel runs after the prior readers. If they do, the `WeakDep` is pruned and the kernel performing the mutation can be fused with the upstream kernel. This will allow Inductor to fuse epilogue `copy_`s introduced by functionalization on inference graphs.
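
The pruning idea can be sketched with a toy model. This is not Inductor's scheduler code: `prune_weak_deps`, the node names, and the `fused_into` mapping are all assumptions made for the example.

```python
def prune_weak_deps(strong_deps, weak_deps, fused_into):
    """Drop weak deps whose target now lives in a node we already strongly depend on.

    strong_deps / weak_deps: sets of node names the mutating kernel depends on.
    fused_into: maps an original node name to the fused node that contains it.
    """
    strong_targets = {fused_into.get(name, name) for name in strong_deps}
    return {
        name
        for name in weak_deps
        if fused_into.get(name, name) not in strong_targets
    }


# The mutating kernel reads from "producer" and must run after three readers.
fused_into = {"producer": "fused0", "reader_a": "fused0", "reader_b": "fused0"}
remaining = prune_weak_deps(
    strong_deps={"producer"},
    weak_deps={"reader_a", "reader_b", "reader_c"},
    fused_into=fused_into,
)
```

Here the ordering against `reader_a` and `reader_b` is already implied by the strong dependency on `fused0`, so only the weak dependency on `reader_c` survives.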

[before code](https://gist.github.com/mlazos/3369a11dfd1b5cf5bb255313b710ef5b)

[after code](https://gist.github.com/mlazos/1005d8aeeba56e3a3e1b70cd77773c53)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94110

Approved by: https://github.com/jansel

I applied some flake8 fixes and enabled checking for them in the linter. I also enabled some checks for my previous comprehensions PR.

This is a follow up to #94323 where I enable the flake8 checkers for the fixes I made and fix a few more of them.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94601

Approved by: https://github.com/ezyang

- This PR is a prerequisite for the upcoming Memory Leak Detection PR.

- Enable global manual seeding via `torch.manual_seed()` + test case

- Add `torch.mps.synchronize()` to wait for MPS stream to finish + test case

- Enable the following python interfaces for MPS:

`torch.mps.[get_rng_state(), set_rng_state(), synchronize(), manual_seed(), seed()]`

- Added some test cases in test_mps.py

- Added `mps.rst` to document the `torch.mps` module.

- Fixed the failure with `test_public_bindings.py`

Description of new files added:

- `torch/csrc/mps/Module.cpp`: implements `torch._C` module functions for `torch.mps` and `torch.backends.mps`.

- `torch/mps/__init__.py`: implements Python bindings for `torch.mps` module.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94417

Approved by: https://github.com/albanD

Fixes #87374

@kulinseth and @albanD This makes the MPSAllocator call the MPSAllocatorCallbacks when it tries to get a free buffer and the first allocation attempt fails. Users can register callbacks that might free a few buffers, after which the allocation is retried.

The reason why we need the `recursive_mutex` is that since callbacks are supposed to free memory, they will eventually call free_buffer(), which will lock the same `mutex` that's used for allocation. This approach is similar to what's used with the `FreeMemoryCallback` in the `CUDACachingAllocator`.
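
The locking pattern can be sketched in Python, with `threading.RLock` standing in for `recursive_mutex`. `ToyAllocator` and its methods are hypothetical and only mirror the shape of the problem, not the MPSAllocator implementation.

```python
import threading


class ToyAllocator:
    def __init__(self, capacity):
        self._lock = threading.RLock()  # re-entrant: the owning thread may lock it again
        self._capacity = capacity
        self._in_use = {}               # buffer id -> size
        self._callbacks = []
        self._next_id = 0

    def register_callback(self, fn):
        self._callbacks.append(fn)

    def free_buffer(self, buf_id):
        with self._lock:                # same lock that allocate() holds
            self._in_use.pop(buf_id, None)

    def _try_alloc(self, size):
        if sum(self._in_use.values()) + size > self._capacity:
            return None
        buf_id = self._next_id
        self._next_id += 1
        self._in_use[buf_id] = size
        return buf_id

    def allocate(self, size):
        with self._lock:
            buf_id = self._try_alloc(size)
            if buf_id is None:
                for callback in self._callbacks:
                    callback()          # may call free_buffer() and re-acquire the lock
                buf_id = self._try_alloc(size)
            if buf_id is None:
                raise MemoryError("allocation failed even after running callbacks")
            return buf_id


allocator = ToyAllocator(capacity=10)
first = allocator.allocate(8)
allocator.register_callback(lambda: allocator.free_buffer(first))
second = allocator.allocate(6)  # first try fails, callback frees `first`, retry succeeds
```

With a plain `threading.Lock` the callback would block forever inside `free_buffer()`, because the allocating thread already holds the lock.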

This PR tries to be as minimal as possible, but there could be some additional improvements and cleanups, such as:

- In current main, there's no way callbacks can be called, so we could probably rename the callback registry to something that reflects the naming in the CudaAllocator:

996cc1c0d0/c10/cuda/CUDACachingAllocator.h (L14-L24)

- Review the EventTypes here:

996cc1c0d0/aten/src/ATen/mps/MPSAllocator.h (L18-L23)

- And IMHO a nice improvement would be if callbacks could be aware of AllocParams, so they can decide to be more aggressive or not depending on how much memory is requested. So I'd pass AllocParams in the signature of the executeCallback instance:

996cc1c0d0/aten/src/ATen/mps/MPSAllocator.h (L25)

Let me know if you think we could sneak those changes into this PR or if it's better to propose them in other smaller PR's.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94133

Approved by: https://github.com/kulinseth, https://github.com/razarmehr, https://github.com/albanD

The other `Autograd[Backend]` keys all have fallthrough kernels registered to them, but `AutogradMeta` was missing the fallthrough kernel.

This is a problem for custom ops that don't have autograd support, if you try to run them with meta tensors. If you have a custom op, and register a CPU and a Meta kernel, then:

(1) if you run the op with cpu tensors, it will dispatch straight to the CPU kernel (as expected)

(2) if you run the op with meta tensors, you will error - because we don't have a fallthrough registered to the AutogradMeta key, we will try to dispatch to the AutogradMeta key and error, since the op author hasn't provided an autograd implementation.

Here's a repro that I confirmed now works:

```
import torch
from torch._dispatch.python import enable_python_dispatcher
from torch._subclasses.fake_tensor import FakeTensorMode

lib = torch.library.Library("test", "DEF")
impl_cpu = torch.library.Library("test", "IMPL", "CPU")
impl_meta = torch.library.Library("test", "IMPL", "Meta")

def foo_impl(x):
    return x + 1

lib.define("foo(Tensor a) -> Tensor")
impl_meta.impl("foo", foo_impl)
impl_cpu.impl("foo", foo_impl)

with enable_python_dispatcher():
    a = torch.ones(2, device='meta')
    print("@@@@@")
    b = torch.ops.test.foo.default(a)
    print(b)
```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94603

Approved by: https://github.com/ezyang, https://github.com/albanD

Fixes #88951

The output shape of upsample is computed through `(i64)idim * (double)scale` and then cast back to `i64`. If the input scale is ill-formed (say, a negative number as in #88951), so that `(double)(idim * scale)` is out of range for `i64`, the cast is undefined behaviour.

To fix it, we just check if `(double)(idim * scale)` can fit into `i64`.
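
A Python sketch of such a range check, for illustration only. `checked_output_size` is a hypothetical helper, and the real fix lives in the C++ upsample code; this sketch checks only that the product fits, not whether the scale is otherwise sensible.

```python
import math


def checked_output_size(idim: int, scale: float) -> int:
    """Return int(idim * scale) only if the product fits into a signed 64-bit integer."""
    product = float(idim) * scale
    # 2.0 ** 63 is exactly representable as a double while 2**63 - 1 is not,
    # so the upper bound is written as a strict comparison against 2.0 ** 63.
    if not math.isfinite(product) or not (-(2.0 ** 63) <= product < 2.0 ** 63):
        raise ValueError(f"output size {product} does not fit into int64")
    return int(product)  # truncates toward zero, like the C++ cast
```

For example, `checked_output_size(4, 2.5)` yields `10`, while a scale such as `1e30` is rejected instead of being cast.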

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94290

Approved by: https://github.com/malfet

Summary:

- Remove redundant bool casts from scatter/gather

- Make the workarounds for scatter/gather (for bool/uint8 data types) OS specific - use them only in macOS Monterey, ignore them starting with macOS Ventura

- Make all tensors ranked in scatter

Fixes following tests:

```

test_output_match_slice_scatter_cpu_bool

test_output_match_select_scatter_cpu_bool

test_output_match_diagonal_scatter_cpu_bool

test_output_match_repeat_cpu_bool

test_output_match_rot90_cpu_bool

etc..

```

Still failing on macOS Monterey (needs additional investigation):

```

test_output_match_scatter_cpu_bool

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94464

Approved by: https://github.com/kulinseth

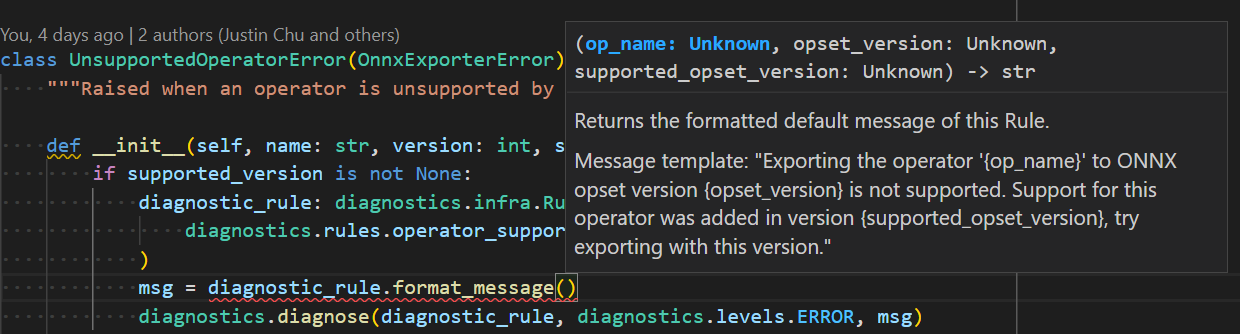

* CI Test environment to install onnx and onnx-script.

* Add symbolic function for `bitwise_or`, `convert_element_type` and `masked_fill_`.

* Update symbolic function for `slice` and `arange`.

* Update .pyi signature for `_jit_pass_onnx_graph_shape_type_inference`.

Co-authored-by: Wei-Sheng Chin <wschin@outlook.com>

Co-authored-by: Ti-Tai Wang <titaiwang@microsoft.com>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94564

Approved by: https://github.com/abock

- Also fix FP16 correctness issues in several other ops by lowering their FP16 precision in the new list `FP16_LOW_PRECISION_LIST`.

- Add atol/rtol to the `AssertEqual()` of Gradient tests.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94567

Approved by: https://github.com/kulinseth

# Summary

- Adds type hinting support for SDPA

- Updates the documentation adding warnings and notes on the context manager

- Adds scaled_dot_product_attention to the non-linear activation function section of nn.functional docs

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94008

Approved by: https://github.com/cpuhrsch

To match nodes within the graph, the matcher currently flattens the arguments and compares them pairwise. However, if it believes that a list input contains only literals, it does not flatten the list and instead compares the lists directly. It decides whether a list is all literals by checking whether the first element is a node. However, this doesn't work in some cases (like the test cases I added).
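
One way the first-element heuristic can go wrong is a list that mixes literals and nodes. The sketch below assumes that this is the failing shape; `Node` and both helper functions are hypothetical stand-ins, not the matcher's code.

```python
class Node:
    """Hypothetical stand-in for a graph node."""

    def __init__(self, name):
        self.name = name


def is_literal_list_first_only(items):
    # Heuristic described above: only the first element is inspected.
    return len(items) > 0 and not isinstance(items[0], Node)


def is_literal_list_all(items):
    # Stricter check: every element must be a non-node.
    return all(not isinstance(item, Node) for item in items)


mixed = [1, Node("x")]
first_only_says_literal = is_literal_list_first_only(mixed)
all_says_literal = is_literal_list_all(mixed)
```

For `mixed`, the first-element check reports a literal list even though the second element is a node, while the stricter check does not.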

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94375

Approved by: https://github.com/SherlockNoMad

Fixes #88940

According to the [doc](https://pytorch.org/docs/stable/generated/torch.index_select.html):

1. "The returned tensor has the same number of dimensions as the original tensor (`input`). "

2. "The `dim`th dimension has the same size as the length of `index`; other dimensions have the same size as in the original tensor."

These two conditions cannot be satisfied at the same time if the `input` is a scalar && `index` has multiple values: because a scalar at most holds one element (according to property 1, the output is a scalar), it is impossible to satisfy "The `dim`th dimension has the same size as the length of `index`" when `index` has multiple values.

However, currently, if we do so we either get:

1. Buffer overflow with ASAN;

2. Or (w/o ASAN) a silently returned output that is not consistent with the doc (`x.index_select(0, torch.Tensor([0, 0, 0]).int())` returns `x`).

As a result, we should explicitly reject such cases.
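
The rejected case can be expressed as a small shape check. This is a sketch of the rule stated above, with a hypothetical function name and error message; the actual check is implemented in the C++ kernels.

```python
def check_index_select_shapes(input_ndim: int, index_len: int) -> None:
    """Reject a scalar input combined with an index that holds multiple values."""
    if input_ndim == 0 and index_len > 1:
        raise IndexError(
            f"index_select(): a scalar input cannot be indexed with {index_len} values"
        )
```

A scalar with a single-element index and any non-scalar input pass through unchanged.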

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94347

Approved by: https://github.com/malfet

### Motivation of this PR

This patch migrates `spmm_reduce` from `torch-sparse` (a 3rd party dependency for PyG) to `torch`, in response to the initial proposal for fusing **Gather, Apply, Scatter** in Message Passing for GNN inference/training: https://github.com/pytorch/pytorch/issues/71300

**GAS** is the major step of Message Passing. Its behavior falls into 2 kinds depending on the storage type of `EdgeIndex`, which records the connections between nodes:

* COO: the hotspot is `scatter_reduce`

* CSR: the hotspot is `spmm_reduce`

The reduce type can be chosen from: "sum", "mean", "max", "min".

This PR extends `torch.sparse.mm` with a `reduce` argument, which maps to `torch.sparse_mm.reduce` internally.

`sparse_mm_reduce` is registered under the TensorTypeId of `SparseCsrCPU`, and this operator requires an internal interface `_sparse_mm_reduce_impl` which has dual outputs:

* `out` - the actual output

* `arg_out` - records the output indices in the non-zero elements if the reduce type is "max" or "min". This is only useful for training, so it is not calculated for inference.
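
To make the semantics concrete, here is a pure-Python reference sketch of a CSR sparse-dense product with a per-row reduction. It is not the kernel added by this PR: the function name, the list-based layout, and the choice to store in `arg_out` the position of the winning non-zero element are assumptions for illustration.

```python
def spmm_reduce_reference(crow_indices, col_indices, values, dense, reduce="sum"):
    """CSR (rows x K) times dense (K x N), reducing each row's products with `reduce`."""
    if reduce not in ("sum", "mean", "max", "min"):
        raise ValueError(f"unsupported reduce type: {reduce}")
    n_rows = len(crow_indices) - 1
    n_cols = len(dense[0])
    out = [[0.0] * n_cols for _ in range(n_rows)]
    track_arg = reduce in ("max", "min")
    arg_out = [[None] * n_cols for _ in range(n_rows)] if track_arg else None
    for row in range(n_rows):
        start, end = crow_indices[row], crow_indices[row + 1]
        if start == end:
            continue  # empty row: output stays zero in this sketch
        for j in range(n_cols):
            products = [values[e] * dense[col_indices[e]][j] for e in range(start, end)]
            if reduce == "sum":
                out[row][j] = sum(products)
            elif reduce == "mean":
                out[row][j] = sum(products) / len(products)
            else:
                pick = max if reduce == "max" else min
                best = pick(range(len(products)), key=products.__getitem__)
                out[row][j] = products[best]
                arg_out[row][j] = start + best  # index into the non-zero arrays
    return out, arg_out


# Sparse matrix [[1, 0, 2], [0, 0, 0], [0, 3, 0]] in CSR form.
crow, col, val = [0, 2, 2, 3], [0, 2, 1], [1.0, 2.0, 3.0]
dense = [[1.0, 4.0], [2.0, 5.0], [3.0, 6.0]]
sum_out, _ = spmm_reduce_reference(crow, col, val, dense, "sum")
max_out, max_arg = spmm_reduce_reference(crow, col, val, dense, "max")
```

The "sum" case is the ordinary sparse matrix product; the other reduce types replace the row-wise summation with a mean, maximum, or minimum over the same products.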

### Performance

Benchmarked on GCN for ogbn-products on a single-socket Xeon, the workload is improved by `4.3x` with this patch.

The performance benefit for training will be bigger: the original backward impl for `sum|mean` is sequential, and the original backward impl for `max|min` is not fused.

#### before:

```
-----------------------------  ------------  ------------  ------------  ------------  ------------  ------------
Name                           Self CPU %    Self CPU      CPU total %   CPU total     CPU time avg  # of Calls
-----------------------------  ------------  ------------  ------------  ------------  ------------  ------------
torch_sparse::spmm_sum         97.09%        56.086s       97.09%        56.088s       6.232s        9
aten::linear                   0.00%         85.000us      1.38%         795.485ms     88.387ms      9
aten::matmul                   0.00%         57.000us      1.38%         795.260ms     88.362ms      9
aten::mm                       1.38%         795.201ms     1.38%         795.203ms     88.356ms      9
aten::relu                     0.00%         50.000us      0.76%         440.434ms     73.406ms      6
aten::clamp_min                0.76%         440.384ms     0.76%         440.384ms     73.397ms      6
aten::add_                     0.57%         327.801ms     0.57%         327.801ms     36.422ms      9
aten::log_softmax              0.00%         23.000us      0.10%         55.503ms      18.501ms      3
```

#### after

```
-----------------------------  ------------  ------------  ------------  ------------  ------------  ------------
Name                           Self CPU %    Self CPU      CPU total %   CPU total     CPU time avg  # of Calls
-----------------------------  ------------  ------------  ------------  ------------  ------------  ------------
aten::spmm_sum                 87.35%        11.826s       87.36%        11.827s       1.314s        9
aten::linear                   0.00%         92.000us      5.87%         794.451ms     88.272ms      9
aten::matmul                   0.00%         62.000us      5.87%         794.208ms     88.245ms      9
aten::mm                       5.87%         794.143ms     5.87%         794.146ms     88.238ms      9
aten::relu                     0.00%         53.000us      3.35%         452.977ms     75.496ms      6
aten::clamp_min                3.35%         452.924ms     3.35%         452.924ms     75.487ms      6
aten::add_                     2.58%         348.663ms     2.58%         348.663ms     38.740ms      9
aten::argmax                   0.42%         57.473ms      0.42%         57.475ms      14.369ms      4
aten::log_softmax              0.00%         22.000us      0.39%         52.605ms      17.535ms      3
```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/83727

Approved by: https://github.com/jgong5, https://github.com/cpuhrsch, https://github.com/rusty1s, https://github.com/pearu

`combine_t` is the type used to represent the number of elements seen so far as

a floating point value (acc.nf). It is always used in calculations with other

values of type `acc_scalar_t` so there is no performance gained by making this a

separate template argument. Furthermore, when calculating the variance on CUDA

it is always set to `float` which means values are unnecessarily truncated

before being immediately promoted to `double`.
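
To show where the element count enters the arithmetic, here is a sketch of a Welford-style combine step in Python. It is an illustration under assumptions, not the ATen code: `WelfordData`, `combine`, and `from_values` are hypothetical names, and Python floats are always double precision, so the truncation issue itself is not reproduced.

```python
import statistics
from dataclasses import dataclass


@dataclass
class WelfordData:
    mean: float = 0.0
    m2: float = 0.0  # sum of squared deviations from the mean
    nf: float = 0.0  # number of elements seen so far, kept as a float


def combine(a: WelfordData, b: WelfordData) -> WelfordData:
    """Merge two partial results; `nf` is mixed directly into the float arithmetic."""
    if a.nf == 0:
        return b
    if b.nf == 0:
        return a
    nf = a.nf + b.nf
    delta = b.mean - a.mean
    mean = a.mean + delta * (b.nf / nf)
    m2 = a.m2 + b.m2 + delta * delta * a.nf * b.nf / nf
    return WelfordData(mean, m2, nf)


def from_values(values) -> WelfordData:
    acc = WelfordData()
    for value in values:
        acc = combine(acc, WelfordData(mean=float(value), m2=0.0, nf=1.0))
    return acc


merged = combine(from_values([1, 2, 3, 4]), from_values([10, 20]))
variance = merged.m2 / merged.nf
```

Because `nf` only ever appears in expressions with `mean` and `m2`, giving it the same type as those accumulators costs nothing.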

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94522

Approved by: https://github.com/ngimel

Per @ezyang's advice, added magic sym_int method. This works for 1.0 * s0 optimization, but can't evaluate `a>0` for some args, and still misses some optimization that model rewrite achieves, so swin still fails

(rewrite replaces `B = int(windows.shape[0] / (H * W / window_size / window_size))` with `B = (windows.shape[0] // int(H * W / window_size / window_size))` and model passes)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94365

Approved by: https://github.com/ezyang

Fixes batchnorm forward/backward pass and layer_norm:

Batchnorm Forward pass:

```

- fix batch_norm_mps_out key

- return 1/sqrt(var+epsilon) instead of var

- return empty tensor for mean and var if train is not enabled

- remove native_batch_norm from block list

```

Batchnorm Backward pass:

```

- add revert calculation for save_var used in backward path

- add backward test for native_batch_norm and _native_batch_norm_legit

```
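
The relation between the two quantities can be sketched as follows. This assumes that the "revert calculation" means recovering the variance from the saved `1/sqrt(var+epsilon)` value; the helper names are hypothetical.

```python
import math


def var_to_invstd(var: float, eps: float) -> float:
    """Value saved by the forward pass: 1 / sqrt(var + eps)."""
    return 1.0 / math.sqrt(var + eps)


def invstd_to_var(invstd: float, eps: float) -> float:
    """Inverse of var_to_invstd: recover var from the saved inverse standard deviation."""
    return 1.0 / (invstd * invstd) - eps


eps = 1e-5
saved = var_to_invstd(4.0, eps)
recovered = invstd_to_var(saved, eps)
```

The round trip recovers the original variance up to floating-point error.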

Layer norm:

```

- remove the duplicate calculation from layer_norm_mps

- enable native_layer_norm backward test

- raise atol rtol for native_layer_norm

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94351

Approved by: https://github.com/razarmehr

Hi!

I've been fuzzing different pytorch modules, and found a few crashes.

Specifically, I'm talking about `schema_type_parser.cpp` and `irparser.cpp`. Inside these files, different standard conversion functions are used (such as `stoll`, `stoi`, `stod`, `stoull`). However, default `std` exceptions, such as `std::out_of_range`, `std::invalid_argument`, are not handled.

Some of the crash-files:

1. [crash-493db74c3426e79b2bf0ffa75bb924503cb9acdc.zip](https://github.com/pytorch/pytorch/files/10237616/crash-493db74c3426e79b2bf0ffa75bb924503cb9acdc.zip) - crash source: schema_type_parser.cpp:272

2. [crash-67bb5d34ca48235687cc056e2cdeb2476b8f4aa5.zip](https://github.com/pytorch/pytorch/files/10237618/crash-67bb5d34ca48235687cc056e2cdeb2476b8f4aa5.zip) - crash source: schema_type_parser.cpp:240

3. [crash-0157bca5c41bffe112aa01f3b0f2099ca4bcc62f.zip](https://github.com/pytorch/pytorch/files/10307970/crash-0157bca5c41bffe112aa01f3b0f2099ca4bcc62f.zip) - crash source: schema_type_parser.cpp:179

4. [crash-430da923e56adb9569362efa7fa779921371b710.zip](https://github.com/pytorch/pytorch/files/10307972/crash-430da923e56adb9569362efa7fa779921371b710.zip) - crash source: schema_type_parser.cpp:196

The provided patch adds exception handlers for `std::invalid_argument` and `std::out_of_range`, to rethrow these exceptions with `ErrorReport`.
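
The same pattern, shown as a Python analogue rather than the C++ patch itself: a low-level conversion error is caught and re-raised as a parser error that carries context. `ParseError` and `parse_int64` are hypothetical names; the explicit range check stands in for `std::out_of_range`, since Python integers do not overflow.

```python
class ParseError(Exception):
    """Hypothetical parser error carrying the offending token and its position."""


def parse_int64(token: str, position: int) -> int:
    try:
        value = int(token)
    except ValueError as exc:  # plays the role of std::invalid_argument
        raise ParseError(
            f"invalid integer literal {token!r} at offset {position}"
        ) from exc
    if not -(2 ** 63) <= value < 2 ** 63:  # plays the role of std::out_of_range
        raise ParseError(
            f"integer literal {token!r} at offset {position} is out of range"
        )
    return value
```

A caller then only has to handle one error type, and malformed input no longer terminates the process.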

### How to reproduce

1. To reproduce the crash, use provided docker: [Dockerfile](https://github.com/ispras/oss-sydr-fuzz/blob/master/projects/pytorch/Dockerfile)

2. Build the container: `docker build -t oss-sydr-fuzz-pytorch-reproduce .`

3. Copy crash file to the current directory

4. Run the container: ``docker run --privileged --network host -v `pwd`:/homedir --rm -it oss-sydr-fuzz-pytorch-reproduce /bin/bash``

5. And execute the binary: `/irparser_fuzz /homedir/crash-67bb5d34ca48235687cc056e2cdeb2476b8f4aa5`

After execution completes you will see this error message:

```txt

terminate called after throwing an instance of 'std::out_of_range'

what(): stoll

```

And this stacktrace:

```asan

==9626== ERROR: libFuzzer: deadly signal

#0 0x5b4cf1 in __sanitizer_print_stack_trace /llvm-project/compiler-rt/lib/asan/asan_stack.cpp:87:3

#1 0x529627 in fuzzer::PrintStackTrace() /llvm-project/compiler-rt/lib/fuzzer/FuzzerUtil.cpp:210:5

#2 0x50f833 in fuzzer::Fuzzer::CrashCallback() /llvm-project/compiler-rt/lib/fuzzer/FuzzerLoop.cpp:233:3

#3 0x7ffff7c3741f (/lib/x86_64-linux-gnu/libpthread.so.0+0x1441f)

#4 0x7ffff7a5700a in raise (/lib/x86_64-linux-gnu/libc.so.6+0x4300a)

#5 0x7ffff7a36858 in abort (/lib/x86_64-linux-gnu/libc.so.6+0x22858)

#6 0x7ffff7e74910 (/lib/x86_64-linux-gnu/libstdc++.so.6+0x9e910)

#7 0x7ffff7e8038b (/lib/x86_64-linux-gnu/libstdc++.so.6+0xaa38b)

#8 0x7ffff7e803f6 in std::terminate() (/lib/x86_64-linux-gnu/libstdc++.so.6+0xaa3f6)

#9 0x7ffff7e806a8 in __cxa_throw (/lib/x86_64-linux-gnu/libstdc++.so.6+0xaa6a8)

#10 0x7ffff7e7737d in std::__throw_out_of_range(char const*) (/lib/x86_64-linux-gnu/libstdc++.so.6+0xa137d)

#11 0xbd0579 in long long __gnu_cxx::__stoa<long long, long long, char, int>(long long (*)(char const*, char**, int), char const*, char const*, unsigned long*, int) /usr/bin/../lib/gcc/x86_64-linux-gnu/10/../../../../include/c++/10/ext/string_conversions.h:86:2

#12 0xc10f9c in std::__cxx11::stoll(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, unsigned long*, int) /usr/bin/../lib/gcc/x86_64-linux-gnu/10/../../../../include/c++/10/bits/basic_string.h:6572:12

#13 0xc10f9c in torch::jit::SchemaTypeParser::parseRefinedTensor()::$_2::operator()() const::'lambda'()::operator()() const /pytorch_fuzz/torch/csrc/jit/frontend/schema_type_parser.cpp:240:25

#14 0xc10f9c in void c10::function_ref<void ()>::callback_fn<torch::jit::SchemaTypeParser::parseRefinedTensor()::$_2::operator()() const::'lambda'()>(long) /pytorch_fuzz/c10/util/FunctionRef.h:43:12

#15 0xbfbb27 in torch::jit::SchemaTypeParser::parseList(int, int, int, c10::function_ref<void ()>) /pytorch_fuzz/torch/csrc/jit/frontend/schema_type_parser.cpp:424:7

#16 0xc0ef24 in torch::jit::SchemaTypeParser::parseRefinedTensor()::$_2::operator()() const /pytorch_fuzz/torch/csrc/jit/frontend/schema_type_parser.cpp:236:9

#17 0xc0ef24 in void c10::function_ref<void ()>::callback_fn<torch::jit::SchemaTypeParser::parseRefinedTensor()::$_2>(long) /pytorch_fuzz/c10/util/FunctionRef.h:43:12

#18 0xbfbb27 in torch::jit::SchemaTypeParser::parseList(int, int, int, c10::function_ref<void ()>) /pytorch_fuzz/torch/csrc/jit/frontend/schema_type_parser.cpp:424:7

#19 0xbff590 in torch::jit::SchemaTypeParser::parseRefinedTensor() /pytorch_fuzz/torch/csrc/jit/frontend/schema_type_parser.cpp:209:3

#20 0xc02992 in torch::jit::SchemaTypeParser::parseType() /pytorch_fuzz/torch/csrc/jit/frontend/schema_type_parser.cpp:362:13

#21 0x9445642 in torch::jit::IRParser::parseVarWithType(bool) /pytorch_fuzz/torch/csrc/jit/ir/irparser.cpp:111:35

#22 0x944ff4c in torch::jit::IRParser::parseOperatorOutputs(std::vector<torch::jit::VarWithType, std::allocator<torch::jit::VarWithType> >*)::$_0::operator()() const /pytorch_fuzz/torch/csrc/jit/ir/irparser.cpp:138:21

#23 0x944ff4c in void std::__invoke_impl<void, torch::jit::IRParser::parseOperatorOutputs(std::vector<torch::jit::VarWithType, std::allocator<torch::jit::VarWithType> >*)::$_0&>(std::__invoke_other, torch::jit::IRParser::parseOperatorOutputs(std::vector<torch::jit::VarWithType, std::allocator<torch::jit::VarWithType> >*)::$_0&) /usr/bin/../lib/gcc/x86_64-linux-gnu/10/../../../../include/c++/10/bits/invoke.h:60:14

#24 0x94463a7 in torch::jit::IRParser::parseList(int, int, int, std::function<void ()> const&) /pytorch_fuzz/torch/csrc/jit/ir/irparser.cpp:498:7

#25 0x94460a5 in torch::jit::IRParser::parseOperatorOutputs(std::vector<torch::jit::VarWithType, std::allocator<torch::jit::VarWithType> >*) /pytorch_fuzz/torch/csrc/jit/ir/irparser.cpp:137:3

#26 0x944c1ce in torch::jit::IRParser::parseOperator(torch::jit::Block*) /pytorch_fuzz/torch/csrc/jit/ir/irparser.cpp:384:3

#27 0x944bf56 in torch::jit::IRParser::parseOperatorsList(torch::jit::Block*) /pytorch_fuzz/torch/csrc/jit/ir/irparser.cpp:362:5

#28 0x9444f5f in torch::jit::IRParser::parse() /pytorch_fuzz/torch/csrc/jit/ir/irparser.cpp:482:3

#29 0x94448df in torch::jit::parseIR(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, torch::jit::Graph*, std::unordered_map<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, torch::jit::Value*, std::hash<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > >, std::equal_to<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > >, std::allocator<std::pair<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const, torch::jit::Value*> > >&) /pytorch_fuzz/torch/csrc/jit/ir/irparser.cpp:94:5

#30 0x944526e in torch::jit::parseIR(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, torch::jit::Graph*) /pytorch_fuzz/torch/csrc/jit/ir/irparser.cpp:99:3

#31 0x5e3ebd in LLVMFuzzerTestOneInput /irparser_fuzz.cc:43:5

#32 0x510d61 in fuzzer::Fuzzer::ExecuteCallback(unsigned char const*, unsigned long) /llvm-project/compiler-rt/lib/fuzzer/FuzzerLoop.cpp:611:15

#33 0x4fac7c in fuzzer::RunOneTest(fuzzer::Fuzzer*, char const*, unsigned long) /llvm-project/compiler-rt/lib/fuzzer/FuzzerDriver.cpp:324:6

#34 0x5009cb in fuzzer::FuzzerDriver(int*, char***, int (*)(unsigned char const*, unsigned long)) /llvm-project/compiler-rt/lib/fuzzer/FuzzerDriver.cpp:860:9

#35 0x529f62 in main /llvm-project/compiler-rt/lib/fuzzer/FuzzerMain.cpp:20:10

#36 0x7ffff7a38082 in __libc_start_main (/lib/x86_64-linux-gnu/libc.so.6+0x24082)

#37 0x4f559d in _start (/irparser_fuzz+0x4f559d)

```

Following these steps with the remaining crashes will give you almost the same results.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94295

Approved by: https://github.com/davidberard98

Summary:

This PR tries to decompose the operators in the torch.ops.quantized_decomposed namespace into more

primitive aten operators. This would free us from maintaining the semantics of the quantize/dequantize

operators, which can be expressed more precisely in terms of the underlying aten operators.

Note: this PR just adds them to the decomposition table; we haven't enabled this by default yet.
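
As a scalar illustration of what "expressed in terms of primitive operators" can look like, the usual affine quantization formulas reduce to divide, round, add, and clamp. This is a sketch under that assumption, with illustrative function names; it is not the decomposition registered by this PR.

```python
def quantize_per_tensor(x, scale, zero_point, quant_min, quant_max):
    """Affine quantization built from primitive steps: divide, round, add, clamp."""
    q = round(x / scale) + zero_point
    return max(quant_min, min(quant_max, q))


def dequantize_per_tensor(q, scale, zero_point):
    """Inverse mapping built from subtract and multiply."""
    return (q - zero_point) * scale


q = quantize_per_tensor(0.5, scale=0.1, zero_point=10, quant_min=0, quant_max=255)
x_back = dequantize_per_tensor(q, scale=0.1, zero_point=10)
```

Values outside the representable range are clamped to `quant_min` or `quant_max`.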

Test Plan:

python test/test_quantization.py TestQuantizePT2E.test_q_dq_decomposition

Pull Request resolved: https://github.com/pytorch/pytorch/pull/93312

Approved by: https://github.com/vkuzo, https://github.com/SherlockNoMad

This is the second time I have spotted this error on the new Windows non-ephemeral runners, so let's get it fixed.

The error https://github.com/pytorch/pytorch/actions/runs/4130018165/jobs/7136942722 occurred while 7z-ing the usage log artifact on the runners:

```

WARNING: The process cannot access the file because it is being used by another process.

usage_log.txt

```

The locking process is probably the monitoring script. This looks very similar to the issue on MacOS pet runners in which the monitoring script is sometimes not killed.

I could try to kill the process to unlock the file. But then not being able to upload the usage log here is arguably ok too. So I think it would be easier to just ignore the locked file and move on.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94483

Approved by: https://github.com/clee2000

Calculate nonzero count directly in the nonzero op.

Additionally, synchronize before entering the nonzero op to make sure all previous operations have finished (the output shape is allocated based on the count_nonzero count).

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94442

Approved by: https://github.com/kulinseth

Refcounting is hard. (Citation needed.) https://github.com/pytorch/pytorch/pull/81242 introduced a corner case where we would over incref when breaking out due to max (128) depth. https://github.com/pytorch/pytorch/pull/85847 ostensibly fixed a segfault, but in actuality was over incref-ing because PyEval_GetFrame returns a borrowed reference while `PyFrame_GetBack` returns a strong reference.

Instead of squinting really hard at the loops, it's much better to use the RAII wrapper and do the right thing by default.

I noticed the over incref issue because of a memory leak where Tensors captured by the closure of a function would be kept alive by zombie frames.

Differential Revision: [D42184394](https://our.internmc.facebook.com/intern/diff/D42184394/)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/91646

Approved by: https://github.com/albanD

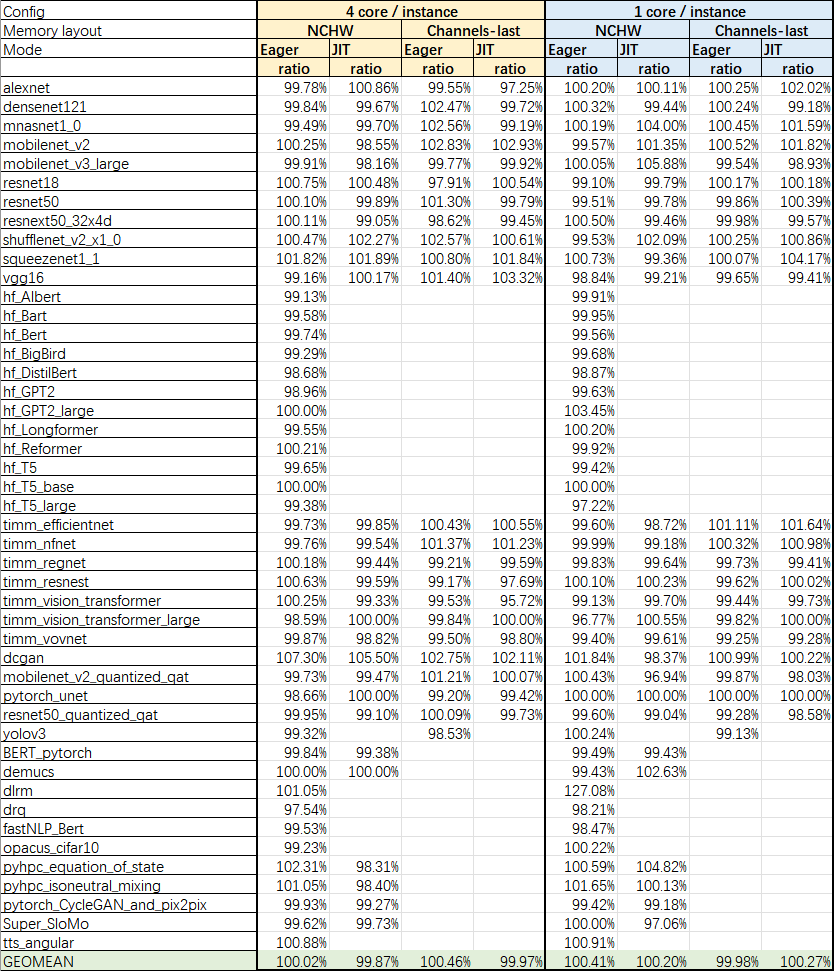

Summary: It looks like setting torch.backends.cudnn.deterministic to

True is not enough for eliminating non-determinism when testing

benchmarks with --accuracy, so let's turn off cudnn completely.

With this change, mobilenet_v3_large does not show random failure on my

local environment. Also take this chance to clean up CI skip lists.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94363

Approved by: https://github.com/ezyang

Summary:

Previously prepare_fx returned an ObservedGraphModule and convert_fx returned a QuantizedGraphModule.

This was to preserve the attributes, since torch.fx.GraphModule did not preserve them. After https://github.com/pytorch/pytorch/pull/92062

we are preserving `model.meta`, so we can now store the attributes in model.meta to preserve them.

With this, we don't need to create a new type of GraphModule in these functions and can use GraphModule directly. This

is useful for quantization in the pytorch 2.0 flow: if other transformations use GraphModule as well, the quantization passes will be composable with them.

Test Plan:

python test/test_quantization.py TestQuantizeFx

python test/test_quantization.py TestQuantizeFxOps

python test/test_quantization.py TestQuantizeFxModels

python test/test_quantization.py TestQuantizePT2E

Imported from OSS

Differential Revision: D42979722

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94412

Approved by: https://github.com/vkuzo

Summary:

https://github.com/pytorch/pytorch/pull/94170 broke some Meta-only tests because it broke the following syntax:

```

import torch.nn.intrinsic

_ = torch.nn.intrinsic.quantized.dynamic.*

```

This broke with the name change because the `ao` folder is currently doing lazy import loading, but the original folders are not.

For now, just unbreak the folders needed for the tests to pass. We will follow-up with ensuring this doesn't break for other folders in a future PR.

Test plan:

```

python test/test_quantization.py -k AOMigrationNNIntrinsic.test_modules_no_import_nn_intrinsic_quantized_dynamic

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94458

Approved by: https://github.com/jerryzh168

Summary:

The caching allocator can be configured to round memory allocations in order to reduce fragmentation. Sometimes however, the overhead from rounding can be higher than the fragmentation it helps reduce.

We have added a new stat to CUDA caching allocator stats to help track if rounding is adding too much overhead and help tune the roundup_power2_divisions flag:

- "requested_bytes.{current,peak,allocated,freed}": memory requested by client code, compare this with allocated_bytes to check if allocation rounding adds too much overhead
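
A small sketch of how the two stats could be compared. It takes a plain dictionary so that it runs without a GPU; the `.all.` pool segment in the key names follows the convention of `torch.cuda.memory_stats()` and should be treated as an assumption here, as should the helper name.

```python
def rounding_overhead(stats, pool="all", metric="current"):
    """Fraction of allocated bytes that exists only because of allocation rounding."""
    requested = stats[f"requested_bytes.{pool}.{metric}"]
    allocated = stats[f"allocated_bytes.{pool}.{metric}"]
    if allocated == 0:
        return 0.0
    return (allocated - requested) / allocated


# Made-up numbers for illustration; real values would come from the allocator stats.
example_stats = {
    "requested_bytes.all.current": 750,
    "allocated_bytes.all.current": 1000,
}
overhead = rounding_overhead(example_stats)
```

A high ratio suggests that the rounding configuration is costing more memory than the fragmentation it is meant to avoid.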

Test Plan: Added test case in caffe2/test/test_cuda.py

Differential Revision: D40810674

Pull Request resolved: https://github.com/pytorch/pytorch/pull/88575

Approved by: https://github.com/zdevito

Summary:

There are a few races/permission errors in file creation; this PR fixes them.

OSS:

1. caffe2/torch/_dynamo/utils.py, get_debug_dir: multiple processes may conflict on it even though it uses microseconds (us) in the name. Add the pid to it.

2. caffe2/torch/_dynamo/config.py: assuming that we have permission to the cwd may not be correct.
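
The first fix can be sketched like this. The function name, the directory-name layout, and the timestamp format are assumptions for illustration, not the exact code in `get_debug_dir`.

```python
import os
from datetime import datetime


def make_debug_dir_name(root, pid=None, now=None):
    """Build a per-process debug directory name from a timestamp plus the pid."""
    pid = os.getpid() if pid is None else pid
    now = datetime.now() if now is None else now
    stamp = now.strftime("%Y_%m_%d_%H_%M_%S_%f")  # %f is microseconds
    return os.path.join(root, f"run_{stamp}-pid_{pid}")


same_instant = datetime(2023, 2, 1, 12, 0, 0, 123456)
name_a = make_debug_dir_name("debug", pid=100, now=same_instant)
name_b = make_debug_dir_name("debug", pid=101, now=same_instant)
```

Two processes that start within the same microsecond still get distinct directories because their pids differ.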

Test Plan: sandcastle

Differential Revision: D42905908

Pull Request resolved: https://github.com/pytorch/pytorch/pull/93407

Approved by: https://github.com/soumith, https://github.com/mlazos

Summary: Need to re-register the underscored function in order to have the op present in predictor. This is because older models have been exported with the underscored version.

Test Plan: See if predictor tests pass?

Reviewed By: cpuhrsch

Differential Revision: D43138338

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94452

Approved by: https://github.com/cpuhrsch

Prefer dashes over underscores in command-line options. This PR adds `--command-arg-name` to the argument parsers. The old arguments with underscores (`--command_arg_name`) are kept for backward compatibility.

Both dashes and underscores are used in the PyTorch codebase. Some argument parsers only have dashes or only have underscores in arguments. For example, the `torchrun` utility for distributed training only accepts underscore arguments (e.g., `--master_port`). Dashes are more common in other command-line tools, and they appear to be the default choice in the Python standard library:
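
With `argparse`, both spellings can be registered on the same option. The parser below is a standalone sketch with an example option name, not one of the parsers changed in this PR.

```python
import argparse

parser = argparse.ArgumentParser()
# The dashed name is listed first; the underscore name is kept as an alias.
parser.add_argument("--master-port", "--master_port", dest="master_port", type=int)

new_style = parser.parse_args(["--master-port", "1234"])
old_style = parser.parse_args(["--master_port", "1234"])
```

Both invocations populate the same `master_port` attribute, so existing scripts keep working.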

`argparse.BooleanOptionalAction`: 4a9dff0e5a/Lib/argparse.py (L893-L895)

```python
class BooleanOptionalAction(Action):
    def __init__(...):
        # Excerpt from argparse; the enclosing loop over option strings is elided.
        if option_string.startswith('--'):
            option_string = '--no-' + option_string[2:]
            _option_strings.append(option_string)
```

It adds `--no-argname`, not `--no_argname`. Also, typing `_` requires pressing the shift or caps-lock key, unlike `-`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94505

Approved by: https://github.com/ezyang, https://github.com/seemethere

`dsa_add_new_assertion_failure` is currently causing duplicate definition issues. Possible solutions:

1. Put the device code in a .cu file - requires device linking, which would be very painful to set up.

2. Inline the code - could cause bloat, especially since a function might include many DSAs.

3. Anonymous namespace - balances the above two. Putting the code in a .cu file would ensure that there's a single copy of the function, but it's hard to set up. Inlining the code would cause bloat. An anonymous namespace is easy to set up and produces a single copy of the function per translation unit, which allows the function to be called many times without bloat.

Differential Revision: D42998295

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94064

Approved by: https://github.com/ezyang

- fix num_output_dims calculation

- fix median_out_mps key

- cast tensor sent to sortWithTensor and argSortWithTensor

- note down same issue for unique

- unblock median from blocklist

- adding test_median_int16 test

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94489

Approved by: https://github.com/razarmehr

Changes:

1. `typing_extensions -> typing-extensions` in dependency. Use a dash rather than an underscore to fit the [PEP 503: Normalized Names](https://peps.python.org/pep-0503/#normalized-names) convention.

```python
import re

def normalize(name):
    return re.sub(r"[-_.]+", "-", name).lower()
```

2. Import `Literal`, `Protocol`, and `Final` from the standard library, where they are available as of Python 3.8.

3. Replace `Union[Literal[XXX], Literal[YYY]]` with `Literal[XXX, YYY]`.
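As an illustration of items 2 and 3, the sketch below imports the three names from `typing` and contrasts the two `Literal` spellings. The alias and class names (`Mode`, `ModeVerbose`, `DEFAULT_MODE`, `SupportsClose`) are made up for this example.

```python
from typing import Final, Literal, Protocol, Union, get_args

# Verbose spelling: a Union of single-value Literals.
ModeVerbose = Union[Literal["r"], Literal["w"]]

# Preferred spelling: one Literal listing all allowed values.
Mode = Literal["r", "w"]

# Final marks a name that should not be rebound.
DEFAULT_MODE: Final[Mode] = "r"


class SupportsClose(Protocol):
    """Structural type: anything with a close() method matches."""

    def close(self) -> None: ...
```

Both aliases describe the same set of allowed values; the single `Literal` form is simply shorter to read and write.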

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94490

Approved by: https://github.com/ezyang, https://github.com/albanD

Part of fixing #88098

## Context

This is the first of three PRs to address issue 88098 (move the label check failure logic from the `check_labels.py` workflow to the `trymerge.py` mergebot). Due to the messy cross-script imports and potential circular dependencies, the scripts require some refactoring before the functional PR can be cleanly implemented.

## What Changed

1. Extracts the label utility functions from the `export_pytorch_labels.py` script into a `label_utils.py` module.

2. Small improvements to naming, interface and test coverage

## Note to Reviewers

This series of PRs is to replace the original PR https://github.com/pytorch/pytorch/pull/92682 to make the changes more modular and easier to review.

* 1st PR: this one

* 2nd PR: https://github.com/Goldspear/pytorch/pull/2

* 3rd PR: https://github.com/Goldspear/pytorch/pull/3

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94179

Approved by: https://github.com/ZainRizvi

The `inductor/test_torchinductor` suite is not running as part of CI. I have triaged this down to a bug in the arguments supplied to `test/run_test.py`.

Currently, test_inductor runs the test suites as:

`PYTORCH_TEST_WITH_INDUCTOR=0 python test/run_test.py --include inductor/test_torchinductor --include inductor/test_torchinductor_opinfo --verbose`

This only runs the `test_torchinductor_opinfo` suite.

Example from CI logs: https://github.com/pytorch/pytorch/actions/runs/3926246136/jobs/6711985831#step:10:45089

```
+ PYTORCH_TEST_WITH_INDUCTOR=0
+ python test/run_test.py --include inductor/test_torchinductor --include inductor/test_torchinductor_opinfo --verbose
Ignoring disabled issues: []
/var/lib/jenkins/workspace/test/run_test.py:1193: DeprecationWarning: distutils Version classes are deprecated. Use packaging.version instead.
if torch.version.cuda is not None and LooseVersion(torch.version.cuda) >= "11.6":
Selected tests:
inductor/test_torchinductor_opinfo
Prioritized test from test file changes.
reordering tests for PR:
prioritized: []
the rest: ['inductor/test_torchinductor_opinfo']
```
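The log is consistent with how `argparse` treats a repeated option under its default `store` action: each occurrence overwrites the previous one. A minimal sketch, assuming `--include` is declared with `nargs="+"` (an assumption about `run_test.py`, not copied from it):

```python
import argparse

parser = argparse.ArgumentParser()
# Assumed declaration; the real one in run_test.py may differ.
parser.add_argument("--include", nargs="+", default=[])

# Repeating the flag: the second --include replaces the first.
repeated = parser.parse_args(
    [
        "--include", "inductor/test_torchinductor",
        "--include", "inductor/test_torchinductor_opinfo",
    ]
)

# Passing both suites to a single --include keeps both.
combined = parser.parse_args(
    ["--include", "inductor/test_torchinductor", "inductor/test_torchinductor_opinfo"]
)

print(repeated.include)
print(combined.include)
```

Under that assumption, listing both suites after a single `--include` selects both of them.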

Pull Request resolved: https://github.com/pytorch/pytorch/pull/92833

Approved by: https://github.com/seemethere

When a TorchScript Value holds an optional tensor, `dtype()` and `scalarType()` are not available and raise (by design).

The symbolic `_op_with_optional_float_cast` must check whether the tensor is optional before calling the scalar type resolution API. This PR fixes that.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94427

Approved by: https://github.com/abock, https://github.com/shubhambhokare1

Per @ezyang's advice, added a magic sym_int method. This works for the `1.0 * s0` optimization, but it can't evaluate `a>0` for some args and still misses some optimizations that the model rewrite achieves, so swin still fails.

(The rewrite replaces `B = int(windows.shape[0] / (H * W / window_size / window_size))` with `B = (windows.shape[0] // int(H * W / window_size / window_size))`, and the model passes.)
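For plain Python integers the two forms give the same result whenever `H * W` is divisible by `window_size ** 2` and the window count is a multiple of the per-image window count. A small sketch with made-up sizes (the function names are illustrative, not taken from the model):

```python
def batch_original(num_windows, H, W, window_size):
    # Float division throughout, truncating only the final result.
    return int(num_windows / (H * W / window_size / window_size))


def batch_rewritten(num_windows, H, W, window_size):
    # Truncate the per-image window count first, then use integer floor division.
    return num_windows // int(H * W / window_size / window_size)


# 56x56 feature map, 7x7 windows: 64 windows per image, so 128 windows is 2 images.
original = batch_original(128, 56, 56, 7)
rewritten = batch_rewritten(128, 56, 56, 7)
```

The rewritten form keeps the final step in integer arithmetic, which is the part that matters when `windows.shape[0]` is symbolic.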

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94365

Approved by: https://github.com/ezyang

Currently there is a potential conflict for `GLIBCXX_USE_CXX11_ABI` configuration if users don't explicitly set this variable.

In `caffe2/CMakeLists.txt`, if the variable is not set, an `abi checker` is used to retrieve the ABI configuration from the compiler.

https://github.com/pytorch/pytorch/blob/master/caffe2/CMakeLists.txt#L1165-L1183

However, in `torch/csrc/Module.cpp`, if the variable is not set, it will be set to `0`. The conflict happens when the default ABI of the compiler is `1`.

https://github.com/pytorch/pytorch/blob/master/torch/csrc/Module.cpp#L1612

This PR eliminates this uncertainty and potential conflict.

The ABI is now checked and set in the top-level `CMakeLists.txt`, which passes the value to `caffe2/CMakeLists.txt`. In case `caffe2/CMakeLists.txt` is invoked directly from a `cmake` command, the original ABI check logic is kept in that file.

If users don't explicitly assign a value to `GLIBCXX_USE_CXX11_ABI`, the `abi checker` is executed and the value is set accordingly. If the `abi checker` fails to compile or execute, the value is set to `0`. If users explicitly assign a value, the provided value is used.

Moreover, before this change, if `GLIBCXX_USE_CXX11_ABI` is set to `0`, the `-D_GLIBCXX_USE_CXX11_ABI=0` flag isn't appended to `CMAKE_CXX_FLAGS`. Thus, whether ABI=0 or ABI=1 is used depends entirely on the compiler's default configuration. This can cause an issue where, even if users explicitly set `GLIBCXX_USE_CXX11_ABI` to `0`, the compiler still builds the binaries with ABI=1.

https://github.com/pytorch/pytorch/blob/master/CMakeLists.txt#L44-L51
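The selection rules described above (explicit value first, then the checker, then `0` on failure) can be modeled in a few lines. This is a Python sketch of the decision logic only; `resolve_cxx11_abi` and `run_abi_checker` are hypothetical names, and the real logic lives in CMake.

```python
from typing import Callable, Optional


def resolve_cxx11_abi(
    user_value: Optional[int], run_abi_checker: Callable[[], int]
) -> int:
    """Hypothetical model of the selection rules, not the actual CMake code."""
    if user_value is not None:
        # An explicitly assigned value is always used as-is.
        return user_value
    try:
        # Otherwise ask the checker for the compiler's default ABI.
        return run_abi_checker()
    except Exception:
        # The checker failed to compile or execute: fall back to 0.
        return 0
```

For example, `resolve_cxx11_abi(None, lambda: 1)` follows the compiler default, while `resolve_cxx11_abi(0, lambda: 1)` honors the explicit `0`.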

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94306

Approved by: https://github.com/malfet

# Summary

Add more checks around shape constraints, and update sdp_utils to properly catch differing head_dims between qk and v for flash_attention, which is not supported.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94274

Approved by: https://github.com/cpuhrsch

- Fix wrong results in AvgPool2D when `count_include_pad=True`

- Fix issues with adaptive average and max pool2d

- Remove the redundant blocking copies from `AdaptiveMaxPool2d`

- Add `divisor` to cached string key to avoid conflicts

- Add test case when both `ceil_mode` and `count_include_pad` are True (previously failed).

- Clean up redundant code

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94348

Approved by: https://github.com/kulinseth

Historically, we computed `size_hint` on the fly by substituting the `var_to_val` mapping into the sympy expression. With this change, we also maintain the hint directly on SymNode (in `expr._hint`) and use it in lieu of Sympy substitution when it is available (mostly for guards on SymInt, etc.; in idiomatic Inductor code, we typically manipulate Sympy expressions directly and so do not have a convenient way to maintain hints).

While this may give us modest performance improvements, that is not the point of this PR; the goal is to make it easier to carefully handle unbacked SymInts, where hints are expected not to be available. You can now easily test whether a SymInt is backed by checking `symint.node.hint`: it is `None` when no hint is available.
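The idea can be pictured with a toy model. `ToySymNode`, `size_hint`, and `is_backed` below are illustrative stand-ins written for this note, not the real SymNode API: a node carries an optional maintained hint, and evaluation by substitution is only the fallback.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class ToySymNode:
    """Toy stand-in for a symbolic node; not the real SymNode class."""

    expr: Callable[[Dict[str, int]], int]  # evaluates the expression from symbol values
    hint: Optional[int] = None  # maintained concrete value, if any


def size_hint(node: ToySymNode, var_to_val: Dict[str, int]) -> int:
    if node.hint is not None:
        return node.hint  # use the maintained hint directly
    return node.expr(var_to_val)  # fall back to substituting symbol values


def is_backed(node: ToySymNode) -> bool:
    # Mirrors the `hint is None` check described above.
    return node.hint is not None


with_hint = ToySymNode(expr=lambda env: env["s0"] * 2, hint=8)
without_hint = ToySymNode(expr=lambda env: env["s0"] * 2)
```

In this toy, `with_hint` never touches the symbol table, while `without_hint` is the case that needs explicit handling.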

Signed-off-by: Edward Z. Yang <ezyang@meta.com>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94201

Approved by: https://github.com/voznesenskym

Supports the following with dynamic shapes:

```python
for element in tensor:
    ...  # do stuff with element
```

Approach follows what's done when `call_range()` is invoked with dynamic shape inputs: guard on tensor size and continue tracing with a real size value from `dyn_dim0_size.evaluate_expr()`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94326

Approved by: https://github.com/ezyang

Summary: It looks like setting torch.backends.cudnn.deterministic to True is not enough for eliminating non-determinism when testing benchmarks with --accuracy, so let's turn off cudnn completely.

With this change, mobilenet_v3_large does not show random failure on my local environment. Also take this chance to clean up CI skip lists.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94363

Approved by: https://github.com/ezyang

PyTorch and ONNX have always had different strategies for None in outputs. In internal tests, we flattened the torch outputs to see whether the rest of them matched. However, this no longer works in scripting after the Optional node was introduced, since some of the Nones are kept.

#83184 forces the script module to keep all Nones from PyTorch, but in ONNX, the model only keeps the ones generated with an Optional node and deletes the meaningless Nones.

This PR uses the Optional node to keep those meaningless Nones in the output as well, so that for script module result comparison, PyTorch and ONNX have the same number of Nones.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/84789

Approved by: https://github.com/BowenBao

Fix #82589

Why:

1. **full_check** works in the `onnx::checker::check_model` function because it turns on **strict_mode** in `onnx::shape_inference::InferShapes()`, which I think was the intention of this part of the code.

2. **strict_mode** catches failed shape/type inference (an invalid ONNX model from ONNX's perspective). ONNXRUNTIME can't run these invalid models, as it relies on ONNX shape/type inference to optimize the ONNX graph. Why don't we set it to True by default? Some existing users run ONNX models on other platforms, such as caffe2, which don't need a valid ONNX model to run.

3. This PR doesn't change the original behavior of `check_onnx_proto`, but adds a warning message for models that can't pass strict shape/type inference, saying that the models would fail on onnxruntime.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/83186

Approved by: https://github.com/justinchuby, https://github.com/thiagocrepaldi, https://github.com/jcwchen, https://github.com/BowenBao

Add `collect_ciflow_labels.py`, which automatically extracts all labels from workflow files and adds them to pytorch-probot.yml

Same script can also be used to validate that all tags are referenced in the config

Add this validation to quickchecks
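A rough sketch of the extract-and-validate idea, assuming workflow files reference tags of the form `ciflow/<name>/*`. The helper names and the regular expression are illustrative guesses, not the contents of `collect_ciflow_labels.py`.

```python
import re


def collect_ciflow_tags(workflow_text):
    """Hypothetical helper: find `ciflow/<name>` prefixes in a workflow file's text."""
    return sorted(set(re.findall(r"ciflow/[A-Za-z0-9_-]+", workflow_text)))


def missing_from_config(workflow_tags, config_labels):
    """Tags referenced by workflows but absent from the configured label list."""
    return sorted(set(workflow_tags) - set(config_labels))


example_workflow = (
    "on:\n"
    "  push:\n"
    "    tags:\n"
    "      - ciflow/trunk/*\n"
    "      - ciflow/periodic/*\n"
)
found = collect_ciflow_tags(example_workflow)
```

A validation step could then fail whenever `missing_from_config(found, configured)` is non-empty.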

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94368

Approved by: https://github.com/jeanschmidt

Summary:

This PR tries to decompose the operators in the torch.ops.quantized_decomposed namespace into more primitive aten operators. This would free us from maintaining the semantics of the quantize/dequantize operators, which can be expressed more precisely in terms of the underlying aten operators.

Note: this PR just adds them to the decomposition table; we haven't enabled this by default yet.
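To illustrate what "expressed in terms of more primitive operators" means, here is a scalar Python stand-in for affine per-tensor quantization built only from divide, round, add, and clamp. It is a sketch of the arithmetic, not the decomposition registered in this PR, and the function names are illustrative.

```python
def quantize(x, scale, zero_point, quant_min, quant_max):
    # Scale, round to nearest (ties to even, as Python's round does),
    # shift by the zero point, then clamp into the quantized range.
    q = round(x / scale) + zero_point
    return max(quant_min, min(quant_max, q))


def dequantize(q, scale, zero_point):
    # Undo the shift, then rescale back to floating point.
    return (q - zero_point) * scale


q = quantize(1.0, 0.5, 10, 0, 255)
x_roundtrip = dequantize(q, 0.5, 10)
```

Each step corresponds to an elementwise primitive, which is why the quantize/dequantize pair does not need its own separately maintained semantics.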

Test Plan:

python test/test_quantization.py TestQuantizePT2E.test_q_dq_decomposition