Mirror of https://github.com/pytorch/pytorch.git (synced 2025-10-22 22:25:10 +08:00)

Compare commits

1736 Commits

| SHA1 | Author | Date | |
|---|---|---|---|

| b13b7010b9 | |||

| 5c79046d39 | |||

| 30fd222b80 | |||

| 761eef1f19 | |||

| 8aa1cefed8 | |||

| 0d908d813b | |||

| 1c391f6f93 | |||

| be146fd721 | |||

| 2979f4b989 | |||

| 22b3600f19 | |||

| 215813d7ac | |||

| dc7695a47a | |||

| 032a65edff | |||

| e4b4e515cd | |||

| 4b1f5f4bd6 | |||

| afd576ec0e | |||

| 95aa2af377 | |||

| 6774d39c96 | |||

| 567faedc59 | |||

| 3eab8a71e2 | |||

| 2fd4d088ff | |||

| 5d274cd499 | |||

| 8051dec608 | |||

| f2c1071c33 | |||

| bb71117ecc | |||

| d25433a099 | |||

| 7dd45490f8 | |||

| bf632544e6 | |||

| 282402d4f3 | |||

| cce03074f5 | |||

| f2f63773d8 | |||

| 84aa41824c | |||

| 25c8a117af | |||

| ae122707b5 | |||

| b4fe5ad641 | |||

| 5a761dbe65 | |||

| dd893391d5 | |||

| e8196f990d | |||

| 269b77a1b2 | |||

| 476d85dd3f | |||

| 63f6c0d692 | |||

| b546fa3fcd | |||

| 1d656b6769 | |||

| 3acbbb30f2 | |||

| 52911f9e47 | |||

| a65e0f488c | |||

| 8dc5d2a22e | |||

| bb353ccc17 | |||

| ced0054a9e | |||

| 68ee5ede29 | |||

| 4df98e2927 | |||

| 6ccac5ce28 | |||

| 3865606299 | |||

| d3334db627 | |||

| 50f5a4dd18 | |||

| b60936b9ae | |||

| 2d750b9da5 | |||

| ca376d4584 | |||

| ef183a1d23 | |||

| f4d8944973 | |||

| 6b7aef63ac | |||

| b3ab4b1094 | |||

| 1e8cb82a2d | |||

| dd399a8d68 | |||

| faac0f5c25 | |||

| c36f47bd1e | |||

| 3d1888cd95 | |||

| 97a82a3018 | |||

| 5cd313ed23 | |||

| b414494035 | |||

| c10efc646e | |||

| 348531ad8d | |||

| 714b2b8bf6 | |||

| fe4bd5066b | |||

| e17d84d38e | |||

| b9aef6bc03 | |||

| 0056b08834 | |||

| bd0df61bb5 | |||

| d9678c2e34 | |||

| b3c0aa3b7d | |||

| 77fbc12f23 | |||

| 7e46eb1613 | |||

| 821656d2d8 | |||

| 86e40ed875 | |||

| b9379cfab7 | |||

| f0b75c4aa4 | |||

| 7654b3f49e | |||

| 37ebbc2809 | |||

| 29ddbc3e37 | |||

| 16a133ed9a | |||

| c4d1318662 | |||

| 379ae6d865 | |||

| 24376ff9d3 | |||

| be6322e4b5 | |||

| 62063b2f62 | |||

| 13b1580613 | |||

| e50a1f19b3 | |||

| e86db387ba | |||

| 704ee3ca68 | |||

| 9004652c7b | |||

| aca6ce984c | |||

| ed8773f7bd | |||

| 48f48b6ff2 | |||

| 615b27eadf | |||

| 170d790b66 | |||

| e216f557fd | |||

| 997312c233 | |||

| d602b3a834 | |||

| f531d98341 | |||

| 6bdd5ecaf5 | |||

| bfbde9d6eb | |||

| b9c816a796 | |||

| 2f5c215d34 | |||

| 01650ac9de | |||

| ce536aa355 | |||

| fc0af33a18 | |||

| c7c4778af6 | |||

| 73a65cd29f | |||

| b785ed0ac0 | |||

| b2d077d81d | |||

| b1c2714ad5 | |||

| a462edd0f6 | |||

| c2425fc9a1 | |||

| fbcedf2da2 | |||

| 3d95e13b33 | |||

| 228e1a8696 | |||

| 3fa8a3ff46 | |||

| 4647f753bc | |||

| 7ba5e7cea1 | |||

| 9b626a8047 | |||

| bd0e9a73c7 | |||

| 2b1cd919ce | |||

| 8e46a15605 | |||

| 15a9fbdedb | |||

| 6336300880 | |||

| 5073132837 | |||

| 65b66264d4 | |||

| 0f872ed02f | |||

| 761d6799be | |||

| 0d179aa8db | |||

| 5b171ad7c2 | |||

| ac9245aeb3 | |||

| 60736bdf99 | |||

| 7d58765cee | |||

| 76f7d749e4 | |||

| 0b7374eb44 | |||

| 6fff764155 | |||

| 8ced72ccb8 | |||

| b1ae7f90d5 | |||

| 8b61ee522e | |||

| 76ca3eb191 | |||

| fea50a51ee | |||

| 51e589ed73 | |||

| 2e87643761 | |||

| ba9a85f271 | |||

| 0714d7a3ca | |||

| 34ce58c909 | |||

| c238ee3681 | |||

| f5338a1fb8 | |||

| d96ad41191 | |||

| f17cfe4293 | |||

| aec182ae72 | |||

| c93c884ee2 | |||

| c42a2d4d24 | |||

| f89252c336 | |||

| 490c15fae9 | |||

| f2d72ba10f | |||

| 2108b42b92 | |||

| bae8df62d3 | |||

| 98775b6bb4 | |||

| b7cc2a501f | |||

| 0720ba53b3 | |||

| ff5fa11129 | |||

| 5e7f5db332 | |||

| b5f7592140 | |||

| f366e5fc81 | |||

| 48f087f6ce | |||

| 7ad948ffa9 | |||

| 3277d83648 | |||

| 1487278fdf | |||

| 977630bc15 | |||

| 12efd53dba | |||

| 37e05485d9 | |||

| c76770f40e | |||

| da725830c2 | |||

| fc6fcf23f7 | |||

| b190f1b5bc | |||

| dfca8dfdc5 | |||

| b46d5e0b04 | |||

| f19a11a306 | |||

| cfcf69703f | |||

| e22b8e0d17 | |||

| fbfba6bdca | |||

| 3cc89afde6 | |||

| 1e4aee057c | |||

| 8dfcf7e35a | |||

| 76de151ddd | |||

| 2676cc46c2 | |||

| 1bf7bc9768 | |||

| 3c41c9fe46 | |||

| 6ff7750364 | |||

| 4d25c3d048 | |||

| 267b7ade50 | |||

| 80429ad9f7 | |||

| 5ca6516ecb | |||

| 67f94557ff | |||

| 61bd5a0643 | |||

| 748d011c8b | |||

| 5d5cfe2e57 | |||

| 7cbe255296 | |||

| 4ef303698c | |||

| 83e8b3f6c3 | |||

| 502ebed796 | |||

| 68ff58d771 | |||

| 969c1602e6 | |||

| 5e1d6a3691 | |||

| 533cfc0381 | |||

| 2b23712dc3 | |||

| 88275da5e8 | |||

| bd7a5ad6f0 | |||

| 1f6f82dbcf | |||

| 1f8939937a | |||

| b3d41a5f96 | |||

| fec2d493a9 | |||

| 86ee75f63f | |||

| 31941918cf | |||

| 19a65d2bea | |||

| 819d4b2b83 | |||

| b87c113cf4 | |||

| b25182971f | |||

| 1ee2c47e37 | |||

| 2dc563f1f1 | |||

| 15ba71a275 | |||

| e5b3fc49d6 | |||

| ae1766951d | |||

| 02d08dafd9 | |||

| 13a5090695 | |||

| 8e32e4c04c | |||

| cf991310c3 | |||

| 938706099e | |||

| 3330287dc7 | |||

| 38c8520adf | |||

| 492e1746af | |||

| 91a8109cfd | |||

| 161490d34a | |||

| 9c302852eb | |||

| 8654fcfd60 | |||

| b3d527d9a0 | |||

| 4d495218c9 | |||

| 13a041284c | |||

| c60c1a003d | |||

| 97add1a5ea | |||

| ca02930e47 | |||

| 20d5e95077 | |||

| eb4a7dc11d | |||

| f722498b72 | |||

| aadfb6fe83 | |||

| 6c273594c9 | |||

| e475c82fa1 | |||

| 0c2e6665df | |||

| 6295e6e94b | |||

| 670a4aa708 | |||

| 1bdc2e64ed | |||

| c587be1e50 | |||

| bd481596f5 | |||

| a504d56b43 | |||

| 91c4dfccea | |||

| 27f618c44d | |||

| a14482a1df | |||

| aa50c5734b | |||

| 293001a4fe | |||

| 638cfdf150 | |||

| 5f80a14525 | |||

| 1342fd3975 | |||

| 8d4af38489 | |||

| 575a064e66 | |||

| 3ab21a3c4f | |||

| 2f592e6c7d | |||

| 5661ffb766 | |||

| 9b74503daa | |||

| 24848f1cd8 | |||

| a31a07ede9 | |||

| c8c4c9b23d | |||

| e1ed9303f0 | |||

| a43aab13c2 | |||

| c698b4a45e | |||

| c6a0ffab50 | |||

| 8ba7cc30d1 | |||

| 61bf08ca24 | |||

| 6ada3c0c16 | |||

| 60061fbe79 | |||

| 46e7042add | |||

| d0c182773b | |||

| b6f60585b5 | |||

| 4b0e3ee219 | |||

| 838842d4b2 | |||

| e71cf20192 | |||

| adb4cb2b5b | |||

| 6073f9b46c | |||

| 8e8022b735 | |||

| da82d2dd70 | |||

| 82176473a5 | |||

| 2d269a9a72 | |||

| 240372a991 | |||

| 5b10411c8c | |||

| 4c474a9939 | |||

| 7ea6ae57c8 | |||

| 42633f8986 | |||

| 84248690a9 | |||

| 53409ca0fb | |||

| c2c1710047 | |||

| 876202503f | |||

| 946a7d9bc3 | |||

| 608bcd3b15 | |||

| 632b02a477 | |||

| 0db9c63300 | |||

| 873ed4e6b6 | |||

| 01bd43037d | |||

| 68c9e3f232 | |||

| a25c8555eb | |||

| dfd1dff383 | |||

| 8f391d4d51 | |||

| 2a6b7685ae | |||

| eb9573107d | |||

| ee43cd7adc | |||

| 4ca26fbc1b | |||

| c165226325 | |||

| 49295ebe54 | |||

| 455038e470 | |||

| ca7f02ea0c | |||

| 04aba1caec | |||

| f6c1bbfa48 | |||

| 4e2c8c6db5 | |||

| c26b9c0a5e | |||

| aaf41c61a6 | |||

| dd844f741b | |||

| 7117a9012e | |||

| 1bdc28161a | |||

| 5e150caf38 | |||

| c0c62d099a | |||

| b9ece39685 | |||

| 15ef008877 | |||

| b14d6318f8 | |||

| 7c44506441 | |||

| 937ba581d7 | |||

| 2ae54f1194 | |||

| a217fefee1 | |||

| 34b7fed802 | |||

| 5221745c21 | |||

| 000ca44b16 | |||

| 8f3d44033b | |||

| 7cc14c595a | |||

| 797544c47a | |||

| 0426f2f3ec | |||

| 336eeee895 | |||

| 593f867e3e | |||

| 385913be1c | |||

| 6aaa14f5fe | |||

| 07f5b21ef1 | |||

| e454870396 | |||

| 2822013437 | |||

| 72c1982734 | |||

| 0de2ea305a | |||

| d899385a3d | |||

| c6d6cbe8a6 | |||

| 85e82e85d8 | |||

| a1534cc37d | |||

| 8c8dc791ef | |||

| 63edca44f2 | |||

| 8d90ab2d9b | |||

| bd5303010d | |||

| 16d2c3d7b3 | |||

| 407a92dc26 | |||

| 0a893abc7b | |||

| 34fa5e0dc7 | |||

| 712686ce91 | |||

| 518864a7e0 | |||

| 750fb5cc73 | |||

| 0f4749907a | |||

| bd2dc63ef6 | |||

| 19a8795450 | |||

| d9dccfdd71 | |||

| 7547a06c4f | |||

| 8929b75795 | |||

| 4d37ef878c | |||

| 126e77d5c6 | |||

| 53eec78bea | |||

| a4edaec81a | |||

| 92481b59d3 | |||

| 6c77fa9121 | |||

| aeb7a72620 | |||

| 73d232ee45 | |||

| c0c65bf915 | |||

| f6cee952af | |||

| e74184f679 | |||

| 3884d36176 | |||

| e7c6886a00 | |||

| ed8e92f63d | |||

| fb97df5d65 | |||

| e9b05c71b4 | |||

| 7926324385 | |||

| 1527b37c26 | |||

| de4659659b | |||

| a96a8c8336 | |||

| 691aa19b88 | |||

| 6b07dc9e22 | |||

| 8aa259b52b | |||

| ac9312e9f8 | |||

| 91a17b702b | |||

| c54597e0b2 | |||

| a9785bba44 | |||

| 833b8cbc7a | |||

| 75aeb16e05 | |||

| fc354a0d6e | |||

| 262611fcd3 | |||

| b8a34f3033 | |||

| 10bb6bb9b8 | |||

| 3c9ef69c37 | |||

| dee987d6ee | |||

| 138f254ec1 | |||

| c7c8aaa7f0 | |||

| d0db624e02 | |||

| e3e7b76310 | |||

| dad02bceb9 | |||

| b195285879 | |||

| 8f3da5b51d | |||

| 825e919eb8 | |||

| acb0ce8885 | |||

| 72089c9c36 | |||

| cf2f158fec | |||

| 41ddc2a786 | |||

| e4886f6589 | |||

| 6470b5bd21 | |||

| 44196955e2 | |||

| f08ec1394d | |||

| f8fb25e0a2 | |||

| 6a0c66752f | |||

| a1bd4efb08 | |||

| b43ce05268 | |||

| 80e56cfda9 | |||

| 24701fc5a7 | |||

| f78a266d99 | |||

| f096fb6859 | |||

| a3e11d606b | |||

| 79232c24e2 | |||

| 15d9d499ab | |||

| 962084c8e8 | |||

| 7518b1eefb | |||

| 8215d7a4ba | |||

| 5aaa220d84 | |||

| 12c16ab9bc | |||

| 76520512e7 | |||

| 66de965882 | |||

| 10d32fb0b7 | |||

| e72c9b6e4a | |||

| ac1f68127a | |||

| 60d1852c7b | |||

| d53eb521fc | |||

| 9808932f10 | |||

| ea876eb6d5 | |||

| 0a45864866 | |||

| 2560b39796 | |||

| 21afa4c88b | |||

| 9fc3c5e4d2 | |||

| 3e3501c98d | |||

| 5e6fcd02b5 | |||

| d46ebcfadf | |||

| 41480c8cf2 | |||

| 236890d902 | |||

| 55632d81d2 | |||

| 0b276d622e | |||

| c81491b37d | |||

| 42e189425f | |||

| 3cfa0d7199 | |||

| 7c9e088661 | |||

| e78aa4bb84 | |||

| f8e94d0d8b | |||

| ebe6f40fce | |||

| 5fb37efb46 | |||

| 4f47855873 | |||

| 52ae6f682f | |||

| c35f58f97b | |||

| 659b2f3154 | |||

| 5ea05cfb96 | |||

| dc9a5b7d2f | |||

| f7ab5a128a | |||

| 368cbe615d | |||

| d4c9a3782b | |||

| 172dca5e8b | |||

| 818bf0c408 | |||

| 03dcf8a83b | |||

| 604f607fd1 | |||

| 956d946c25 | |||

| 970caaa621 | |||

| 00a5980cdf | |||

| e24eee04f0 | |||

| f1b3af4ee2 | |||

| fb2d28f477 | |||

| 3a704ff725 | |||

| 0180e638e5 | |||

| 95c6ae04fb | |||

| 27c4c6e0af | |||

| da17414b3f | |||

| be2b27a747 | |||

| aec2c8f752 | |||

| 13e34b4679 | |||

| 57373c7c29 | |||

| 79f5bf84e5 | |||

| 3ed720079e | |||

| e7c1e6a8e3 | |||

| f1d0d73ed7 | |||

| 9c411513bf | |||

| ce78bc898b | |||

| 887002e932 | |||

| 31dea5ff23 | |||

| ec4602a973 | |||

| a38749d15f | |||

| 6ee77b4edd | |||

| 343d65db91 | |||

| 6328981fcf | |||

| a90913105c | |||

| 9368596059 | |||

| 80ed795ff1 | |||

| a2938e3d11 | |||

| 2ad967dbe4 | |||

| 7415c090ac | |||

| a1fa995044 | |||

| 3c2ecc6b15 | |||

| fa1516d319 | |||

| 5e26f49db4 | |||

| 7694f65120 | |||

| b5ebf68df1 | |||

| aa46055274 | |||

| 2cad802b68 | |||

| 2d01f384f1 | |||

| f8d4f980b3 | |||

| 4f5a6c366e | |||

| ecfcf39f30 | |||

| 3975a2676e | |||

| 138ee75a3b | |||

| 0048f228cb | |||

| 2748b920ab | |||

| a92a2312d4 | |||

| 945ce5cdb0 | |||

| b39de2cbbe | |||

| 49a555e0f5 | |||

| ce13900148 | |||

| 4c77ad6ee4 | |||

| 0bc4246425 | |||

| c45ff2efe6 | |||

| 99b520cc5d | |||

| e05607aee1 | |||

| a360ba1734 | |||

| c661b963b9 | |||

| e374dc1696 | |||

| 116e0c7f38 | |||

| 45596d5289 | |||

| 342e7b873d | |||

| 00410c4496 | |||

| 8b9276bbee | |||

| 3238786ea1 | |||

| 07ebbcbcb3 | |||

| ca555abcf9 | |||

| 63893c3fa2 | |||

| f8ae34706e | |||

| f8e89fbe11 | |||

| 30d208010c | |||

| 017c7efb43 | |||

| 0c69fd559a | |||

| c991258b93 | |||

| 9f89692dcd | |||

| c28575a4eb | |||

| c9db9c2317 | |||

| 16a09304b4 | |||

| 58a88d1ac0 | |||

| b740878697 | |||

| 7179002bfb | |||

| 43b5be1d78 | |||

| 173c81c2d2 | |||

| ee4c77c59f | |||

| 30ec12fdd5 | |||

| 269ec0566f | |||

| a0a95c95d4 | |||

| 1335b7c1da | |||

| 6d14ef8083 | |||

| 26a492acf3 | |||

| f2741e8038 | |||

| 8d1a6975d2 | |||

| c414bf0aaf | |||

| 99f4864674 | |||

| 784cbeff5b | |||

| 9302f860ae | |||

| ac8a5e7f0d | |||

| 798fc16bbf | |||

| 0f65c9267d | |||

| be45231ccb | |||

| 279aea683b | |||

| 8aa8f791fc | |||

| 6464e69e21 | |||

| a93812e4e5 | |||

| 225f942044 | |||

| d951d5b1cd | |||

| 2082ccbf59 | |||

| 473e795277 | |||

| a09f653f52 | |||

| 90fe6dd528 | |||

| 57a2ccf777 | |||

| b5f6fdb814 | |||

| 205b9bc05f | |||

| 14d5d52789 | |||

| 9c218b419f | |||

| a69d819901 | |||

| 517fb2f410 | |||

| fef2b1526d | |||

| 3719994c96 | |||

| 35c2821d71 | |||

| e4812b3903 | |||

| 4cc11066b2 | |||

| 85b64d77b7 | |||

| db7948d7d5 | |||

| 3d40c0562d | |||

| 146bcc0e70 | |||

| 8d9f6c2583 | |||

| ac32d8b706 | |||

| 15c1dad340 | |||

| 6d8baf7c30 | |||

| 7ced682ff5 | |||

| 89cab4f5e6 | |||

| a0afb79898 | |||

| d6fa3b3fd5 | |||

| f91bb96071 | |||

| 3b6644d195 | |||

| 652b468ec2 | |||

| af110d37f2 | |||

| 38967568ca | |||

| df79631a72 | |||

| 95f0fa8a92 | |||

| 1c6ff53b60 | |||

| 1dbf44c00d | |||

| 1259a0648b | |||

| b0055f6229 | |||

| 90040afc44 | |||

| 59bc96bdc2 | |||

| 676ffee542 | |||

| 77136e4c13 | |||

| 604e13775f | |||

| 02380a74e3 | |||

| 4461ae8090 | |||

| 2b948c42cd | |||

| 133c1e927f | |||

| b2ae054410 | |||

| 2290798a83 | |||

| fd600b11a6 | |||

| b5c9f5c4c3 | |||

| b8a5b1ed8e | |||

| ca74bb17b8 | |||

| 69d8331195 | |||

| eab5c1975c | |||

| e67b525388 | |||

| 5171e56b82 | |||

| f467848448 | |||

| 7e4ddcfe8a | |||

| 3152be5fb3 | |||

| b076944dc5 | |||

| 3a07228509 | |||

| 24a2f2e3a0 | |||

| b32dd4a876 | |||

| 4f4bd81228 | |||

| 59b23d79c6 | |||

| 8c14630e35 | |||

| cc32de8ef9 | |||

| 44696c1375 | |||

| 82088a8110 | |||

| d5e45b2278 | |||

| bdfef2975c | |||

| b4bb4b64a1 | |||

| 3e91c5e1ad | |||

| 2b88d85505 | |||

| 50651970b8 | |||

| 4a8906dd8a | |||

| 68e2769a13 | |||

| 17c998e99a | |||

| 35758f51f2 | |||

| e8102b0a9b | |||

| 04f2bc9aa7 | |||

| d070178dd3 | |||

| c9ec7fad52 | |||

| f0a6ca4d53 | |||

| fd92470e23 | |||

| 8369664445 | |||

| 35e1adfe82 | |||

| eb91fc5e5d | |||

| d186fdb34c | |||

| 0f04f71b7e | |||

| 87f1959be7 | |||

| a538055e81 | |||

| 0e345aaf6d | |||

| c976dd339d | |||

| 71cef62436 | |||

| 3a29055044 | |||

| 59d66e6963 | |||

| 46bc43a80f | |||

| 7fa60b2e44 | |||

| c78893f912 | |||

| 0d2a4e1a9e | |||

| 088f14c697 | |||

| 4bf7be7bd5 | |||

| b2ab6891c5 | |||

| 39ab5bcba8 | |||

| 42f131c09f | |||

| 89dca6ffdc | |||

| b7f36f93d5 | |||

| 58320d5082 | |||

| a461804a65 | |||

| 817f6cc59d | |||

| 108936169c | |||

| f60ae085e6 | |||

| 85dda09f95 | |||

| 4f479a98d4 | |||

| 35ba948dde | |||

| 6b4ed52f10 | |||

| dcf5f8671c | |||

| 5340291add | |||

| 1c6fe58574 | |||

| 9f2111af73 | |||

| 2ed6c6d479 | |||

| 01ac2d3791 | |||

| eac687df5a | |||

| 6a2785aef7 | |||

| 849cbf3a47 | |||

| a0c614ece3 | |||

| 1b97f088cb | |||

| 097399cdeb | |||

| 7ee152881e | |||

| 3074f8eb81 | |||

| 748208775f | |||

| 5df17050bf | |||

| 92df0eb2bf | |||

| 995195935b | |||

| be8376eb88 | |||

| b650a45b9c | |||

| 8a20e22239 | |||

| 7c5014d803 | |||

| 62ac1b4bdd | |||

| 0633c08ec9 | |||

| cf87cc9214 | |||

| f908432eb3 | |||

| 1bd291c57c | |||

| b277df6705 | |||

| ec4d597c59 | |||

| d2ef49384e | |||

| b5dc36f278 | |||

| 41976e2b60 | |||

| 3dac1b9936 | |||

| d2bb56647f | |||

| 224422eed6 | |||

| 3c26f7a205 | |||

| 9ac9809f27 | |||

| 7bf6e984ef | |||

| 10f78985e7 | |||

| dc95f66a95 | |||

| d8f4d5f91e | |||

| 47f56f0230 | |||

| b4018c4c30 | |||

| 43fbdd3b45 | |||

| 803d032077 | |||

| 9d2d884313 | |||

| c0600e655a | |||

| 671ed89f2a | |||

| e0372643e1 | |||

| b5cf1d2fc7 | |||

| c1ca9044bd | |||

| 52c2a92013 | |||

| 541ab961d8 | |||

| 849794cd2c | |||

| f47fa2cb04 | |||

| 7a162dd97a | |||

| b123bace1b | |||

| 483490cc25 | |||

| 8d60e39fdc | |||

| e7dff91cf3 | |||

| ab5776449c | |||

| a229582238 | |||

| a0df8fde62 | |||

| e4a3aa9295 | |||

| be98c5d12d | |||

| bc6a71b1f5 | |||

| 26f1e2ca9c | |||

| 75d850cfd2 | |||

| f4870ca5c6 | |||

| 235d5400e1 | |||

| 491d5ba4fd | |||

| d42eadfeb9 | |||

| 9a40821069 | |||

| 2975f539ff | |||

| 64ca584199 | |||

| 5263469e21 | |||

| c367e0b64e | |||

| 183b3aacd2 | |||

| 101950ce92 | |||

| 239ae94389 | |||

| 55e850d825 | |||

| 62af45d99f | |||

| 1ac038ab24 | |||

| 77a925ab66 | |||

| d0d33d3ae7 | |||

| 9b7eceddc8 | |||

| 24af02154c | |||

| 86ec14e594 | |||

| 8a29338837 | |||

| 29918c6ca5 | |||

| 80a44e84dc | |||

| 5497b1babb | |||

| bef70aa377 | |||

| 0d30f77889 | |||

| e27bb3e993 | |||

| 179d5efc81 | |||

| b55e38801d | |||

| e704ec5c6f | |||

| 6cda6bb34c | |||

| 46f0248466 | |||

| 310ec57fd7 | |||

| cd82b2b869 | |||

| 126a1cc398 | |||

| bf650f05b3 | |||

| f2606a7502 | |||

| b07fe52ee0 | |||

| b07358b329 | |||

| 2aea8077f9 | |||

| 41f9c14297 | |||

| 135687f04a | |||

| b140e70b58 | |||

| ec987b57f6 | |||

| 596677232c | |||

| 9d74e139e5 | |||

| d2a93c3102 | |||

| bc475cad67 | |||

| 45d6212fd2 | |||

| f45d75ed22 | |||

| b03407289f | |||

| 55a794e6ec | |||

| 93ed476e7d | |||

| 10faa303bc | |||

| 6fa371cb0d | |||

| 18a2691b4b | |||

| f7bd3f7932 | |||

| f8dee4620a | |||

| 800e24616a | |||

| d63a435787 | |||

| a9c2809ce3 | |||

| fa61159dd0 | |||

| a215e000e9 | |||

| f16a624b35 | |||

| 61c2896cb8 | |||

| 22ebc3f205 | |||

| 8fa9f443ec | |||

| bb72ccf1a5 | |||

| 2e73456f5c | |||

| 3e49a2b4b7 | |||

| 4694e4050b | |||

| 59b9eeff49 | |||

| 1744fad8c2 | |||

| e46d942ca6 | |||

| 93a6136863 | |||

| 230bde94e7 | |||

| 20fffc8bb7 | |||

| 861a3f3a30 | |||

| ee52102943 | |||

| 26516f667e | |||

| 5586f48ad5 | |||

| cc6e3c92d2 | |||

| a2ef5782d0 | |||

| 0c1c0e21b8 | |||

| ffcc38cf05 | |||

| cc24b68584 | |||

| 8a70067b92 | |||

| 33b227c45b | |||

| fb68be952d | |||

| f413ee087d | |||

| 6495f5dd30 | |||

| 8e09f0590b | |||

| 08d346df9c | |||

| 12cf96e358 | |||

| 765a720d1c | |||

| cace62f94c | |||

| 767c96850d | |||

| b73e78edbb | |||

| 7914cc119d | |||

| 2b13eb2a6c | |||

| 8768e64e97 | |||

| 9212b9ca09 | |||

| 0d0f197682 | |||

| 281e34d1b7 | |||

| 287ba38905 | |||

| ed9dbff4e0 | |||

| 6ba4e48521 | |||

| b7269f2295 | |||

| 5ab317d4a6 | |||

| 431bcf7afa | |||

| 41909e8c5b | |||

| 56245426eb | |||

| 3adcb2c157 | |||

| 6d12185cc9 | |||

| 258c9ffb2c | |||

| dede431dd9 | |||

| 6312d29d80 | |||

| ab5f26545b | |||

| 6567c1342d | |||

| 3d6c2e023c | |||

| 89d930335b | |||

| 04393cd47d | |||

| 28f0cf6cee | |||

| 1af9a9637f | |||

| 1031d671fb | |||

| ee91b22317 | |||

| 220183ed78 | |||

| 504d2ca171 | |||

| d535aa94a1 | |||

| 0376a1909b | |||

| f757077780 | |||

| 9f7114a4a1 | |||

| 7d03da0890 | |||

| 4e0cecae7f | |||

| 72dbb76a15 | |||

| cceb926af3 | |||

| 0d7d29fa57 | |||

| be3276fcdd | |||

| 09c94a170c | |||

| f2a18004a7 | |||

| 1a3ff1bd28 | |||

| a5d3c779c7 | |||

| 9d32e60dc2 | |||

| f6913f56ea | |||

| 801fe8408f | |||

| cf4a979836 | |||

| 91f2946310 | |||

| 2bd7a3c31d | |||

| a681f6759b | |||

| cb849524f3 | |||

| 1f5951693a | |||

| 87748ffd4c | |||

| 0580f5a928 | |||

| 88d9fdec2e | |||

| 506a40ce44 | |||

| bf0e185bd6 | |||

| 5b3ccec10d | |||

| eb07581502 | |||

| 934a2b6878 | |||

| bec6ab47b6 | |||

| 49480f1548 | |||

| 18a3c62d9b | |||

| 6322cf3234 | |||

| 4e2b154342 | |||

| bb1019d1ec | |||

| c2d32030a2 | |||

| 162170fd7b | |||

| ea728e7c5e | |||

| aea6ba4bcd | |||

| ab357c14fc | |||

| 606aa43da0 | |||

| 8bfa802665 | |||

| ff5b73c0b3 | |||

| 86c95014a4 | |||

| 288c950c5e | |||

| b27d4de850 | |||

| 61063ebade | |||

| 3e70e26278 | |||

| 66e7e42800 | |||

| 0fecec14b8 | |||

| a7f24ccb76 | |||

| 08a1bc71c0 | |||

| 04e896a4b4 | |||

| 5dcfb80b36 | |||

| 9da60c39ce | |||

| 379860e457 | |||

| bcfa2d6c79 | |||

| 8b492bbc47 | |||

| a49b7b0f58 | |||

| c781ac414a | |||

| 656dca6edb | |||

| 830adfd151 | |||

| 6f7c8e4ef8 | |||

| 2ba6678766 | |||

| 71a47d1bed | |||

| 51bf6321ea | |||

| aa8916e7c6 | |||

| 2e24da2a0b | |||

| c94ccafb61 | |||

| 80a827d3da | |||

| 6909c8da48 | |||

| c07105a796 | |||

| c40c061a9f | |||

| a9bd27ce5c | |||

| 2e36c4ea2d | |||

| 4e45385a8d | |||

| cf5e925c10 | |||

| 709255d995 | |||

| f3cb636294 | |||

| e3f440b1d0 | |||

| f6b94dd830 | |||

| 3911a1d395 | |||

| ebd3648fd6 | |||

| f698f09cb7 | |||

| 86aa5dae05 | |||

| 179c82ffb4 | |||

| 233017f01f | |||

| 597bbfeacd | |||

| 99a169c17e | |||

| 0613ac90cd | |||

| 78871d829a | |||

| d40a7bf9eb | |||

| b27f576f29 | |||

| 073dfd8b88 | |||

| 509dd57c2e | |||

| 7a837b7a14 | |||

| dee864116a | |||

| e51d0bef97 | |||

| 2fd78112ab | |||

| 5c14bd2888 | |||

| 84b4665e02 | |||

| 26d626a47c | |||

| 6ff6299c65 | |||

| 071e68d99d | |||

| 78c1094d93 | |||

| 56fc639c9f | |||

| 51084a9054 | |||

| f8ae5c93e9 | |||

| ad286c0692 | |||

| a483b3903d | |||

| 6564d39777 | |||

| 8f1b7230fe | |||

| c0b7608965 | |||

| 56dd4132c4 | |||

| 91494cb496 | |||

| 9057eade95 | |||

| a28317b263 | |||

| 25c3603266 | |||

| ae6f2dd11c | |||

| 3aaa1771d5 | |||

| 2034396a3c | |||

| 0cad668065 | |||

| f644a11b82 | |||

| d7e3b2ef29 | |||

| fc5ec87478 | |||

| ed4023127b | |||

| 2bd4e5f5f6 | |||

| d2dcbc26f8 | |||

| 2f05eefe9a | |||

| 7d1afa78b9 | |||

| dac9b020e0 | |||

| eb77b79df9 | |||

| 456998f043 | |||

| c09f07edd9 | |||

| 66320c498c | |||

| 8cb8a0a146 | |||

| aeed8a6ea4 | |||

| c82537462b | |||

| a8a02ff560 | |||

| 72a9df19c8 | |||

| 5b9b9634f9 | |||

| c279a91c03 | |||

| ef6a764509 | |||

| 4db5afdf7e | |||

| 7867187451 | |||

| 4f8e6ec42a | |||

| 64c8a13773 | |||

| 395ab4a287 | |||

| 15dc862056 | |||

| f2daa616d1 | |||

| 64a50f5ad3 | |||

| 1d0f86144c | |||

| 89e93bba9d | |||

| 3290d4c7d6 | |||

| ca22befc93 | |||

| b08df5b9c0 | |||

| ebd3c3291c | |||

| 16728d2f26 | |||

| 34dab66f44 | |||

| 3a111c7499 | |||

| 3600c94ec5 | |||

| e2f8b00e00 | |||

| 65ed1eba48 | |||

| 7fff7977fe | |||

| add5922aac | |||

| a94b54a533 | |||

| bea82b9da6 | |||

| 2e7debe282 | |||

| 1cee5a359c | |||

| b08862405e | |||

| d57e1a6756 | |||

| c9172c5bc9 | |||

| 5d5e877a05 | |||

| 1e794c87ae | |||

| d9cb1b545a | |||

| 23f611f14d | |||

| 42b28d0d69 | |||

| d0cf5f7b65 | |||

| 4699c817e8 | |||

| 4f490c16e9 | |||

| bcdab7a632 | |||

| 7f51af7cbc | |||

| b4ae60cac8 | |||

| 4d03d96e8b | |||

| a39ffebc3a | |||

| 4bba6082ed | |||

| b111632965 | |||

| 0a34b34bfe | |||

| 6b821ece22 | |||

| d3b2096bfd | |||

| 9f1b12bf06 | |||

| e64fca4b04 | |||

| b941e73f4f | |||

| c57873d3cb | |||

| f3bc3275ac | |||

| 8df26e6c5c | |||

| 5c8ecb8150 | |||

| 3928f7740a | |||

| 1767f73e6b | |||

| 9e7d5e93ab | |||

| 70c6ee93a2 | |||

| 5cbf8504ef | |||

| 9a393b023d | |||

| 30bf464f73 | |||

| 9fb1f8934b | |||

| f3f02b23a0 | |||

| 7668cdd32c | |||

| f9dafdcf09 | |||

| d284a419c1 | |||

| b45844e3d9 | |||

| 6caa7e0fff | |||

| 1669fffb8d | |||

| 18aa86eebd | |||

| 075e49d3f4 | |||

| a6695b8365 | |||

| 06ee48b391 | |||

| fcaeffbbd4 | |||

| 6146a9a641 | |||

| 83de8e40d5 | |||

| 30590c46a3 | |||

| a3a5e56287 | |||

| 185c96d63a | |||

| be61ad6eb4 | |||

| 222dfd2259 | |||

| b06e1c7e1d | |||

| 6876abba51 | |||

| 0798466a01 | |||

| 2cda782273 | |||

| 7d1c9554b6 | |||

| a29d16f1a8 | |||

| 6d0c1c0f17 | |||

| 5ed4b5c25b | |||

| 6fe89c5e44 | |||

| fda8c37641 | |||

| 6d5a0ff3a1 | |||

| f8718dd355 | |||

| 85af686797 | |||

| 0f6ec3f15f | |||

| 44644c50ee | |||

| 9749f7eacc | |||

| d9a2bdb9df | |||

| 57e678c94b | |||

| 516f127cfd | |||

| e477add103 | |||

| ba3d577875 | |||

| 917e4f47c4 | |||

| 0143dac247 | |||

| d2390f3616 | |||

| 949ea73402 | |||

| d1e2fe0efe | |||

| 584ada12bf | |||

| 3ead72f654 | |||

| 9ce96d3bd3 | |||

| 5549c003d9 | |||

| 46105bf90b | |||

| 73ce3b3702 | |||

| 1c6225dc2f | |||

| 44874542c8 | |||

| 31f2846aff | |||

| bc08011e72 | |||

| 7cccc216d0 | |||

| 09493603f6 | |||

| e799bd0ba9 | |||

| 40247b0382 | |||

| cd2e9c5119 | |||

| 0b6f7b12b1 | |||

| 86e42ba291 | |||

| e0a18cafd3 | |||

| 8c2f77cab6 | |||

| c1bd6ba1e1 | |||

| df59b89fbb | |||

| 8fd9cc160c | |||

| 28e3f07b63 | |||

| 513d902df1 | |||

| fce14a9f51 | |||

| 884107da01 | |||

| caa79a354a | |||

| 5bb873a2fe | |||

| bc0442d7df | |||

| cfcd33552b | |||

| 5f6b9fd5ba | |||

| 469dce4a2d | |||

| 55d32de331 | |||

| 4491d2d3cb | |||

| f9669b9b9a | |||

| 246d5f37c7 | |||

| 293bfb03dd | |||

| 4def4e696b | |||

| b6e58c030a | |||

| bf00308ab2 | |||

| e3e786e35e | |||

| fd67794574 | |||

| 104b502919 | |||

| a18cd3ba92 | |||

| 0676cad200 | |||

| 3b1d217310 | |||

| 93bcb2e7ba | |||

| ebc70f7919 | |||

| e32af0196e | |||

| 3e5c121c56 | |||

| e644f6ed2c | |||

| 551a7c72f3 | |||

| 05b121841e | |||

| c29aea89ee | |||

| 103e70ccc5 | |||

| ec7ecbe2dd | |||

| 7a06dbb87e | |||

| 1234e434fa | |||

| 2d374f982e | |||

| 4e73630a95 | |||

| e867baa5f9 | |||

| 04b750cb52 | |||

| 97c7b12542 | |||

| 0dfec752a3 | |||

| f16f68e103 | |||

| 4b7f8f9b77 | |||

| 9969d50833 | |||

| 7355c63845 | |||

| 16cac6442a | |||

| 5009ae5548 | |||

| 32647e285e | |||

| 6df334ea68 | |||

| f8501042c1 | |||

| be085b8f6c | |||

| ef557761dd | |||

| 15377ac391 | |||

| ad5fdef6ac | |||

| 0cb5943be8 | |||

| fb593d5f28 | |||

| 645c913e4f | |||

| b4f4cca875 | |||

| 6027513574 | |||

| 849188fdab | |||

| a9c14a5306 | |||

| 2da36a14d1 | |||

| 2ee451f5f7 | |||

| f2d7e94948 | |||

| 2031dfc08a | |||

| 34ede14877 | |||

| 2af3098e5a | |||

| 2e44511b13 | |||

| 7bc4aa7e72 | |||

| e2458bce97 | |||

| ae9789fccc | |||

| 45ef25ea27 | |||

| ad2d413c0b | |||

| 30924ff1e0 | |||

| 383c48968f | |||

| bbe8627a3f | |||

| 2bd36604e2 | |||

| 9ed47ef531 | |||

| 139f98a872 | |||

| c825895190 | |||

| 42e835ebb8 | |||

| a7d5fdf54e | |||

| 3b4e41f6ec | |||

| 5505e1de7d | |||

| 6d329e418b | |||

| 3a11afb57f | |||

| df86e02c9e | |||

| deebc1383e | |||

| 19f2f1a9d3 | |||

| 4dc13ecdd8 | |||

| b4b6e356ef | |||

| 9000f40e61 | |||

| f137c0c05a | |||

| b43a02a9aa | |||

| 30be715900 | |||

| 71cf8e14cb | |||

| ffd4863b23 | |||

| 4c17098bb8 | |||

| bcfdd18599 | |||

| 067662d280 | |||

| 93d02e4686 | |||

| 12de115305 | |||

| b5d13296c6 | |||

| 86288265ad | |||

| a559d94a44 | |||

| 1eb6870853 | |||

| f88c3e9c12 | |||

| 942ca477a6 | |||

| b0e33fb473 | |||

| d58b627b98 | |||

| b85fc35f9a | |||

| bcb466fb76 | |||

| 6db721b5dd | |||

| 140c65e52b | |||

| 29e8d77ce0 | |||

| b66a4ea919 | |||

| d3d59e5024 | |||

| 5285da0418 | |||

| a76e69d709 | |||

| 4d0d775d16 | |||

| 98f67e90d5 | |||

| fee67c2e1a | |||

| c295f26a00 | |||

| 8a09c45f28 | |||

| 79ead42ade | |||

| 94e52e1d17 | |||

| 3931beee81 | |||

| d293c17d21 | |||

| 1a3920e5dc | |||

| ffc3eb1a24 | |||

| 2f5d4a7318 | |||

| 70553f4253 | |||

| 8d39fb4094 | |||

| 7d10b2370f | |||

| 31ec7650ac | |||

| c014920dc1 | |||

| 17e3d4e1ee | |||

| b01c785805 | |||

| 0eea71f878 | |||

| ec7a287801 | |||

| 4bc585a2fe | |||

| 429f2d6765 | |||

| a0c7e3cf04 | |||

| 9cd68129da | |||

| aa6f6117b7 | |||

| 6fa9c87aa4 | |||

| ee14cf9438 | |||

| 0391bbb376 | |||

| 28ada0c634 | |||

| 2c233d23ad | |||

| 59c628803a | |||

| 6b830bc77f | |||

| f30081a313 | |||

| c15648c6b5 | |||

| a02917f502 | |||

| 70d8bd04c0 | |||

| ad2cee0cae | |||

| 756a7122ad | |||

| 3d6ebde756 | |||

| daa30aa992 | |||

| 39459eb238 | |||

| 0325e2f646 | |||

| 93b8b5631f | |||

| 60ab1ce0c1 | |||

| 2f186df52d | |||

| 452e07d432 | |||

| 05d1404b9c | |||

| 2acee24332 | |||

| e7639e55f8 | |||

| f978eca477 | |||

| eb3ac2b367 | |||

| 968d386b36 | |||

| 38cb3d0227 | |||

| 6f606dd5f9 | |||

| bab616cf11 | |||

| 966adc6291 | |||

| 518cb6ec7c | |||

| 34bcd4c237 | |||

| a121127082 | |||

| 50326e94b1 | |||

| 160723b5b4 | |||

| 7991125293 | |||

| 96f61bff30 | |||

| a94488f584 | |||

| f2cf673d3a | |||

| c4595a3dd6 | |||

| 5db118e64b | |||

| 1620c56808 | |||

| e88e0026b1 | |||

| ace9b49e28 | |||

| da90751add | |||

| 8cc566f7b5 | |||

| 02ad199905 | |||

| c3e0811d86 | |||

| 499d1c5709 | |||

| cf16ec45e1 | |||

| daa15dcceb | |||

| 32556cbe5e | |||

| 74d9c674f5 | |||

| a4da558fa0 | |||

| dba6d1d57f | |||

| b01c4338c9 | |||

| 811d947da3 | |||

| de7bf7efe6 | |||

| 5537df9927 | |||

| 81fea93741 | |||

| df1065a2d8 | |||

| c2e3bf2145 | |||

| a4d849ef68 | |||

| 957c9f3853 | |||

| 3958b6b0e1 | |||

| 5d70feb573 | |||

| a22af69335 | |||

| 1213149a2f | |||

| 398b6f75cd | |||

| e46e05e7c5 | |||

| 166028836d | |||

| 3cbe66ba8c | |||

| 99de537a2e | |||

| 1d0afdf9f7 | |||

| 4db6667923 | |||

| 80e16e44aa | |||

| 58b134b793 | |||

| 6efefac2df | |||

| 0c9670ddf0 | |||

| 53c65ddc6a | |||

| 33371c5164 | |||

| 64dd1419c5 | |||

| 108068a417 | |||

| 6e8ed95ada | |||

| 39c9f9e9e8 | |||

| b555588f5d | |||

| 47ef4bb0a0 | |||

| b34654bf97 | |||

| 6068df3ab2 | |||

| bb35999f51 | |||

| 25c51c49aa | |||

| 833bedb46b | |||

| 3d8eba7b42 | |||

| ab0e86ae4b | |||

| 94b35312d0 | |||

| f4ebc65a12 | |||

| 2bc9da4f5e | |||

| e034f258e3 | |||

| 39adf6dbd2 | |||

| 112df5f664 | |||

| 3564b77553 | |||

| c813e93d85 | |||

| ff59385034 | |||

| ea4f812a12 | |||

| dbe540e49f | |||

| c1c0969834 | |||

| b87f26ce26 | |||

| 67335e638c | |||

| 90916f34a7 | |||

| 11b38a6895 | |||

| a1f5fe6a8f | |||

| 5cad164dee | |||

| 7dd28b885d | |||

| c20828478e | |||

| 3e1c88e3e0 | |||

| e98a4ea336 | |||

| e8a5f00866 | |||

| d92b7da733 | |||

| 7ff16baa7d | |||

| 93e60715af | |||

| 14965cfce9 | |||

| da1e3f084d | |||

| 0b0a62420c | |||

| c92c82aa1a | |||

| 4742c08c7c | |||

| 9c6ced1c0a | |||

| a33c9bd774 | |||

| c8a4734b97 | |||

| 3f7ab95890 | |||

| 2d8c2972ae | |||

| 941cf4e63d | |||

| 57610a7471 | |||

| f5a6a3b0e9 | |||

| bab7f89cdc | |||

| cb5d4e836f | |||

| 3a5544f060 | |||

| 412019dbe4 | |||

| f9d9c92560 | |||

| 7f4ff0e615 | |||

| 3eac7164f4 | |||

| f9d25e8e72 | |||

| 52ed57352a | |||

| 1828e7c42f | |||

| 2c89ae4e8a | |||

| 779a460030 | |||

| 0312f939d6 | |||

| 60a8a9e918 | |||

| 89666fc4fe | |||

| 44527ab5be | |||

| a0cf6658c5 | |||

| 5107f23126 | |||

| 4a5557203b | |||

| c020a8502b | |||

| 44481354fc | |||

| 974fb1b09a | |||

| 4e9f0a8255 | |||

| fa1f286cae | |||

| 85bd287b7b | |||

| 0eff3897e3 | |||

| e26e35a9ee | |||

| 980300b381 | |||

| 1cf87e8a0b | |||

| 817d860af5 | |||

| 0be5031a93 | |||

| 1ed488da4f | |||

| ddf1598ef8 | |||

| 4a8a185aa4 | |||

| 4cdeae3283 | |||

| 5030d76acf | |||

| c51e2c8b8c | |||

| eec0420eb3 | |||

| e66ea56bb3 | |||

| eefa0c7b40 | |||

| a489884da4 | |||

| 7a74d3fc9e | |||

| e71204b52f | |||

| ca330b110a | |||

| 6c77476cc1 | |||

| cabd6848e4 | |||

| e3dbc6110e | |||

| 1d6715fe20 | |||

| 06ab3f962f | |||

| df77a8a81a | |||

| 94b7c32eb3 | |||

| 8fdec15a55 | |||

| e8b1217b28 | |||

| f56f06d88d | |||

| 0f7a1e27d0 | |||

| 5114d94ad9 | |||

| f74c42bf00 | |||

| a8e816f450 | |||

| a90c259eda | |||

| e223564a55 | |||

| 7847d77405 | |||

| 089d223922 | |||

| 930085ec9c | |||

| b5f7720ab9 | |||

| a0a2d9885a | |||

| 0557143801 | |||

| 64a15928d7 | |||

| d1fda539b7 | |||

| 95d545e75b | |||

| 491fbfdc8c | |||

| 5d24432322 | |||

| da5bb373e6 | |||

| 6584b35db2 | |||

| e5874ea40d | |||

| 4bad029fd4 | |||

| 7920b9229b | |||

| 9ee6189bf9 | |||

| 9b7d47935a | |||

| 19ec206bad | |||

| fb39971464 | |||

| 3ea1da3b2c | |||

| a0fb1ab86e | |||

| 60f4d285af | |||

| aa092f03d8 | |||

| f2cb3e0b7b | |||

| 5206c98c1b | |||

| 420c2ae745 | |||

| 874179a871 | |||

| 1d9b10d312 | |||

| ccf7a3043f | |||

| 26a614b4d1 | |||

| 297fa957f7 | |||

| 05fb544f23 | |||

| 96cd92a0a9 | |||

| 31c45e0a08 | |||

| 65d4055366 | |||

| 1f2695e875 | |||

| 59556d0943 | |||

| c65e795435 | |||

| 9842be4b15 | |||

| eb6419f02b | |||

| a6d1f6aee4 | |||

| 73d15cf643 | |||

| a4dd9d0b86 | |||

| 49cd6f99d8 | |||

| 159cba815b | |||

| cbb76eae04 | |||

| 21a189fee6 | |||

| 4cff149c46 | |||

| 788ff68c1f | |||

| 009667c26c | |||

| d9f8f39a9a | |||

| 1fe27380f0 | |||

| 621d6a4475 | |||

| 30700ede39 | |||

| 8a76dc8b59 | |||

| f646391f26 | |||

| 1703f2abed | |||

| ee85fe1a9c | |||

| 0703e0e897 | |||

| a442b5f5cc | |||

| 3d6b805652 | |||

| 58f507f9e3 | |||

| 24fe4b8af3 | |||

| 46fa7d987b | |||

| 90a7a79a19 | |||

| 64938f75b3 | |||

| 043be6f55c | |||

| cef9bf7f29 | |||

| dac5a0a07e | |||

| 76ac35cdaa | |||

| c1f0e10a59 | |||

| 1bd76a717d | |||

| cd0929aa5e | |||

| 1486d880b0 | |||

| b738b09606 | |||

| 7188f0851d | |||

| 47700a4c20 | |||

| 3aeb0f5408 | |||

| 07d1acd798 | |||

| 4cffa2219a | |||

| 11852e5c22 | |||

| eb22208a4e | |||

| bf8b4779cc | |||

| 0e7ab61a83 | |||

| 3e4ddf25d0 | |||

| 1a3b585209 | |||

| a5504231b5 | |||

| c63d042c57 | |||

| 70c8d43831 | |||

| 5837d3480c | |||

| ab1382b652 | |||

| 9553e46ed7 | |||

| 34b673844f | |||

| a2a840df93 | |||

| 5b23b67092 | |||

| f9d186d33a | |||

| 93f959b03b | |||

| 8f3f90a986 | |||

| c7a66ddf74 | |||

| b60d7e1476 | |||

| 4a1e099974 | |||

| 4bbe5a095d | |||

| 7280ff8f3d | |||

| f45213a276 | |||

| 346194bd8e | |||

| 959304bc0d | |||

| fffdd5170a | |||

| ec22828169 | |||

| 19c0474ae4 | |||

| ddc0c61692 | |||

| 1fbcb44ae6 | |||

| 7eaaf57d2b | |||

| fc643f2407 | |||

| 939b0a4297 | |||

| 234c8c9ef3 | |||

| 75bad643bd | |||

| 229e3ec184 | |||

| 6ba435f822 | |||

| 774a6f1093 | |||

| 814728a6aa | |||

| 7654698a0e | |||

| ff785e5f17 | |||

| cc645de37b | |||

| cc62ee229e | |||

| 24476090df | |||

| 5fef59beb4 | |||

| 1d0fe075eb | |||

| 47b0797fe1 | |||

| ed401cc29b | |||

| 34ea3fd17e | |||

| 68b400fbf3 | |||

| b3a9e1333d | |||

| 428ec5b2a3 | |||

| 55c42ad681 | |||

| f09c8ab1e8 | |||

| 7a1aa6b563 | |||

| 9ae84f5d6b | |||

| e51e922924 | |||

| 9fcc523485 | |||

| 67d1ab9106 | |||

| da6d2009e0 | |||

| 155132d336 | |||

| 08ddfe03d2 | |||

| 5d4716a8a3 | |||

| aa8f669a3d | |||

| d5e507fc7f | |||

| 620491a649 | |||

| 7edfc57228 | |||

| bd3cf73e6e | |||

| 177505b757 | |||

| 9d9d8cd59f | |||

| 1657af1567 | |||

| acb93d1aed | |||

| 889ad3d4e6 | |||

| 93538def65 | |||

| e5067b6611 | |||

| 0629fb62d7 | |||

| 0177cf3ea4 | |||

| 658aca1469 | |||

| 03df4c7759 | |||

| 0d4f8f4e95 | |||

| ddd3f2084d | |||

| dba3ec9428 | |||

| 9de361a1b9 | |||

| c0c959b1be | |||

| e30bf95989 | |||

| b16cc5d197 | |||

| e6f4a83da6 | |||

| 1a8bae5b2f | |||

| e8eb285a59 | |||

| b508d28123 | |||

| 62b551798f | |||

| dfbebe395c | |||

| 85280b5bf4 | |||

| fb53cfd9b0 | |||

| 92d2123d8d | |||

| ec3de28ae5 | |||

| 86dc136fa9 | |||

| 172f316ac2 | |||

| 941d9da08c | |||

| 5554a4c9f0 | |||

| 9442285526 | |||

| caa40b8dd3 | |||

| 2758353380 | |||

| fe1a956715 | |||

| c05312f151 | |||

| 5966316771 | |||

| 130ee246e2 | |||

| 90af7c73ef | |||

| 3251681207 | |||

| d332c41e71 | |||

| c9da89254b | |||

| eb2d869f71 | |||

| f1e92fe2a3 | |||

| b5400c54df | |||

| a4de6016f8 | |||

| 4807909e3f | |||

| dd0884b707 | |||

| e1634ca6cb | |||

| 651a6edc5c | |||

| ada5edce88 | |||

| 41ce4ca9fc | |||

| 27d32ac5d9 | |||

| 0673d5f44f |

18

.gitignore

vendored

@ -2,16 +2,34 @@ build/

|

||||

dist/

|

||||

torch.egg-info/

|

||||

*/**/__pycache__

|

||||

torch/version.py

|

||||

torch/csrc/generic/TensorMethods.cpp

|

||||

torch/lib/*.so*

|

||||

torch/lib/*.dylib*

|

||||

torch/lib/*.h

|

||||

torch/lib/build

|

||||

torch/lib/tmp_install

|

||||

torch/lib/include

|

||||

torch/lib/torch_shm_manager

|

||||

torch/csrc/cudnn/cuDNN.cpp

|

||||

torch/csrc/nn/THNN.cwrap

|

||||

torch/csrc/nn/THNN.cpp

|

||||

torch/csrc/nn/THCUNN.cwrap

|

||||

torch/csrc/nn/THCUNN.cpp

|

||||

torch/csrc/nn/THNN_generic.cwrap

|

||||

torch/csrc/nn/THNN_generic.cpp

|

||||

torch/csrc/nn/THNN_generic.h

|

||||

docs/src/**/*

|

||||

test/data/legacy_modules.t7

|

||||

test/data/gpu_tensors.pt

|

||||

test/htmlcov

|

||||

test/.coverage

|

||||

*/*.pyc

|

||||

*/**/*.pyc

|

||||

*/**/**/*.pyc

|

||||

*/**/**/**/*.pyc

|

||||

*/**/**/**/**/*.pyc

|

||||

*/*.so*

|

||||

*/**/*.so*

|

||||

*/**/*.dylib*

|

||||

test/data/legacy_serialized.pt

|

||||

|

||||

31

.travis.yml

@ -3,22 +3,27 @@ language: python

|

||||

python:

|

||||

- 2.7.8

|

||||

- 2.7

|

||||

- 3.3

|

||||

- 3.4

|

||||

- 3.5

|

||||

- 3.6

|

||||

- nightly

|

||||

|

||||

cache:

|

||||

- ccache

|

||||

- directories:

|

||||

- $HOME/.ccache

|

||||

|

||||

install:

|

||||

- export CC="gcc-4.8"

|

||||

- export CXX="g++-4.8"

|

||||

- travis_retry pip install -r requirements.txt

|

||||

- travis_retry pip install .

|

||||

- unset CCACHE_DISABLE

|

||||

- export CCACHE_DIR=$HOME/.ccache

|

||||

- export CC="ccache gcc-4.8"

|

||||

- export CXX="ccache g++-4.8"

|

||||

- ccache --show-stats

|

||||

- travis_retry pip install --upgrade pip setuptools wheel

|

||||

- travis_retry pip install -r requirements.txt --only-binary=scipy

|

||||

- python setup.py install

|

||||

|

||||

script:

|

||||

- python test/test_torch.py

|

||||

- python test/test_legacy_nn.py

|

||||

- python test/test_nn.py

|

||||

- python test/test_autograd.py

|

||||

- OMP_NUM_THREADS=2 ./test/run_test.sh

|

||||

|

||||

addons:

|

||||

apt:

|

||||

@ -35,3 +40,9 @@ sudo: false

|

||||

|

||||

matrix:

|

||||

fast_finish: true

|

||||

include:

|

||||

env: LINT_CHECK

|

||||

python: "2.7"

|

||||

addons: true

|

||||

install: pip install flake8

|

||||

script: flake8

|

||||

|

||||

74

CONTRIBUTING.md

Normal file

@ -0,0 +1,74 @@

|

||||

## Contributing to PyTorch

|

||||

|

||||

If you are interested in contributing to PyTorch, your contributions will fall

|

||||

into two categories:

|

||||

1. You want to propose a new Feature and implement it

|

||||

- post about your intended feature, and we shall discuss the design and

|

||||

implementation. Once we agree that the plan looks good, go ahead and implement it.

|

||||

2. You want to implement a feature or bug-fix for an outstanding issue

|

||||

- Look at the outstanding issues here: https://github.com/pytorch/pytorch/issues

|

||||

- Especially look at the Low Priority and Medium Priority issues

|

||||

- Pick an issue and comment on it to say that you want to work on it

|

||||

- If you need more context on a particular issue, please ask and we shall provide.

|

||||

|

||||

Once you finish implementing a feature or bugfix, please send a Pull Request to

|

||||

https://github.com/pytorch/pytorch

|

||||

|

||||

If you are not familiar with creating a Pull Request, here are some guides:

|

||||

- http://stackoverflow.com/questions/14680711/how-to-do-a-github-pull-request

|

||||

- https://help.github.com/articles/creating-a-pull-request/

|

||||

|

||||

|

||||

## Developing locally with PyTorch

|

||||

|

||||

To locally develop with PyTorch, here are some tips:

|

||||

|

||||

1. Uninstall all existing pytorch installs

|

||||

```
conda uninstall pytorch
pip uninstall torch
pip uninstall torch # run this command twice
```

|

||||

|

||||

2. Locally clone a copy of PyTorch from source:

|

||||

|

||||

```
git clone https://github.com/pytorch/pytorch
cd pytorch
```

|

||||

|

||||

3. Install PyTorch in `build develop` mode:

|

||||

|

||||

A full set of instructions for installing PyTorch from source is here:

|

||||

https://github.com/pytorch/pytorch#from-source

|

||||

|

||||

The change you have to make is to replace

|

||||

|

||||

`python setup.py install`

|

||||

|

||||

with

|

||||

|

||||

```
python setup.py build develop
```

|

||||

|

||||

This is especially useful if you are only changing Python files.

|

||||

|

||||

This mode will symlink the python files from the current local source tree into the

|

||||

python install.

|

||||

|

||||

Hence, if you modify a python file, you do not need to reinstall pytorch again and again.

|

||||

|

||||

For example:

|

||||

- Install local pytorch in `build develop` mode

|

||||

- modify your python file torch/__init__.py (for example)

|

||||

- test functionality

|

||||

- modify your python file torch/__init__.py

|

||||

- test functionality

|

||||

- modify your python file torch/__init__.py

|

||||

- test functionality

|

||||

|

||||

You do not need to repeatedly install after modifying python files.
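
One way to confirm that a `build develop` install is picking up your working tree is to check where Python resolves the package from. The sketch below uses only the standard library; the helper name `module_origin` is hypothetical, and `json` stands in for `torch` so that the snippet runs without PyTorch installed.

```python
import importlib.util
import os


def module_origin(name):
    """Return the directory a top-level module or package would be imported from.

    Returns None if the module cannot be found or has no file on disk
    (for example, a built-in module).
    """
    spec = importlib.util.find_spec(name)
    if spec is None or spec.origin is None or not os.path.isfile(spec.origin):
        return None
    return os.path.dirname(os.path.abspath(spec.origin))


if __name__ == "__main__":
    # Replace "json" with "torch" after installing in develop mode; the
    # printed directory should then be inside your local clone.
    print(module_origin("json"))
```

If the printed directory points into `site-packages` rather than your clone, an older install is probably still shadowing the source tree, which is why step 1 asks you to uninstall first.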

|

||||

|

||||

|

||||

Hope this helps, and thanks for considering contributing.

|

||||

38

Dockerfile

Normal file

@ -0,0 +1,38 @@

|

||||

FROM nvidia/cuda:8.0-devel-ubuntu16.04

|

||||

|

||||

RUN echo "deb http://developer.download.nvidia.com/compute/machine-learning/repos/ubuntu1604/x86_64 /" > /etc/apt/sources.list.d/nvidia-ml.list

|

||||

|

||||

ENV CUDNN_VERSION 6.0.20

|

||||

RUN apt-get update && apt-get install -y --no-install-recommends \

|

||||

build-essential \

|

||||

cmake \

|

||||

git \

|

||||

curl \

|

||||

ca-certificates \

|

||||

libjpeg-dev \

|

||||

libpng-dev \

|

||||

libcudnn6=$CUDNN_VERSION-1+cuda8.0 \

|

||||

libcudnn6-dev=$CUDNN_VERSION-1+cuda8.0 && \

|

||||

rm -rf /var/lib/apt/lists/*

|

||||

|

||||

RUN curl -o ~/miniconda.sh -O https://repo.continuum.io/miniconda/Miniconda3-4.2.12-Linux-x86_64.sh && \

|

||||

chmod +x ~/miniconda.sh && \

|

||||

~/miniconda.sh -b -p /opt/conda && \

|

||||

rm ~/miniconda.sh && \

|

||||

/opt/conda/bin/conda install conda-build && \

|

||||

/opt/conda/bin/conda create -y --name pytorch-py35 python=3.5.2 numpy scipy ipython mkl&& \

|

||||

/opt/conda/bin/conda clean -ya

|

||||

ENV PATH /opt/conda/envs/pytorch-py35/bin:$PATH

|

||||

RUN conda install --name pytorch-py35 -c soumith magma-cuda80

|

||||

# This must be done before pip so that requirements.txt is available

|

||||

WORKDIR /opt/pytorch

|

||||

COPY . .

|

||||

|

||||

RUN cat requirements.txt | xargs -n1 pip install --no-cache-dir && \

|

||||

TORCH_CUDA_ARCH_LIST="3.5 5.2 6.0 6.1+PTX" TORCH_NVCC_FLAGS="-Xfatbin -compress-all" \

|

||||

CMAKE_LIBRARY_PATH=/opt/conda/envs/pytorch-py35/lib \

|

||||

CMAKE_INCLUDE_PATH=/opt/conda/envs/pytorch-py35/include \

|

||||

pip install -v .

|

||||

|

||||

WORKDIR /workspace

|

||||

RUN chmod -R a+w /workspace

|

||||

38

LICENSE

Normal file

@ -0,0 +1,38 @@

|

||||

Copyright (c) 2016- Facebook, Inc (Adam Paszke)

|

||||

Copyright (c) 2014- Facebook, Inc (Soumith Chintala)

|

||||

Copyright (c) 2011-2014 Idiap Research Institute (Ronan Collobert)

|

||||

Copyright (c) 2012-2014 Deepmind Technologies (Koray Kavukcuoglu)

|

||||

Copyright (c) 2011-2012 NEC Laboratories America (Koray Kavukcuoglu)

|

||||

Copyright (c) 2011-2013 NYU (Clement Farabet)

|

||||

Copyright (c) 2006-2010 NEC Laboratories America (Ronan Collobert, Leon Bottou, Iain Melvin, Jason Weston)

|

||||

Copyright (c) 2006 Idiap Research Institute (Samy Bengio)

|

||||

Copyright (c) 2001-2004 Idiap Research Institute (Ronan Collobert, Samy Bengio, Johnny Mariethoz)

|

||||

|

||||

All rights reserved.

|

||||

|

||||

Redistribution and use in source and binary forms, with or without

|

||||

modification, are permitted provided that the following conditions are met:

|

||||

|

||||

1. Redistributions of source code must retain the above copyright

|

||||

notice, this list of conditions and the following disclaimer.

|

||||

|

||||

2. Redistributions in binary form must reproduce the above copyright

|

||||

notice, this list of conditions and the following disclaimer in the

|

||||

documentation and/or other materials provided with the distribution.

|

||||

|

||||

3. Neither the names of Facebook, Deepmind Technologies, NYU, NEC Laboratories America

|

||||

and IDIAP Research Institute nor the names of its contributors may be

|

||||

used to endorse or promote products derived from this software without

|

||||

specific prior written permission.

|

||||

|

||||

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

|

||||

AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

|

||||

IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE

|

||||

ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT OWNER OR CONTRIBUTORS BE

|

||||

LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR

|

||||

CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF

|

||||

SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS

|

||||

INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN

|

||||

CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE)

|

||||

ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE

|

||||

POSSIBILITY OF SUCH DAMAGE.

|

||||

22

Makefile

@ -1,22 +0,0 @@

|

||||

# Add main target here - setup.py doesn't understand the need to recompile

|

||||

# after generic files change

|

||||

.PHONY: all clean torch

|

||||

|

||||

all: install

|

||||

|

||||

torch:

|

||||

python3 setup.py build

|

||||

|

||||

install:

|

||||

python3 setup.py install

|

||||

|

||||

clean:

|

||||

@rm -rf build

|

||||

@rm -rf dist

|

||||

@rm -rf torch.egg-info

|

||||

@rm -rf tools/__pycache__

|

||||

@rm -rf torch/csrc/generic/TensorMethods.cpp

|

||||

@rm -rf torch/lib/tmp_install

|

||||

@rm -rf torch/lib/build

|

||||

@rm -rf torch/lib/*.so*

|

||||

@rm -rf torch/lib/*.h

|

||||

470

README.md

@ -1,271 +1,243 @@

|

||||

# pytorch [alpha-1]

|

||||

<p align="center"><img width="40%" src="docs/source/_static/img/pytorch-logo-dark.png" /></p>

|

||||

|

||||

The project is still under active development and is likely to drastically change in short periods of time.

|

||||

We will be announcing API changes and important developments via a newsletter, github issues and post a link to the issues on slack.

|

||||

Please remember that at this stage, this is an invite-only closed alpha, and please don't distribute code further.

|

||||

This is done so that we can control development tightly and rapidly during the initial phases with feedback from you.

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

PyTorch is a python package that provides two high-level features:

|

||||

- Tensor computation (like numpy) with strong GPU acceleration

|

||||

- Deep Neural Networks built on a tape-based autograd system

|

||||

|

||||

You can reuse your favorite python packages such as numpy, scipy and Cython to extend PyTorch when needed.

|

||||

|

||||

We are in an early-release Beta. Expect some adventures and rough edges.

|

||||

|

||||

- [More About PyTorch](#more-about-pytorch)

|

||||

- [Installation](#installation)

|

||||

- [Binaries](#binaries)

|

||||

- [From source](#from-source)

|

||||

- [Docker image](#docker-image)

|

||||

- [Getting Started](#getting-started)

|

||||

- [Communication](#communication)

|

||||

- [Releases and Contributing](#releases-and-contributing)

|

||||

- [The Team](#the-team)

|

||||

|

||||

| System | Python | Status |
| --- | --- | --- |
| Linux CPU | 2.7.8, 2.7, 3.5, nightly | [Build status](https://travis-ci.org/pytorch/pytorch) |
| Linux GPU | 2.7 | [Build status](https://build.pytorch.org/job/pytorch-master-py2) |
| Linux GPU | 3.5 | [Build status](https://build.pytorch.org/job/pytorch-master-py3) |

|

||||

|

||||

## More about PyTorch

|

||||

|

||||

At a granular level, PyTorch is a library that consists of the following components:

|

||||

|

||||

<table>
<tr>
    <td><b> torch </b></td>
    <td> a Tensor library like NumPy, with strong GPU support </td>
</tr>
<tr>
    <td><b> torch.autograd </b></td>
    <td> a tape based automatic differentiation library that supports all differentiable Tensor operations in torch </td>
</tr>
<tr>
    <td><b> torch.nn </b></td>
    <td> a neural networks library deeply integrated with autograd designed for maximum flexibility </td>
</tr>
<tr>
    <td><b> torch.multiprocessing </b></td>
    <td> python multiprocessing, but with magical memory sharing of torch Tensors across processes. Useful for data loading and hogwild training. </td>
</tr>
<tr>
    <td><b> torch.utils </b></td>
    <td> DataLoader, Trainer and other utility functions for convenience </td>
</tr>
<tr>
    <td><b> torch.legacy(.nn/.optim) </b></td>
    <td> legacy code that has been ported over from torch for backward compatibility reasons </td>
</tr>
</table>

|

||||

|

||||

Usually one uses PyTorch either as:

|

||||

|

||||

- A replacement for numpy to use the power of GPUs.

|

||||

- a deep learning research platform that provides maximum flexibility and speed

|

||||

|

||||

Elaborating further:

|

||||

|

||||

### A GPU-ready Tensor library

|

||||

|

||||

If you use numpy, then you have used Tensors (a.k.a. ndarray).

|

||||

|

||||

<p align=center><img width="30%" src="docs/source/_static/img/tensor_illustration.png" /></p>

|

||||

|

||||

PyTorch provides Tensors that can live either on the CPU or the GPU, and accelerate

|

||||

compute by a huge amount.

|

||||

|

||||

We provide a wide variety of tensor routines to accelerate and fit your scientific computation needs

|

||||

such as slicing, indexing, math operations, linear algebra, reductions.

|

||||

And they are fast!

|

||||

|

||||

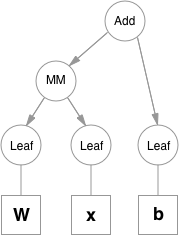

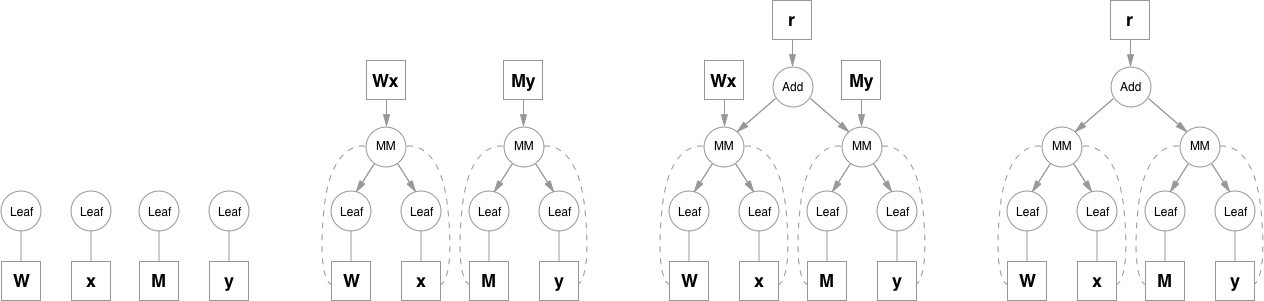

### Dynamic Neural Networks: Tape based Autograd

|

||||

|

||||

PyTorch has a unique way of building neural networks: using and replaying a tape recorder.

|

||||

|

||||

Most frameworks such as `TensorFlow`, `Theano`, `Caffe` and `CNTK` have a static view of the world.

|

||||

One has to build a neural network, and reuse the same structure again and again.

|

||||

Changing the way the network behaves means that one has to start from scratch.

|

||||

|

||||

With PyTorch, we use a technique called Reverse-mode auto-differentiation, which allows you to

|

||||

change the way your network behaves arbitrarily with zero lag or overhead. Our inspiration comes

|

||||

from several research papers on this topic, as well as current and past work such as

|

||||

[autograd](https://github.com/twitter/torch-autograd),

|

||||

[autograd](https://github.com/HIPS/autograd),

|

||||

[Chainer](http://chainer.org), etc.

|

||||

|

||||

While this technique is not unique to PyTorch, it's one of the fastest implementations of it to date.

|

||||

You get the best of speed and flexibility for your crazy research.
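
The tape-recorder idea can be illustrated with a toy, pure-Python sketch. This is not how PyTorch's autograd is implemented; the names `Tape`, `Var` and `backward` are hypothetical and only scalar addition and multiplication are supported. Each operation is logged as it executes, and gradients are obtained by replaying the log in reverse.

```python
class Tape:
    """Records operations in execution order so they can be replayed backwards."""

    def __init__(self):
        self.entries = []  # list of (output, [(input, local_gradient), ...])


class Var:
    """A scalar value that logs every operation applied to it on a tape."""

    def __init__(self, value, tape):
        self.value = value
        self.grad = 0.0
        self.tape = tape

    def _record(self, value, parents):
        out = Var(value, self.tape)
        self.tape.entries.append((out, parents))
        return out

    def __add__(self, other):
        return self._record(self.value + other.value,
                            [(self, 1.0), (other, 1.0)])

    def __mul__(self, other):
        return self._record(self.value * other.value,
                            [(self, other.value), (other, self.value)])


def backward(output):
    """Replay the tape in reverse, accumulating d(output)/d(var) into var.grad."""
    output.grad = 1.0
    for out, parents in reversed(output.tape.entries):
        for parent, local_grad in parents:
            parent.grad += local_grad * out.grad


if __name__ == "__main__":
    tape = Tape()
    x = Var(3.0, tape)
    y = Var(4.0, tape)
    z = x * y + x          # recorded while it runs; no graph is declared up front
    backward(z)
    print(x.grad, y.grad)  # prints: 5.0 3.0
```

Because the tape is filled while ordinary Python code runs, loops and branches that depend on the data simply produce a different tape on each run, which is the flexibility the paragraph above describes.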

|

||||

|

||||

<p align=center><img width="80%" src="docs/source/_static/img/dynamic_graph.gif" /></p>

|

||||

|

||||

### Python first

|

||||

|

||||

PyTorch is not a Python binding into a monolithic C++ framework.

|

||||

It is built to be deeply integrated into Python.

|

||||

You can use it naturally like you would use numpy / scipy / scikit-learn etc.

|

||||

You can write your new neural network layers in Python itself, using your favorite libraries

|

||||

and use packages such as Cython and Numba.

|

||||

Our goal is to not reinvent the wheel where appropriate.

|

||||

|

||||

### Imperative experiences

|

||||

|

||||

PyTorch is designed to be intuitive, linear in thought and easy to use.

|

||||

When you execute a line of code, it gets executed. There isn't an asynchronous view of the world.

|

||||

When you drop into a debugger, or receive error messages and stack traces, understanding them is straightforward.

|

||||

The stack-trace points to exactly where your code was defined.

|

||||

We hope you never spend hours debugging your code because of bad stack traces or asynchronous and opaque execution engines.

|

||||

|

||||

### Fast and Lean

|

||||

|

||||

PyTorch has minimal framework overhead. We integrate acceleration libraries

|

||||

such as Intel MKL and NVIDIA (CuDNN, NCCL) to maximize speed.

|

||||

At the core, its CPU and GPU Tensor and Neural Network backends

|

||||

(TH, THC, THNN, THCUNN) are written as independent libraries with a C99 API.

|

||||

They are mature and have been tested for years.

|

||||

|

||||

Hence, PyTorch is quite fast -- whether you run small or large neural networks.

|

||||

|

||||

The memory usage in PyTorch is extremely efficient compared to Torch or some of the alternatives.

|

||||

We've written custom memory allocators for the GPU to make sure that

|

||||

your deep learning models are maximally memory efficient.

|

||||

This enables you to train bigger deep learning models than before.

|

||||

|

||||

### Extensions without pain

|

||||

|

||||

Writing new neural network modules, or interfacing with PyTorch's Tensor API, was designed to be straightforward

|

||||

and with minimal abstractions.

|

||||

|

||||

You can write new neural network layers in Python using the torch API

|

||||

[or your favorite numpy based libraries such as SciPy](http://pytorch.org/tutorials/advanced/numpy_extensions_tutorial.html).

|

||||

|

||||

If you want to write your layers in C/C++, we provide an extension API based on

|

||||

[cffi](http://cffi.readthedocs.io/en/latest/) that is efficient and with minimal boilerplate.

|

||||

There is no wrapper code that needs to be written. You can see [a tutorial here](http://pytorch.org/tutorials/advanced/c_extension.html) and [an example here](https://github.com/pytorch/extension-ffi).

|

||||

|

||||

|

||||

## Installation

|

||||

|

||||

### Binaries

|

||||

Commands to install from binaries via Conda or pip wheels are on our website:

|

||||

|

||||

[http://pytorch.org](http://pytorch.org)

|

||||

|

||||

### From source

|

||||

|

||||

If you are installing from source, we highly recommend installing an [Anaconda](https://www.continuum.io/downloads) environment.

|

||||

You will get a high-quality BLAS library (MKL) and you get a controlled compiler version regardless of your Linux distro.

|

||||

|

||||

Once you have [anaconda](https://www.continuum.io/downloads) installed, here are the instructions.

|

||||

|

||||

If you want to compile with CUDA support, install

|

||||

- [NVIDIA CUDA](https://developer.nvidia.com/cuda-downloads) 7.5 or above

|

||||

- [NVIDIA CuDNN](https://developer.nvidia.com/cudnn) v5.x

|

||||

|

||||

If you want to disable CUDA support, export environment variable `NO_CUDA=1`.

|

||||

|

||||

#### Install optional dependencies

|

||||

|

||||

On Linux

|

||||

```bash

|

||||

pip install -r requirements.txt

|

||||

pip install .

|

||||

export CMAKE_PREFIX_PATH=[anaconda root directory]

|

||||

|

||||

# Install basic dependencies

|

||||

conda install numpy mkl setuptools cmake gcc cffi

|

||||

|

||||

# Add LAPACK support for the GPU

|

||||

conda install -c soumith magma-cuda75 # or magma-cuda80 if CUDA 8.0

|

||||

```

|

||||

|

||||

To install with CUDA support change `WITH_CUDA = False` to `WITH_CUDA = True` in `setup.py`.

|

||||

On OSX

|

||||

```bash

|

||||

export CMAKE_PREFIX_PATH=[anaconda root directory]

|

||||

conda install numpy setuptools cmake cffi

|

||||

```

|

||||

|

||||

#### Install PyTorch

|

||||

```bash

|

||||

export MACOSX_DEPLOYMENT_TARGET=10.9 # if OSX

|

||||

pip install -r requirements.txt

|

||||

python setup.py install

|

||||

```

|

||||

|

||||

### Docker image

|

||||

|

||||

A Dockerfile is supplied to build images with CUDA support and cuDNN v6. Build as usual:

|

||||

```
docker build -t pytorch-cudnnv6 .
```

|

||||

and run with nvidia-docker:

|

||||

```
nvidia-docker run --rm -ti --ipc=host pytorch-cudnnv6
```

|

||||

Please note that pytorch uses shared memory to share data between processes, so if torch multiprocessing is used (e.g.

|

||||

for multithreaded data loaders) the default shared memory segment size that the container runs with is not enough, and you

|

||||

should increase shared memory size either with --ipc=host or --shm-size command line options to nvidia-docker run.
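
A quick way to see how much shared memory a container actually has is to inspect the shared memory mount from inside it. The sketch below assumes a Linux container where shared memory is mounted at `/dev/shm`; the helper names `shared_memory_bytes` and `shm_is_sufficient` are hypothetical, and the 1 GiB threshold is an arbitrary example rather than a documented requirement.

```python
import os
import shutil


def shared_memory_bytes(path="/dev/shm"):
    """Return the total size in bytes of the shared memory mount, or None if absent."""
    if not os.path.isdir(path):
        return None
    return shutil.disk_usage(path).total


def shm_is_sufficient(required_bytes, path="/dev/shm"):
    """Return True if the mount holds at least required_bytes, False otherwise.

    Returns None when the mount does not exist (for example on non-Linux hosts).
    """
    total = shared_memory_bytes(path)
    if total is None:
        return None
    return total >= required_bytes


if __name__ == "__main__":
    one_gib = 1024 ** 3  # arbitrary example threshold
    print("shared memory bytes:", shared_memory_bytes())
    print("at least 1 GiB:", shm_is_sufficient(one_gib))
```

If the reported size is small, restarting the container with `--ipc=host` or a larger `--shm-size` as described above should raise it.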

|

||||

|

||||

|

||||

## Getting Started

|

||||

|

||||

Three pointers to get you started:

|

||||

- [Tutorials: get you started with understanding and using PyTorch](http://pytorch.org/tutorials/)

|

||||

- [Examples: easy to understand pytorch code across all domains](https://github.com/pytorch/examples)

|

||||

- The API Reference: [http://pytorch.org/docs/](http://pytorch.org/docs/)

|

||||

|

||||

## Communication

|

||||

* forums: discuss implementations, research, etc. http://discuss.pytorch.org

|

||||

* github issues: bug reports, feature requests, install issues, RFCs, thoughts, etc.

|

||||

* slack: general chat, online discussions, collaboration etc. https://pytorch.slack.com/ . If you need a slack invite, ping me at soumith@pytorch.org

|

||||

* slack: general chat, online discussions, collaboration etc. https://pytorch.slack.com/ . If you need a slack invite, ping us at soumith@pytorch.org

|

||||

* newsletter: no-noise, one-way email newsletter with important announcements about pytorch. You can sign-up here: http://eepurl.com/cbG0rv

|

||||

|

||||

## Timeline

|

||||

## Releases and Contributing

|

||||

|

||||

We will run the alpha releases weekly for 6 weeks.

|

||||

After that, we will reevaluate progress, and if we are ready, we will hit beta-0. If not, we will do another two weeks of alpha.

|

||||

PyTorch has a 90 day release cycle (major releases).

|

||||

Its current state is Beta (v0.1.6); we expect no obvious bugs. Please let us know if you encounter a bug by [filing an issue](https://github.com/pytorch/pytorch/issues).

|

||||

|

||||

* alpha-0: Working versions of torch, cutorch, nn, cunn, optim fully unit tested with seamless numpy conversions

|

||||

* alpha-1: Serialization to/from disk with sharing intact. initial release of the new neuralnets package based on a Chainer-like design

|

||||

* alpha-2: sharing tensors across processes for hogwild training or data-loading processes. a rewritten optim package for this new nn.

|

||||

* alpha-3: binary installs (prob will take @alexbw 's help here), contbuilds, etc.

|

||||

* alpha-4: a ton of examples across vision, nlp, speech, RL -- this phase might make us rethink parts of the APIs, and hence want to do this in alpha than beta

|

||||

* alpha-5: Putting a simple and efficient story around multi-machine training. Probably simplistic like torch-distlearn. Building the website, release scripts, more documentation, etc.

|

||||

* alpha-6: [no plan yet]

|

||||

We appreciate all contributions. If you are planning to contribute back bug-fixes, please do so without any further discussion.

|

||||

|

||||

The beta phases will be leaning more towards working with all of you, covering your use-cases, and active development on non-core aspects.

|

||||

If you plan to contribute new features, utility functions or extensions to the core, please first open an issue and discuss the feature with us.

|

||||

Sending a PR without discussion might end up resulting in a rejected PR, because we might be taking the core in a different direction than you might be aware of.

|

||||

|

||||

## pytorch vs torch: important changes

|

||||

**For the next release cycle, these are the 3 big features we are planning to add:**

|

||||

|

||||

We've decided that it's time to rewrite/update parts of the old torch API, even if it means losing some of backward compatibility (we can hack up a model converter that converts correctly).

|

||||

This section lists the biggest changes, and suggests how to shift from torch to pytorch.

|

||||

1. [Distributed PyTorch](https://github.com/pytorch/pytorch/issues/241) (a draft implementation is present in this [branch](https://github.com/apaszke/pytorch-dist) )

|

||||

2. Backward of Backward - Backpropagating through the optimization process itself. Some past and recent papers such as

|

||||

[Double Backprop](http://yann.lecun.com/exdb/publis/pdf/drucker-lecun-91.pdf) and [Unrolled GANs](https://arxiv.org/abs/1611.02163) need this.

|

||||

3. Lazy Execution Engine for autograd - This will enable us to optionally introduce caching and JIT compilers to optimize autograd code.

|

||||

|

||||

For now there's no pytorch documentation.

|

||||

Since all currently implemented modules are very similar to the old ones, it's best to use torch7 docs for now (having in mind several differences described below).

|

||||

|

||||

### Library structure

|

||||

## The Team

|

||||

|

||||

All core modules are merged into a single repository.

|

||||

Most of them will be rewritten and will be completely new (more on this below), but we're providing a Python version of old packages under torch.legacy namespace.

|

||||

* torch (torch)

|

||||

* cutorch (torch.cuda)

|

||||

* nn (torch.legacy.nn)

|

||||

* cunn (torch.legacy.cunn)

|

||||

* optim (torch.legacy.optim)

|

||||