Cap the opset version at 17 for `torch.onnx.export` and suggest that users switch to the dynamo exporter. Warn users instead of failing hard, because we should still allow users to create custom symbolic functions for opset > 17.

Also updates the default opset version by running `tools/onnx/update_default_opset_version.py`.
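A minimal sketch of the warn-instead-of-fail behavior described above. `check_opset_version` and `MAX_TORCHSCRIPT_OPSET` are hypothetical names and the warning text is illustrative; this is not the code in `torch.onnx`.

```python
import warnings

# Hypothetical constant: highest opset the TorchScript-based exporter targets.
MAX_TORCHSCRIPT_OPSET = 17


def check_opset_version(opset_version: int) -> int:
    """Warn (rather than raise) when the requested opset exceeds the cap.

    The requested version is returned unchanged, so custom symbolic
    functions registered for newer opsets remain usable.
    """
    if opset_version > MAX_TORCHSCRIPT_OPSET:
        warnings.warn(
            f"Opset version {opset_version} is newer than the latest version "
            f"supported by torch.onnx.export ({MAX_TORCHSCRIPT_OPSET}). "
            "Consider the dynamo-based exporter; custom symbolic functions "
            "can still be used for newer opsets.",
            UserWarning,
            stacklevel=2,
        )
    return opset_version
```

Returning the version instead of clamping it is the design choice the PR describes: the user is informed, but export can still proceed with custom symbolics.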

Fixes #107801, fixes #107446

Pull Request resolved: https://github.com/pytorch/pytorch/pull/107829

Approved by: https://github.com/BowenBao

Fixes #97728, fixes #98622

Fixes https://github.com/microsoft/onnx-script/issues/393

Provide `op_level_debug` in the exporter, which creates randomized `torch.Tensor` inputs based on the real shapes recorded by FakeTensorProp and feeds them to both the torch ops and the ONNX symbolic functions. The PR leverages the `Transformer` class to create a new `fx.Graph`, but shares the same Module with the original one to save memory.

The test differs from [op_correctness_test.py](https://github.com/microsoft/onnx-script/blob/main/onnxscript/tests/function_libs/torch_aten/ops_correctness_test.py) in that `op_level_debug` generates real tensors based on the fake tensors in the model.
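The idea behind `op_level_debug` can be illustrated with a dependency-free sketch: materialize a random input from a known shape, run a reference op and a candidate op on it, and compare the results elementwise. Flat Python lists stand in for `torch.Tensor`, and plain callables stand in for the torch op and the ONNX symbolic function; `random_tensor`, `op_level_check`, and the ReLU variants are hypothetical, not the exporter's API.

```python
import math
import random
from typing import Callable, List, Sequence

Op = Callable[[List[float]], List[float]]


def random_tensor(shape: Sequence[int], rng: random.Random) -> List[float]:
    """Flat list of random floats with as many elements as `shape` implies.

    Stand-in for turning a fake tensor's shape into a real tensor.
    """
    return [rng.uniform(-1.0, 1.0) for _ in range(math.prod(shape))]


def op_level_check(
    reference_op: Op,
    candidate_op: Op,
    shape: Sequence[int],
    rtol: float = 1e-5,
    atol: float = 1e-6,
    seed: int = 0,
) -> bool:
    """Run both implementations on the same random input and compare them."""
    rng = random.Random(seed)  # fixed seed keeps the check reproducible
    x = random_tensor(shape, rng)
    expected = reference_op(x)
    actual = candidate_op(x)
    if len(expected) != len(actual):
        return False
    return all(
        math.isclose(a, e, rel_tol=rtol, abs_tol=atol)
        for a, e in zip(actual, expected)
    )


# Hypothetical ops: a reference ReLU, a correct port, and a buggy port.
def relu_ref(xs: List[float]) -> List[float]:
    return [max(v, 0.0) for v in xs]


def relu_ok(xs: List[float]) -> List[float]:
    return [v if v > 0 else 0.0 for v in xs]


def relu_bad(xs: List[float]) -> List[float]:
    return [abs(v) for v in xs]  # wrong for negative inputs
```

In this sketch a mismatch is only reported as `False`; the PR's exporter similarly labels results rather than stopping the export.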

Limitations:

1. Some trace_only functions are not supported because they lack a param_schema, which leads to args/kwargs being split incorrectly and to ndarray wrapping issues. (WARNINGS in SARIF)

2. Ops with dim/indices (INT64) arguments are not supported, because generating valid values for them requires information (shape) from other input args. (WARNINGS in SARIF)

3. sym_size and built-in ops are not supported.

4. op_level_debug only labels results in SARIF. It doesn't stop exporter.

5. Introduce an ONNX-owned FakeTensorProp that supports int/float/bool.

6. Parametrize op_level_debug and dynamic_shapes in the FX tests.
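Limitation 2 can be illustrated as follows: an arbitrary INT64 value is almost never a valid `dim`, because the valid range depends on the rank of a different argument. The sketch below, with hypothetical helper names, only samples a `dim` once that shape is known, using the `[-rank, rank - 1]` range that PyTorch accepts for dimension arguments.

```python
import random
from typing import Sequence


def _effective_rank(shape: Sequence[int]) -> int:
    # Treat a 0-d tensor as rank 1 so the valid range is non-empty.
    return max(len(shape), 1)


def random_dim_for(shape: Sequence[int], rng: random.Random) -> int:
    """Sample a dim that is valid for a tensor with the given shape.

    The shape comes from another input argument (the tensor), which is
    exactly the cross-argument information limitation 2 refers to.
    """
    rank = _effective_rank(shape)
    return rng.randint(-rank, rank - 1)  # randint is inclusive on both ends


def is_valid_dim(dim: int, shape: Sequence[int]) -> bool:
    rank = _effective_rank(shape)
    return -rank <= dim < rank
```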

Pull Request resolved: https://github.com/pytorch/pytorch/pull/97494

Approved by: https://github.com/justinchuby, https://github.com/BowenBao

* Update the CI test environment to install onnx and onnx-script.

* Add symbolic function for `bitwise_or`, `convert_element_type` and `masked_fill_`.

* Update symbolic function for `slice` and `arange`.

* Update .pyi signature for `_jit_pass_onnx_graph_shape_type_inference`.

Co-authored-by: Wei-Sheng Chin <wschin@outlook.com>

Co-authored-by: Ti-Tai Wang <titaiwang@microsoft.com>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/94564

Approved by: https://github.com/abock

## Summary

The change brings the new registry for symbolic functions in ONNX. The `SymbolicRegistry` class in `torch.onnx._internal.registration` replaces the dictionary and various functions defined in `torch.onnx.symbolic_registry`.

The new registry

- Has faster lookup by storing functions only in the opset version in which they are defined

- Is easier to manage and interact with due to its class design

- Builds the foundation for the more flexible registration process detailed in #83787
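To illustrate storing functions only in the opset version in which they are defined, here is a minimal sketch of such a registry. It assumes the usual exporter rule that a symbolic function defined for opset N also serves later opsets until a newer registration overrides it. `OpsetRegistry`, `register`, and `get` are hypothetical and do not reproduce the actual `SymbolicRegistry` API.

```python
import bisect
from typing import Callable, Dict, List, Optional, Tuple

SymbolicFn = Callable[..., object]


class OpsetRegistry:
    """Hypothetical registry: each function is stored once, at its own opset."""

    def __init__(self) -> None:
        # op name -> sorted opset versions that have a registration
        self._versions: Dict[str, List[int]] = {}
        # (op name, opset) -> symbolic function
        self._functions: Dict[Tuple[str, int], SymbolicFn] = {}

    def register(self, name: str, opset: int, fn: SymbolicFn) -> None:
        versions = self._versions.setdefault(name, [])
        if opset not in versions:
            bisect.insort(versions, opset)  # keep versions sorted
        self._functions[(name, opset)] = fn

    def get(self, name: str, opset: int) -> Optional[SymbolicFn]:
        """Return the function from the highest registered opset <= `opset`."""
        versions = self._versions.get(name, [])
        i = bisect.bisect_right(versions, opset) - 1
        if i < 0:
            return None  # op not defined at or before this opset
        return self._functions[(name, versions[i])]
```

Because nothing is copied forward into later opsets, adding a new opset version does not require touching existing entries.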

Implementation changes

- **Breaking**: Remove `torch.onnx.symbolic_registry`

- `register_custom_op_symbolic` and `unregister_custom_op_symbolic` in `utils` keep their API for compatibility

- Update `_onnx_supported_ops.py` for doc generation to include quantized ops.

- Update code to register python ops in `torch/csrc/jit/passes/onnx.cpp`

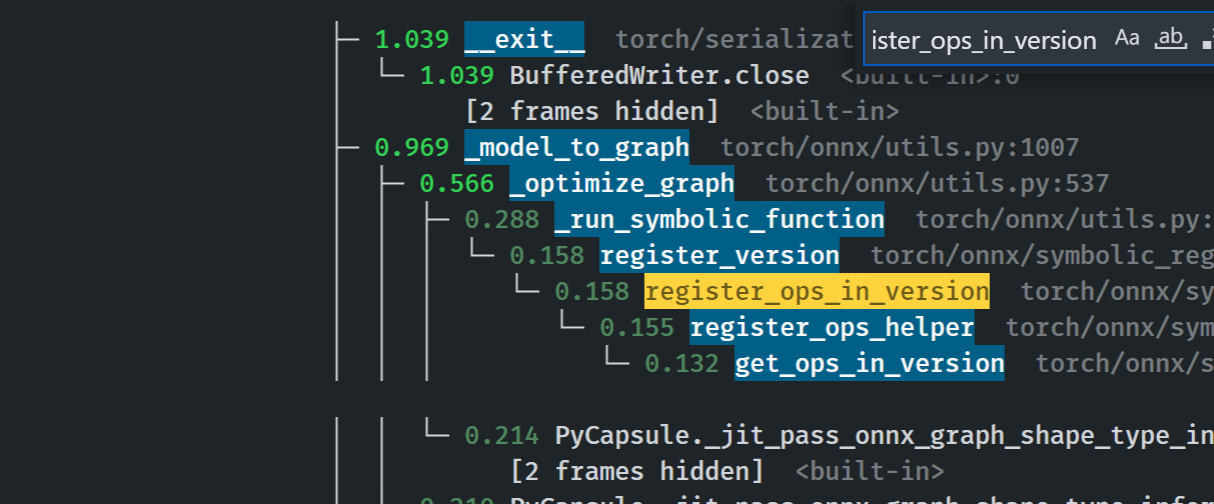

## Profiling results

Execution time decreased by 0.1 seconds, and time spent in `_run_symbolic_function` decreased by 34%. Tested on the AlexNet example from the public docs.

### After

```
└─ 1.641 export <@beartype(torch.onnx.utils.export) at 0x7f19be17f790>:1
└─ 1.641 export torch/onnx/utils.py:185
└─ 1.640 _export torch/onnx/utils.py:1331
├─ 0.889 _model_to_graph torch/onnx/utils.py:1005
│ ├─ 0.478 _optimize_graph torch/onnx/utils.py:535
│ │ ├─ 0.214 PyCapsule._jit_pass_onnx_graph_shape_type_inference <built-in>:0
│ │ │ [2 frames hidden] <built-in>
│ │ ├─ 0.190 _run_symbolic_function torch/onnx/utils.py:1670
│ │ │ └─ 0.145 Constant torch/onnx/symbolic_opset9.py:5782
│ │ │ └─ 0.139 _graph_op torch/onnx/_patch_torch.py:18
│ │ │ └─ 0.134 PyCapsule._jit_pass_onnx_node_shape_type_inference <built-in>:0
│ │ │ [2 frames hidden] <built-in>
│ │ └─ 0.033 [self]
```

### Before

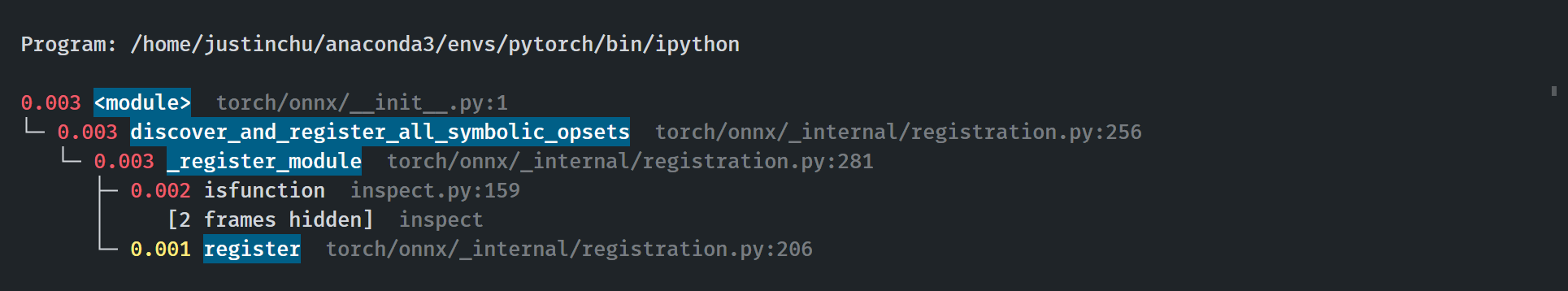

### Start up time

The startup process takes 0.03 seconds. Calls to `inspect` will be eliminated when we switch to decorator-based registration in #84448.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/84382

Approved by: https://github.com/AllenTiTaiWang, https://github.com/BowenBao

Reduce circular dependencies

- Lift constants and flags from `symbolic_helper` to `_constants` and `_globals`

- Standardize constant naming for consistency

- Make `utils` strictly dependent on `symbolic_helper`, removing inline imports from `symbolic_helper`

- Move side effects from `utils` to `_patch_torch`
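The goal of these changes can be checked mechanically by treating modules as nodes in an import graph and searching for cycles. The graphs below are simplified and hypothetical: `before` assumes `symbolic_helper` imported `utils` inline, and `after` assumes the shared constants and flags now live in leaf modules `_constants` and `_globals`. `find_cycle` is an illustrative helper, not part of PyTorch.

```python
from typing import Dict, List


def find_cycle(graph: Dict[str, List[str]]) -> List[str]:
    """Return one import cycle as a list of modules, or [] if there is none.

    Depth-first search with three colors: WHITE (unvisited), GRAY (on the
    current path), BLACK (fully explored). Reaching a GRAY node means a cycle.
    """
    WHITE, GRAY, BLACK = 0, 1, 2
    color = {node: WHITE for node in graph}
    path: List[str] = []

    def visit(node: str) -> List[str]:
        color[node] = GRAY
        path.append(node)
        for dep in graph.get(node, []):
            state = color.get(dep, WHITE)
            if state == GRAY:
                return path[path.index(dep):] + [dep]
            if state == WHITE:
                cycle = visit(dep)
                if cycle:
                    return cycle
        path.pop()
        color[node] = BLACK
        return []

    for node in graph:
        if color[node] == WHITE:
            cycle = visit(node)
            if cycle:
                return cycle
    return []


# Hypothetical, simplified import graphs (module -> modules it imports).
before = {"utils": ["symbolic_helper"], "symbolic_helper": ["utils"]}
after = {
    "utils": ["symbolic_helper"],
    "symbolic_helper": ["_constants", "_globals"],
    "_constants": [],
    "_globals": [],
}
```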

Pull Request resolved: https://github.com/pytorch/pytorch/pull/77142

Approved by: https://github.com/garymm, https://github.com/BowenBao