Summary:

The `tensor_proto` function in the TensorBoard summary writer code doesn't correctly encode `torch.bfloat16` tensors; it tries to use a data type of `DT_BFLOAT` when creating the protobuf, but `DT_BFLOAT` is not a valid enum value (see `types.proto`). The correct value to use when encoding tensors of this type is `DT_BFLOAT16`. This diff updates the type map in the summary code to use the correct type.

While fixing this error, I also noticed the wrong field of the protobuf was being used when encoding tensors of this type; per the docs in the proto file, the `DT_HALF` and `DT_BFLOAT16` types should use the `half_val` field, not `float_val`. Since this might confuse folks trying to read this data from storage in the future, I've updated the code to correctly use the `half_val` field for these cases. Note that there's no real size advantage from doing this, since both the `half_val` and `float_val` fields are 32 bits long.
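To make the `half_val` point concrete, here is a minimal standard-library sketch of the 16-bit patterns involved. It assumes `half_val` holds each element's raw 16-bit pattern as an integer; the helper names `float16_bits` and `bfloat16_bits` are hypothetical and are not the functions used in `summary.py`. The bfloat16 helper truncates rather than rounds, which is enough for illustration.

```python
import struct


def float16_bits(value: float) -> int:
    """Return the IEEE 754 half-precision bit pattern of value as an int."""
    # "<e" packs a half-precision float; "<H" reads the same 2 bytes as uint16.
    return struct.unpack("<H", struct.pack("<e", value))[0]


def bfloat16_bits(value: float) -> int:
    """Return the bfloat16 bit pattern of value (truncating, no rounding)."""
    # bfloat16 keeps the sign, the 8 exponent bits, and the top 7 mantissa
    # bits of a float32, i.e. the upper 16 bits of the float32 pattern.
    float32_bits = struct.unpack("<I", struct.pack("<f", value))[0]
    return float32_bits >> 16


# Hypothetical payload: integers like these would go into half_val.
half_val = [float16_bits(v) for v in (1.0, -2.0)]
bfloat_val = [bfloat16_bits(v) for v in (1.0, -2.0)]
print([hex(b) for b in half_val], [hex(b) for b in bfloat_val])
```

Each value fits in 16 bits, but it is stored in a 32-bit integer slot, which matches the observation that switching fields brings no size advantage.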

Test Plan:

Added a parameterized unit test that tests encoding tensors with `torch.half`, `torch.float16`, and `torch.bfloat16` data types.

# Before this change

The test fails with a `ValueError` due to the incorrect enum label:

```
======================================================================
ERROR: test_bfloat16_tensor_proto (test_tensorboard.TestTensorProtoSummary)
----------------------------------------------------------------------
Traceback (most recent call last):
  File "/data/users/jcarreiro/fbsource/buck-out/v2/gen/fbcode/f88b3f368c9334db/caffe2/test/__tensorboard__/tensorboard#link-tree/torch/testing/_internal/common_utils.py", line 2382, in wrapper
    method(*args, **kwargs)
  File "/data/users/jcarreiro/fbsource/buck-out/v2/gen/fbcode/f88b3f368c9334db/caffe2/test/__tensorboard__/tensorboard#link-tree/test_tensorboard.py", line 871, in test_bfloat16_tensor_proto
    tensor_proto(
  File "/data/users/jcarreiro/fbsource/buck-out/v2/gen/fbcode/f88b3f368c9334db/caffe2/test/__tensorboard__/tensorboard#link-tree/torch/utils/tensorboard/summary.py", line 400, in tensor_proto
    tensor_proto = TensorProto(**tensor_proto_args)
ValueError: unknown enum label "DT_BFLOAT"

To execute this test, run the following from the base repo dir:
     python test/__tensorboard__/tensorboard#link-tree/test_tensorboard.py -k test_bfloat16_tensor_proto

This message can be suppressed by setting PYTORCH_PRINT_REPRO_ON_FAILURE=0

----------------------------------------------------------------------
```

# After this change

The test passes.

Reviewed By: tanvigupta17

Differential Revision: D48828958

Pull Request resolved: https://github.com/pytorch/pytorch/pull/108351

Approved by: https://github.com/hamzajzmati, https://github.com/XilunWu

**Line 492: ANTIALIAS updated to Resampling.LANCZOS**

Removes the following deprecation warning:

`DeprecationWarning: ANTIALIAS is deprecated and will be removed in Pillow 10 (2023-07-01). `

`Use Resampling.LANCZOS instead.`

---

```
# Prefer the new enum location; fall back for older Pillow versions.
try:
    ANTIALIAS = Image.Resampling.LANCZOS
except AttributeError:
    ANTIALIAS = Image.ANTIALIAS
image = image.resize((scaled_width, scaled_height), ANTIALIAS)
```

`Resampling.LANCZOS` is now used when it is available. If accessing it raises an `AttributeError`, the code falls back to `Image.ANTIALIAS`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/85679

Approved by: https://github.com/albanD

### Description

Small code refactoring to make the code more pythonic by utilizing the Python `with` statement
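As a rough illustration of this kind of refactoring, the sketch below uses an in-memory buffer as a context manager instead of calling `close()` by hand. The helper `encode_payload` is hypothetical and is not taken from the diff; it only shows the pattern.

```python
import io


def encode_payload(payload: bytes) -> bytes:
    """Write payload to an in-memory buffer and return the buffer contents."""
    # The with statement closes the buffer on exit, even if write() raises,
    # so no explicit buffer.close() call is needed.
    with io.BytesIO() as buffer:
        buffer.write(payload)
        encoded = buffer.getvalue()
    return encoded


print(encode_payload(b"example"))
```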

### Issue

Not an issue

### Testing

This is a code refactoring

Pull Request resolved: https://github.com/pytorch/pytorch/pull/82929

Approved by: https://github.com/malfet

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59435

Sometimes we need to compare 10+ digits. Currently TensorBoard only saves float32. Provide an option to save float64.
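A small standard-library sketch of why float32 is not enough for 10+ digits: round-tripping a value through 4 bytes changes it, while 8 bytes preserves it. The helper names and the sample value are illustrative assumptions, not part of the summary writer API.

```python
import struct


def roundtrip_float32(value: float) -> float:
    """Store value as a 4-byte float and read it back."""
    return struct.unpack("<f", struct.pack("<f", value))[0]


def roundtrip_float64(value: float) -> float:
    """Store value as an 8-byte float and read it back."""
    return struct.unpack("<d", struct.pack("<d", value))[0]


metric = 0.123456789012  # hypothetical metric with 12 significant digits
print(f"float32: {roundtrip_float32(metric):.12f}")
print(f"float64: {roundtrip_float64(metric):.12f}")
```

The float32 line typically agrees with the original only in the first seven or so significant digits, which is why two runs that differ in the tenth digit cannot be told apart.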

Reviewed By: yuguo68

Differential Revision: D28856352

fbshipit-source-id: 05d12e6f79b6237b3497b376d6665c9c38e03cf7

Summary:

This PR fixes mypy issues on the current PyTorch main branch. In particular, it replaces occurrences of `np.bool`/`np.float` with `np.bool_`/`np.float64`, respectively:

```
test/test_numpy_interop.py:145: error: Module has no attribute "bool"; maybe "bool_" or "bool8"?  [attr-defined]
test/test_numpy_interop.py:159: error: Module has no attribute "float"; maybe "float_", "cfloat", or "float64"?  [attr-defined]
```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52090

Reviewed By: walterddr

Differential Revision: D26469596

Pulled By: malfet

fbshipit-source-id: e55a5c6da7b252469e05942e0d2588e7f92b88bf

Summary:

Stumbled upon a little gem in the audio conversion for `SummaryWriter.add_audio()`: two Python `for` loops to convert a float array to little-endian int16 samples. On my machine, this took 35 seconds for a 30-second 22.05 kHz excerpt. The same can be done directly in numpy in 1.65 milliseconds. (No offense, I'm glad that the functionality was there!)
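For reference, a vectorized conversion of this kind can be sketched as follows. This is an assumption about the general approach, not the exact code in the PR: the function name `float_to_int16_le_bytes` is hypothetical, input samples are assumed to be floats in [-1, 1], and out-of-range samples are clipped.

```python
import numpy as np


def float_to_int16_le_bytes(samples) -> bytes:
    """Convert float samples in [-1, 1] to little-endian int16 PCM bytes."""
    array = np.asarray(samples, dtype=np.float32)
    # Clip so that out-of-range samples saturate instead of wrapping around.
    array = np.clip(array, -1.0, 1.0)
    # Scale to the int16 range; "<i2" forces little-endian 16-bit integers.
    scaled = (array * np.iinfo(np.int16).max).astype("<i2")
    return scaled.tobytes()


pcm = float_to_int16_le_bytes([0.0, 1.0, -1.0])
print(pcm.hex())
```

The whole array is processed in a few NumPy calls, so no per-sample Python loop is involved.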

Would also be ready to extend this to support stereo waveforms, or should this become a separate PR?

Pull Request resolved: https://github.com/pytorch/pytorch/pull/44201

Reviewed By: J0Nreynolds

Differential Revision: D23831002

Pulled By: edward-io

fbshipit-source-id: 5c8f1ac7823d1ed41b53c4f97ab9a7bac33ea94b

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/40720

Add support for populating domain_discrete field in TensorBoard add_hparams API

Test Plan: Unit test test_hparams_domain_discrete

Reviewed By: edward-io

Differential Revision: D22291347

fbshipit-source-id: 78db9f62661c9fe36cd08d563db0e7021c01428d

Summary: Add support for populating domain_discrete field in TensorBoard add_hparams API

Test Plan: Unit test test_hparams_domain_discrete

Reviewed By: edward-io

Differential Revision: D22227939

fbshipit-source-id: d2f0cd8e5632cbcc578466ff3cd587ee74f847af

Summary:

The root cause of the incorrect rendering is that numbers are treated as strings if the data type is not specified. The data is therefore sorted lexicographically, starting with the first digit.
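The effect is easy to reproduce in plain Python; the sample values below are made up for illustration.

```python
values = ["10", "9", "2", "100"]

# Compared as strings, values are ordered character by character.
as_strings = sorted(values)

# Compared as numbers, values are ordered by magnitude.
as_numbers = sorted(values, key=float)

print(as_strings)  # ['10', '100', '2', '9']
print(as_numbers)  # ['2', '9', '10', '100']
```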

closes https://github.com/pytorch/pytorch/issues/29906

cc orionr sanekmelnikov

Pull Request resolved: https://github.com/pytorch/pytorch/pull/31544

Differential Revision: D21105403

Pulled By: natalialunova

fbshipit-source-id: a676ff5ab94c5bdb653615d43219604e54747e56

Summary:

The function originally comes from 4279f99847/tensorflow/python/ops/summary_op_util.py (L45-L68)

As its comment says:

```
# In the past, the first argument to summary ops was a tag, which allowed
# arbitrary characters. Now we are changing the first argument to be the node
# name. This has a number of advantages (users of summary ops now can
# take advantage of the tf name scope system) but risks breaking existing
# usage, because a much smaller set of characters are allowed in node names.
# This function replaces all illegal characters with _s, and logs a warning.
# It also strips leading slashes from the name.
```

This function is only for compatibility with TF's operator name restrictions, and is therefore no longer valid in pytorch. By removing it, tensorboard summaries can use more characters in the names.
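For context, the behavior described in that comment can be sketched roughly as below. This is a reconstruction from the comment, not a copy of either codebase: the allowed character set in the regular expression is an assumption, and the warning log is omitted.

```python
import re

# Assumed set of legal characters: word characters, "-", "/", and ".".
_INVALID_TAG_CHARACTERS = re.compile(r"[^-/\w\.]")


def clean_tag(name: str) -> str:
    """Replace illegal characters with "_" and strip leading slashes."""
    cleaned = _INVALID_TAG_CHARACTERS.sub("_", name)
    return cleaned.lstrip("/")


print(clean_tag("/loss (train)"))     # loss__train_
print(clean_tag("metric/acc-top.1"))  # metric/acc-top.1
```

With the function removed, a tag such as `loss (train)` is written as-is instead of being rewritten to `loss__train_`.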

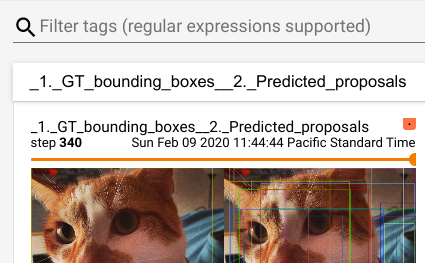

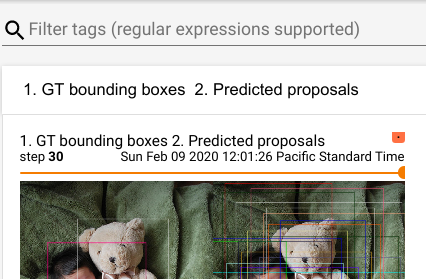

Before:

After:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/33133

Differential Revision: D20089307

Pulled By: ezyang

fbshipit-source-id: 3552646dce1d5fa0bde7470f32d5376e67ec31c6

Summary:

I accidentally added a TF dependency in #20413 by using the `from tensorboard.plugins.mesh.summary import _get_json_config` import.

I'm removing it at the cost of code duplication.

orionr, Please review.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/21066

Reviewed By: natalialunova

Differential Revision: D15538746

Pulled By: orionr

fbshipit-source-id: 8a822719a4a9f5d67f1badb474e3a73cefce507f

Summary:

As a part of https://github.com/pytorch/pytorch/pull/20580 I noticed that we had some unusual variable naming in `summary.py`. This cleans it up and also removes some variables that weren't being used.

I'll wait until we have an `add_custom_scalars` test to land this.

cc lanpa natalialunova

Pull Request resolved: https://github.com/pytorch/pytorch/pull/20861

Differential Revision: D15503420

Pulled By: orionr

fbshipit-source-id: 86d105a346198a1ca543d1c5d297804402ab5a0c

Summary:

I started adding support for the new **[mesh/point cloud](https://github.com/tensorflow/graphics/blob/master/tensorflow_graphics/g3doc/tensorboard.md)** data type introduced to TensorBoard recently.

I created the functions to add the data and created the appropriate summaries.

This new data type however requires a **Merged** summary containing the data for the vertices, colors and faces.

I got stuck at this stage. Maybe someone can help. lanpa?

I converted the example code by Google to PyTorch:

```python
import numpy as np
import trimesh
import torch
from torch.utils.tensorboard import SummaryWriter

sample_mesh = 'https://storage.googleapis.com/tensorflow-graphics/tensorboard/test_data/ShortDance07_a175_00001.ply'
log_dir = 'runs/torch'
batch_size = 1

# Camera and scene configuration.
config_dict = {
    'camera': {'cls': 'PerspectiveCamera', 'fov': 75},
    'lights': [
        {
            'cls': 'AmbientLight',
            'color': '#ffffff',
            'intensity': 0.75,
        }, {
            'cls': 'DirectionalLight',
            'color': '#ffffff',
            'intensity': 0.75,
            'position': [0, -1, 2],
        }],
    'material': {
        'cls': 'MeshStandardMaterial',
        'roughness': 1,
        'metalness': 0
    }
}

# Read the sample PLY file.
mesh = trimesh.load_remote(sample_mesh)
vertices = np.array(mesh.vertices)
# Currently only supports RGB colors.
colors = np.array(mesh.visual.vertex_colors[:, :3])
faces = np.array(mesh.faces)

# Add batch dimension, so our data will be of shape BxNxC.
vertices = np.expand_dims(vertices, 0)
colors = np.expand_dims(colors, 0)
faces = np.expand_dims(faces, 0)

# Convert the NumPy arrays to tensors of the same shape.
vertices_tensor = torch.as_tensor(vertices)
faces_tensor = torch.as_tensor(faces)
colors_tensor = torch.as_tensor(colors)

writer = SummaryWriter(log_dir)
writer.add_mesh('mesh_color_tensor', vertices=vertices_tensor, faces=faces_tensor,
                colors=colors_tensor, config_dict=config_dict)
writer.close()
```

I tried adding only the vertex summary, since the others are supposed to be optional.

I got the following error from TensorBoard and it also didn't display the points:

```
Traceback (most recent call last):
  File "/home/dawars/workspace/pytorch/venv/lib/python3.6/site-packages/werkzeug/serving.py", line 302, in run_wsgi
    execute(self.server.app)
  File "/home/dawars/workspace/pytorch/venv/lib/python3.6/site-packages/werkzeug/serving.py", line 290, in execute
    application_iter = app(environ, start_response)
  File "/home/dawars/workspace/pytorch/venv/lib/python3.6/site-packages/tensorboard/backend/application.py", line 309, in __call__
    return self.data_applications[clean_path](environ, start_response)
  File "/home/dawars/workspace/pytorch/venv/lib/python3.6/site-packages/werkzeug/wrappers/base_request.py", line 235, in application
    resp = f(*args[:-2] + (request,))
  File "/home/dawars/workspace/pytorch/venv/lib/python3.6/site-packages/tensorboard/plugins/mesh/mesh_plugin.py", line 252, in _serve_mesh_metadata
    tensor_events = self._collect_tensor_events(request)
  File "/home/dawars/workspace/pytorch/venv/lib/python3.6/site-packages/tensorboard/plugins/mesh/mesh_plugin.py", line 188, in _collect_tensor_events
    tensors = self._multiplexer.Tensors(run, instance_tag)
  File "/home/dawars/workspace/pytorch/venv/lib/python3.6/site-packages/tensorboard/backend/event_processing/plugin_event_multiplexer.py", line 400, in Tensors
    return accumulator.Tensors(tag)
  File "/home/dawars/workspace/pytorch/venv/lib/python3.6/site-packages/tensorboard/backend/event_processing/plugin_event_accumulator.py", line 437, in Tensors
    return self.tensors_by_tag[tag].Items(_TENSOR_RESERVOIR_KEY)
KeyError: 'mesh_color_tensor_COLOR'
```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/20413

Differential Revision: D15500737

Pulled By: orionr

fbshipit-source-id: 426e8b966037d08c065bce5198fd485fd80a2b67

Summary:

When adding custom scalars like this

```python
from torch.utils.tensorboard import SummaryWriter

with SummaryWriter() as writer:
    writer.add_custom_scalars({'Stuff': {
        'Losses': ['MultiLine', ['loss/(one|two)']],
        'Metrics': ['MultiLine', ['metric/(three|four)']],
    }})
```

This error is raised:

```

TypeError: Parameter to MergeFrom() must be instance of same class: expected tensorboard.SummaryMetadata.PluginData got list.

```

Removing the square brackets around `SummaryMetadata.PluginData(plugin_name='custom_scalars')` should be enough to fix it.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/20580

Differential Revision: D15469700

Pulled By: orionr

fbshipit-source-id: 7ce58034bc2a74ab149fee6419319db68d8abafe

Summary:

This PR adds TensorBoard logging support natively within PyTorch. It is based on the tensorboardX code developed by lanpa and relies on changes inside the tensorflow/tensorboard repo landing at https://github.com/tensorflow/tensorboard/pull/2065.

With these changes users can simply `pip install tensorboard; pip install torch` and then log PyTorch data directly to the TensorBoard protobuf format using

```
import torch
from torch.utils.tensorboard import SummaryWriter

writer = SummaryWriter()
s1 = torch.rand(1)
writer.add_scalar('data/scalar1', s1[0], 0)
writer.close()
```

Design:

- `EventFileWriter` and `RecordWriter` from tensorboardX now live in tensorflow/tensorboard

- `SummaryWriter` and PyTorch-specific conversion from tensors, nn modules, etc. now live in pytorch/pytorch. We also support Caffe2 blobs and nets.

Action items:

- [x] `from torch.utils.tensorboard import SummaryWriter`

- [x] rename functions

- [x] unittests

- [x] move actual writing function to tensorflow/tensorboard in https://github.com/tensorflow/tensorboard/pull/2065

Review:

- Please review for PyTorch standard formatting, code usage, etc.

- Please verify unittest usage is correct and executing in CI

Any significant changes made here will likely be synced back to github.com/lanpa/tensorboardX/ in the future.

cc orionr, ezyang

Pull Request resolved: https://github.com/pytorch/pytorch/pull/16196

Differential Revision: D15062901

Pulled By: orionr

fbshipit-source-id: 3812eb6aa07a2811979c5c7b70810261f9ea169e