Summary:

Fixes https://github.com/pytorch/pytorch/issues/63435

Adds optional tensor arguments to check handling torch function checks. The only one I didn't do this for in the functional file was `multi_head_attention_forward` since that already took care of some optional tensor arguments but not others so it seemed like arguments were specifically chosen

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63967

Reviewed By: albanD

Differential Revision: D30640441

Pulled By: ezyang

fbshipit-source-id: 5ef9554d2fb6c14779f8f45542ab435fb49e5d0f

Summary:

Fixes https://github.com/pytorch/pytorch/issues/11959

Alternative approach to creating a new `CrossEntropyLossWithSoftLabels` class. This PR simply adds support for "soft targets" AKA class probabilities to the existing `CrossEntropyLoss` and `NLLLoss` classes.

Implementation is dumb and simple right now, but future work can add higher performance kernels for this case.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61044

Reviewed By: zou3519

Differential Revision: D29876894

Pulled By: jbschlosser

fbshipit-source-id: 75629abd432284e10d4640173bc1b9be3c52af00

Summary:

There is a very common error when writing docs: One forgets to write a matching `` ` ``, and something like ``:attr:`x`` is rendered in the docs. This PR fixes most (all?) of these errors (and a few others).

I found these running ``grep -r ">[^#<][^<]*\`"`` on the `docs/build/html/generated` folder. The regex finds an HTML tag that does not start with `#` (as python comments in example code may contain backticks) and that contains a backtick in the rendered HTML.

This regex has not given any false positive in the current codebase, so I am inclined to suggest that we should add this check to the CI. Would this be possible / reasonable / easy to do malfet ?

Pull Request resolved: https://github.com/pytorch/pytorch/pull/60474

Reviewed By: mrshenli

Differential Revision: D29309633

Pulled By: albanD

fbshipit-source-id: 9621e0e9f87590cea060dd084fa367442b6bd046

Summary:

Fixes https://github.com/pytorch/pytorch/issues/27655

This PR adds a C++ and Python version of ReflectionPad3d with structured kernels. The implementation uses lambdas extensively to better share code from the backward and forward pass.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59791

Reviewed By: gchanan

Differential Revision: D29242015

Pulled By: jbschlosser

fbshipit-source-id: 18e692d3b49b74082be09f373fc95fb7891e1b56

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56674

`torch.nn.functional.multi_head_attention_forward` supports a long tail of options and variations of the multihead attention computation. Its complexity is mostly due to arbitrating among options, preparing values in multiple ways, and so on - the attention computation itself is a small fraction of the implementation logic, which is relatively simple but can be hard to pick out.

The goal of this PR is to

- make the internal logic of `multi_head_attention_forward` less entangled and more readable, with the attention computation steps easily discernible from their surroundings.

- factor out simple helpers to perform the actual attention steps, with the aim of making them available to other attention-computing contexts.

Note that these changes should leave the signature and output of `multi_head_attention_forward` completely unchanged, so not BC-breaking. Later PRs should present new multihead attention entry points, but deprecating this one is out of scope for now.

Changes are in two parts:

- the implementation of `multi_head_attention_forward` has been extensively resequenced, which makes the rewrite look more total than it actually is. Changes to argument-processing logic are largely confined to a) minor perf tweaks/control flow tightening, b) error message improvements, and c) argument prep changes due to helper function factoring (e.g. merging `key_padding_mask` with `attn_mask` rather than applying them separately)

- factored helper functions are defined just above `multi_head_attention_forward`, with names prefixed with `_`. (A future PR may pair them with corresponding modules, but for now they're private.)

Test Plan: Imported from OSS

Reviewed By: gmagogsfm

Differential Revision: D28344707

Pulled By: bhosmer

fbshipit-source-id: 3bd8beec515182c3c4c339efc3bec79c0865cb9a

Summary:

Fixes https://github.com/pytorch/pytorch/issues/45687

Fix changes the input size check for `InstanceNorm*d` to be more restrictive and correctly reject sizes with only a single spatial element, regardless of batch size, to avoid infinite variance.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56659

Reviewed By: pbelevich

Differential Revision: D27948060

Pulled By: jbschlosser

fbshipit-source-id: 21cfea391a609c0774568b89fd241efea72516bb

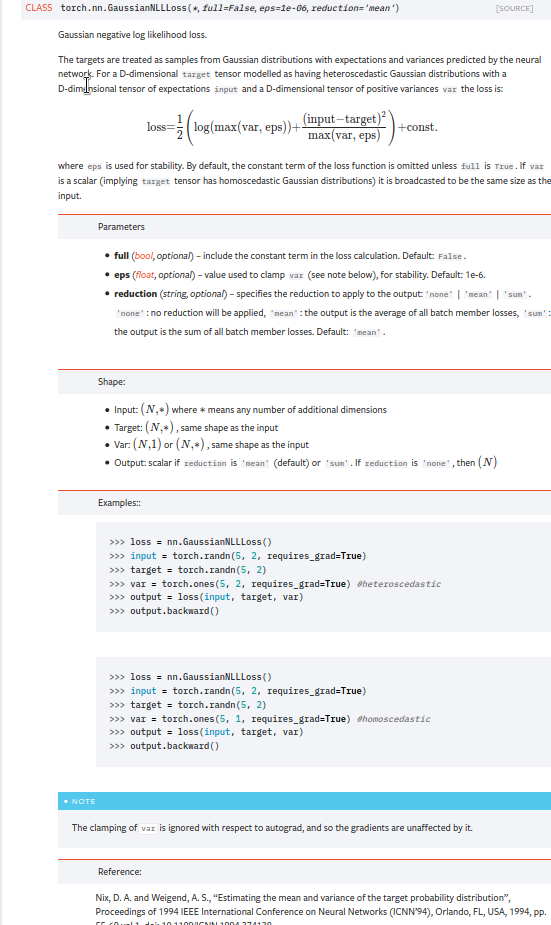

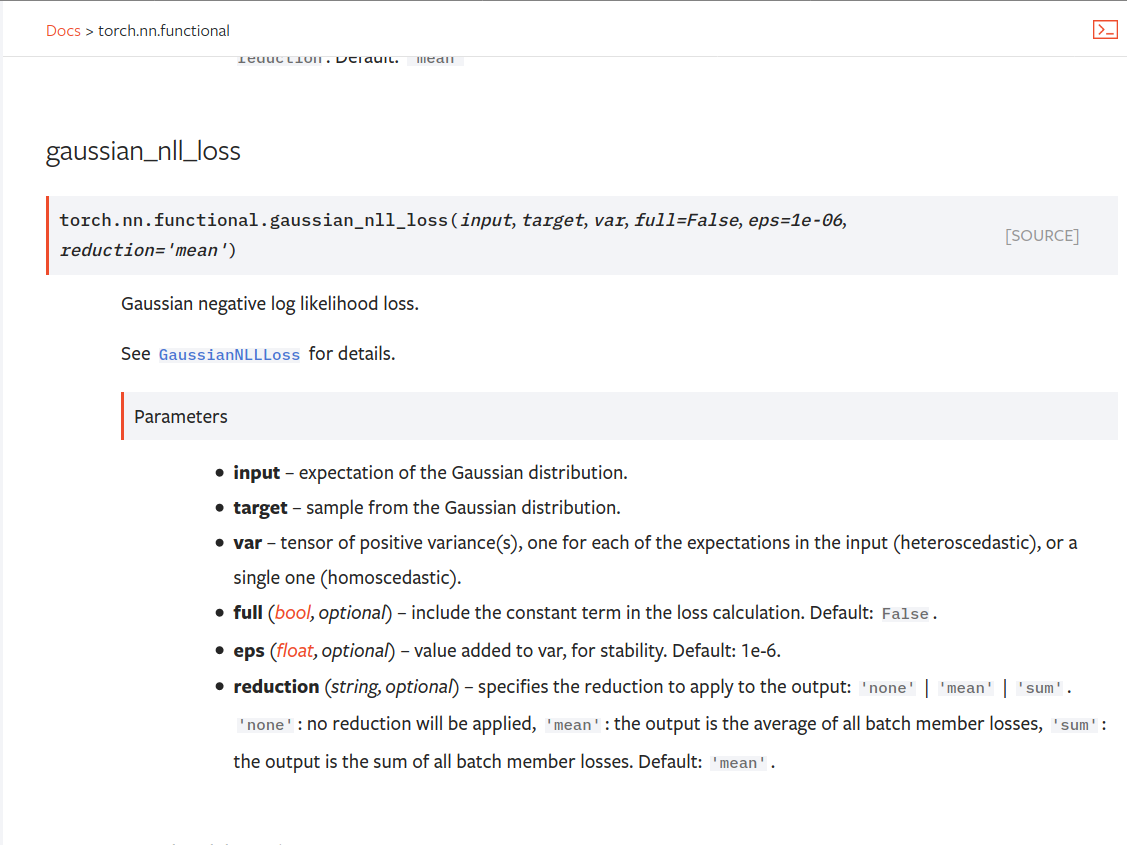

Summary:

Fixes https://github.com/pytorch/pytorch/issues/53964. cc albanD almson

## Major changes:

- Overhauled the actual loss calculation so that the shapes are now correct (in functional.py)

- added the missing doc in nn.functional.rst

## Minor changes (in functional.py):

- I removed the previous check on whether input and target were the same shape. This is to allow for broadcasting, say when you have 10 predictions that all have the same target.

- I added some comments to explain each shape check in detail. Let me know if these should be shortened/cut.

Screenshots of updated docs attached.

Let me know what you think, thanks!

## Edit: Description of change of behaviour (affecting BC):

The backwards-compatibility is only affected for the `reduction='none'` mode. This was the source of the bug. For tensors with size (N, D), the old returned loss had size (N), as incorrect summation was happening. It will now have size (N, D) as expected.

### Example

Define input tensors, all with size (2, 3).

`input = torch.tensor([[0., 1., 3.], [2., 4., 0.]], requires_grad=True)`

`target = torch.tensor([[1., 4., 2.], [-1., 2., 3.]])`

`var = 2*torch.ones(size=(2, 3), requires_grad=True)`

Initialise loss with reduction mode 'none'. We expect the returned loss to have the same size as the input tensors, (2, 3).

`loss = torch.nn.GaussianNLLLoss(reduction='none')`

Old behaviour:

`print(loss(input, target, var)) `

`# Gives tensor([3.7897, 6.5397], grad_fn=<MulBackward0>. This has size (2).`

New behaviour:

`print(loss(input, target, var)) `

`# Gives tensor([[0.5966, 2.5966, 0.5966], [2.5966, 1.3466, 2.5966]], grad_fn=<MulBackward0>)`

`# This has the expected size, (2, 3).`

To recover the old behaviour, sum along all dimensions except for the 0th:

`print(loss(input, target, var).sum(dim=1))`

`# Gives tensor([3.7897, 6.5397], grad_fn=<SumBackward1>.`

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56469

Reviewed By: jbschlosser, agolynski

Differential Revision: D27894170

Pulled By: albanD

fbshipit-source-id: 197890189c97c22109491c47f469336b5b03a23f

Summary:

Match updated `Embedding` docs from https://github.com/pytorch/pytorch/pull/54026 as closely as possible. Additionally, update the C++ side `Embedding` docs, since those were missed in the previous PR.

There are 6 (!) places for docs:

1. Python module form in `sparse.py` - includes an additional line about newly constructed `Embedding`s / `EmbeddingBag`s

2. Python `from_pretrained()` in `sparse.py` (refers back to module docs)

3. Python functional form in `functional.py`

4. C++ module options - includes an additional line about newly constructed `Embedding`s / `EmbeddingBag`s

5. C++ `from_pretrained()` options

6. C++ functional options

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56065

Reviewed By: malfet

Differential Revision: D27908383

Pulled By: jbschlosser

fbshipit-source-id: c5891fed1c9d33b4b8cd63500a14c1a77d92cc78

Summary:

As this diff shows, currently there are a couple hundred instances of raw `noqa` in the codebase, which just ignore all errors on a given line. That isn't great, so this PR changes all existing instances of that antipattern to qualify the `noqa` with respect to a specific error code, and adds a lint to prevent more of this from happening in the future.

Interestingly, some of the examples the `noqa` lint catches are genuine attempts to qualify the `noqa` with a specific error code, such as these two:

```

test/jit/test_misc.py:27: print(f"{hello + ' ' + test}, I'm a {test}") # noqa E999

test/jit/test_misc.py:28: print(f"format blank") # noqa F541

```

However, those are still wrong because they are [missing a colon](https://flake8.pycqa.org/en/3.9.1/user/violations.html#in-line-ignoring-errors), which actually causes the error code to be completely ignored:

- If you change them to anything else, the warnings will still be suppressed.

- If you add the necessary colons then it is revealed that `E261` was also being suppressed, unintentionally:

```

test/jit/test_misc.py:27:57: E261 at least two spaces before inline comment

test/jit/test_misc.py:28:35: E261 at least two spaces before inline comment

```

I did try using [flake8-noqa](https://pypi.org/project/flake8-noqa/) instead of a custom `git grep` lint, but it didn't seem to work. This PR is definitely missing some of the functionality that flake8-noqa is supposed to provide, though, so if someone can figure out how to use it, we should do that instead.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56272

Test Plan:

CI should pass on the tip of this PR, and we know that the lint works because the following CI run (before this PR was finished) failed:

- https://github.com/pytorch/pytorch/runs/2365189927

Reviewed By: janeyx99

Differential Revision: D27830127

Pulled By: samestep

fbshipit-source-id: d6dcf4f945ebd18cd76c46a07f3b408296864fcb

Summary:

This PR adds a `padding_idx` parameter to `nn.EmbeddingBag` and `nn.functional.embedding_bag`. As with `nn.Embedding`'s `padding_idx` argument, if an embedding's index is equal to `padding_idx` it is ignored, so it is not included in the reduction.

This PR does not add support for `padding_idx` for quantized or ONNX `EmbeddingBag` for opset10/11 (opset9 is supported). In these cases, an error is thrown if `padding_idx` is provided.

Fixes https://github.com/pytorch/pytorch/issues/3194

Pull Request resolved: https://github.com/pytorch/pytorch/pull/49237

Reviewed By: walterddr, VitalyFedyunin

Differential Revision: D26948258

Pulled By: jbschlosser

fbshipit-source-id: 3ca672f7e768941f3261ab405fc7597c97ce3dfc

Summary:

* Lowering NLLLoss/CrossEntropyLoss to ATen dispatch

* This allows the MLC device to override these ops

* Reduce code duplication between the Python and C++ APIs.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53789

Reviewed By: ailzhang

Differential Revision: D27345793

Pulled By: albanD

fbshipit-source-id: 99c0d617ed5e7ee8f27f7a495a25ab4158d9aad6

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/45667

First part of #3867 (Pooling operators still to do)

This adds a `padding='same'` mode to the interface of `conv{n}d`and `nn.Conv{n}d`. This should match the behaviour of `tensorflow`. I couldn't find it explicitly documented but through experimentation I found `tensorflow` returns the shape `ceil(len/stride)` and always adds any extra asymmetric padding onto the right side of the input.

Since the `native_functions.yaml` schema doesn't seem to support strings or enums, I've moved the function interface into python and it now dispatches between the numerically padded `conv{n}d` and the `_conv{n}d_same` variant. Underscores because I couldn't see any way to avoid exporting a function into the `torch` namespace.

A note on asymmetric padding. The total padding required can be odd if both the kernel-length is even and the dilation is odd. mkldnn has native support for asymmetric padding, so there is no overhead there, but for other backends I resort to padding the input tensor by 1 on the right hand side to make the remaining padding symmetrical. In these cases, I use `TORCH_WARN_ONCE` to notify the user of the performance implications.

Test Plan: Imported from OSS

Reviewed By: ejguan

Differential Revision: D27170744

Pulled By: jbschlosser

fbshipit-source-id: b3d8a0380e0787ae781f2e5d8ee365a7bfd49f22

Summary:

I edited the documentation for `nn.SiLU` and `F.silu` to:

- Explain that SiLU is also known as swish and that it stands for "Sigmoid Linear Unit."

- Ensure that "SiLU" is correctly capitalized.

I believe these changes will help users find the function they're looking for by adding relevant keywords to the docs.

Fixes: N/A

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53239

Reviewed By: jbschlosser

Differential Revision: D26816998

Pulled By: albanD

fbshipit-source-id: b4e9976e6b7e88686e3fa7061c0e9b693bd6d198

Summary:

-Lower Relu6 to ATen

-Change Python and C++ to reflect change

-adds an entry in native_functions.yaml for that new function

-this is needed as we would like to intercept ReLU6 at a higher level with an XLA-approach codegen.

-Should pass functional C++ tests pass. But please let me know if more tests are required.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52723

Reviewed By: ailzhang

Differential Revision: D26641414

Pulled By: albanD

fbshipit-source-id: dacfc70a236c4313f95901524f5f021503f6a60f