We have known for a while that we should in principle support SymBool as a separate concept from SymInt and SymFloat (in particular, every distinct numeric type should get its own API). However, recent work with unbacked SymInts in, e.g., https://github.com/pytorch/pytorch/pull/90985 has made this a priority to implement. The essential problem is that our logic for computing the contiguity of tensors branches on the passed-in input sizes, and this causes us to require guards when constructing tensors from unbacked SymInts. Morally, this should not be a big deal, because we only really care about the regular (non-channels-last) contiguity of the tensor, which should be guaranteed since most people aren't calling `empty_strided` on the tensor. However, because we store a bool (not a SymBool, which did not exist prior to this PR) on TensorImpl, we are forced to *immediately* compute these values, even if the value ends up not being used at all. In particular, even when a user allocates a contiguous tensor, we still must compute channels-last contiguity (as some contiguous tensors are also channels-last contiguous, but others are not).

This PR implements SymBool, and makes TensorImpl use SymBool to store the contiguity information in ExtraMeta. There are a number of knock-on effects, which I discuss below.

* I introduce a new C++ type SymBool, analogous to SymInt and SymFloat. This type supports logical and, logical or, and logical negation. I support the bitwise operators on this class (but not the conventional logical operators) to make it clear that logical operations on SymBool are NOT short-circuiting. I also, for now, do NOT support implicit conversion of SymBool to bool (which would create a guard). This does not matter too much in practice, as in this PR I did not modify the equality operations (e.g., `==` on SymInt) to return SymBool, so all preexisting implicit guards did not need to be changed. I also introduced symbolic comparison functions `sym_eq`, etc. on SymInt to make it possible to create SymBool. The current implementation of comparison functions makes it unfortunately easy to accidentally introduce guards when you do not mean to (as both `s0 == s1` and `s0.sym_eq(s1)` are valid spellings of the equality operation); in the short term, I intend to prevent excess guarding in this situation by unit testing; in the long term, making the equality operators return SymBool is probably the correct fix.

* ~~I modify TensorImpl to store SymBool for the `is_contiguous` fields and friends on `ExtraMeta`. In practice, this essentially meant reverting most of the changes from https://github.com/pytorch/pytorch/pull/85936 . In particular, the fields on ExtraMeta are no longer strongly typed; at the time I was particularly concerned about the giant lambda I was using as the setter getting a desynchronized argument order, but now that I have individual setters for each field the only "big list" of boolean arguments is in the constructor of ExtraMeta, which seems like an acceptable risk. The semantics of TensorImpl are now that we guard only when you actually attempt to access the contiguity of the tensor via, e.g., `is_contiguous`. By and large, the contiguity calculation in the implementations now needs to be duplicated (as the boolean version can short circuit, but the SymBool version cannot); you should carefully review the duplicate new implementations. I typically use the `identity` template to disambiguate which version of the function I need, and rely on overloading to allow for implementation sharing. The changes to the `compute_` functions are particularly interesting; for most of the functions, I preserved their original non-symbolic implementation, and then introduced a new symbolic implementation that is branch-less (making use of our new SymBool operations). However, `compute_non_overlapping_and_dense` is special, see next bullet.~~ This appears to cause performance problems, so I am leaving this to an update PR.

* (Update: the Python side pieces for this are still in this PR, but they are not wired up until later PRs.) While the contiguity calculations are relatively easy to write in a branch-free way, `compute_non_overlapping_and_dense` is not: it involves a sort on the strides. While in principle we can still make it go through by using a data-oblivious sorting network, this seems like too much complication for a field that is likely never used (because typically, it will be obvious that a tensor is non-overlapping and dense, because the tensor is contiguous). So we take a different approach: instead of trying to trace through the computation of non-overlapping and dense, we introduce a new opaque operator IsNonOverlappingAndDenseIndicator which represents all of the compute that would have been done here. For technical reasons (Sympy does not easily allow defining custom functions that return booleans), this function returns an integer 0 if `is_non_overlapping_and_dense` would have returned `False`, and an integer 1 otherwise. The function itself only knows how to evaluate itself if all of its arguments are integers; otherwise it is left unevaluated. This means we can always guard on it (as `size_hint` will always be able to evaluate through it), but otherwise its insides are left a black box. We typically do NOT expect this custom function to show up in actual boolean expressions, because we will typically shortcut it due to the tensor being contiguous. It's possible we should apply this treatment to all of the other `compute_` operations; more investigation is necessary. As a technical note, because this operator takes a pair of lists of SymInts, we need to support converting `ArrayRef<SymNode>` to Python, and I also unpack the pair of lists into a single list because I don't know if Sympy operations can actually validly take lists of Sympy expressions as inputs. See for example `_make_node_sizes_strides`.

* On the Python side, we also introduce a SymBool class, and update SymNode to track bool as a valid pytype. There is some subtlety here: bool is a subclass of int, so one has to be careful about `isinstance` checks (in fact, in most cases I replaced `isinstance(x, int)` with `type(x) is int` for expressly this reason). Additionally, unlike C++, I do NOT define bitwise inverse on SymBool, because it does not do the correct thing when run on booleans, e.g., `~True` is `-2`. (For that matter, they don't do the right thing in C++ either, but at least in principle the compiler can warn you about it with `-Wbool-operation`, and so the rule is simple in C++; only use logical operations if the types are statically known to be SymBool.) Alas, logical negation is not overridable, so we have to introduce `sym_not`, which must be used in place of `not` whenever a SymBool can turn up. To avoid confusion with `__not__`, which may imply that `operator.__not__` might be acceptable to use (it isn't), our magic method is called `__sym_not__`. The other bitwise operators `&` and `|` do the right thing with booleans and are acceptable to use.

* There is some annoyance working with booleans in Sympy. Unlike int and float, booleans live in their own algebra and they support fewer operations than regular numbers. In particular, `sympy.expand` does not work on them. To get around this, I introduce `safe_expand`, which only calls expand on operations which are known to be expandable.
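The Python-side pitfalls described in the SymBool bullet above can be reproduced with plain Python. The following is a minimal sketch only: `ToySymBool` and this `sym_not` are hypothetical stand-ins written for illustration, not the classes added by this PR, and raising in `__bool__` is a choice made for the toy (a real implementation might guard there instead).

```python
class ToySymBool:
    """Hypothetical symbolic boolean that only records an expression string."""

    def __init__(self, expr):
        self.expr = expr

    def __and__(self, other):
        return ToySymBool(f"({self.expr} & {_expr(other)})")

    def __or__(self, other):
        return ToySymBool(f"({self.expr} | {_expr(other)})")

    def __sym_not__(self):
        return ToySymBool(f"~{self.expr}")

    def __bool__(self):
        # `not x` always goes through __bool__, so it cannot build an expression.
        raise TypeError("ToySymBool has no concrete truth value")


def _expr(x):
    return x.expr if isinstance(x, ToySymBool) else str(x)


def sym_not(x):
    """Negate either a plain bool or a ToySymBool."""
    if hasattr(x, "__sym_not__"):
        return x.__sym_not__()
    return not x


# bool is a subclass of int, so isinstance cannot tell them apart.
assert isinstance(True, int)
assert type(True) is not int

# Bitwise inverse treats True as the integer 1.
assert ~True == -2

# & and | behave as expected on plain bools.
assert (True & False) is False and (True | False) is True

a = ToySymBool("a")
print(sym_not(a).expr)        # ~a
print((a & sym_not(a)).expr)  # (a & ~a)
print(sym_not(True))          # False
```

Because `not a` would call `__bool__`, the free function is the only spelling that works for both plain and symbolic values in this toy.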

TODO: this PR appears to greatly regress performance of symbolic reasoning. In particular, `python test/functorch/test_aotdispatch.py -k max_pool2d` performs really poorly with these changes. Need to investigate.
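For the `IsNonOverlappingAndDenseIndicator` bullet above, here is a rough pure-Python sketch of what such an indicator computes when every argument is a concrete integer. It is a simplified illustration, not the code in this PR: the function name, the `None` return for non-integer arguments, and the exact handling of small dimensions are assumptions made for the example. Sizes and strides are passed flattened into one argument list, as the text describes.

```python
def is_non_overlapping_and_dense_indicator(*args):
    """Return 1 if the layout is non-overlapping and dense, else 0.

    `args` holds the sizes followed by the strides, flattened into one list.
    """
    if len(args) % 2 != 0:
        raise ValueError("expected sizes followed by an equal number of strides")
    if not all(type(a) is int for a in args):
        # The real operator is described as staying unevaluated in this case;
        # this toy signals it by returning None.
        return None
    ndim = len(args) // 2
    sizes, strides = args[:ndim], args[ndim:]
    # Dimensions with fewer than two elements place no constraint on strides.
    dims = sorted((st, sz) for sz, st in zip(sizes, strides) if sz >= 2)
    expected_stride = 1
    for stride, size in dims:
        if stride != expected_stride:
            return 0
        expected_stride *= size
    return 1


print(is_non_overlapping_and_dense_indicator(2, 3, 3, 1))  # contiguous 2x3
print(is_non_overlapping_and_dense_indicator(2, 3, 1, 2))  # transposed layout
print(is_non_overlapping_and_dense_indicator(2, 3, 6, 2))  # layout with gaps
```

The sort on strides is the step that is hard to express without branching, which is why the text treats the whole computation as a black box.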

Signed-off-by: Edward Z. Yang <ezyang@meta.com>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/92149

Approved by: https://github.com/albanD, https://github.com/Skylion007

This refactor was prompted by challenges handling mixed int/float operations in C++. A previous version of this patch (https://github.com/pytorch/pytorch/pull/87722/) added overloads for each permutation of int/float and was unwieldy. This PR takes a different approach.

The general outline of the patch is to combine the C++ types SymIntNode and SymFloatNode into a single type, SymNode. This is type erased; we no longer know statically in C++ whether we have an int or a float, and have to test it with the is_int()/is_float() virtual methods. This has a number of knock-on effects.

- We no longer have C++ classes to bind to Python. Instead, we take an entirely new approach to our Python API, where we have a SymInt/SymFloat class defined entirely in Python, which holds a SymNode (which corresponds to the C++ SymNode). However, SymNode is not pybind11-bound; instead, it lives as-is in Python, and is wrapped into C++ SymNode using PythonSymNode when it goes into C++. This implies a userland rename.

  In principle, it is also possible for the canonical implementation of SymNode to be written in C++, and then bound to Python with pybind11 (we have this code, although it is commented out). However, I did not implement this as we currently have no C++ implementations of SymNode.

  Because we do return SymInt/SymFloat from C++ bindings, the C++ binding code needs to know how to find these classes. Currently, this is done just by manually importing torch and getting the attributes.

- Because SymInt/SymFloat are easy Python wrappers, `__sym_dispatch__` now takes SymInt/SymFloat, rather than SymNode, bringing it in line with how `__torch_dispatch__` works.

Some miscellaneous improvements:

- SymInt now has a constructor that takes SymNode. Note that this constructor is ambiguous if you pass in a subclass of SymNode, so an explicit downcast is necessary. This means toSymFloat/toSymInt are no more. This is a mild optimization as it means rvalue reference works automatically.

- We uniformly use the caster for c10::SymInt/SymFloat, rather than going the long way via the SymIntNode/SymFloatNode.

- Removed some unnecessary toSymInt/toSymFloat calls in normalize_* functions, pretty sure this doesn't do anything.

- guard_int is now a free function, since to guard on an int you cannot assume the method exists. A function can handle both int and SymInt inputs.

- We clean up the magic method definition code for SymInt/SymFloat/SymNode. ONLY the user classes (SymInt/SymFloat) get magic methods; SymNode gets plain methods; this is to help avoid confusion between the two types.
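To make the split between the user class and the node concrete, here is a small sketch of the layering described above. The names `ToySymNode` and `ToySymInt` are hypothetical and the arithmetic is int-only for brevity; only the shape (plain methods on the node, magic methods on the wrapper, `guard_int` as a free function) follows the text.

```python
class ToySymNode:
    """Hypothetical type-erased node: plain methods only, no magic methods."""

    def __init__(self, value, pytype):
        self.value = value
        self.pytype = pytype

    def is_int(self):
        return self.pytype is int

    def is_float(self):
        return self.pytype is float

    def add(self, other):
        result = self.value + other.value
        return ToySymNode(result, type(result))

    def guard_int(self):
        return int(self.value)


class ToySymInt:
    """User-facing wrapper: holds a node and owns the magic methods."""

    def __init__(self, node):
        self.node = node

    def __add__(self, other):
        if isinstance(other, ToySymInt):
            other_node = other.node
        else:
            other_node = ToySymNode(other, type(other))
        return ToySymInt(self.node.add(other_node))


def guard_int(x):
    """Free function: works for plain ints as well as ToySymInt."""
    if isinstance(x, ToySymInt):
        return x.node.guard_int()
    return x


s = ToySymInt(ToySymNode(4, int))
print(guard_int(s + 1))
print(guard_int(3))
```

A method-only `guard_int` would fail on the plain `3`, which is the motivation the text gives for making it a free function.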

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

cc @jansel @mlazos @soumith @voznesenskym @yanboliang @penguinwu @anijain2305

Pull Request resolved: https://github.com/pytorch/pytorch/pull/87817

Approved by: https://github.com/albanD, https://github.com/anjali411

This is a new version of #15648 based on the latest master branch.

Unlike the previous PR where I fixed a lot of the doctests in addition to integrating xdoctest, I'm going to reduce the scope here. I'm simply going to integrate xdoctest, and then I'm going to mark all of the failing tests as "SKIP". This will let xdoctest run on the dashboards, provide some value, and still let the dashboards pass. I'll leave fixing the doctests themselves to another PR.

In my initial commit, I do the bare minimum to get something running with failing dashboards. The few tests that I marked as skip are causing segfaults. Running xdoctest results in 293 failed and 201 passed tests. The next commits will disable those failing tests. (Unfortunately, I don't have a tool that will insert the `#xdoctest: +SKIP` directive over every failing test, so I'm going to do this mostly manually.)
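As an illustration of the manual step, the sketch below inserts a skip directive ahead of the first example line of a docstring. The helper `add_skip_directive` is hypothetical and was not part of this PR; it assumes the directive is written on its own `>>>` line so that it applies to the rest of that doctest.

```python
def add_skip_directive(docstring):
    """Insert a SKIP directive before the first `>>>` line of a docstring."""
    lines = docstring.splitlines(keepends=True)
    for i, line in enumerate(lines):
        stripped = line.lstrip()
        if stripped.startswith(">>>"):
            if "xdoctest: +SKIP" in stripped:
                return docstring  # already marked
            indent = line[: len(line) - len(stripped)]
            lines.insert(i, f"{indent}>>> # xdoctest: +SKIP\n")
            return "".join(lines)
    return docstring  # no doctest found


doc = """Example:
    >>> import torch
    >>> torch.ones(2)
"""
print(add_skip_directive(doc))
```

The helper is idempotent, so running it twice over the same docstring does not stack directives.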

Fixes https://github.com/pytorch/pytorch/issues/71105

@ezyang

Pull Request resolved: https://github.com/pytorch/pytorch/pull/82797

Approved by: https://github.com/ezyang

Done via

```
git grep -l 'SymbolicIntNode' | xargs sed -i 's/SymbolicIntNode/SymIntNodeImpl/g'
```

Reasoning for the change:

* Sym is shorter than Symbolic, and consistent with SymInt

* You usually will deal in shared_ptr<...>, so we're going to reserve the shorter name (SymIntNode) for the shared pointer.

But I don't want to update the Python name, so afterwards I ran

```
git grep -l _C.SymIntNodeImpl | xargs sed -i 's/_C.SymIntNodeImpl/_C.SymIntNode/'
```

and manually fixed up the binding code.
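The effect of the two passes can be checked on a string. This is a sketch only: the function `rename` is hypothetical, and `str.replace` substitutes every occurrence, whereas the second `sed` command above (no `g` flag) replaces only the first match on each line.

```python
def rename(source):
    # Pass 1: the C++ class takes the longer "Impl" name.
    source = source.replace("SymbolicIntNode", "SymIntNodeImpl")
    # Pass 2: the Python binding keeps the short name.
    source = source.replace("_C.SymIntNodeImpl", "_C.SymIntNode")
    return source


print(rename("class SymbolicIntNode;"))
print(rename("torch._C.SymbolicIntNode"))
```

The order matters: the second pass only finds `_C.SymIntNodeImpl` because the first pass has already produced it.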

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/82350

Approved by: https://github.com/Krovatkin

This PR adds support for `SymInt`s in python. Namely,

* `THPVariable_size` now returns `sym_sizes()`

* python arg parser is modified to parse PyObjects into ints and `SymbolicIntNode`s

* pybind11 bindings for `SymbolicIntNode` are added, so size expressions can be traced

* a large number of tests added to demonstrate how to implement python symints.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/78135

Approved by: https://github.com/ezyang

Summary:

Following https://github.com/pytorch/rfcs/blob/master/RFC-0019-Extending-PyTorch-Quantization-to-Custom-Backends.md, we implemented the backend configuration for the fbgemm/qnnpack backend. It currently lives under the fx folder, but we'd like to use it for all workflows, including eager, fx graph, and define-by-run quantization. This PR moves it to the torch.ao.quantization namespace so that it can be shared by different workflows.

Also moves some utility functions specific to fx to fx/backend_config_utils.py; some files are kept in the fx folder (quantize_handler.py and fuse_handler.py).

Test Plan:

python test/test_quantization.py TestQuantizeFx

python test/test_quantization.py TestQuantizeFxOps

python test/test_quantization.py TestQuantizeFxModels

python test/test_quantization.py TestAOMigrationQuantization

python test/test_quantization.py TestAOMigrationQuantizationFx

Pull Request resolved: https://github.com/pytorch/pytorch/pull/75823

Approved by: https://github.com/vkuzo

Summary:

Working towards https://docs.google.com/document/d/10yx2-4gs0gTMOimVS403MnoAWkqitS8TUHX73PN8EjE/edit?pli=1#

This PR:

- Ensure that all the submodules are listed in an rst file (which ensures they are considered by the coverage tool)

- Remove some long-deprecated code that just errors out on import

- Remove the allow list altogether to ensure nothing gets added back there

Pull Request resolved: https://github.com/pytorch/pytorch/pull/73983

Reviewed By: anjali411

Differential Revision: D34787908

Pulled By: albanD

fbshipit-source-id: 163ce61e133b12b2f2e1cbe374f979e3d6858db7

(cherry picked from commit c9edfead7a01dc45bfc24eaf7220d2a84ab1f62e)

PR #72405 added four new types to the public python API:

`torch.ComplexFloatTensor`, `torch.ComplexDoubleTensor`,

`torch.cuda.ComplexFloatTensor` and `torch.cuda.ComplexDoubleTensor`.

I believe this was unintentional and a clarifying comment as to the

purpose of `all_declared_types` is needed to avoid this in future.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/73370

Summary:

This pull request introduces `SoftplusTransform` to `torch.distributions.transforms`. `SoftplusTransform` transforms via the mapping `Softplus(x) = log(1 + exp(x))`. Note that the transform is different to [`torch.nn.Softplus`](https://pytorch.org/docs/stable/generated/torch.nn.Softplus.html#torch.nn.Softplus), as that has additional `beta` and `threshold` parameters. Inverse and `log_abs_det_jacobian` for a more complex `SoftplusTransform` can be added in the future.
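For reference, the mapping and the two quantities mentioned above can be written out for scalars with the standard library. This is an illustrative sketch of the math, not the implementation added to `torch.distributions.transforms`; the function names are chosen for the example.

```python
import math


def softplus(x):
    # Numerically stable log(1 + exp(x)).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def softplus_inverse(y):
    # Solve y = log(1 + exp(x)) for x; requires y > 0.
    return math.log(math.expm1(y))


def log_abs_det_jacobian(x):
    # d/dx softplus(x) = sigmoid(x), and log(sigmoid(x)) = -softplus(-x).
    return -softplus(-x)


x = 1.3
y = softplus(x)
print(y, softplus_inverse(y), log_abs_det_jacobian(x))
```

Writing the log-Jacobian as `-softplus(-x)` avoids taking the log of a sigmoid that has underflowed to zero for very negative `x`.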

vitkl fritzo

Addresses the issue discussed here: [numpyro issue 855](https://github.com/pyro-ppl/numpyro/issues/855)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52300

Reviewed By: albanD, ejguan

Differential Revision: D34082655

Pulled By: neerajprad

fbshipit-source-id: 6114e74ee5d73c1527191bed612a142d691e2094

(cherry picked from commit a181a3a9e53a34214a503d38760ad7778d08a680)

Summary:

Fixes pytorch/pytorch.github.io#929

The pytorch doc team would like to move to only major.minor documentation at https://pytorch.org/docs/versions.html, not major.minor.patch. This has been done in the CI scripts, but the generated documentation still has the patch version. Remove it when building RELEASE documentation. This allows simplifying the logic, using `'.'.join(torch_version.split('.')[:2])` since we no longer care about trimming off the HASH: it automatically gets removed.
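A quick check of the expression quoted above, wrapped in a helper whose name (`major_minor`) and sample version strings are made up for the example:

```python
def major_minor(torch_version):
    # Keep only the first two dot-separated components.
    return ".".join(torch_version.split(".")[:2])


print(major_minor("1.11.0a0+gitabc1234"))  # 1.11
print(major_minor("1.10.2"))               # 1.10
```

Any git hash lives in the third component or later, so it is dropped without special handling.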

holly1238, brianjo

Pull Request resolved: https://github.com/pytorch/pytorch/pull/72706

Reviewed By: samdow

Differential Revision: D34215815

Pulled By: albanD

fbshipit-source-id: 8437036cc6636674d9ab8b1666f37b561d0527e1

(cherry picked from commit d8caf988f958656f357a497372a782ff69829f9e)

Summary:

This PR adds a transform that uses the cumulative distribution function of a given probability distribution.

For example, the following code constructs a simple Gaussian copula.

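```python
import torch
from torch.distributions import (
    LKJCholesky,
    MultivariateNormal,
    Normal,
    TransformedDistribution,
)
from torch.distributions.transforms import CumulativeDistributionTransform

# Construct a Gaussian copula from a multivariate normal.
base_dist = MultivariateNormal(
    loc=torch.zeros(2),
    scale_tril=LKJCholesky(2).sample(),
)
transform = CumulativeDistributionTransform(Normal(0, 1))
copula = TransformedDistribution(base_dist, [transform])
```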

The following snippet creates a "wrapped" Gaussian copula for correlated positive variables with Weibull marginals.

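```python
from torch.distributions import Normal, TransformedDistribution, Weibull
from torch.distributions.transforms import CumulativeDistributionTransform

# Reuses `base_dist` from the snippet above.
transforms = [
    CumulativeDistributionTransform(Normal(0, 1)),
    CumulativeDistributionTransform(Weibull(4, 2)).inv,
]
wrapped_copula = TransformedDistribution(base_dist, transforms)
```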
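To see what the second chain of transforms does to a single coordinate, the sketch below repeats it with scalar math from the standard library. It assumes `Weibull(4, 2)` means scale 4 and concentration 2; the helper names are invented for the example and nothing here calls into `torch`.

```python
import math


def normal_cdf(x):
    # Standard normal CDF: maps the real line into (0, 1).
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def weibull_icdf(u, scale, concentration):
    # Inverse of F(x) = 1 - exp(-(x / scale) ** concentration).
    return scale * (-math.log1p(-u)) ** (1.0 / concentration)


def wrapped_transform(z, scale=4.0, concentration=2.0):
    # Normal CDF first, then the inverse Weibull CDF.
    u = normal_cdf(z)
    return weibull_icdf(u, scale, concentration)


for z in (-1.0, 0.0, 1.0):
    print(z, wrapped_transform(z))
```

The first step produces uniform marginals and the second maps them onto positive values with Weibull marginals, while the correlation comes from the multivariate normal base distribution.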

cc fritzo

Pull Request resolved: https://github.com/pytorch/pytorch/pull/72495

Reviewed By: ejguan

Differential Revision: D34085919

Pulled By: albanD

fbshipit-source-id: 7917391519a96b0d9b54c52db65d1932f961d070

(cherry picked from commit 572196146ede48a279828071941f6eeb8fc98a56)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/72499

Pull Request resolved: https://github.com/pytorch/benchmark/pull/740

Move fx2trt out of tree to reduce bloat in PyTorch core.

It's the first and major step. Next, we will move acc_tracer out of the tree and rearrange some fx passes.

Reviewed By: suo

Differential Revision: D34065866

fbshipit-source-id: c72b7ad752d0706abd9a63caeef48430e85ec56d

(cherry picked from commit 91647adbcae9cae7c55aa46f4279d42b35bc723e)

Summary:

**Summary:** This commit adds the `torch.nn.qat.dynamic.modules.Linear`

module, the dynamic counterpart to `torch.nn.qat.modules.Linear`.

Functionally these are very similar, except the dynamic version

expects a memoryless observer and is converted into a dynamically

quantized module before inference.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/67325

Test Plan:

`python3 test/test_quantization.py TestQuantizationAwareTraining.test_dynamic_qat_linear`

**Reviewers:** Charles David Hernandez, Jerry Zhang

**Subscribers:** Charles David Hernandez, Supriya Rao, Yining Lu

**Tasks:** 99696812

**Tags:** pytorch

Reviewed By: malfet, jerryzh168

Differential Revision: D32178739

Pulled By: andrewor14

fbshipit-source-id: 5051bdd7e06071a011e4e7d9cc7769db8d38fd73

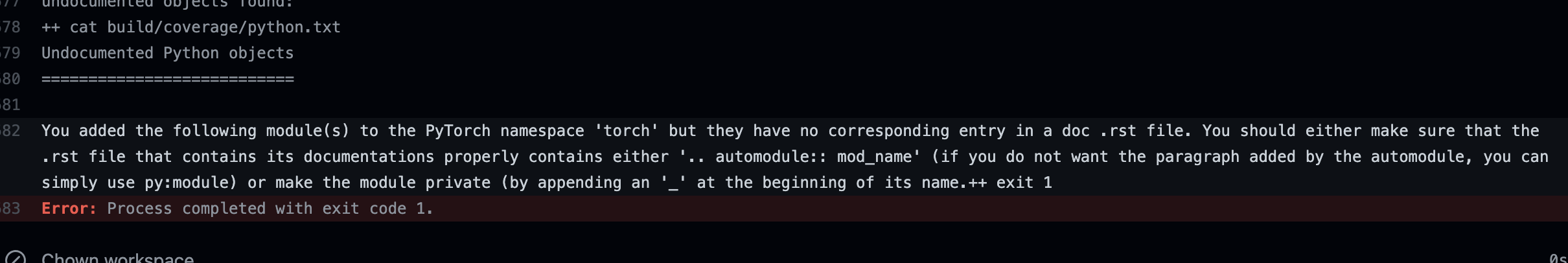

Summary:

Add check to make sure we do not add new submodules without documenting them in an rst file.

This is especially important because our doc coverage only runs for modules that are properly listed.

temporarily removed "torch" from the list to make sure the failure in CI looks as expected. EDIT: fixed now

This is what a CI failure looks like for the top level torch module as an example:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/67440

Reviewed By: jbschlosser

Differential Revision: D32005310

Pulled By: albanD

fbshipit-source-id: 05cb2abc2472ea4f71f7dc5c55d021db32146928

Summary:

This reduces the chance of newly added functions being ignored by mistake.

The only test that this impacts is the coverage test that runs as part of the python doc build. So if that one works, it means that the update to the list here is correct.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/67395

Reviewed By: jbschlosser

Differential Revision: D31991936

Pulled By: albanD

fbshipit-source-id: 5b4ce7764336720827501641311cc36f52d2e516

Summary:

Sphinx 4.x is out, but it seems that it requires many more changes to adopt. So instead use the latest version of 3.x, which includes several nice features.

* Add some noindex directives to deal with warnings that would otherwise be triggered by this change due to conflicts between the docstrings declaring a function and the autodoc extension declaring the same function.

* Update distributions.utils.lazy_property to make it look like a regular property when sphinx autodoc inspects classes.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61601

Reviewed By: ejguan

Differential Revision: D29801876

Pulled By: albanD

fbshipit-source-id: 544d2434a15ceb77bff236e934dbd8e4dbd9d160

Summary:

CI built the documentation for the recent 1.9.0rc1 tag, but left the git version in the `version`, so (as of now) going to https://pytorch.org/docs/1.9.0/index.html and looking at the version in the upper-left corner shows "1.9.0a0+git5f0bbb3" not "1.9.0". This PR should change that to cut off everything after and including the "a".
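The trimming rule described above ("cut off everything after and including the `a`") amounts to a one-line string operation. The helper name `release_version` is hypothetical, and this is a sketch of the rule as stated, not the code in the docs configuration.

```python
def release_version(version):
    # Keep only what precedes the first "a" (the pre-release marker).
    return version.split("a")[0]


print(release_version("1.9.0a0+git5f0bbb3"))  # 1.9.0
print(release_version("1.9.0"))               # 1.9.0
```

A version string without an `a` passes through unchanged.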

It should be cherry-picked to the release/1.9 branch so that the next rc will override the current documentation with a "cleaner" version.

brianjo

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58486

Reviewed By: zou3519

Differential Revision: D28640476

Pulled By: malfet

fbshipit-source-id: 9fd1063f4a2bc90fa8c1d12666e8c0de3d324b5c

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61556

Prior to 1.10.0, `torch.__version__` was stored as a str, so many users compared against `torch.__version__` as if it were a str. In order not to break them, we have TorchVersion, which masquerades as a str while also being able to compare against both packaging.version.Version and tuples of values, e.g., (1, 2, 1).

Examples:

Comparing a TorchVersion object to a Version object

```
TorchVersion('1.10.0a') > Version('1.10.0a')
```

Comparing a TorchVersion object to a Tuple object

```
TorchVersion('1.10.0a') > (1, 2) # 1.2
TorchVersion('1.10.0a') > (1, 2, 1) # 1.2.1
```

Comparing a TorchVersion object against a string

```
TorchVersion('1.10.0a') > '1.2'
TorchVersion('1.10.0a') > '1.2.1'
```
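The idea of a str subclass with version-aware comparison can be sketched without any dependencies. `ToyVersion` below is a hypothetical illustration, not the TorchVersion implementation: it handles only `>`, ignores pre-release ordering, and parses just the leading numeric components.

```python
import re


def _parse_release(text):
    # Leading dot-separated integers, e.g. "1.10.0a" -> (1, 10, 0).
    match = re.match(r"\d+(?:\.\d+)*", text)
    if match is None:
        raise ValueError(f"no numeric release found in {text!r}")
    return tuple(int(part) for part in match.group().split("."))


class ToyVersion(str):
    """Hypothetical str subclass that compares by numeric release components."""

    def __gt__(self, other):
        if isinstance(other, tuple):
            return _parse_release(self) > other
        if isinstance(other, str):
            return _parse_release(self) > _parse_release(other)
        return NotImplemented


v = ToyVersion("1.10.0a")
print(v > (1, 2))            # True
print(v > "1.2.1")           # True
print(str(v) > "1.2.1")      # False: plain str comparison is lexicographic
print(v.startswith("1.10"))  # True: still usable as a str
```

The third line shows the failure mode that motivates the class: as plain strings, `"1.10.0a"` sorts before `"1.2.1"`.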

Resolves https://github.com/pytorch/pytorch/issues/61540

Signed-off-by: Eli Uriegas <eliuriegas@fb.com>

Test Plan: Imported from OSS

Reviewed By: zou3519

Differential Revision: D29671234

Pulled By: seemethere

fbshipit-source-id: 6044805918723b4aca60bbec4b5aafc1189eaad7

Summary:

Trying to run the doctests for the complete documentation hangs if it reaches the examples of `torch.futures`. It turns out to be only syntax errors, which are normally just reported. My guess is that `doctest` probably doesn't work well for failures within async stuff.

Anyway, while debugging this, I fixed the syntax.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61029

Reviewed By: mruberry

Differential Revision: D29571923

Pulled By: mrshenli

fbshipit-source-id: bb8112be5302c6ec43151590b438b195a8f30a06

Summary:

brianjo

- Add a javascript snippet to close the expandable left navbar sections 'Notes', 'Language Bindings', 'Libraries', 'Community'

- Fix two latex bugs that were causing output in the log that might have been misleading when looking for true doc build problems

- Change the way release versions interact with sphinx. I tested these via building docs twice: once with `export RELEASE=1` and once without.

- Remove perl scripting to turn the static version text into a link to the versions.html document. Instead, put this where it belongs in the layout.html template. This is the way the domain libraries (text, vision, audio) do it.

- There were two separate templates for master and release, the only difference between them being that master has an admonition "You are viewing unstable developer preview docs....". Instead, toggle that with the value of `release`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53851

Reviewed By: mruberry

Differential Revision: D27085875

Pulled By: ngimel

fbshipit-source-id: c2d674deb924162f17131d895cb53cef08a1f1cb

Summary:

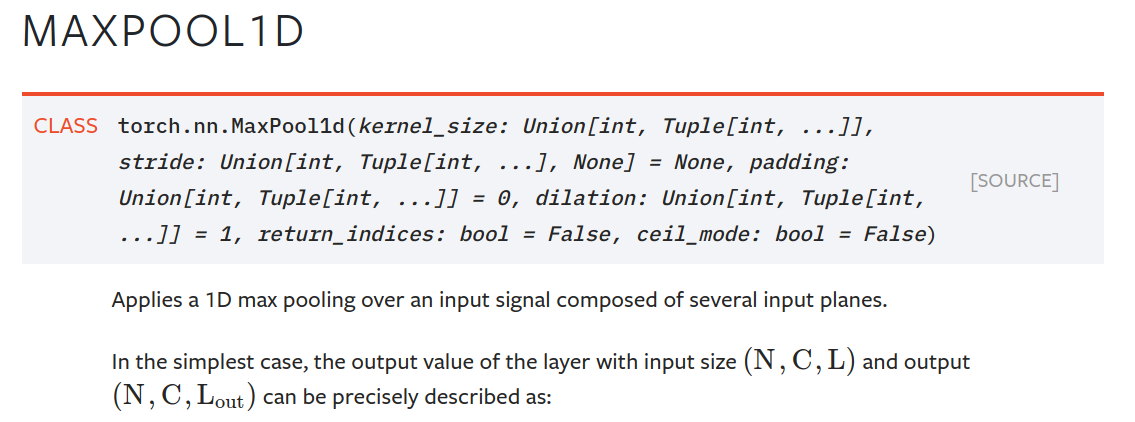

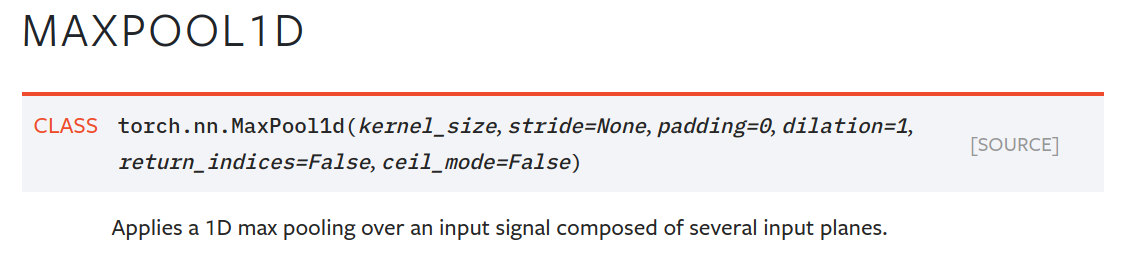

One unintended side effect of moving type annotations inline was that those annotations now show up in signatures in the html docs. This is more confusing and ugly than it is helpful. An example for `MaxPool1d`:

This makes the docs readable again. The parameter descriptions often already have type information, and there will be many cases where the type annotations will make little sense to the user (e.g., returning typevar T, long unions).

Change to `MaxPool1d` example:

Note that once we can build the docs with Sphinx 3 (which is far off right now), we have two options to make better use of the extra type info in the annotations (some of which is useful):

- `autodoc_type_aliases`, so we can leave things like large unions unevaluated to keep things readable

- `autodoc_typehints = 'description'`, which moves the annotations into the parameter descriptions.

Another, more labour-intensive option, is what vadimkantorov suggested in gh-44964: show annotations on hover. Could also be done with some foldout, or other optional way to make things visible. Would be nice, but requires a Sphinx contribution or plugin first.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/49294

Reviewed By: glaringlee

Differential Revision: D25535272

Pulled By: ezyang

fbshipit-source-id: 5017abfea941a7ae8c4595a0d2bdf8ae8965f0c4

Summary:

Fixes gh-39007

We replaced actual content with links to generated content in many places to break the documentation into manageable chunks. This caused references like

```
https://pytorch.org/docs/stable/torch.html#torch.flip
```

to become

```
https://pytorch.org/docs/master/generated/torch.flip.html#torch.flip
```

The textual content that was located at the old reference was replaced with a link to the new reference. This PR adds a `<p id="xxx"/p>` reference next to the link, so that the older references from outside tutorials and forums still work: they will bring the user to the link that they can then follow through to see the actual content.

The way this is done is to monkeypatch the sphinx writer method that produces the link. It is ugly but practical, and in my mind not worse than adding javascript to do the same thing.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/39086

Differential Revision: D22462421

Pulled By: jlin27

fbshipit-source-id: b8f913b38c56ebb857c5a07bded6509890900647