Per many C++ code-style guides (for [example](https://google.github.io/styleguide/cppguide.html#Enumerator_Names)), members of an `enum` should be CamelCased, and only defines should be ALL_CAPS.

Changes `MemOverlap`, `MemOverlapStatus` and `CmpEvalResult` enum values accordingly.

Also, `YES`, `NO`, `TRUE` and `FALSE` are often system defines.

Fixes, among other things, the current iOS build regression, which manifests as follows (see [this](6e90572bb9)):

```
/Users/runner/work/pytorch/pytorch/aten/src/ATen/MemoryOverlap.h:19:29: error: expected identifier
enum class MemOverlap { NO, YES, TOO_HARD };
                            ^
/Applications/Xcode_12.4.app/Contents/Developer/Platforms/iPhoneSimulator.platform/Developer/SDKs/iPhoneSimulator14.4.sdk/usr/include/objc/objc.h:89:13: note: expanded from macro 'YES'
#define YES __objc_yes
```
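A minimal sketch of why the rename helps, assuming simplified stand-ins for the `objc.h` macros. The CamelCased enumerator names and the `toString` helper are chosen for illustration and may not match the names used in the actual change:

```cpp
#include <cassert>
#include <string>

// Stand-ins for the macros that objc.h defines (simplified for illustration).
#define YES 1
#define NO 0

// enum class MemOverlapOld { NO, YES, TOO_HARD };
// ^ would not compile here: the preprocessor rewrites it to { 0, 1, TOO_HARD }.

// CamelCased enumerators are not touched by the macros above.
enum class MemOverlap { No, Yes, TooHard };

// Hypothetical helper, only here to show the enum being used.
std::string toString(MemOverlap o) {
  switch (o) {
    case MemOverlap::No: return "No";
    case MemOverlap::Yes: return "Yes";
    case MemOverlap::TooHard: return "TooHard";
  }
  return "Unknown";
}
```

Because macros are expanded before the compiler sees any scopes, not even `enum class` protects an ALL_CAPS enumerator from a same-named define.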

Pull Request resolved: https://github.com/pytorch/pytorch/pull/79772

Approved by: https://github.com/drisspg, https://github.com/kulinseth

Analyze the range to determine whether a condition can never be satisfied. Suppose the for-loop body contains an `IfThenElse` or a `CompareSelect` whose condition depends on the for-loop index `Var`. In that case, we analyze the index range to check whether the condition is always satisfied or never satisfied. If the outcome is deterministic, we simplify the logic.
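The idea can be sketched for one concrete case, a condition of the form `i < bound` with constant loop bounds. `CondResult` and `evalLessThan` are hypothetical names for this illustration; the real analysis works on symbolic IR expressions rather than plain integers:

```cpp
#include <cassert>
#include <cstdint>

enum class CondResult { AlwaysTrue, AlwaysFalse, Unknown };

// Decide `i < bound` for every i in the half-open loop range [start, stop).
CondResult evalLessThan(int64_t start, int64_t stop, int64_t bound) {
  if (start >= stop) {
    return CondResult::Unknown; // empty loop: nothing to conclude
  }
  if (stop - 1 < bound) {
    return CondResult::AlwaysTrue; // even the largest index satisfies it
  }
  if (start >= bound) {
    return CondResult::AlwaysFalse; // even the smallest index fails it
  }
  return CondResult::Unknown; // the outcome changes inside the range
}
```

When the result is `AlwaysTrue` or `AlwaysFalse`, the conditional can be replaced by the corresponding branch; `Unknown` leaves the code as it is.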

Pull Request resolved: https://github.com/pytorch/pytorch/pull/76793

Approved by: https://github.com/huiguoo

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/72390

The `DimArg` class didn't add much value and only caused more boilerplate code. This change removes the class and replaces all of its uses with `ExprHandle`.

A side effect of this change is different names in loop variables, which caused massive mechanical changes in our tests.

Test Plan: Imported from OSS

Reviewed By: navahgar

Differential Revision: D34030296

Pulled By: ZolotukhinM

fbshipit-source-id: 2ba4e313506a43ab129a10d99e72b638b7d40108

(cherry picked from commit c2ec46a0587cafd4e915c5bf1e0dc0b5d244e8d5)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/72389

This is an NFC change that just prepares the code for the upcoming deletion of the `DimArg` class. This change makes the `Compute` and `Reduce` APIs use `ExprHandle` everywhere.

There should be no observable behavior change from this PR.

Test Plan: Imported from OSS

Reviewed By: navahgar

Differential Revision: D34030295

Pulled By: ZolotukhinM

fbshipit-source-id: 3fd035b6a6bd0a07ccfa92e118819478ae85412a

(cherry picked from commit 1b0a4b6fac54aa4d4735df435d345a30ba0d8a53)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/64887

BufHandle has exactly the same functionality and should be used instead.

Differential Revision: D30889483

Test Plan: Imported from OSS

Reviewed By: navahgar

Pulled By: ZolotukhinM

fbshipit-source-id: 365fe8e396731b88920535a3de96bd3301aaa3f3

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63587

Now that there are no classes using KernelArena for memory management, we can remove it.

Differential Revision: D30429115

Test Plan: Imported from OSS

Reviewed By: navahgar

Pulled By: ZolotukhinM

fbshipit-source-id: 375f6f9294d27790645eeb7cb5a8e87047a57544

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63586

This is another commit in the transition from KernelArena memory management. Tensor is essentially just a pair of <BufPtr, StmtPtr> and we don't need to dynamically allocate it at all - it's cheap to pass it by value, and that's what we're switching to in this commit.

After this change nothing uses KernelScope/KernelArena and they can be safely removed.

Differential Revision: D30429114

Test Plan: Imported from OSS

Reviewed By: navahgar

Pulled By: ZolotukhinM

fbshipit-source-id: f90b859cfe863692b7beffbe9bd0e4143df1e819

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63778

This is a preparation for a switch from raw pointers to shared pointers as a memory model for TE expressions and statements.

Test Plan: Imported from OSS

Reviewed By: navahgar

Differential Revision: D30487425

Pulled By: ZolotukhinM

fbshipit-source-id: 9cbe817b7d4e5fc2f150b29bb9b3bf578868f20c

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/63195

This helps us to later switch from using KernelArena with raw pointers to shared pointers without having to change all our source files at once.

The changes are mechanical and should not affect any functionality.

With this PR, we're changing the following:

* `Add*` --> `AddPtr`

* `new Add(...)` --> `alloc<Add>(...)`

* `dynamic_cast<Add*>` --> `to<Add>`

* `static_cast<Add*>` --> `static_to<Add>`

Due to some complications with args forwarding, some places became more verbose, e.g.:

* `new Block({})` --> `new Block(std::vector<ExprPtr>())`
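A rough sketch of how such aliases and helpers could be defined while the underlying representation is still a raw pointer. The `Expr`, `Add`, and `Mul` classes here are toy stand-ins, and the `NodePtr` alias is an assumption made for this illustration, not the actual NNC definitions:

```cpp
#include <cassert>
#include <utility>

// Toy node hierarchy standing in for the real IR classes.
struct Expr { virtual ~Expr() = default; };
struct Add : Expr {
  int lhs, rhs;
  Add(int l, int r) : lhs(l), rhs(r) {}
};
struct Mul : Expr {
  int lhs, rhs;
  Mul(int l, int r) : lhs(l), rhs(r) {}
};

// Single place that decides what a "pointer to node" is. Swapping T* for a
// shared pointer later only requires touching these definitions.
template <class T>
using NodePtr = T*;
using ExprPtr = NodePtr<Expr>;
using AddPtr = NodePtr<Add>;

template <class T, class... Args>
NodePtr<T> alloc(Args&&... args) {
  // Perfect forwarding cannot deduce a type for a bare `{}` argument, which
  // is why a call site may have to spell out the container type explicitly.
  return new T(std::forward<Args>(args)...);
}

template <class To, class From>
NodePtr<To> to(NodePtr<From> p) {
  return dynamic_cast<NodePtr<To>>(p); // nullptr when the type does not match
}

template <class To, class From>
NodePtr<To> static_to(NodePtr<From> p) {
  return static_cast<NodePtr<To>>(p); // unchecked downcast
}
```

Call sites written against `AddPtr`, `alloc<Add>(...)`, `to<Add>(...)` and `static_to<Add>(...)` do not mention the pointer type at all, so the memory model can change underneath them.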

Test Plan: Imported from OSS

Reviewed By: navahgar

Differential Revision: D30292779

Pulled By: ZolotukhinM

fbshipit-source-id: 150301c7d2df56b608b035827b6a9a87f5e2d9e9

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62336

This PR was generated by removing `const` for all types of nodes in NNC IR, and fixing compilation errors that were the result of this change.

This is the first step in making all NNC mutations in-place.

Test Plan: Imported from OSS

Reviewed By: iramazanli

Differential Revision: D30049829

Pulled By: navahgar

fbshipit-source-id: ed14e2d2ca0559ffc0b92ac371f405579c85dd63

Summary:

The GoogleTest `TEST` macro, as well as `DEFINE_DISPATCH`, is non-compliant with the `cppcoreguidelines-avoid-non-const-global-variables` check.

All changes but the ones to `.clang-tidy` were generated using the following script:

```
for i in `find . -type f -iname "*.c*" -or -iname "*.h"|xargs grep cppcoreguidelines-avoid-non-const-global-variables|cut -f1 -d:|sort|uniq`; do sed -i "/\/\/ NOLINTNEXTLINE(cppcoreguidelines-avoid-non-const-global-variables)/d" $i; done
```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62008

Reviewed By: driazati, r-barnes

Differential Revision: D29838584

Pulled By: malfet

fbshipit-source-id: 1b2f8602c945bd4ce50a9bfdd204755556e31d13

Summary:

This is an automatic change generated by the following script:

```
#!/usr/bin/env python3
from subprocess import check_output, check_call
import os


def get_compiled_files_list():
    import json
    with open("build/compile_commands.json") as f:
        data = json.load(f)
    files = [os.path.relpath(node['file']) for node in data]
    for idx, fname in enumerate(files):
        if fname.startswith('build/') and fname.endswith('.DEFAULT.cpp'):
            files[idx] = fname[len('build/'):-len('.DEFAULT.cpp')]
    return files


def run_clang_tidy(fname):
    check_call(["python3", "tools/clang_tidy.py", "-c", "build", "-x", fname,"-s"])
    changes = check_output(["git", "ls-files", "-m"])
    if len(changes) == 0:
        return
    check_call(["git", "commit","--all", "-m", f"NOLINT stubs for {fname}"])


def main():
    git_files = check_output(["git", "ls-files"]).decode("ascii").split("\n")
    compiled_files = get_compiled_files_list()
    for idx, fname in enumerate(git_files):
        if fname not in compiled_files:
            continue
        if fname.startswith("caffe2/contrib/aten/"):
            continue
        print(f"[{idx}/{len(git_files)}] Processing {fname}")
        run_clang_tidy(fname)


if __name__ == "__main__":
    main()
```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56892

Reviewed By: H-Huang

Differential Revision: D27991944

Pulled By: malfet

fbshipit-source-id: 5415e1eb2c1b34319a4f03024bfaa087007d7179

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/55825

The mask has never been used (in vectorization we generate an explicit `IfThenElse` construct when we need to mask out some elements). The PR removes it and cleans up all its traces from tests.

Differential Revision: D27717776

Test Plan: Imported from OSS

Reviewed By: navahgar

Pulled By: ZolotukhinM

fbshipit-source-id: 41d1feeea4322da75b3999d661801c2a7f82b9db

Summary:

Switched to short forms of `splitWithTail` / `splitWithMask` for all tests in `test/cpp/tensorexpr/test_*.cpp` (except test_loopnest.cpp)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/55542

Reviewed By: mrshenli

Differential Revision: D27632033

Pulled By: jbschlosser

fbshipit-source-id: dc2ba134f99bff8951ae61e564cd1daea92c41df

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/54997

DepTracker was used to automatically pull in dependent computations from output ones. While it seems quite convenient, it's led to several architectural issues, which are fixed in this stack.

DepTracker worked on Tensors, each of which is a pair of Buf and Stmt. However, Stmt could become stale and there was no way to reliably update the corresponding tensor. We're now using Bufs and Stmts directly and moving away from using Tensors to avoid these problems.

Removing DepTracker allowed us to unify Loads and FunctionCalls, which essentially were duplicates of each other.

Test Plan: Imported from OSS

Reviewed By: navahgar

Differential Revision: D27446414

Pulled By: ZolotukhinM

fbshipit-source-id: a2a32749d5b28beed92a601da33d126c0a2cf399

Summary:

Makes two changes in NNC for intermediate buffer allocations:

1. Flattens dimensions of buffers allocated in LoopNest::prepareForCodegen() to match their flattened usages.

2. Adds support for tracking memory dependencies of Alloc/Free to the MemDependencyChecker, which will allow us to check safety of accesses to intermediate buffers (coming in a future diff).
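For the first item, flattening can be pictured with a small sketch, assuming a dense row-major layout. `flatSize` and `flatIndex` are hypothetical helpers for illustration, not functions from `LoopNest`:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Number of elements in the single flattened dimension.
int64_t flatSize(const std::vector<int64_t>& dims) {
  int64_t size = 1;
  for (int64_t d : dims) {
    size *= d;
  }
  return size;
}

// Row-major position of a multi-dimensional index in the flattened buffer.
int64_t flatIndex(const std::vector<int64_t>& dims,
                  const std::vector<int64_t>& idx) {
  int64_t flat = 0;
  for (std::size_t k = 0; k < dims.size(); ++k) {
    flat = flat * dims[k] + idx[k];
  }
  return flat;
}
```

If accesses are rewritten to use the flattened position, an allocation of `flatSize(dims)` elements in one dimension matches them, which is the consistency the first change is after.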

I didn't add any new tests as the mem dependency checker tests already cover it pretty well, particularly the GEMM test.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/49554

Reviewed By: VitalyFedyunin

Differential Revision: D25643133

Pulled By: nickgg

fbshipit-source-id: 66be3054eb36f0a4279d0c36562e63aa2dae371c

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/48160

We no longer use the custom C++ test infra anyways, so move to pure gtest.

Fixes #45703

ghstack-source-id: 116977283

Test Plan: `buck test //caffe2/test/cpp/tensorexpr`

Reviewed By: navahgar, nickgg

Differential Revision: D25046618

fbshipit-source-id: da34183d87465f410379048148c28e1623618553

Summary:

Refactors the ReduceOp node to remove the last remaining deferred functionality: completing the interaction between the accumulator buffer and the body. This fixes two issues with reductions:

1. Nodes inside the interaction could not be visited or modified, meaning we could generate bad code when the interaction was complex.

2. The accumulator load was created at expansion time and so could not be modified in some ways (i.e. vectorization couldn't act on these loads).

This simplifies reduction logic quite a bit, but there's a bit more involved in the rfactor transform.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/47709

Reviewed By: ZolotukhinM

Differential Revision: D24904220

Pulled By: nickgg

fbshipit-source-id: 159e5fd967d2d1f8697cfa96ce1bb5fc44920a40

Summary:

Adds a helper function to the Bounds Inference / Memory Analysis infrastructure which returns the kind of hazard found between two Stmts (e.g. Blocks or Loops). E.g.

```
for (int i = 0; i < 10; ++i) {
  A[x] = i * 2;
}
for (int j = 0; j < 10; ++j) {
  B[x] = A[x] / 2;
}
```

The two loops have a `ReadAfterWrite` hazard, while in this example:

```
for (int i = 0; i < 10; ++i) {
  A[x] = i * 2;
}
for (int j = 0; j < 10; ++j) {
  A[x] = B[x] / 2;
}
```

The loops have a `WriteAfterWrite` hazard.

This isn't 100% of what we need for loop fusion; for example, we don't check the strides of the loops to see if they match.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/47684

Reviewed By: malfet

Differential Revision: D24873587

Pulled By: nickgg

fbshipit-source-id: 991149e5942e769612298ada855687469a219d62

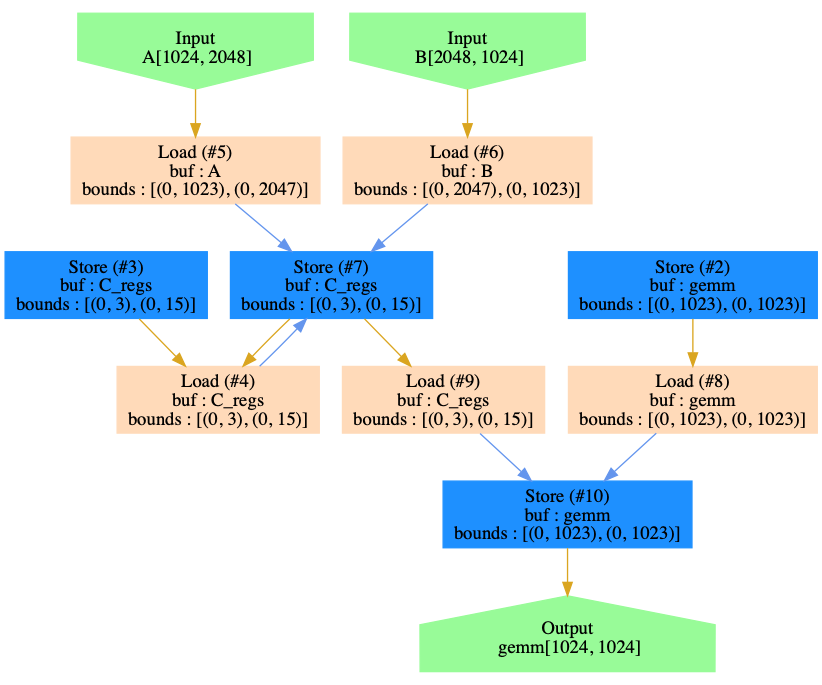

Summary:

Adds a new piece of infrastructure to the NNC fused-kernel generation compiler, which builds a dependency graph of the reads and writes to memory regions in a kernel.

It can be used to generate graphs like this from the GEMM benchmark (not this only represents memory hierarchy not compute hierarchy):

Or to answer questions like this:

```
Tensor* c = Compute(...);
Tensor* d = Compute(...);
LoopNest loop({d});
MemDependencyChecker analyzer;
loop.root_stmt()->accept(analyzer);
if (analyzer.dependsDirectly(loop.getLoopStmtsFor(d)[0], loop.getLoopStmtsFor(c)[0])) {
  // do something, maybe computeInline
}
```

Or this:

```
Tensor* d = Compute(...);
LoopNest loop({d});
MemDependencyChecker analyzer(loop.getInputs(), loop.getOutputs());
const Buf* output = d->buf();
for (const Buf* input : inputs) {
  if (!analyzer.dependsIndirectly(output, input)) {
    // signal that this input is unused
  }
}
```

This is a monster of a diff, and I apologize. I've tested it as well as possible for now, but it's not hooked up to anything yet so should not affect any current usages of the NNC fuser.

**How it works:**

Similar to the registerizer, the MemDependencyChecker walks the IR aggregating memory accesses into scopes, then merges those scopes into their parent scope and tracks which writes are responsible for the last write to a particular region of memory, adding dependency links where that region is used.
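A heavily simplified sketch of the last-writer idea, treating each buffer as a single region and ignoring scopes and bounds entirely. `SimpleStmt`, `buildDeps` and `reaches` are hypothetical names for this illustration and are not part of the MemDependencyChecker API:

```cpp
#include <cassert>
#include <map>
#include <set>
#include <string>
#include <vector>

// Simplified statement: reads some buffers, writes exactly one buffer.
struct SimpleStmt {
  std::vector<std::string> reads;
  std::string write;
};

// deps[i] holds the statements that produced the values statement i reads,
// i.e. the last writer of each buffer it loads from.
std::vector<std::set<int>> buildDeps(const std::vector<SimpleStmt>& stmts) {
  std::map<std::string, int> lastWriter;
  std::vector<std::set<int>> deps(stmts.size());
  for (int i = 0; i < static_cast<int>(stmts.size()); ++i) {
    for (const auto& buf : stmts[i].reads) {
      auto it = lastWriter.find(buf);
      if (it != lastWriter.end()) {
        deps[i].insert(it->second);
      }
    }
    lastWriter[stmts[i].write] = i; // later reads now depend on this write
  }
  return deps;
}

// True if `from` depends on `on` through any chain of dependency links.
// Links only point to earlier statements, so the recursion terminates.
bool reaches(const std::vector<std::set<int>>& deps, int from, int on) {
  for (int d : deps[from]) {
    if (d == on || reaches(deps, d, on)) {
      return true;
    }
  }
  return false;
}
```

A direct dependence corresponds to membership in `deps[i]`, and an indirect one to `reaches`. The analysis described above tracks symbolic regions within a buffer, so a later write only replaces the earlier writer for the part of the buffer it actually covers.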

This relies on a bunch of math on symbolic contiguous regions which I've pulled out into its own file (bounds_overlap.h/cpp). Sometimes this won't be able to infer dependence with 100% accuracy, but I think it should always be conservative: it may occasionally add false positives, but I'm aware of no false negatives.
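To give a flavor of the region math, here is a sketch for constant, inclusive one-dimensional bounds. The `Bound` struct, the `Overlap` enum and `classifyOverlap` are assumptions for illustration; the real code works on symbolic expressions, where a comparison may be undecidable and has to be treated conservatively:

```cpp
#include <cassert>
#include <cstdint>

// Inclusive range of indices [start, end] within one buffer dimension.
struct Bound {
  int64_t start;
  int64_t end;
};

enum class Overlap { Covers, CoveredBy, Partial, None };

// How region `a` relates to region `b`.
Overlap classifyOverlap(Bound a, Bound b) {
  if (a.end < b.start || b.end < a.start) {
    return Overlap::None; // disjoint: no dependence possible
  }
  if (a.start <= b.start && a.end >= b.end) {
    return Overlap::Covers; // a fully covers b (or they are equal)
  }
  if (b.start <= a.start && b.end >= a.end) {
    return Overlap::CoveredBy; // a lies strictly inside part of b
  }
  return Overlap::Partial; // they share some but not all elements
}
```

Only the `None` case rules a dependence out; any answer that cannot be proven disjoint has to be kept as a potential dependence, which is where the false positives come from.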

The hardest part of the analysis is determining when a Load inside a For loop depends on a Store that is lower in the IR from a previous iteration of the loop. This depends on a whole bunch of factors, including whether or not we should consider loop iteration order. The analyzer comes with configuration of this setting. For example this loop:

```
for (int i = 0; i < 10; ++i) {
  A[i] = B[i] + 1;
}
```

has no inter loop dependence, since each iteration uses a distinct slice of both A and B. But this one:

```
for (int i = 0; i < 10; ++i) {
  A[0] = A[0] + B[i];
}
```

has a self-loop dependence between the Load and the Store of A. This applies to many cases that are not reductions as well. In this example:

```
for (int i = 0; i < 10; ++i) {
  A[i] = A[i+1] + i;
}
```

Whether or not it has self-loop dependence depends on whether we assume the execution order is fixed (or whether this loop could later be parallelized). If the read from `A[i+1]` always comes before the write to that same region, then it has no dependence.
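The effect of execution order on this last loop can be checked with a small standalone sketch. The `run` function is a hypothetical helper that executes the same loop body with the index ascending or descending:

```cpp
#include <cassert>
#include <vector>

// Executes A[i] = A[i + 1] + i for i in [0, A.size() - 1),
// visiting the indices in ascending or descending order.
std::vector<int> run(std::vector<int> A, bool ascending) {
  const int n = static_cast<int>(A.size()) - 1;
  for (int k = 0; k < n; ++k) {
    const int i = ascending ? k : n - 1 - k;
    A[i] = A[i + 1] + i;
  }
  return A;
}
```

In ascending order every read of `A[i + 1]` sees the original value, because that element is only written in the following iteration. In descending order the read sees a value written by the previous iteration, so the results differ, and a loop like this can only be reordered or parallelized if the analysis accounts for that dependence.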

The analyzer can correctly handle dynamic shapes, but we may need more test coverage of real world usages of dynamic shapes. I unit test some simple and pathological cases, but coverage could be better.

**Next Steps:**

Since the PR was already so big I didn't actually hook it up anywhere, but I had planned on rewriting bounds inference based on the dependency graph. Will do that next.

There are a few gaps in this code which could be filled in later if we need to:

* Upgrading the bound math to work with write strides, which will reduce false positive dependencies.

* Better handling of Conditions, reducing false positive dependencies when a range is written in both branches of a Cond.

* Support for AtomicAdd node added in Cuda codegen.

**Testing:**

See the new unit tests; I've tried to be verbose about what is being tested. I ran the python tests, but there shouldn't be any way for this work to affect them yet.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/46952

Reviewed By: ejguan

Differential Revision: D24730346

Pulled By: nickgg

fbshipit-source-id: 654c67c71e9880495afd3ae0efc142e95d5190df