99f2491af9

Revert "Use absolute path path.resolve() -> path.absolute() ( #129409 )"

...

This reverts commit 45411d1fc9a2b6d2f891b6ab0ae16409719e09fc.

Reverted https://github.com/pytorch/pytorch/pull/129409 on behalf of https://github.com/jeanschmidt due to Breaking internal CI, @albanD please help get this PR merged ([comment](https://github.com/pytorch/pytorch/pull/129409#issuecomment-2571316444 ))

2025-01-04 14:17:20 +00:00

45411d1fc9

Use absolute path path.resolve() -> path.absolute() ( #129409 )

...

Changes:

1. Always explicit `.absolute()`: `Path(__file__)` -> `Path(__file__).absolute()`

2. Replace `path.resolve()` with `path.absolute()` if the code is resolving the PyTorch repo root directory.
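The practical difference between the two calls is symlink handling: `Path.absolute()` only anchors the path to the current working directory, while `Path.resolve()` also follows symlinks. A minimal sketch of that difference, assuming a system where symlinks can be created; the directory names `real_repo` and `linked_repo` are hypothetical and not taken from the PR:

```python
import tempfile
from pathlib import Path
from typing import Tuple


def absolute_vs_resolve() -> Tuple[str, str]:
    """Return the last path component as seen by absolute() and by resolve()."""
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        real = root / "real_repo"      # hypothetical checkout location
        real.mkdir()
        link = root / "linked_repo"    # hypothetical symlink pointing at it
        link.symlink_to(real, target_is_directory=True)

        # absolute() keeps the symlink's own name; resolve() follows the link.
        return link.absolute().name, link.resolve().name


if __name__ == "__main__":
    print(absolute_vs_resolve())
```

When a checkout is reached through a symlink, code that derives the repo root with `resolve()` lands in the link target, whereas `absolute()` stays on the path the user actually invoked.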

Pull Request resolved: https://github.com/pytorch/pytorch/pull/129409

Approved by: https://github.com/albanD

2025-01-03 20:03:40 +00:00

cc4e70b7c3

Revert "Use absolute path path.resolve() -> path.absolute() ( #129409 )"

...

This reverts commit 135c7db99d646b8bd9603bf969d47d3dec5987b1.

Reverted https://github.com/pytorch/pytorch/pull/129409 on behalf of https://github.com/malfet due to the need to revert it as a dependency of https://github.com/pytorch/pytorch/pull/129374 ([comment](https://github.com/pytorch/pytorch/pull/129409#issuecomment-2562969825 ))

2024-12-26 17:26:06 +00:00

135c7db99d

Use absolute path path.resolve() -> path.absolute() ( #129409 )

...

Changes:

1. Always explicit `.absolute()`: `Path(__file__)` -> `Path(__file__).absolute()`

2. Replace `path.resolve()` with `path.absolute()` if the code is resolving the PyTorch repo root directory.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/129409

Approved by: https://github.com/albanD

2024-12-24 08:33:08 +00:00

a3abfa5cb5

[BE][Easy][1/19] enforce style for empty lines in import segments ( #129752 )

...

See https://github.com/pytorch/pytorch/pull/129751#issue-2380881501 . Most changes are auto-generated by linter.

You can review these PRs via:

```bash

git diff --ignore-all-space --ignore-blank-lines HEAD~1

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/129752

Approved by: https://github.com/ezyang , https://github.com/malfet

2024-07-16 00:42:56 +00:00

26f4f10ac8

[5/N][Easy] fix typo for usort config in pyproject.toml (kown -> known): sort torch ( #127126 )

...

The `usort` config in `pyproject.toml` has no effect due to a typo. Fixing the typo makes `usort` do more and generates the changes in this PR. Except for `pyproject.toml`, all changes are generated by `lintrunner -a --take UFMT --all-files`.
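Why a one-letter typo can silently disable a config section can be sketched as follows. This is not `usort`'s actual loader: the function `load_known_first_party` and the `first_party` key are hypothetical, chosen only to show how a reader that looks up `known` falls back to defaults when the table is spelled `kown`:

```python
from typing import Dict, List


def load_known_first_party(tool_config: Dict[str, dict]) -> List[str]:
    """Hypothetical reader: only the table named "known" is consulted."""
    # An unrecognized table name is not an error; it is simply never read.
    return list(tool_config.get("known", {}).get("first_party", []))


# The same settings under the misspelled and the corrected table name.
typo_config = {"kown": {"first_party": ["torch"]}}
fixed_config = {"known": {"first_party": ["torch"]}}

if __name__ == "__main__":
    print(load_known_first_party(typo_config))
    print(load_known_first_party(fixed_config))
```

With the misspelled table the reader sees no settings at all, which is consistent with the commit's observation that fixing the name changes the tool's output across many files.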

Pull Request resolved: https://github.com/pytorch/pytorch/pull/127126

Approved by: https://github.com/kit1980

2024-05-27 14:49:57 +00:00

55c0ab2887

Revert "[5/N][Easy] fix typo for usort config in pyproject.toml (kown -> known): sort torch ( #127126 )"

...

This reverts commit 7763c83af67eebfdd5185dbe6ce15ece2b992a0f.

Reverted https://github.com/pytorch/pytorch/pull/127126 on behalf of https://github.com/XuehaiPan due to Broken CI ([comment](https://github.com/pytorch/pytorch/pull/127126#issuecomment-2133044286 ))

2024-05-27 09:22:08 +00:00

7763c83af6

[5/N][Easy] fix typo for usort config in pyproject.toml (kown -> known): sort torch ( #127126 )

...

The `usort` config in `pyproject.toml` has no effect due to a typo. Fixing the typo makes `usort` do more and generates the changes in this PR. Except for `pyproject.toml`, all changes are generated by `lintrunner -a --take UFMT --all-files`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/127126

Approved by: https://github.com/kit1980

ghstack dependencies: #127122 , #127123 , #127124 , #127125

2024-05-27 04:22:18 +00:00

19502ff6aa

Fixed typo in build_activation_images.py ( #117458 )

...

In line 24 of build_activation_images.py, I changed "programmaticly" to "programmatically" to be grammatically correct.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/117458

Approved by: https://github.com/malfet

2024-01-15 03:27:40 +00:00

f70844bec7

Enable UFMT on a bunch of low traffic Python files outside of main files ( #106052 )

...

Signed-off-by: Edward Z. Yang <ezyang@meta.com>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/106052

Approved by: https://github.com/albanD , https://github.com/Skylion007

2023-07-27 01:01:17 +00:00

243dd7e74f

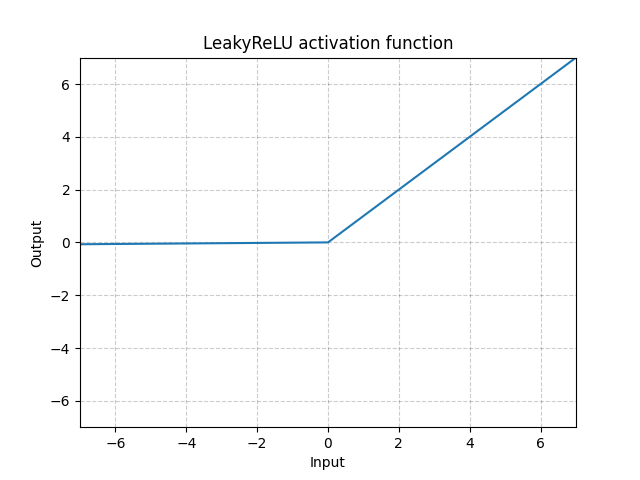

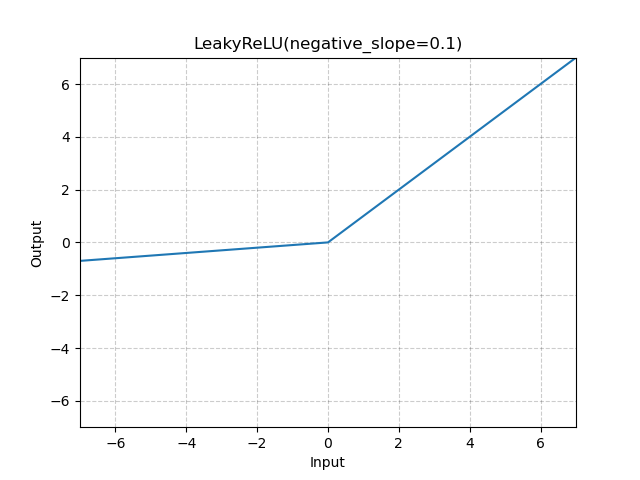

Fix LeakyReLU image ( #78508 )

...

Fixes #56363 , Fixes #78243

| [Before](https://pytorch.org/docs/stable/generated/torch.nn.LeakyReLU.html ) | [After](https://docs-preview.pytorch.org/78508/generated/torch.nn.LeakyReLU.html ) |

| --- | --- |

|  |  |

- Plot `LeakyReLU` with `negative_slope=0.1` instead of `negative_slope=0.01`

- Changed the title from `"{function_name} activation function"` to the name returned by `_get_name()` (with parameter info). The full list is attached at the end.

- Modernized the script and ran black on `docs/source/scripts/build_activation_images.py`. Apologies for the ugly diff.

```

ELU(alpha=1.0)

Hardshrink(0.5)

Hardtanh(min_val=-1.0, max_val=1.0)

Hardsigmoid()

Hardswish()

LeakyReLU(negative_slope=0.1)

LogSigmoid()

PReLU(num_parameters=1)

ReLU()

ReLU6()

RReLU(lower=0.125, upper=0.3333333333333333)

SELU()

SiLU()

Mish()

CELU(alpha=1.0)

GELU(approximate=none)

Sigmoid()

Softplus(beta=1, threshold=20)

Softshrink(0.5)

Softsign()

Tanh()

Tanhshrink()

```
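The motivation for plotting `LeakyReLU` with `negative_slope=0.1` rather than `0.01` can be checked numerically. A minimal pure-Python sketch, assuming the plot covers roughly `x` in `[-7, 7]` (that range and the sample points are assumptions, not stated in the commit):

```python
from typing import List, Sequence


def leaky_relu(x: float, negative_slope: float) -> float:
    """LeakyReLU: identity for x >= 0, a scaled-down line for x < 0."""
    return x if x >= 0 else negative_slope * x


def negative_side(negative_slope: float,
                  xs: Sequence[float] = (-7.0, -3.5, -1.0)) -> List[float]:
    """Evaluate the negative branch at a few sample points (assumed range)."""
    return [leaky_relu(x, negative_slope) for x in xs]


if __name__ == "__main__":
    print("slope 0.01:", negative_side(0.01))
    print("slope 0.1: ", negative_side(0.1))
```

With a slope of 0.01 the curve reaches only about -0.07 at `x = -7`, which is hard to tell apart from plain `ReLU` on an axis spanning several units; with 0.1 it reaches about -0.7 and the leak is visible.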

cc @brianjo @mruberry @svekars @holly1238

Pull Request resolved: https://github.com/pytorch/pytorch/pull/78508

Approved by: https://github.com/jbschlosser

2022-06-07 16:32:45 +00:00

11ca641491

[docs] Add images to some activation functions ( #65415 )

...

Summary:

Fixes https://github.com/pytorch/pytorch/issues/65368 . See discussion in the issue.

cc mruberry SsnL jbschlosser soulitzer

Pull Request resolved: https://github.com/pytorch/pytorch/pull/65415

Reviewed By: soulitzer

Differential Revision: D31093303

Pulled By: albanD

fbshipit-source-id: 621c74c7a2aceee95e3d3b708c7f1a1d59e59b93

2021-09-22 11:05:29 -07:00

f0ada4bd54

[docs] Remove .data from some docs ( #65358 )

...

Summary:

Related to https://github.com/pytorch/pytorch/issues/30987 . Fix the following task:

- [ ] Remove the use of `.data` in all our internal code:

- [ ] ...

- [x] `docs/source/scripts/build_activation_images.py` and `docs/source/notes/extending.rst`

In `docs/source/scripts/build_activation_images.py`, I used `nn.init` because the snippet already assumes `nn` is available (the class inherits from `nn.Module`).

cc albanD

Pull Request resolved: https://github.com/pytorch/pytorch/pull/65358

Reviewed By: malfet

Differential Revision: D31061790

Pulled By: albanD

fbshipit-source-id: be936c2035f0bdd49986351026fe3e932a5b4032

2021-09-21 06:32:31 -07:00

1022443168

Revert D30279364: [codemod][lint][fbcode/c*] Enable BLACK by default

...

Test Plan: revert-hammer

Differential Revision:

D30279364 (b004307252

2021-08-12 11:45:01 -07:00

b004307252

[codemod][lint][fbcode/c*] Enable BLACK by default

...

Test Plan: manual inspection & sandcastle

Reviewed By: zertosh

Differential Revision: D30279364

fbshipit-source-id: c1ed77dfe43a3bde358f92737cd5535ae5d13c9a

2021-08-12 10:58:35 -07:00

09a8f22bf9

Add mish activation function ( #58648 )

...

Summary:

See issue: https://github.com/pytorch/pytorch/issues/58375

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58648

Reviewed By: gchanan

Differential Revision: D28625390

Pulled By: jbschlosser

fbshipit-source-id: 23ea2eb7d5b3dc89c6809ff6581b90ee742149f4

2021-05-25 10:36:21 -07:00

4ae832e106

Optimize SiLU (Swish) op in PyTorch ( #42976 )

...

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/42976

Optimize SiLU (Swish) op in PyTorch.

Some benchmark results:

input = torch.rand(1024, 32768, dtype=torch.float, device="cpu")

forward: 221ms -> 133ms

backward: 600ms -> 170ms

input = torch.rand(1024, 32768, dtype=torch.double, device="cpu")

forward: 479ms -> 297ms

backward: 1438ms -> 387ms

input = torch.rand(8192, 32768, dtype=torch.float, device="cuda")

forward: 24.34ms -> 9.83ms

backward: 97.05ms -> 29.03ms

input = torch.rand(4096, 32768, dtype=torch.double, device="cuda")

forward: 44.24ms -> 30.15ms

backward: 126.21ms -> 49.68ms
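The timings above are easier to compare as speedup ratios. A small sketch that derives them from the numbers in this commit message; the dictionary layout and the function names are illustrative only:

```python
from typing import Dict, Tuple

# (device, dtype, pass) -> (before_ms, after_ms), copied from the commit message.
BENCHMARKS_MS: Dict[Tuple[str, str, str], Tuple[float, float]] = {
    ("cpu", "float", "forward"): (221.0, 133.0),
    ("cpu", "float", "backward"): (600.0, 170.0),
    ("cpu", "double", "forward"): (479.0, 297.0),
    ("cpu", "double", "backward"): (1438.0, 387.0),
    ("cuda", "float", "forward"): (24.34, 9.83),
    ("cuda", "float", "backward"): (97.05, 29.03),
    ("cuda", "double", "forward"): (44.24, 30.15),
    ("cuda", "double", "backward"): (126.21, 49.68),
}


def speedup(before_ms: float, after_ms: float) -> float:
    """Ratio of old to new runtime; values above 1 mean the new code is faster."""
    return before_ms / after_ms


def speedups() -> Dict[Tuple[str, str, str], float]:
    """Speedup for every benchmark row, rounded to two decimals."""
    return {key: round(speedup(*ms), 2) for key, ms in BENCHMARKS_MS.items()}


if __name__ == "__main__":
    for key, ratio in speedups().items():
        print(key, f"{ratio}x")
```

Ratios are only comparable within a row, since the CUDA float and double runs use different input sizes.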

Test Plan: buck test mode/dev-nosan //caffe2/test:nn -- "SiLU"

Reviewed By: houseroad

Differential Revision: D23093593

fbshipit-source-id: 1ba7b95d5926c4527216ed211a5ff1cefa3d3bfd

2020-08-16 13:21:57 -07:00

2460dced8f

Add torch.nn.GELU for GELU activation ( #28944 )

...

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/28944

Add torch.nn.GELU for GELU activation

Test Plan: buck test mode/dev-nosan //caffe2/test:nn -- "GELU"

Reviewed By: hl475, houseroad

Differential Revision: D18240946

fbshipit-source-id: 6284b30def9bd4c12bf7fb2ed08b1b2f0310bb78

2019-11-03 21:55:05 -08:00

6fc75eadf0

Add CELU activation to pytorch ( #8551 )

...

Summary:

Also fuse input scale multiplication into ELU

Paper:

https://arxiv.org/pdf/1704.07483.pdf
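One way to read "fuse input scale multiplication into ELU" is that a generalized ELU takes an extra factor applied to the input inside the exponential, so that CELU becomes a special case. A scalar sketch under that assumption; the parameter names `scale` and `input_scale` are illustrative and not taken from the commit message:

```python
import math


def elu(x: float, alpha: float = 1.0, scale: float = 1.0,
        input_scale: float = 1.0) -> float:
    """Generalized ELU with the input scaling folded into the exponential."""
    if x > 0:
        return scale * x
    return scale * alpha * (math.exp(input_scale * x) - 1.0)


def celu(x: float, alpha: float = 1.0) -> float:
    """CELU(x) = max(0, x) + min(0, alpha * (exp(x / alpha) - 1))."""
    # Expressed through the generalized ELU with input_scale = 1 / alpha.
    return elu(x, alpha=alpha, scale=1.0, input_scale=1.0 / alpha)


if __name__ == "__main__":
    for x in (-2.0, -0.5, 0.0, 1.5):
        print(x, celu(x, alpha=2.0))
```

Folding the `1 / alpha` factor into the ELU computation avoids a separate multiplication of the input before the activation is applied.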

Pull Request resolved: https://github.com/pytorch/pytorch/pull/8551

Differential Revision: D9088477

Pulled By: SsnL

fbshipit-source-id: 877771bee251b27154058f2b67d747c9812c696b

2018-08-01 07:54:44 -07:00

49f88ac956

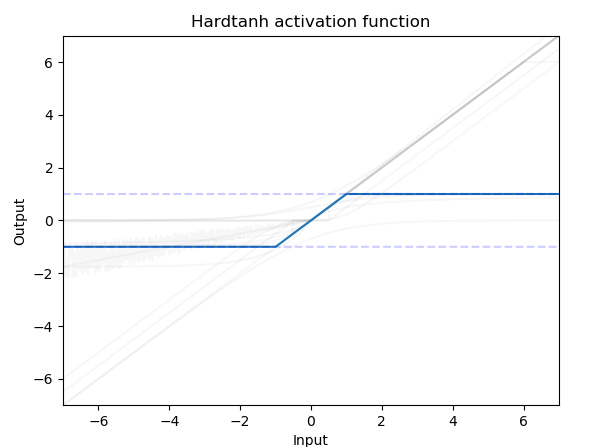

Add grid lines for activation images, fixes #9130 ( #9134 )

...

Summary:

1. Add dashed light blue line for asymptotes.

2. RReLU was missing the activation image.

3. make clean in docs will remove the activation images too.

Sample image:

Closes https://github.com/pytorch/pytorch/pull/9134

Differential Revision: D8726880

Pulled By: ezyang

fbshipit-source-id: 35f00ee08a34864ec15ffd6228097a9efbc8dd62

2018-07-03 19:10:00 -07:00

e0f3e5dc77

fix activation images not showing up on official website ( #6367 )

2018-04-07 11:06:24 -04:00

32b3841553

[ready] General documentation improvements ( #5450 )

...

* Improve documentation

1. Add formula for erf, erfinv

2. Make exp, expm1 similar to log, log1p

3. Symbol change in ge, le, ne, isnan

* Fix minor nit in the docstring

* More doc improvements

1. Added some formulae

2. Complete scanning till "Other Operations" in Tensor docs

* Add more changes

1. Modify all torch.Tensor wherever required

* Fix Conv docs

1. Fix minor nits in the references for LAPACK routines

* Improve Pooling docs

1. Fix lint error

* Improve docs for RNN, Normalization and Padding

1. Fix flake8 error for pooling

* Final fixes for torch.nn.* docs.

1. Improve Loss Function documentation

2. Improve Vision Layers documentation

* Fix lint error

* Improve docstrings in torch.nn.init

* Fix lint error

* Fix minor error in torch.nn.init.sparse

* Fix Activation and Utils Docs

1. Fix Math Errors

2. Add explicit clean to Makefile in docs to prevent running graph generation script while cleaning

3. Fix utils docs

* Make PYCMD a Makefile argument, clear up prints in the build_activation_images.py

* Fix batch norm doc error

2018-03-08 13:21:12 -05:00

b1dec4a74f

Fix doc-push ( #5494 )

2018-03-01 17:37:30 +01:00

7b33ef4cff

Documentation cleanup for activation functions ( #5457 )

2018-03-01 14:53:11 +01:00