Summary:

During development, it is common practice to put `type: ignore` comments on lines that are correct but that `mypy` fails to recognize as such. This often happens because the `mypy` version in use at the time could not handle the pattern in question.

With every new release, `mypy` gets better at handling complex code. In addition to fixing all the previously accepted but now failing patterns, we should also revisit all `type: ignore` comments to see whether they are still needed. Fortunately, we don't need to do this manually: with `warn_unused_ignores = True` in the configuration, `mypy` errors out whenever it encounters a `type: ignore` that is no longer needed.
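As a minimal illustration (the function below is hypothetical and not taken from the PyTorch code base), consider a leftover ignore on a line that type-checks cleanly. Assuming `warn_unused_ignores = True` is set in the `[mypy]` section of the configuration file, `mypy` would report this comment as unused instead of silently accepting it:

```python
# Assumed mypy configuration (e.g. in mypy.ini or setup.cfg):
#   [mypy]
#   warn_unused_ignores = True


def parse_port(raw: str) -> int:
    # Hypothetical leftover: this line has no type error, so the ignore
    # comment suppresses nothing and mypy flags it as unused.
    return int(raw)  # type: ignore


print(parse_port("8080"))
```

The code runs the same with or without the comment; only the type-checking result changes.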

Pull Request resolved: https://github.com/pytorch/pytorch/pull/60006

Reviewed By: jbschlosser, malfet

Differential Revision: D29133237

Pulled By: albanD

fbshipit-source-id: 41e82edc5cd5affa7ccedad044b59b94dad4425a

Summary:

Like the title says. The OpInfo pattern can be confusing when first encountered, so this note links the Developer Wiki and tracking issue, plus elaborates on the goals and structure of the OpInfo pattern.

cc imaginary-person, who I can't add as a reviewer, unfortunately

Pull Request resolved: https://github.com/pytorch/pytorch/pull/57428

Reviewed By: SplitInfinity

Differential Revision: D29221874

Pulled By: mruberry

fbshipit-source-id: aa73228748c9c96eadf2b2397a8b2ec31383971e

Summary:

Simplifies the OpInfo dtype tests and produces nicer error messages, like:

```
AssertionError: Items in the first set but not the second:
torch.bfloat16
Items in the second set but not the first:
torch.int64 : Attempted to compare [set] types: Expected: {torch.float64, torch.float32, torch.float16, torch.bfloat16}; Actual: {torch.float64, torch.float32, torch.float16, torch.int64}.
The supported dtypes for logcumsumexp on cuda according to its OpInfo are
{torch.float64, torch.float32, torch.float16, torch.int64}, but the detected supported dtypes are {torch.float64, torch.float32, torch.float16, torch.bfloat16}.
The following dtypes should be added to the OpInfo: {torch.bfloat16}. The following dtypes should be removed from the OpInfo: {torch.int64}.
```
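The kind of set comparison behind such a message can be sketched as follows. This is only an illustration under stated assumptions: `describe_dtype_mismatch` is a hypothetical helper, not the function used in the test suite, and dtypes are represented by plain strings so that the snippet runs without PyTorch.

```python
def describe_dtype_mismatch(op_name, device, claimed, detected):
    """Return a message describing how the claimed dtypes differ from the
    detected ones, or None if both sets agree. (Hypothetical helper.)"""
    claimed, detected = set(claimed), set(detected)
    to_add = detected - claimed      # supported in practice, missing from the OpInfo
    to_remove = claimed - detected   # listed in the OpInfo, not supported in practice
    if not to_add and not to_remove:
        return None
    parts = [
        f"The supported dtypes for {op_name} on {device} according to its "
        f"OpInfo are {sorted(claimed)}, but the detected supported dtypes "
        f"are {sorted(detected)}."
    ]
    if to_add:
        parts.append(f"The following dtypes should be added to the OpInfo: {sorted(to_add)}.")
    if to_remove:
        parts.append(f"The following dtypes should be removed from the OpInfo: {sorted(to_remove)}.")
    return " ".join(parts)


# Strings stand in for torch dtypes in this sketch.
claimed = {"float64", "float32", "float16", "int64"}
detected = {"float64", "float32", "float16", "bfloat16"}
print(describe_dtype_mismatch("logcumsumexp", "cuda", claimed, detected))
```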

Pull Request resolved: https://github.com/pytorch/pytorch/pull/60157

Reviewed By: ngimel

Differential Revision: D29188665

Pulled By: mruberry

fbshipit-source-id: e84c9892c6040ea47adb027cfef3a6c0fd2f9f3c

Summary:

Fixes https://github.com/pytorch/pytorch/issues/3025

## Background

This PR implements a function similar to numpy's [`isin()`](https://numpy.org/doc/stable/reference/generated/numpy.isin.html#numpy.isin).

The op supports integral and floating point types on CPU and CUDA (+ half & bfloat16 for CUDA). Inputs can be one of:

* (Tensor, Tensor)

* (Tensor, Scalar)

* (Scalar, Tensor)

Internally, one of two algorithms is selected based on the number of elements relative to the number of test elements. The heuristic for deciding which algorithm to use is taken from [numpy's implementation](fb215c7696/numpy/lib/arraysetops.py (L575)): if `len(test_elements) < 10 * len(elements) ** 0.145`, then a naive brute-force checking algorithm is used. Otherwise, a stable-sort-based algorithm is used.

I've done some preliminary benchmarking to verify this heuristic on a devgpu, and determined for a limited set of tests that an exponent of `0.407` instead of `0.145` gives a better inflection point. For now, the heuristic has been left to match numpy's, but input is welcome on the best way to select it or on whether it should stay the same as numpy's.
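The selection logic can be sketched in plain Python as below. This is an illustration only, not the kernel code from this PR: all function names are hypothetical, and the sorted path uses a simple sort-plus-binary-search in place of the stable-sort-based algorithm described above.

```python
from bisect import bisect_left


def use_brute_force(num_elements, num_test_elements, exponent=0.145):
    # Heuristic quoted above: few test elements -> brute force.
    return num_test_elements < 10 * num_elements ** exponent


def isin_brute_force(elements, test_elements):
    # Compare every element against every test element.
    return [any(e == t for t in test_elements) for e in elements]


def isin_sorted(elements, test_elements):
    # Simplified stand-in for the sort-based path: sort the test elements
    # once, then binary-search each element.
    sorted_tests = sorted(test_elements)
    result = []
    for e in elements:
        i = bisect_left(sorted_tests, e)
        result.append(i < len(sorted_tests) and sorted_tests[i] == e)
    return result


def isin(elements, test_elements):
    if use_brute_force(len(elements), len(test_elements)):
        return isin_brute_force(elements, test_elements)
    return isin_sorted(elements, test_elements)


print(isin([1, 2, 3, 4], [2, 4]))
```

Passing `exponent=0.407` to `use_brute_force` shows how the alternative inflection point would shift the choice toward brute force for larger test sets.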

Tests are adapted from numpy's [isin and in1d tests](7dcd29aaaf/numpy/lib/tests/test_arraysetops.py).

Note: my locally generated docs look terrible for some reason, so I'm not including the screenshot for them until I figure out why.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53125

Test Plan:

```
python test/test_ops.py # Ex: python test/test_ops.py TestOpInfoCPU.test_supported_dtypes_isin_cpu_int32
python test/test_sort_and_select.py # Ex: python test/test_sort_and_select.py TestSortAndSelectCPU.test_isin_cpu_int32
```

Reviewed By: soulitzer

Differential Revision: D29101165

Pulled By: jbschlosser

fbshipit-source-id: 2dcc38d497b1e843f73f332d837081e819454b4e

Summary:

Based on https://github.com/pytorch/pytorch/pull/50466

Adds the initial implementation of `torch.cov`, similar to `numpy.cov`. For simplicity, we removed support for many `numpy.cov` parameters that are either redundant, such as `bias`, or have simple workarounds, such as `y` and `rowvar`.
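The workarounds mentioned above can be illustrated with a small pure-Python covariance sketch. Everything here is an assumption made for illustration: `cov_sketch` and `transpose` are hypothetical helpers rather than the `torch.cov` implementation, and the `correction` parameter (the value subtracted from the sample count in the denominator) is assumed to play the role that `bias` and `ddof` play in `numpy.cov`.

```python
def cov_sketch(rows, correction=1):
    """Covariance matrix where each row is a variable and each column an
    observation. Hypothetical pure-Python stand-in, not torch.cov itself."""
    n = len(rows[0])
    means = [sum(r) / n for r in rows]
    centered = [[v - m for v in r] for r, m in zip(rows, means)]
    denom = n - correction
    return [
        [sum(a * b for a, b in zip(ri, rj)) / denom for rj in centered]
        for ri in centered
    ]


def transpose(matrix):
    return [list(col) for col in zip(*matrix)]


x = [[1.0, 2.0, 3.0]]
y = [[2.0, 4.0, 6.0]]

# Workaround for `y`: stack the extra variables as additional rows.
print(cov_sketch(x + y))

# Workaround for `rowvar=False`: transpose so that variables become rows.
observations = [[1.0, 2.0], [2.0, 4.0], [3.0, 6.0]]
print(cov_sketch(transpose(observations)))

# `bias=True` corresponds to a correction of 0 in this sketch.
print(cov_sketch(x, correction=0))
```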

cc PandaBoi

TODO

- [x] Improve documentation

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58311

Reviewed By: mruberry

Differential Revision: D28994140

Pulled By: heitorschueroff

fbshipit-source-id: 1890166c0a9c01e0a536acd91571cd704d632f44

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59711

This is the exact same PR as before.

It was reverted because the PR below was faulty.

Test Plan: Imported from OSS

Reviewed By: zou3519

Differential Revision: D28995762

Pulled By: albanD

fbshipit-source-id: 65940ad93bced9b5f97106709d603d1cd7260812

Summary:

Related issue: https://github.com/pytorch/pytorch/issues/58833

__changes__

- slowpath tests: pass tensors of every dtype and device, and compare the behavior with the regular functions, including the in-place variants

- check the number of `cudaLaunchKernel` calls

- rename `ForeachUnaryFuncInfo` -> `ForeachFuncInfo`: This change is mainly for the future binary/pointwise test refactors

cc: ngimel ptrblck mcarilli

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58960

Reviewed By: ejguan

Differential Revision: D28926135

Pulled By: ngimel

fbshipit-source-id: 4eb21dcebbffffaf79259e31961626e0707fb8d1

Summary:

Echo on https://github.com/pytorch/pytorch/pull/58260#discussion_r637467625

Similar to `test_unsupported_dtype`, which only checks that an exception is raised on the first sample, we should do the same for `unsupported_backward`. The goal of both tests is to remind developers to:

1. add a new dtype to the supported list if it is fully runnable without failure (over all samples)

2. replace the skip mechanism, which would otherwise ignore tests indefinitely without warning
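The "fail on the first sample" check described above can be sketched as follows. This is a simplified illustration, not the test from this PR: `assert_fails_on_first_sample` and `float_only_op` are hypothetical names, and plain Python values stand in for sample inputs.

```python
def assert_fails_on_first_sample(fn, samples, op_name, dtype):
    """Check that `fn` raises on the first sample only. If it succeeds,
    the dtype is probably supported and should be added to the list.
    (Hypothetical helper for illustration.)"""
    first = list(samples)[0]
    try:
        fn(first)
    except Exception:
        return  # expected: the dtype really is unsupported
    raise AssertionError(
        f"{op_name} unexpectedly succeeded for {dtype}; "
        "consider adding it to the supported dtypes"
    )


def float_only_op(x):
    # Toy operation that rejects anything but floats.
    if not isinstance(x, float):
        raise TypeError("float_only_op expects float inputs")
    return x * 2.0


# Passes silently: integers are unsupported, so the first sample raises.
assert_fails_on_first_sample(float_only_op, [1, 2, 3], "float_only_op", "int64")
```

Calling the helper with float samples instead would raise an `AssertionError`, which is the reminder to update the supported list.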

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59455

Test Plan: CI.

Reviewed By: mruberry

Differential Revision: D28927169

Pulled By: walterddr

fbshipit-source-id: 2993649fc17a925fa331e27c8ccdd9b24dd22c20

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59553

Added a test for 0x0 sparse coo input for sparse_unary_ufuncs.

This test fails for `conj` on master.

Modified `unsupportedTypes` for test_sparse_consistency, complex dtypes pass, but float16 doesn't pass for `conj` because `to_dense()` doesn't work with float16.

Fixes https://github.com/pytorch/pytorch/issues/59549

Test Plan: Imported from OSS

Reviewed By: jbschlosser

Differential Revision: D28968215

Pulled By: anjali411

fbshipit-source-id: 44e99f0ce4aa45b760d79995a021e6139f064fea

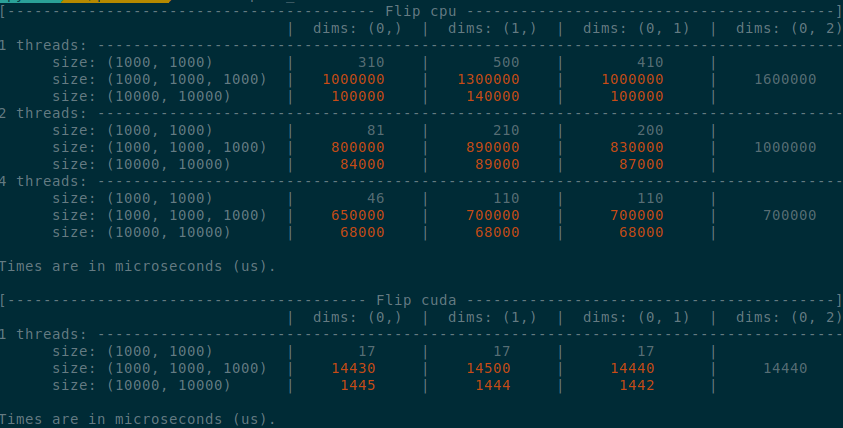

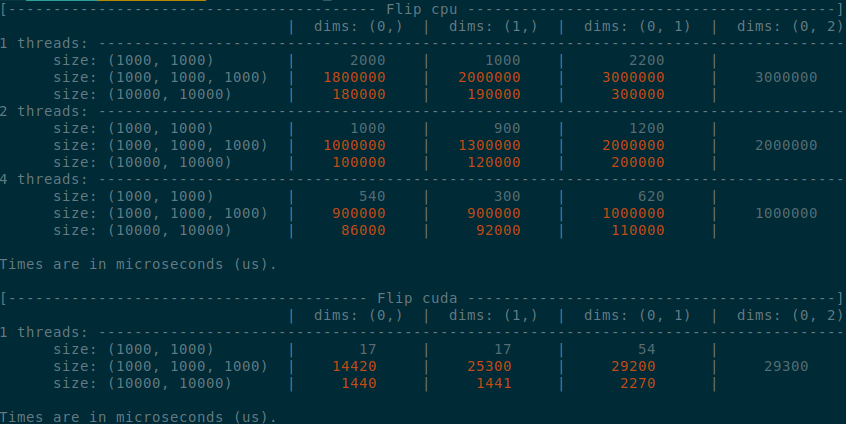

Summary:

Implements an idea by ngimel to improve the performance of `torch.flip` via a clever hack into `TensorIterator` (TI) to bypass the fact that TI is not designed to work with negative indices.

Something that might be added is vectorisation support on CPU, given how simple the implementation is now.

Some low-hanging fruit that I did not implement:

- Write it as a structured kernel

- Migrate the tests to opinfos

- Have a look at `cumsum_backward` and `cumprod_backward`, as I think that they could be implemented faster with `flip`, now that `flip` is fast.
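To make the last item concrete: the gradient of a cumulative sum is a reversed cumulative sum of the incoming gradient, which can be written with two flips. The sketch below uses plain Python lists and hypothetical helper names; it illustrates the identity only and is not the existing `cumsum_backward` code.

```python
from itertools import accumulate


def flip(xs):
    return xs[::-1]


def cumsum(xs):
    return list(accumulate(xs))


def cumsum_backward(grad_output):
    # d(loss)/d(x_j) = sum of grad_output[i] over all i >= j,
    # i.e. a cumulative sum taken from the end of the sequence.
    return flip(cumsum(flip(grad_output)))


print(cumsum_backward([1.0, 2.0, 3.0]))
```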

**Edit**

This operation already has OpInfos, and it cannot be migrated to a structured kernel because it implements quantisation.

Summary of the PR:

- x1.5-3 performance boost on CPU

- x1.5-2 performance boost on CUDA

- Comparable performance across dimensions, regardless of the strides (thanks TI)

- Simpler code

<details>

<summary>

Test Script

</summary>

```python
from itertools import product

import torch
from torch.utils.benchmark import Compare, Timer


def get_timer(size, dims, num_threads, device):
    # Benchmark torch.flip over `dims` on a random tensor of the given size.
    x = torch.rand(*size, device=device)

    timer = Timer(
        "torch.flip(x, dims=dims)",
        globals={"x": x, "dims": dims},
        label=f"Flip {device}",
        description=f"dims: {dims}",
        sub_label=f"size: {size}",
        num_threads=num_threads,
    )

    return timer.blocked_autorange(min_run_time=5)


def get_params():
    # Yield every (size, dims, num_threads, device) combination to measure.
    sizes = ((1000,)*2, (1000,)*3, (10000,)*2)
    for size, device in product(sizes, ("cpu", "cuda")):
        threads = (1, 2, 4) if device == "cpu" else (1,)
        list_dims = [(0,), (1,), (0, 1)]
        if len(size) == 3:
            list_dims.append((0, 2))
        for num_threads, dims in product(threads, list_dims):
            yield size, dims, num_threads, device


def compare():
    # Collect all measurements and print them as one comparison table.
    compare = Compare([get_timer(*params) for params in get_params()])
    compare.trim_significant_figures()
    compare.colorize()
    compare.print()


compare()
```

</details>

<details>

<summary>

Benchmark PR

</summary>

</details>

<details>

<summary>

Benchmark master

</summary>

</details>

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58747

Reviewed By: agolynski

Differential Revision: D28877076

Pulled By: ngimel

fbshipit-source-id: 4fa6eb519085950176cb3a9161eeb3b6289ec575

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/54987

Based on the prototypes by ezyang (https://github.com/pytorch/pytorch/pull/44799) and bdhirsh (https://github.com/pytorch/pytorch/pull/43702).

Here's a summary of the changes in this PR:

This PR adds a new dispatch key called Conjugate. This enables us to make the conjugate operation a view and to leverage the specialized library functions that have a fast path for the Hermitian operation (conj + transpose).

1. The conjugate operation now returns a view with the conj bit set (1) for complex tensors, and returns self for non-complex tensors as before. This also means `torch.view_as_real` can no longer be a view on conjugated complex tensors and is hence disabled for them. To fill the gap, we have added `torch.view_as_real_physical`, which returns the real tensor regardless of the conjugate bit on the input complex tensor. The information about conjugation on the old tensor can be obtained by calling `.is_conj()` on the new tensor.

2. NEW API:

a) `.conj()` -- now returning a view.

b) `.conj_physical()` -- does the physical conjugate operation. If the conj bit for input was set, you'd get `self.clone()`, else you'll get a new tensor with conjugated value in its memory.

c) `.conj_physical_()`, and `out=` variant

d) `.resolve_conj()` -- materializes the conjugation. returns self if the conj bit is unset, else returns a new tensor with conjugated values and conj bit set to 0.

e) `.resolve_conj_()` in-place version of (d)

f) `view_as_real_physical` -- as described in (1), it's functionally same as `view_as_real`, just that it doesn't error out on conjugated tensors.

g) `view_as_real` -- existing function, but now errors out on conjugated tensors.

3. Conjugate Fallback

a) The vast majority of PyTorch functions currently use this fallback when they are called on a conjugated tensor.

b) This fallback is well equipped to handle the following cases:

- functional operation e.g., `torch.sin(input)`

- Mutable inputs and in-place operations e.g., `tensor.add_(2)`

- `out=` operations e.g., `torch.sin(input, out=out)`

- Tensorlist input args

- NOTE: Meta tensors don't work with conjugate fallback.

4. Autograd

a) `resolve_conj()` is an identity function w.r.t. autograd

b) Everything else works as expected.

5. Testing:

a) All method_tests run with conjugate view tensors.

b) OpInfo tests that run with conjugate views

- test_variant_consistency_eager/jit

- gradcheck, gradgradcheck

- test_conj_views (that only run for `torch.cfloat` dtype)

NOTE: functions like `empty_like`, `zeros_like`, `randn_like`, `clone` don't propagate the conjugate bit.
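The view semantics described above can be illustrated with a toy model in pure Python. This is a sketch under stated assumptions, not PyTorch's implementation: `LazyConjTensor` is a hypothetical class that only mimics the behaviour of `.conj()`, `.is_conj()` and `.resolve_conj()` as listed in this summary, using a shared Python list as storage.

```python
class LazyConjTensor:
    """Toy stand-in for a complex tensor carrying a lazy conjugate bit."""

    def __init__(self, storage, conj_bit=False):
        self.storage = storage    # list of complex values, shared between views
        self.conj_bit = conj_bit  # True means "read the values as conjugated"

    def is_conj(self):
        return self.conj_bit

    def conj(self):
        # A view: same storage, only the bit is flipped. Nothing is copied.
        return LazyConjTensor(self.storage, not self.conj_bit)

    def resolve_conj(self):
        # Materialize the conjugation: return self if the bit is unset,
        # otherwise a new object with conjugated values and the bit cleared.
        if not self.conj_bit:
            return self
        return LazyConjTensor([z.conjugate() for z in self.storage], False)

    def tolist(self):
        # Logical values, taking the conjugate bit into account.
        if self.conj_bit:
            return [z.conjugate() for z in self.storage]
        return list(self.storage)


x = LazyConjTensor([1 + 2j, 3 - 4j])
y = x.conj()                 # shares storage with x, conj bit set
z = y.resolve_conj()         # new storage, conj bit cleared
print(y.is_conj(), y.tolist())
print(z.is_conj(), z.storage)
```

Because `y` shares storage with `x`, a write to `x.storage` is visible through `y`, which is what makes the operation a view rather than a copy.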

Follow up work:

1. conjugate view RFC

2. Add neg bit to re-enable view operation on conjugated tensors

3. Update linalg functions to call into specialized functions that fast path with the hermitian operation.

Test Plan: Imported from OSS

Reviewed By: VitalyFedyunin

Differential Revision: D28227315

Pulled By: anjali411

fbshipit-source-id: acab9402b9d6a970c6d512809b627a290c8def5f

Summary:

`sample_inputs_diff` constructs all five positional arguments for [diff](https://pytorch.org/docs/stable/generated/torch.diff.html) but uses only the first three. This doesn't seem to be intentional.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59181

Test Plan: This change expands coverage of diff's OpInfo sample inputs. Related tests still pass.

Reviewed By: mruberry

Differential Revision: D28878359

Pulled By: saketh-are

fbshipit-source-id: 1466f6c6c341490885c85bc6271ad8b3bcdf3a3e

Summary:

Resubmit of https://github.com/pytorch/pytorch/issues/59108, closes https://github.com/pytorch/pytorch/issues/24754, closes https://github.com/pytorch/pytorch/issues/24616

This reuses `linalg_vector_norm` to calculate the norms. I just add a new kernel that turns the norm into a normalization factor, then multiply the original tensor using a normal broadcasted `mul` operator. The result is less code, and better performance to boot.

#### Benchmarks (CPU):

| Shape        | Dim | Before  | After (1 thread) | After (8 threads) |
|:------------:|:---:|--------:|-----------------:|------------------:|
| (10, 10, 10) | 0   | 11.6 us | 4.2 us           | 4.2 us            |
|              | 1   | 14.3 us | 5.2 us           | 5.2 us            |
|              | 2   | 12.7 us | 4.6 us           | 4.6 us            |
| (50, 50, 50) | 0   | 330 us  | 120 us           | 24.4 us           |
|              | 1   | 350 us  | 135 us           | 28.2 us           |
|              | 2   | 417 us  | 130 us           | 24.4 us           |

#### Benchmarks (CUDA)

| Shape        | Dim | Before  | After   |
|:------------:|:---:|--------:|--------:|
| (10, 10, 10) | 0   | 12.5 us | 12.1 us |
|              | 1   | 13.1 us | 12.2 us |
|              | 2   | 13.1 us | 11.8 us |
| (50, 50, 50) | 0   | 33.7 us | 11.6 us |
|              | 1   | 36.5 us | 15.8 us |
|              | 2   | 41.1 us | 15 us   |

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59250

Reviewed By: mruberry

Differential Revision: D28820359

Pulled By: ngimel

fbshipit-source-id: 572486adabac8135d52a9b8700f9d145c2a4ed45

Summary:

Fixes https://github.com/pytorch/pytorch/issues/57508

Earlier, a few CUDA `gradgrad` checks (see the list of ops below) were disabled because they were too slow. There have been improvements since (see https://github.com/pytorch/pytorch/issues/57508 for reference), and this PR aims to:

1. Measure the time taken by the `gradgrad` checks on CUDA for the ops listed below.

2. Enable the tests again if the times look reasonable.

Ops considered: `addbmm, baddbmm, bmm, cholesky, symeig, inverse, linalg.cholesky, linalg.cholesky_ex, linalg.eigh, linalg.qr, lu, qr, solve, triangular_solve, linalg.pinv, svd, linalg.svd, pinverse, linalg.householder_product, linalg.solve`.

For numbers (on time taken) on a separate CI run: https://github.com/pytorch/pytorch/pull/57802#issuecomment-836169691.

cc: mruberry albanD pmeier

Pull Request resolved: https://github.com/pytorch/pytorch/pull/57802

Reviewed By: ngimel

Differential Revision: D28784106

Pulled By: mruberry

fbshipit-source-id: 9b15238319f143c59f83d500e831d66d98542ff8