Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/75605

Usecase: Milan models have multiple backends and need to use static dispatch to save on static initialization time and to hit native functions directly from the unboxed APIs.

This change passes in `List[BackendIndex]` and adds the ability to generate code for multiple static backends with 1 or 0 kernels.
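A minimal sketch of the multi-backend case, written in Python because the codegen is Python. It reads "1 or 0 kernels" as "each operator has at most one kernel among the listed backends", which is an interpretation. The function name `static_dispatch_body` and the dict standing in for `List[BackendIndex]` are hypothetical, and the lowercased `at::<backend>::` namespace is assumed from the `at::cpu::` convention.

```python
from typing import Dict, List, Set


def static_dispatch_body(op: str, args: List[str], backends: Dict[str, Set[str]]) -> str:
    """Return one C++ statement for `op`.

    `backends` maps a backend name to the set of operators it has a kernel for
    (a hypothetical stand-in for List[BackendIndex]).
    """
    # Keep only the backends that actually have a kernel for this operator.
    hits = [name for name, ops in backends.items() if op in ops]
    if len(hits) == 1:
        # Exactly one kernel: call it directly, bypassing the dispatcher.
        return f"return at::{hits[0].lower()}::{op}({', '.join(args)});"
    if not hits:
        # No kernel in any static backend: fail loudly at runtime.
        names = ", ".join(backends)
        return f'TORCH_CHECK(false, "Static dispatch does not support {op} for {names}.");'
    # More than one kernel is outside the "1 or 0 kernels" case sketched here.
    raise NotImplementedError(f"{op} has kernels in several backends: {hits}")
```

For example, with `{"CPU": {"add"}, "QuantizedCPU": {"quantize_per_tensor"}}`, the operator `add` resolves to a direct `at::cpu::add(...)` call, while an operator present in neither set gets the `TORCH_CHECK` fallback.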

ghstack-source-id: 154525738

(Note: this ignores all push blocking failures!)

Test Plan:

Builds lite_predictor_flatbuffer with multiple backends

```

buck build --config pt.enable_lightweight_dispatch=1 --config pt.static_dispatch_backend=CPU,QuantizedCPU,CompositeExplicitAutograd //xplat/caffe2/fb/lite_predictor:lite_predictor_flatbuffer

```

Reviewed By: larryliu0820

Differential Revision: D35510644

fbshipit-source-id: f985718ad066f8578b006b4759c4a3bd6caac176

(cherry picked from commit a6999729c8cc26c54b8d5684f6585d6c50d8d913)

Summary:

RFC: https://github.com/pytorch/rfcs/pull/40

This PR (re)introduces python codegen for unboxing wrappers. Given an entry of `native_functions.yaml` the codegen should be able to generate the corresponding C++ code to convert ivalues from the stack to their proper types. To trigger the codegen, run

```

tools/jit/gen_unboxing.py -d cg/torch/share/ATen

```
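To illustrate what "convert ivalues from the stack to their proper types" could look like, here is a rough Python sketch that emits a C++ unboxing wrapper from a list of typed arguments. The function `gen_unboxing_wrapper` and its input format are hypothetical, and the emitted C++ is a simplified illustration, not the exact output of `gen_unboxing.py`.

```python
from typing import List, Tuple


def gen_unboxing_wrapper(op: str, args: List[Tuple[str, str]]) -> str:
    """Emit a C++ function that unboxes `args` from the stack and calls at::<op>.

    `args` is a list of (C++ type, argument name) pairs; in the real codegen
    this information would come from an entry of native_functions.yaml.
    """
    n = len(args)
    lines = [f"void {op}(Stack& stack) {{"]
    for i, (cpp_type, name) in enumerate(args):
        # Read the i-th of the last n IValues and convert it to its C++ type.
        lines.append(f"  {cpp_type} {name} = peek(stack, {i}, {n}).to<{cpp_type}>();")
    # Pop the consumed arguments, then push the result back onto the stack.
    lines.append(f"  drop(stack, {n});")
    call_args = ", ".join(name for _, name in args)
    lines.append(f"  pack(stack, at::{op}({call_args}));")
    lines.append("}")
    return "\n".join(lines)


print(gen_unboxing_wrapper(
    "add", [("at::Tensor", "self"), ("at::Tensor", "other"), ("at::Scalar", "alpha")]
))
```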

Merged changes on CI test. In https://github.com/pytorch/pytorch/issues/71782 I added an e2e test for static dispatch + codegen unboxing. The test exports a mobile model of mobilenetv2, then loads and runs it on a new binary for the lite interpreter: `test/mobile/custom_build/lite_predictor.cpp`.

## Lite predictor build specifics

1. Codegen: `gen.py` generates `RegisterCPU.cpp` and `RegisterSchema.cpp`. With this PR, once `static_dispatch` mode is enabled, `gen.py` will not generate `TORCH_LIBRARY` API calls in those cpp files, and hence avoids interaction with the dispatcher. Once `USE_LIGHTWEIGHT_DISPATCH` is turned on, `cmake/Codegen.cmake` calls `gen_unboxing.py`, which generates `UnboxingFunctions.h`, `UnboxingFunctions_[0-4].cpp` and `RegisterCodegenUnboxedKernels_[0-4].cpp`.

2. Build: `USE_LIGHTWEIGHT_DISPATCH` adds generated sources into `all_cpu_cpp` in `aten/src/ATen/CMakeLists.txt`. All other files remain unchanged. In reality none of the `Operators_[0-4].cpp` files are necessary, but we can rely on the linker to strip them.

## Current CI job test coverage update

Created a new CI job `linux-xenial-py3-clang5-mobile-lightweight-dispatch-build` that enables the following build options:

* `USE_LIGHTWEIGHT_DISPATCH=1`

* `BUILD_LITE_INTERPRETER=1`

* `STATIC_DISPATCH_BACKEND=CPU`

This job triggers `test/mobile/lightweight_dispatch/build.sh` and builds `libtorch`. Then the script runs C++ tests written in `test_lightweight_dispatch.cpp` and `test_codegen_unboxing.cpp`. Recent commits added tests to cover as many C++ argument types as possible: in `build.sh` we install the PyTorch Python API so that we can export test models in `tests_setup.py`. Then we run the C++ test binary to run these models on the lightweight-dispatch-enabled runtime.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/69881

Reviewed By: iseeyuan

Differential Revision: D33692299

Pulled By: larryliu0820

fbshipit-source-id: 211e59f2364100703359b4a3d2ab48ca5155a023

(cherry picked from commit 58e1c9a25e3d1b5b656282cf3ac2f548d98d530b)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/65851

Design doc: https://docs.google.com/document/d/12rtlHnPUpaJ-I52Iob3L0WA3rKRr_OY7fXqeCvn2MVY/edit

First read the design doc to understand the user syntax. In this PR, we have converted add to use ufunc codegen; most of the cpp changes are deleting the preexisting implementations of add, and ufunc/add.h contains the new implementations in the ufunc format.

The bulk of this PR is in the new codegen machinery. Here's the order to read the files:

* `tools/codegen/model.py`

* Some self-explanatory utility classes: `ScalarType`, `DTYPE_CLASSES`

* New classes for representing ufunc entries in `native_functions.yaml`: `UfuncKey` and `UfuncInnerLoop`, as well as parsing logic for these entries. `UfuncKey` has some unusual entries (e.g., CPUScalar) that don't show up in the documentation; more on these below.

* A predicate `is_ufunc_dispatch_key` for testing which dispatch keys should get automatically generated when an operator opts into ufuncs (CPU and CUDA, for now!)

* `tools/codegen/api/types.py`

* More self-explanatory utility stuff: ScalarTypeToCppMapping mapping ScalarType to CppTypes; Binding.rename for changing the name of a binding (used when we assign constructor variables to member variables inside CUDA functors)

* New VectorizedCType, representing `at::vec::Vectorized<T>`. This is used inside vectorized CPU codegen.

* New `scalar_t` and `opmath_t` BaseCppTypes, representing template parameters that we work with when doing codegen inside ufunc kernel loops (e.g., where you previously had Tensor, now you have `scalar_t`)

* `StructuredImplSignature` represents a `TORCH_IMPL_FUNC` definition, and straightforwardly follows from preexisting `tools.codegen.api.structured`

* `tools/codegen/translate.py` - Yes, we use translate a LOT in this PR. I improved some of the documentation, the only substantive changes are adding two new conversions: given a `scalar_t` or a `const Scalar&`, make it convertible to an `opmath_t`

* `tools/codegen/api/ufunc.py`

* OK, now we're at the meaty stuff. This file represents the calling conventions of three important concepts in ufunc codegen, which we'll describe shortly. All of these APIs are relatively simple, since there aren't any complicated types by the time you get to kernels.

* stubs are the DispatchStub trampolines that CPU kernels use to get to their vectorized versions. They drop all Tensor arguments (as they are in TensorIterator) but otherwise match the structured calling convention

* ufuncs are the inner loop template functions that you wrote in ufunc/add.h which do the actual computation in question. Here, all the Tensors and Scalars have been converted into the computation type (`opmath_t` in CUDA, `scalar_t` in CPU)

* ufunctors are a CUDA-only concept representing functors that take some of their arguments on a host-side constructor, and the rest in the device-side apply. Once again, Tensors and Scalars are converted into the computation type, `opmath_t`, but for clarity all the functions take `scalar_t` as argument (as this is the type that is most salient at the call site). Because the constructor and apply are code generated separately, `ufunctor_arguments` returns a teeny struct `UfunctorBindings`

* `tools/codegen/dest/ufunc.py` - the workhorse. This gets its own section below.

* `tools/codegen/gen.py` - just calling out to the new dest.ufunc implementation to generate UfuncCPU_add.cpp, UfuncCPUKernel_add.cpp and UfuncCUDA_add.cu files per ufunc operator. Each of these files does what you expect (small file that registers kernel and calls stub; CPU implementation; CUDA implementation). There is a new file manager for UfuncCPUKernel files as these need to get replicated by cmake for vectorization. One little trick to avoid recompilation is that we directly replicate code-generated forward declarations in these files, to reduce the number of headers we depend on (this is codegen, we're just doing the preprocessor's job!)

* I'll talk about build system adjustments below.

OK, let's talk about tools/codegen/dest/ufunc.py. This file can be roughly understood in two halves: one for CPU code generation, and the other for CUDA code generation.

**CPU codegen.** Here's roughly what we want to generate:

```

// in UfuncCPU_add.cpp

using add_fn = void (*)(TensorIteratorBase&, const at::Scalar&);

DECLARE_DISPATCH(add_fn, add_stub);

DEFINE_DISPATCH(add_stub);

TORCH_IMPL_FUNC(ufunc_add_CPU)

(const at::Tensor& self, const at::Tensor& other, const at::Scalar& alpha, const at::Tensor& out) {

add_stub(device_type(), *this, alpha);

}

// in UfuncCPUKernel_add.cpp

void add_kernel(TensorIteratorBase& iter, const at::Scalar& alpha) {

at::ScalarType st = iter.common_dtype();

RECORD_KERNEL_FUNCTION_DTYPE("add_stub", st);

switch (st) {

AT_PRIVATE_CASE_TYPE("add_stub", at::ScalarType::Bool, bool, [&]() {

auto _s_alpha = alpha.to<scalar_t>();

cpu_kernel(iter, [=](scalar_t self, scalar_t other) {

return ufunc::add(self, other, _s_alpha);

});

})

AT_PRIVATE_CASE_TYPE(

"add_stub", at::ScalarType::ComplexFloat, c10::complex<float>, [&]() {

auto _s_alpha = alpha.to<scalar_t>();

auto _v_alpha = at::vec::Vectorized<scalar_t>(_s_alpha);

cpu_kernel_vec(

iter,

[=](scalar_t self, scalar_t other) {

return ufunc::add(self, other, _s_alpha);

},

[=](at::vec::Vectorized<scalar_t> self,

at::vec::Vectorized<scalar_t> other) {

return ufunc::add(self, other, _v_alpha);

});

})

...

```

The most interesting change in the generated code is that what previously was an `AT_DISPATCH` macro invocation is now an unrolled loop. This makes it easier to vary behavior per dtype (you can see in this example that the entries for Bool and ComplexFloat differ) without having to add extra conditionals on top.

Otherwise, to generate this code, we have to hop through several successive API changes:

* In TORCH_IMPL_FUNC(ufunc_add_CPU), go from StructuredImplSignature to StubSignature (call the stub). This is normal argument massaging in the classic translate style.

* In add_kernel, go from StubSignature to UfuncSignature. This is nontrivial, because we must do various conversions outside of the inner kernel loop. These conversions are done by hand, setting up the context appropriately, and then the final ufunc call is done using translate. (BTW, I introduce a new convention here, call on a Signature, for code generating a C++ call, and I think we should try to use this convention elsewhere)

The other piece of nontrivial logic is the reindexing by dtype. This reindexing exists because the native_functions.yaml format is indexed by UfuncKey:

```

Generic: add (AllAndComplex, BFloat16, Half)

ScalarOnly: add (Bool)

```

but when we do code generation, we case on dtype first, and then we generate a `cpu_kernel` or `cpu_kernel_vec` call. We also don't care about CUDA code generation (which Generic hits). To do this, we lower these keys into two low-level keys, CPUScalar and CPUVector, which represent the CPU scalar and CPU vectorized ufuncs, respectively (Generic maps to CPUScalar and CPUVector, while ScalarOnly maps to CPUScalar only). Reindexing then gives us:

```

AllAndComplex:

CPUScalar: add

CPUVector: add

Bool:

CPUScalar: add

...

```

which is a good format for code generation, but too wordy to force native_functions.yaml authors to write. Note that when reindexing, it is possible for there to be a conflicting definition for the same dtype; we just define a precedence order and have one override the other, so that it is easy to specialize on a particular dtype if necessary. Also note that because CPUScalar/CPUVector are part of UfuncKey, technically you can manually specify them in native_functions.yaml, although I don't expect this functionality to be used.
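The reindexing described above can be sketched in a few lines of Python. This is an illustration, not the code in `tools/codegen/dest/ufunc.py`: the function name `reindex_by_dtype`, the plain-dict input format, and the precedence order (later keys in `PRECEDENCE` override earlier ones) are assumptions, and dtype classes such as `AllAndComplex` are kept as opaque labels rather than expanded.

```python
from typing import Dict, List, Tuple

# Lowering of user-facing keys to CPU-level keys, as described in the text.
LOWERING: Dict[str, List[str]] = {
    "Generic": ["CPUScalar", "CPUVector"],
    "ScalarOnly": ["CPUScalar"],
}

# Assumed precedence: keys later in this list override earlier ones when two
# entries define the same low-level key for the same dtype.
PRECEDENCE: List[str] = ["Generic", "ScalarOnly"]


def reindex_by_dtype(
    entries: Dict[str, Tuple[str, List[str]]]
) -> Dict[str, Dict[str, str]]:
    """Turn {UfuncKey: (ufunc name, dtypes)} into {dtype: {low-level key: ufunc name}}."""
    result: Dict[str, Dict[str, str]] = {}
    for key in PRECEDENCE:
        if key not in entries:
            continue
        ufunc_name, dtypes = entries[key]
        for dtype in dtypes:
            for low_level_key in LOWERING[key]:
                result.setdefault(dtype, {})[low_level_key] = ufunc_name
    return result


table = reindex_by_dtype({
    "Generic": ("add", ["AllAndComplex", "BFloat16", "Half"]),
    "ScalarOnly": ("add", ["Bool"]),
})
for dtype, loops in table.items():
    print(dtype, loops)
```

Keeping the override rule in a single ordered list makes the "specialize on one dtype" case a matter of adding an entry, rather than adding conditionals to the generator.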

**CUDA codegen.** CUDA code generation has many of the same ideas as CPU codegen, but it needs to know about functors, and stubs are handled slightly differently. Here is what we want to generate:

```

template <typename scalar_t>

struct CUDAFunctorOnSelf_add {

using opmath_t = at::opmath_type<scalar_t>;

opmath_t other_;

opmath_t alpha_;

CUDAFunctorOnSelf_add(opmath_t other, opmath_t alpha)

: other_(other), alpha_(alpha) {}

__device__ scalar_t operator()(scalar_t self) {

return ufunc::add(static_cast<opmath_t>(self), other_, alpha_);

}

};

... two more functors ...

void add_kernel(TensorIteratorBase& iter, const at::Scalar & alpha) {

at::ScalarType st = iter.common_dtype();

RECORD_KERNEL_FUNCTION_DTYPE("ufunc_add_CUDA", st);

switch (st) {

AT_PRIVATE_CASE_TYPE("ufunc_add_CUDA", at::ScalarType::Bool, bool, [&]() {

using opmath_t = at::opmath_type<scalar_t>;

if (false) {

} else if (iter.is_cpu_scalar(1)) {

CUDAFunctorOnOther_add<scalar_t> ufunctor(

iter.scalar_value<opmath_t>(1), (alpha).to<opmath_t>());

iter.remove_operand(1);

gpu_kernel(iter, ufunctor);

} else if (iter.is_cpu_scalar(2)) {

CUDAFunctorOnSelf_add<scalar_t> ufunctor(

iter.scalar_value<opmath_t>(2), (alpha).to<opmath_t>());

iter.remove_operand(2);

gpu_kernel(iter, ufunctor);

} else {

gpu_kernel(iter, CUDAFunctor_add<scalar_t>((alpha).to<opmath_t>()));

}

})

...

REGISTER_DISPATCH(add_stub, &add_kernel);

TORCH_IMPL_FUNC(ufunc_add_CUDA)

(const at::Tensor& self,

const at::Tensor& other,

const at::Scalar& alpha,

const at::Tensor& out) {

add_kernel(*this, alpha);

}

```

The functor business is the bulk of the complexity. Like CPU, we decompose the CUDA implementation into three low-level keys: CUDAFunctor (normal, all CUDA kernels will have this), and CUDAFunctorOnSelf/CUDAFunctorOnOther (these are to support Tensor-Scalar specializations when the Scalar lives on CPU). Both Generic and ScalarOnly provide ufuncs for CUDAFunctor, but for us to also lift these into Tensor-Scalar specializations, the operator itself must be eligible for Tensor-Scalar specialization. At the moment, this is hardcoded to be all binary operators, but in the future we can use tags in native_functions.yaml to disambiguate (or perhaps expand codegen to handle n-ary operators).

The reindexing process not only reassociates ufuncs by dtype, but it also works out if Tensor-Scalar specializations are needed and codegens the ufunctors necessary for the level of specialization here (`compute_ufunc_cuda_functors`). Generating the actual kernel (`compute_ufunc_cuda_dtype_body`) just consists of, for each specialization, constructing the functor and then passing it off to `gpu_kernel`. Most of the hard work is in functor generation, where we take care to make sure `operator()` has the correct input and output types (which `gpu_kernel` uses to arrange for memory accesses to the actual CUDA tensor; if you get these types wrong, your kernel will still work, it will just run very slowly!)

There is one big subtlety with CUDA codegen: this won't work:

```

Generic: add (AllAndComplex, BFloat16, Half)

ScalarOnly: add_bool (Bool)

```

This is because, even though there are separate Generic/ScalarOnly entries, we only generate a single functor to cover ALL dtypes in this case, and the functor has the ufunc name hardcoded into it. You'll get an error if you try to do this; to fix it, just make sure the ufunc is named the same consistently throughout. In the code, you see this because after testing for the short circuit case (when a user provided the functor themselves), we squash all the generic entries together and assert their ufunc names are the same. Hypothetically, if we generated a separate functor per dtype, we could support differently named ufuncs but... why would you do that to yourself. (One piece of nastiness is that the native_functions.yaml syntax doesn't stop you from shooting yourself in the foot.)
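The consistency check described here amounts to collecting the ufunc names across all entries and requiring exactly one. A small Python sketch, with a hypothetical function name and the same plain-dict input format used for illustration only:

```python
from typing import Dict, List, Tuple


def single_ufunc_name(entries: Dict[str, Tuple[str, List[str]]]) -> str:
    """Return the one ufunc name shared by all entries, or raise if they differ.

    `entries` maps a UfuncKey name to (ufunc name, dtypes). A single CUDA
    functor covers all dtypes, so it can only hardcode one ufunc name.
    """
    names = {ufunc_name for ufunc_name, _ in entries.values()}
    if len(names) != 1:
        raise ValueError(
            f"ufunc must be named consistently across entries, got {sorted(names)}"
        )
    return names.pop()


# Consistent naming is accepted.
print(single_ufunc_name({
    "Generic": ("add", ["AllAndComplex", "BFloat16", "Half"]),
    "ScalarOnly": ("add", ["Bool"]),
}))
```

Passing the `add` / `add_bool` pair from the example above raises `ValueError` instead of silently generating a functor that calls the wrong ufunc.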

A brief word about CUDA stubs: technically, they are not necessary, as there is no CPU/CPUKernel style split for CUDA kernels (so, if you look, structured impl actually calls add_kernel directly). However, there is some code that still makes use of CUDA stubs (in particular, I use the stub to conveniently reimplement sub in terms of add), so we still register it. This might be worth frying some more at a later point in time.

**Build system changes.** If you are at FB, you should review these changes in fbcode, as there are several changes in files that are not exported to ShipIt.

The build system changes in this patch are substantively complicated by the fact that I have to implement these changes five times:

* OSS cmake build

* OSS Bazel build

* FB fbcode Buck build

* FB xplat Buck build (selective build)

* FB ovrsource Buck build

Due to technical limitations in the xplat Buck build related to selective build, it is required that you list every ufunc header manually (this is done in tools/build_variables.bzl)

The OSS cmake changes are entirely in cmake/Codegen.cmake; there is a new set of files cpu_vec_generated (corresponding to UfuncCPUKernel files) which is wired up in the same way as other files. These files are different because they need to get compiled multiple times under different vectorization settings. I adjust the codegen, slightly refactoring the inner loop into its own function so I can use a different base path calculation depending on whether the file is traditional (in the native/cpu folder) or generated (new stuff from this diff).

The Bazel/Buck changes are organized around tools/build_variables.bzl, which contains the canonical list of ufunc headers (aten_ufunc_headers), and tools/ufunc_defs.bzl (added to ShipIt export list in D34465699), which defines a number of functions that compute the generated cpu, cpu kernel and cuda files based on the headers list. For convenience, these functions take a genpattern (a string with a {} for interpolation) which can be used to easily reformat the list of files in target form, which is commonly needed in the build systems.
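As an illustration of the genpattern idea, here is a sketch in plain Python (the real functions are Skylark in tools/ufunc_defs.bzl). The function names below are hypothetical; only the generated file name shapes (`UfuncCPU_add.cpp`, `UfuncCPUKernel_add.cpp`, `UfuncCUDA_add.cu`) are taken from the description above.

```python
from pathlib import PurePosixPath
from typing import List


def ufunc_names(headers: List[str]) -> List[str]:
    """Map header paths to operator names, e.g. 'ufunc/add.h' -> 'add'."""
    return [PurePosixPath(h).stem for h in headers]


def generated_cpu_sources(headers: List[str], genpattern: str = "{}") -> List[str]:
    return [genpattern.format(f"UfuncCPU_{n}.cpp") for n in ufunc_names(headers)]


def generated_cpu_kernel_sources(headers: List[str], genpattern: str = "{}") -> List[str]:
    return [genpattern.format(f"UfuncCPUKernel_{n}.cpp") for n in ufunc_names(headers)]


def generated_cuda_sources(headers: List[str], genpattern: str = "{}") -> List[str]:
    return [genpattern.format(f"UfuncCUDA_{n}.cu") for n in ufunc_names(headers)]


headers = ["ufunc/add.h"]
print(generated_cpu_sources(headers))                        # bare file names
print(generated_cuda_sources(headers, ":gen_aten[{}]"))      # a target-style form
```

The `":gen_aten[{}]"` pattern is only an example of a target-style string; each build system would pass whatever form its rules expect.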

The split between build_variables.bzl and ufunc_defs.bzl is required because build_variables.bzl is executed by a conventional Python interpreter as part of the OSS cmake, but we require Skylark features to implement the functions in ufunc_defs.bzl (I did some quick Googling but didn't find a lightweight way to run the Skylark interpreter in open source.)

With these new file lists, the rest of the build changes are mostly inserting references to these files wherever necessary; in particular, cpu kernel files have to be worked into the multiple vectorization build flow (intern_build_aten_ops in OSS Bazel). Most of the subtlety relates to selective build. Selective build requires operator files to be copied per overall selective build; as dhruvbird explains to me, glob expansion happens during the action graph phase, but the selective build handling of TEMPLATE_SOURCE_LIST is referencing the target graph. In other words, we can't use a glob to generate deps for another rule, because we need to copy files from wherever (including generated files) to a staging folder so the rules can pick them up.

It can be somewhat confusing to understand which bzl files are associated with which build. Here are the relevant mappings for files I edited:

* Used by everyone - tools/build_variables.bzl, tools/ufunc_defs.bzl

* OSS Bazel - aten.bzl, BUILD.bazel

* FB fbcode Buck - TARGETS

* FB xplat Buck - BUCK, pt_defs.bzl, pt_template_srcs.bzl

* FB ovrsource Buck - ovrsource_defs.bzl, pt_defs.bzl

Note that pt_defs.bzl is used by both xplat and ovrsource. This leads to the "tiresome" handling for enabled backends, as selective build is CPU only, but ovrsource is CPU and CUDA.

BTW, while I was at it, I beefed up fb/build_arvr.sh to also do a CUDA ovrsource build, which was not triggered previously.

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

Test Plan: Imported from OSS

Reviewed By: albanD

Differential Revision: D31306586

Pulled By: ezyang

fbshipit-source-id: 210258ce83f578f79cf91b77bfaeac34945a00c6

(cherry picked from commit d65157b0b894b6701ee062f05a5f57790a06c91c)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/72869

The ordering here doesn't really matter, but in a future patch

I will make a change where vectorized CPU codegen does have to

be here, and moving it ahead of time (with no code changes)

will make the latter diff cleaner.

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

Test Plan: Imported from OSS

Reviewed By: albanD

Differential Revision: D34282229

Pulled By: ezyang

fbshipit-source-id: 3397cb0e062d63cc9853f6248f17c3558013798b

(cherry picked from commit 98c616024969f9df90c7fb09741ed9be7b7a20f1)

Summary:

https://github.com/pytorch/pytorch/issues/66406

Implemented vector SIMD additions for z arch 14/15.

So far, all types other than bfloat have a SIMD implementation.

It has 99% coverage and currently passes the local tests.

It is concise: the main SIMD code lives in a single header file.

It mostly uses template metaprogramming, but a few macros remain, with the intention of not modifying PyTorch much.

Sleef supports z15.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/66407

Reviewed By: mrshenli

Differential Revision: D33370163

Pulled By: malfet

fbshipit-source-id: 0e5a57f31b22a718cd2a9ac59753fb468cdda140

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/68247

This splits `Functions.h`, `Operators.h`, `NativeFunctions.h` and

`NativeMetaFunctions.h` into separate headers per operator base name.

With `at::sum` as an example, we can include:

```cpp

#include <ATen/ops/sum.h>         // Like Functions.h

#include <ATen/ops/sum_ops.h>     // Like Operators.h

#include <ATen/ops/sum_native.h>  // Like NativeFunctions.h

#include <ATen/ops/sum_meta.h>    // Like NativeMetaFunctions.h

```

The umbrella headers are still being generated, but all they do is

include from the `ATen/ops` folder.

Further, `TensorBody.h` now only includes the operators that have

method variants. Which means files that only include `Tensor.h` don't

need to be rebuilt when you modify function-only operators. Currently

there are about 680 operators that don't have method variants, so this

is potentially a significant win for incremental builds.

Test Plan: Imported from OSS

Reviewed By: mrshenli

Differential Revision: D32596272

Pulled By: albanD

fbshipit-source-id: 447671b2b6adc1364f66ed9717c896dae25fa272

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/68246

Currently the codegen produces a list of output files at CMake

configuration time and the build system has no way of knowing if the

outputs change. So if that happens, you basically need to delete the

build folder and re-run from scratch.

Instead, this generates the output list every time the code generation

is run and changes the output to be a `.cmake` file that gets included

in the main cmake configuration step. That means the build system

knows to re-run cmake automatically if a new output is added. So, for

example you could change the number of shards that `Operators.cpp` is

split into and it all just works transparently to the user.
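A rough sketch of the idea, assuming the generator renders the output list as a CMake `set(...)` call and only rewrites the file when its contents change, so that cmake is not re-run needlessly. The function names, the variable name `generated_sources`, and the write-if-changed policy are assumptions for illustration, not a description of the actual codegen.

```python
import os
from typing import List


def render_cmake_list(var: str, paths: List[str]) -> str:
    """Render `paths` as a CMake list variable definition."""
    lines = [f"set({var}"] + [f'    "{p}"' for p in paths] + [")"]
    return "\n".join(lines) + "\n"


def write_if_changed(path: str, content: str) -> bool:
    """Write `content` to `path` unless it already matches; return True if written."""
    if os.path.exists(path):
        with open(path) as f:
            if f.read() == content:
                # Leave the file (and its timestamp) alone.
                return False
    with open(path, "w") as f:
        f.write(content)
    return True


# Changing the number of shards only changes the rendered list.
shards = [f"Operators_{i}.cpp" for i in range(3)]
print(render_cmake_list("generated_sources", shards), end="")
```

The main CMake configuration would then `include()` the written file, so the build system tracks it as a configure-time dependency.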

Test Plan: Imported from OSS

Reviewed By: zou3519

Differential Revision: D32596268

Pulled By: albanD

fbshipit-source-id: 15e0896aeaead90aed64b9c8fda70cf28fef13a2

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/67656

Currently, each cpu kernel file is copied into the build folder 3 times to give them different compilation flags. This changes it to instead generate 3 files that `#include` the original file. The biggest difference is that updating a copied file requires `cmake` to re-run, whereas include dependencies are natively handled by `ninja`.

A side benefit is that included files show up directly in the build dependency graph, whereas `cmake` file copies don't.
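The change can be pictured as follows: instead of copying each kernel source once per capability, generate a tiny wrapper per capability whose only content is an `#include` of the original. This Python sketch is illustrative (the actual logic lives in CMake); the function name, the `<kernel>.<CAPABILITY>.cpp` naming, and the capability list (taken from the AVX512 summary elsewhere in this log) are assumptions.

```python
from typing import Dict, List

# Assumed capability names, based on the default/AVX2/AVX512 kernels
# mentioned in the AVX512 PR summary.
CAPABILITIES: List[str] = ["DEFAULT", "AVX2", "AVX512"]


def wrapper_plan(kernel_source: str, capabilities: List[str] = CAPABILITIES) -> Dict[str, str]:
    """Return {wrapper file name: wrapper contents} for one kernel source.

    Each wrapper is compiled with different flags, but all of them just
    include the original file, so editing the original never requires
    re-running cmake: the include dependency is tracked by the build tool.
    """
    return {
        f"{kernel_source}.{cap}.cpp": f'#include "{kernel_source}"\n'
        for cap in capabilities
    }


for name, content in wrapper_plan("add_kernel.cpp").items():
    print(name, "->", content.strip())
```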

Test Plan: Imported from OSS

Reviewed By: dagitses

Differential Revision: D32566108

Pulled By: malfet

fbshipit-source-id: ae75368fede37e7ca03be6ade3d4e4a63479440d

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/68180

Since we've open sourced the tracing-based selective build, we can deprecate the

op-dependency-graph-based selective build and the static analyzer tool that

produces the dependency graph.

ghstack-source-id: 143108377

Test Plan: CIs

Reviewed By: seemethere

Differential Revision: D32358467

fbshipit-source-id: c61523706b85a49361416da2230ec1b035b8b99c

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/67497

This allows more of the code-generation to happen in parallel, whereas

previously all codegen was serialized.

Test Plan: Imported from OSS

Reviewed By: dagitses, mruberry

Differential Revision: D32027250

Pulled By: albanD

fbshipit-source-id: 6407c4c3e25ad15d542aa73da6ded6a309c8eb6a

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61903

### Remaining Tasks

- [ ] Collate results of benchmarks on two Intel Xeon machines (with & without CUDA, to check if CPU throttling causes issues with GPUs) - make graphs, including Roofline model plots (Intel Advisor can't make them with libgomp, though, but with Intel OpenMP).

### Summary

1. This draft PR produces binaries with 3 types of ATen kernels - default, AVX2, AVX512. Using the environment variable `ATEN_AVX512_256=TRUE` also results in 3 types of kernels, but the compiler can use 32 ymm registers for AVX2, instead of the default 16. ATen kernels for `CPU_CAPABILITY_AVX` have been removed.

2. `nansum` is not using AVX512 kernel right now, as it has poorer accuracy for Float16, than does AVX2 or DEFAULT, whose respective accuracies aren't very good either (#59415).

It was more convenient to disable AVX512 dispatch for all dtypes of `nansum` for now.

3. On Windows, ATen Quantized AVX512 kernels are not being used, as quantization tests are flaky. If `--continue-through-failure` is used, then `test_compare_model_outputs_functional_static` fails. But if this test is skipped, `test_compare_model_outputs_conv_static` fails. If both these tests are skipped, then a third one fails. These are hard to debug right now due to not having access to a Windows machine with AVX512 support, so it was more convenient to disable AVX512 dispatch of all ATen Quantized kernels on Windows for now.

4. One test is currently being skipped -

[`test_lstm` in `quantization.bc`](https://github.com/pytorch/pytorch/issues/59098) - It fails only on Cascade Lake machines, irrespective of the `ATEN_CPU_CAPABILITY` used, because FBGEMM uses `AVX512_VNNI` on machines that support it. The value of `reduce_range` should be set to `False` on such machines.

The list of the changes is at https://gist.github.com/imaginary-person/4b4fda660534f0493bf9573d511a878d.

Credits to ezyang for proposing `AVX512_256` - these use AVX2 intrinsics but benefit from 32 registers, instead of the 16 ymm registers that AVX2 uses.

Credits to limo1996 for the initial proposal, and for optimizing `hsub_pd` & `hadd_pd`, which didn't have direct AVX512 equivalents, and are being used in some kernels. He also refactored `vec/functional.h` to remove duplicated code.

Credits to quickwritereader for helping fix 4 failing complex multiplication & division tests.

### Testing

1. `vec_test_all_types` was modified to test basic AVX512 support, as tests already existed for AVX2.

Only one test had to be modified, as it was hardcoded for AVX2.

2. `pytorch_linux_bionic_py3_8_gcc9_coverage_test1` & `pytorch_linux_bionic_py3_8_gcc9_coverage_test2` are now using `linux.2xlarge` instances, as they support AVX512. They were used for testing AVX512 kernels, as AVX512 kernels are being used by default in both of the CI checks. Windows CI checks had already been using machines with AVX512 support.

### Would the downclocking caused by AVX512 pose an issue?

I think it's important to note that AVX2 causes downclocking as well, and the additional downclocking caused by AVX512 may not hamper performance on some Skylake machines & beyond, because of the double vector-size. I think that [this post with verifiable references is a must-read](https://community.intel.com/t5/Software-Tuning-Performance/Unexpected-power-vs-cores-profile-for-MKL-kernels-on-modern-Xeon/m-p/1133869/highlight/true#M6450). Also, AVX512 would _probably not_ hurt performance on a high-end machine, [but measurements are recommended](https://lemire.me/blog/2018/09/07/avx-512-when-and-how-to-use-these-new-instructions/). In case it does, `ATEN_AVX512_256=TRUE` can be used for building PyTorch, as AVX2 can then use 32 ymm registers instead of the default 16. [FBGEMM uses `AVX512_256` only on Xeon D processors](https://github.com/pytorch/FBGEMM/pull/209), which are said to have poor AVX512 performance.

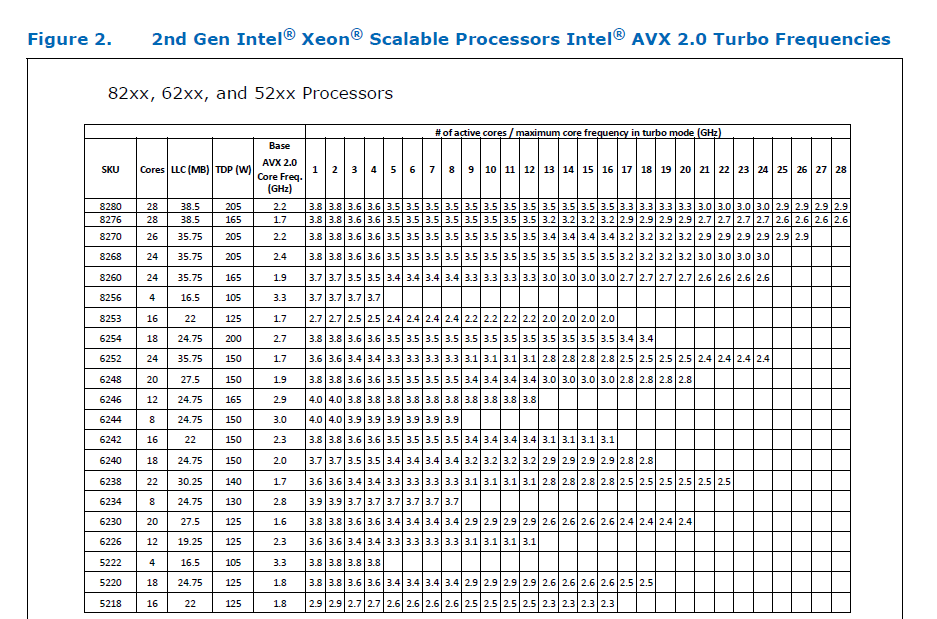

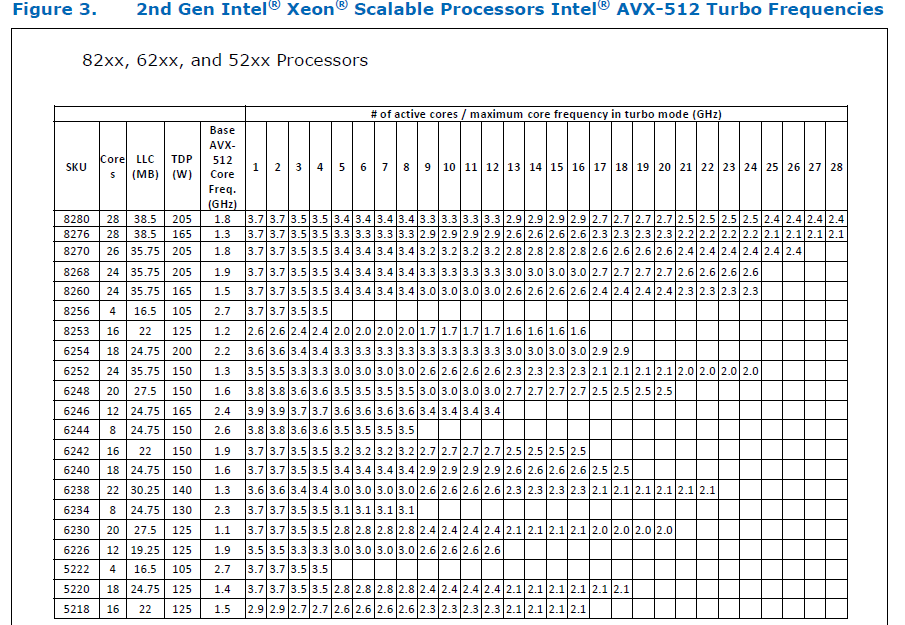

This [official data](https://www.intel.com/content/dam/www/public/us/en/documents/specification-updates/xeon-scalable-spec-update.pdf) is for the Intel Skylake family, and the first link helps understand its significance. Cascade Lake & Ice Lake SP Xeon processors are said to be even better when it comes to AVX512 performance.

Here is the corresponding data for [Cascade Lake](https://cdrdv2.intel.com/v1/dl/getContent/338848) -

The corresponding data isn't publicly available for Intel Xeon SP 3rd gen (Ice Lake SP), but [Intel mentioned that the 3rd gen has frequency improvements pertaining to AVX512](https://newsroom.intel.com/wp-content/uploads/sites/11/2021/04/3rd-Gen-Intel-Xeon-Scalable-Platform-Press-Presentation-281884.pdf). Ice Lake SP machines also have 48 KB L1D caches, so that's another reason for AVX512 performance to be better on them.

### Is PyTorch always faster with AVX512?

No, but then PyTorch is not always faster with AVX2 either. Please refer to #60202. The benefit from vectorization is apparent with small tensors that fit in caches or in kernels that are more compute heavy. For instance, AVX512 or AVX2 would yield no benefit for adding two 64 MB tensors, but adding two 1 MB tensors would do well with AVX2, and even more so with AVX512.

It seems that memory-bound computations, such as adding two 64 MB tensors can be slow with vectorization (depending upon the number of threads used), as the effects of downclocking can then be observed.

Original pull request: https://github.com/pytorch/pytorch/pull/56992

Reviewed By: soulitzer

Differential Revision: D29266289

Pulled By: ezyang

fbshipit-source-id: 2d5e8d1c2307252f22423bbc14f136c67c3e6184

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59573

To do mobile selective build, we have several options:

1. static dispatch;

2. dynamic dispatch + static analysis (to create the dependency graph);

3. dynamic dispatch + tracing;

We are developing 3. For open source, we used to only support 1, and

currently we support both 1 and 2.

This file is only used for 2. It was introduced when we deprecated

the static dispatch (1). The motivation was to make sure we have a

low-friction selective build workflow for dynamic dispatch (2).

As the name indicates, it is the *default* dependency graph that users

can try if they don't bother to run the static analyzer themselves.

We have a CI to run the full workflow of 2 on every PR, which creates

the dependency graph on-the-fly instead of using the committed file.

Since the workflow to automatically update the file has been broken

for a while, it started to confuse other pytorch developers as people

are already manually editing it, and it might be broken for some models

already.

We reintroduced the static dispatch recently, so we decided to deprecate

this file now and automatically turn on static dispatch if users run

selective build without providing the static analysis graph.

The tracing-based selective build will be the ultimate solution we'd

like to provide for OSS, but it will take some more effort to polish

and release.

Differential Revision: D28941020

Test Plan: Imported from OSS

Reviewed By: dhruvbird

Pulled By: ljk53

fbshipit-source-id: 9977ab8568e2cc1bdcdecd3d22e29547ef63889e

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/51957

This is a simplified version of #51554.

Compared to #51554, this version only supports statically dispatching to

a specific backend. The benefit is that it skips the dispatch key

computation logic and thus has less framework overhead. The downside is that

if input tensors do not match the specified backend, it throws an error

instead of falling back to regular dispatch.

Sample code:

```

Tensor empty(IntArrayRef size, TensorOptions options, c10::optional<MemoryFormat> memory_format) {
  return at::cpu::empty(size, options, memory_format);
}

// aten::conj(Tensor(a) self) -> Tensor(a)
Tensor conj(const Tensor & self) {
  return at::math::conj(self);
}

// aten::conj.out(Tensor self, *, Tensor(a!) out) -> Tensor(a!)
Tensor & conj_out(Tensor & out, const Tensor & self) {
  return at::cpu::conj_out(out, self);
}

// aten::conj.out(Tensor self, *, Tensor(a!) out) -> Tensor(a!)
Tensor & conj_outf(const Tensor & self, Tensor & out) {
  return at::cpu::conj_out(out, self);
}

// aten::_conj(Tensor self) -> Tensor
Tensor _conj(const Tensor & self) {
  return at::defaultbackend::_conj(self);
}

```

For ops that have no kernel for the specified backend, the generated function throws an error:

```

// aten::_use_cudnn_ctc_loss(Tensor log_probs, Tensor targets, int[] input_lengths, int[] target_lengths, int blank) -> bool
bool _use_cudnn_ctc_loss(const Tensor & log_probs, const Tensor & targets, IntArrayRef input_lengths, IntArrayRef target_lengths, int64_t blank) {
  TORCH_CHECK(false, "Static dispatch does not support _use_cudnn_ctc_loss for CPU.");
}

```
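The two cases above (direct call versus unconditional error) can be sketched as a small codegen helper. This is only an illustration, not the actual generator: the `KERNEL_NAMESPACE` table and the `static_dispatch_body` function are hypothetical names, and the real codegen derives this information from `native_functions.yaml`.

```python
from typing import Dict, Optional

# Hypothetical table: op name -> C++ namespace that holds its kernel for the
# statically selected backend. None means the backend has no kernel for the op.
KERNEL_NAMESPACE: Dict[str, Optional[str]] = {
    "empty": "at::cpu",
    "conj": "at::math",
    "_use_cudnn_ctc_loss": None,
}


def static_dispatch_body(op: str, call_args: str, backend: str = "CPU") -> str:
    """Return the C++ statement a static-dispatch wrapper for `op` would contain."""
    namespace = KERNEL_NAMESPACE.get(op)
    if namespace is None:
        # No kernel for this backend: emit an unconditional runtime error.
        return (
            f'TORCH_CHECK(false, "Static dispatch does not support {op} for {backend}.");'
        )
    # Kernel exists: call it directly, skipping dispatch key computation.
    return f"return {namespace}::{op}({call_args});"


print(static_dispatch_body("empty", "size, options, memory_format"))
print(static_dispatch_body("_use_cudnn_ctc_loss", "log_probs, targets"))
```

Because the decision is made at codegen time, the generated wrapper contains no branch on the input tensors, which is where both the lower overhead and the lack of a fallback come from.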

Differential Revision: D26337857

Test Plan: Imported from OSS

Reviewed By: bhosmer

Pulled By: ljk53

fbshipit-source-id: a8e95799115c349de3c09f04a26b01d21a679364

Summary:

### Pytorch Vec256 ppc64le support

implemented types:

- double

- float

- int16

- int32

- int64

- qint32

- qint8

- quint8

- complex_float

- complex_double

Notes:

All basic vector operations are implemented.

There are a few known problems:

- NaN propagation in minimum and maximum is missing for ppc64le and was not checked

- complex multiplication, division, sqrt, and abs are implemented the same way as in PyTorch x86. They can overflow and are less precise than the std versions. That's why they were either excluded or tested over a smaller domain range

- the precision of the implemented float math functions

~~Besides, I added CPU_CAPABILITY for power. but as because of quantization errors for DEFAULT I had to undef and use vsx for DEFAULT too~~

#### Details

##### Supported math functions

Legend: `+` means vectorized and `-` means missing. Implementation notes are given in parentheses. Example: `-(both)` means the function is also missing on the x86 side.

`f(func_name)` means the vectorization is built on `func_name`.

`sleef` means the call is redirected to Sleef.

`unsupported` marks a function that is not supported for that type.

| function_name | float | double | complex float | complex double |
| -- | -- | -- | -- | -- |
| acos | sleef | sleef | f(asin) | f(asin) |
| asin | sleef | sleef | +(pytorch impl) | +(pytorch impl) |
| atan | sleef | sleef | f(log) | f(log) |
| atan2 | sleef | sleef | unsupported | unsupported |
| cos | +((ppc64le:avx_mathfun) ) | sleef | -(both) | -(both) |
| cosh | f(exp) | -(both) | -(both) | |
| erf | sleef | sleef | unsupported | unsupported |
| erfc | sleef | sleef | unsupported | unsupported |
| erfinv | - (both) | - (both) | unsupported | unsupported |
| exp | + | sleef | - (x86:f()) | - (x86:f()) |
| expm1 | f(exp) | sleef | unsupported | unsupported |
| lgamma | sleef | sleef | | |
| log | + | sleef | -(both) | -(both) |
| log10 | f(log) | sleef | f(log) | f(log) |
| log1p | f(log) | sleef | unsupported | unsupported |
| log2 | f(log) | sleef | f(log) | f(log) |
| pow | + f(exp) | sleef | -(both) | -(both) |
| sin | +((ppc64le:avx_mathfun) ) | sleef | -(both) | -(both) |
| sinh | f(exp) | sleef | -(both) | -(both) |
| tan | sleef | sleef | -(both) | -(both) |
| tanh | f(exp) | sleef | -(both) | -(both) |
| hypot | sleef | sleef | -(both) | -(both) |
| nextafter | sleef | sleef | -(both) | -(both) |
| fmod | sleef | sleef | -(both) | -(both) |

[Vec256 Test cases Pr https://github.com/pytorch/pytorch/issues/42685](https://github.com/pytorch/pytorch/pull/42685)

Current list:

- [x] Blends

- [x] Memory: UnAlignedLoadStore

- [x] Arithmetics: Plus, Minus, Multiplication, Division

- [x] Bitwise: BitAnd, BitOr, BitXor

- [x] Comparison: Equal, NotEqual, Greater, Less, GreaterEqual, LessEqual

- [x] MinMax: Minimum, Maximum, ClampMin, ClampMax, Clamp

- [x] SignManipulation: Absolute, Negate

- [x] Interleave: Interleave, DeInterleave

- [x] Rounding: Round, Ceil, Floor, Trunc

- [x] Mask: ZeroMask

- [x] SqrtAndReciprocal: Sqrt, RSqrt, Reciprocal

- [x] Trigonometric: Sin, Cos, Tan

- [x] Hyperbolic: Tanh, Sinh, Cosh

- [x] InverseTrigonometric: Asin, ACos, ATan, ATan2

- [x] Logarithm: Log, Log2, Log10, Log1p

- [x] Exponents: Exp, Expm1

- [x] ErrorFunctions: Erf, Erfc, Erfinv

- [x] Pow: Pow

- [x] LGamma: LGamma

- [x] Quantization: quantize, dequantize, requantize_from_int

- [x] Quantization: widening_subtract, relu, relu6

Missing:

- [ ] Constructors, initializations

- [ ] Conversion, Cast

- [ ] Additional: imag, conj, angle (note: imag and conj only checked for float complex)

#### Notes on tests and testing framework

- some math functions are tested only within a domain range

- for the most part, the testing framework compares results against the std implementation on random inputs drawn from the function's domain or, for some math functions, from the implementation's domain.

- some functions are tested against the local version. ~~For example, std::round and vector version of round differs. so it was tested against the local version~~

- round was tested against pytorch at::native::round_impl. ~~for double type on **Vsx vec_round failed for (even)+0.5 values**~~. It was solved by using vec_rint

- ~~**complex types are not tested**~~ **After enabling complex testing, some of the complex functions failed for vsx and x86 avx as well, due to precision and domain issues. I will either test them against a local implementation or check them within the accepted domain**

- ~~quantizations are not tested~~ Added tests for quantize, dequantize, requantize_from_int, relu, relu6, widening_subtract functions

- the testing framework should be improved further

- ~~For now `-DBUILD_MOBILE_TEST=ON` will be used for Vec256Test too~~

Vec256 Test cases will be built for each CPU_CAPABILITY
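To illustrate why a rounding test can fail only on (even)+0.5 inputs, here is a minimal Python sketch of two tie-breaking rules. It assumes the mismatch was ties-away-from-zero on one side versus ties-to-even on the other; that is an assumption made for illustration, not something the notes above state, and both helper names are hypothetical.

```python
import math


def round_half_away(x: float) -> float:
    """Round to nearest, breaking ties away from zero."""
    return math.copysign(math.floor(abs(x) + 0.5), x)


def round_half_even(x: float) -> float:
    """Round to nearest, breaking ties to even (Python's built-in round)."""
    return float(round(x))


# The two rules agree on odd+0.5 inputs such as 1.5 and differ on
# even+0.5 inputs such as 0.5, 2.5 and -2.5.
for x in [0.5, 1.5, 2.5, -2.5]:
    print(x, round_half_away(x), round_half_even(x))
```

A randomized test against the reference would therefore pass almost always and fail only when an input lands exactly on such a tie.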

Pull Request resolved: https://github.com/pytorch/pytorch/pull/41541

Reviewed By: zhangguanheng66

Differential Revision: D23922049

Pulled By: VitalyFedyunin

fbshipit-source-id: bca25110afccecbb362cea57c705f3ce02f26098

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/46938

It turns out that after https://github.com/pytorch/pytorch/pull/42194

landed we no longer actually generate any registrations into this

file. That means it's completely unnecessary.

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

Test Plan: Imported from OSS

Reviewed By: IvanKobzarev

Differential Revision: D24573518

Pulled By: ezyang

fbshipit-source-id: b41ada9e394b780f037f5977596a36b896b5648c

Summary:

I noticed while working on https://github.com/pytorch/pytorch/issues/45163 that edits to python files in the `tools/codegen/api/` directory wouldn't trigger rebuilds. This tells CMake about all of the dependencies, so rebuilds are triggered automatically.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/45275

Reviewed By: zou3519

Differential Revision: D23922805

Pulled By: ezyang

fbshipit-source-id: 0fbf2b6a9b2346c31b9b0384e5ad5e0eb0f70e9b

Summary:

[Tests for Vec256 classes https://github.com/pytorch/pytorch/issues/15676](https://github.com/pytorch/pytorch/issues/15676)

Testing

Current list:

- [x] Blends

- [x] Memory: UnAlignedLoadStore

- [x] Arithmetics: Plus, Minus, Multiplication, Division

- [x] Bitwise: BitAnd, BitOr, BitXor

- [x] Comparison: Equal, NotEqual, Greater, Less, GreaterEqual, LessEqual

- [x] MinMax: Minimum, Maximum, ClampMin, ClampMax, Clamp

- [x] SignManipulation: Absolute, Negate

- [x] Interleave: Interleave, DeInterleave

- [x] Rounding: Round, Ceil, Floor, Trunc

- [x] Mask: ZeroMask

- [x] SqrtAndReciprocal: Sqrt, RSqrt, Reciprocal

- [x] Trigonometric: Sin, Cos, Tan

- [x] Hyperbolic: Tanh, Sinh, Cosh

- [x] InverseTrigonometric: Asin, ACos, ATan, ATan2

- [x] Logarithm: Log, Log2, Log10, Log1p

- [x] Exponents: Exp, Expm1

- [x] ErrorFunctions: Erf, Erfc, Erfinv

- [x] Pow: Pow

- [x] LGamma: LGamma

- [x] Quantization: quantize, dequantize, requantize_from_int

- [x] Quantization: widening_subtract, relu, relu6

Missing:

- [ ] Constructors, initializations

- [ ] Conversion, Cast

- [ ] Additional: imag, conj, angle (note: imag and conj only checked for float complex)

#### Notes on tests and testing framework

- some math functions are tested only within a domain range

- for the most part, the testing framework compares results against the std implementation on random inputs drawn from the function's domain or, for some math functions, from the implementation's domain.

- some functions are tested against the local version. ~~For example, std::round and vector version of round differs. so it was tested against the local version~~

- round was tested against pytorch at::native::round_impl. ~~for double type on **Vsx vec_round failed for (even)+0.5 values**~~. It was solved by using vec_rint

- ~~**complex types are not tested**~~ **After enabling complex testing, some of the complex functions failed for vsx and x86 avx as well, due to precision and domain issues. I will either test them against a local implementation or check them within the accepted domain**

- ~~quantizations are not tested~~ Added tests for quantize, dequantize, requantize_from_int, relu, relu6, widening_subtract functions

- the testing framework should be improved further

- ~~For now `-DBUILD_MOBILE_TEST=ON` will be used for Vec256Test too~~

Vec256 Test cases will be built for each CPU_CAPABILITY

Fixes: https://github.com/pytorch/pytorch/issues/15676

Pull Request resolved: https://github.com/pytorch/pytorch/pull/42685

Reviewed By: malfet

Differential Revision: D23034406

Pulled By: glaringlee

fbshipit-source-id: d1bf03acdfa271c88744c5d0235eeb8b77288ef8

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/42629

How to approach reviewing this diff:

- The new codegen itself lives in `tools/codegen`. Start with `gen.py`, then read `model.py` and then the `api/` folder. The comments at the top of the files describe what is going on. The CLI of the new codegen is similar to the old one, but (1) it is no longer necessary to explicitly specify cwrap inputs (and now we will error if you do so) and (2) the default settings for source and install dir are much better, to the extent that if you run the codegen from the root source directory as just `python -m tools.codegen.gen`, something reasonable will happen.

- The old codegen is (nearly) entirely deleted; every Python file in `aten/src/ATen` was deleted except for `common_with_cwrap.py`, which now permanently finds its home in `tools/shared/cwrap_common.py` (previously cmake copied the file there), and `code_template.py`, which now lives in `tools/codegen/code_template.py`. We remove the copying logic for `common_with_cwrap.py`.

- All of the inputs to the old codegen are deleted.

- Build rules now have to be adjusted to not refer to files that no longer exist, and to abide by the (slightly modified) CLI.

- LegacyTHFunctions files have been generated and checked in. We expect these to be deleted as these final functions get ported to ATen. The deletion process is straightforward: just delete the functions you are porting. There are 39 more functions left to port.

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

Test Plan: Imported from OSS

Reviewed By: bhosmer

Differential Revision: D23183978

Pulled By: ezyang

fbshipit-source-id: 6073ba432ad182c7284a97147b05f0574a02f763

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/43564

Static dispatch was originally introduced for mobile selective build.

Since we have added selective build support for dynamic dispatch and

tested it in FB production for months, we can deprecate static dispatch

to reduce the complexity of the codebase.

Test Plan: Imported from OSS

Reviewed By: ezyang

Differential Revision: D23324452

Pulled By: ljk53

fbshipit-source-id: d2970257616a8c6337f90249076fca1ae93090c7

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/43570

Add the default op dependency graph to the source tree - use it if user runs

custom build in dynamic dispatch mode without providing the graph.

Test Plan: Imported from OSS

Reviewed By: ezyang

Differential Revision: D23326988

Pulled By: ljk53

fbshipit-source-id: 5fefe90ca08bb0ca20284e87b70fe1dba8c66084

Summary:

Add support for including pytorch via an add_subdirectory()

This requires using PROJECT_* instead of CMAKE_* which refer to

the top-most project including pytorch.

TEST=add_subdirectory() into a pytorch checkout and build.

There are still some hardcoded references to TORCH_SRC_DIR, I will

fix in a follow on commit. For now you can create a symlink to

<pytorch>/torch/ in your project.

Change-Id: Ic2a8aec3b08f64e2c23d9e79db83f14a0a896abc

Pull Request resolved: https://github.com/pytorch/pytorch/pull/41387

Reviewed By: zhangguanheng66

Differential Revision: D22539944

Pulled By: ezyang

fbshipit-source-id: b7e9631021938255f0a6ea897a7abb061759093d

Summary:

This PR contains the initial version of Vulkan (GPU) Backend integration.

The primary target environment is Android, but the desktop build is also supported.

## CMake

Introducing three cmake options:

USE_VULKAN:

The main switch. If it is off, the other options have no effect.

USE_VULKAN_WRAPPER:

ON - Vulkan is loaded at runtime as "libvulkan.so" using libdl, and every function call is wrapped in vulkan_wrapper.h.

OFF - linking with libvulkan.so directly

USE_VULKAN_SHADERC_RUNTIME:

ON - Shader compilation library will be linked, and shaders will be compiled at runtime.

OFF - Shaders will be precompiled and shader compilation library is not included.

## Codegen

Shader codegen starts in cmake/VulkanCodegen.cmake, which calls `aten/src/ATen/native/vulkan/gen_glsl.py` or `aten/src/ATen/native/vulkan/gen_spv.py` to embed either the shader source or the SPIR-V bytecode in the binary.

if `USE_VULKAN_SHADERC_RUNTIME` is ON:

The source of shaders is included as `glsl.h`,`glsl.cpp`.

if `USE_VULKAN_SHADERC_RUNTIME` is OFF:

Shaders are precompiled, and the SPIR-V bytecode is included as a uint32_t array in `spv.h`,`spv.cpp`.

All codegen output is written to the build directory.

## Build dependencies

cmake/Dependencies.cmake

If the target platform is Android - vulkan library, headers, Vulkan wrapper will be used from ANDROID_NDK.

Desktop build requires the VULKAN_SDK environment variable, and all vulkan dependencies will be used from it.

(Desktop build was tested only on Linux).

## Pytorch integration:

Adding "Vulkan" as a new Backend, DispatchKey, and DeviceType.

We are using Strided layout without supporting strides at the moment, but we plan to support them in the future.

Using OpaqueTensorImpl where OpaqueHandle is a copyable VulkanTensor;

more details are in the comments in `aten/src/ATen/native/vulkan/Vulkan.h`

Main code location: `aten/src/ATen/native/vulkan`

`aten/src/ATen/native/vulkan/VulkanAten.cpp` - the link between ATen and the Vulkan API (Vulkan.h); it converts at::Tensor to VulkanTensor.

`aten/src/ATen/native/vulkan/Vulkan.h` - the Vulkan API that contains the VulkanTensor representation and functions to work with it. We plan to expose it so that clients can write their own Vulkan ops.

`aten/src/ATen/native/vulkan/VulkanOps.cpp` - implementations of the Vulkan operations, which use the Vulkan.h API

## GLSL shaders

Located in `aten/src/ATen/native/vulkan/glsl` as *.glsl files.

All shaders use Vulkan specialization constants for workgroup sizes, with ids 1, 2, 3.

## Supported operations

Code point:

conv2d no-groups

conv2d depthwise

addmm

upsample nearest 2d

clamp

hardtanh

## Testing

`aten/src/ATen/test/vulkan_test.cpp` - contains tests for

copy from CPU to Vulkan and back

all supported operations

Desktop builds are supported, and testing can be done on a desktop that has a Vulkan-capable GPU or an installed software implementation of Vulkan, like https://github.com/google/swiftshader

## Vulkan execution

The initial implementation is trivial and waits for every operator's execution to finish.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/36491

Differential Revision: D21696709

Pulled By: IvanKobzarev

fbshipit-source-id: da3e5a770b1a1995e9465d7e81963e7de56217fa

Summary:

Replace hardcoded filelist in aten/src/ATen/CMakeLists.txt with one from `jit_source_sources`

Fix `append_filelist` to work independently from the location it was invoked

Pull Request resolved: https://github.com/pytorch/pytorch/pull/38526

Differential Revision: D21594582

Pulled By: malfet

fbshipit-source-id: c7f216a460edd474a6258ba5ddafd4c4f59b02be

Summary:

The `configure_file` command adds its input as a top-level dependency, triggering makefile regeneration if the file's timestamp has changed

Also abort CMake if `exec` of build_variables.bzl fails for some reason
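The "abort on failed `exec`" behaviour can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the script used by the build: `load_bzl_list`, `main`, and the `GOOD`/`BAD` sample contents are hypothetical, and it relies only on the fact that simple `.bzl` assignments are also valid Python.

```python
import sys
from typing import Any, Dict, List


def load_bzl_list(source: str, name: str) -> List[str]:
    """Execute .bzl-style source text and return the list bound to `name`."""
    namespace: Dict[str, Any] = {}
    exec(source, namespace)  # raises (e.g. SyntaxError) on an invalid statement
    return list(namespace[name])


def main(source: str, name: str) -> int:
    try:
        files = load_bzl_list(source, name)
    except Exception as err:
        print(f"failed to evaluate build variables: {err}", file=sys.stderr)
        return 1  # a nonzero exit code lets the calling build step abort
    print(";".join(files))  # CMake lists are semicolon-separated
    return 0


# Hypothetical file contents standing in for tools/build_variables.bzl.
GOOD = 'my_sources = ["a.cpp", "b.cpp"]\n'
BAD = 'my_sources = ["a.cpp", \n'

print(main(GOOD, "my_sources"))
print(main(BAD, "my_sources"))
```

Returning a distinct exit code matters because a caller that ignores it would silently continue with an empty file list.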

Pull Request resolved: https://github.com/pytorch/pytorch/pull/36809

Test Plan: Add invalid statement to build_variables.bzl and check that build process fails

Differential Revision: D21100721

Pulled By: malfet

fbshipit-source-id: 79a54aa367fb8dedb269c78b9538b4da203d856b

Summary:

Mimic `.bzl` parsing logic from https://github.com/pytorch/FBGEMM/pull/344

Generate `libtorch_cmake_sources` by running the following script:

```

def read_file(path):
    with open(path) as f:
        return f.read()

def get_cmake_torch_srcs():
    caffe2_cmake = read_file("caffe2/CMakeLists.txt")
    start = caffe2_cmake.find("set(TORCH_SRCS")
    end = caffe2_cmake.find(")", start)
    return caffe2_cmake[start:end+1]

def get_cmake_torch_srcs_list():
    caffe2_torch_srcs = get_cmake_torch_srcs()
    unfiltered_list = [x.strip() for x in caffe2_torch_srcs.split("\n") if len(x.strip())>0]
    return [x.replace("${TORCH_SRC_DIR}/","torch/") for x in unfiltered_list if 'TORCH_SRC_DIR' in x]

import imp
build_variables = imp.load_source('build_variables', 'tools/build_variables.bzl')

libtorch_core_sources = set(build_variables.libtorch_core_sources)
caffe2_torch_srcs = set(get_cmake_torch_srcs_list())
if not libtorch_core_sources.issubset(caffe2_torch_srcs):
    print("libtorch_core_sources must be a subset of caffe2_torch_srcs")
print(sorted(caffe2_torch_srcs.difference(libtorch_core_sources)))

```

Move common files between `libtorch_cmake_sources` and `libtorch_extra_sources` to `libtorch_jit_core_sources`

Pull Request resolved: https://github.com/pytorch/pytorch/pull/36737

Test Plan: CI

Differential Revision: D21078753

Pulled By: malfet

fbshipit-source-id: f46ca48d48aa122188f028136c14687ff52629ed

Summary:

PR #32521 has several issues with mobile builds:

1. It didn't work with static dispatch (which OSS mobile build currently uses);

2. PR #34275 fixed 1) but it doesn't fix custom build for #32521;

3. manuallyBoxedKernel has a bug with ops which only have catchAllKernel: 2d7ede5f71

Both 1) and 2) have similar root cause - some JIT side code expects certain schemas to be registered in JIT registry.

For example, consider this code snippet: https://github.com/pytorch/pytorch/blob/master/torch/csrc/jit/frontend/builtin_functions.cpp#L10

```

auto scalar_operators_source = CodeTemplate(
    R"SCRIPT(
def mul(a : ${Scalar}, b : Tensor) -> Tensor:
  return b * a
...

```

It expects "aten::mul.Scalar(Tensor self, Scalar other) -> Tensor" to be registered in JIT; otherwise it will fail a type check: https://github.com/pytorch/pytorch/pull/34013#issuecomment-592982889. It doesn't necessarily need to call the implementation, though.

Before #32521, all JIT registrations happened in register_aten_ops_*.cpp generated by gen_jit_dispatch.py.

After #32521, for ops with full c10 templated boxing/unboxing support, JIT registrations happen in TypeDefault.cpp/CPUType.cpp/... generated by aten/gen.py, with the c10 register API via RegistrationListener in register_c10_ops.cpp. However, the c10 registrations in TypeDefault.cpp/CPUType.cpp/... are gated by `#ifndef USE_STATIC_DISPATCH`, thus these schemas won't be registered in the JIT registry when USE_STATIC_DISPATCH is enabled.

PR #34275 fixes the problem by moving c10 registration out of `#ifndef USE_STATIC_DISPATCH` in TypeDefault.cpp/CPUType.cpp/..., so that all schemas can still be registered in JIT. But it doesn't fix custom build, where we only keep c10 registrations for ops used by a specific model directly (for static dispatch custom build) and indirectly (for dynamic dispatch custom build). Currently there is no way for the custom build script to know that things like "aten::mul.Scalar(Tensor self, Scalar other) -> Tensor" need to be kept, and in fact the implementation is not needed; only the schema needs to be registered in JIT.

Before #32521, the problem was solved by keeping a DUMMY placeholder for unused ops in register_aten_ops_*.cpp: https://github.com/pytorch/pytorch/blob/master/tools/jit/gen_jit_dispatch.py#L326

After #32521, we can do a similar thing by forcing aten/gen.py to register ALL schema strings for selective build, which is what this PR does.
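A rough sketch of the selection rule described above: ops on the whitelist keep schema and kernel, and with forced schema registration every other op keeps only its schema string. The function `plan_registrations` and the two labels are hypothetical, chosen for illustration; the real logic lives in aten/gen.py and emits C++ registration code.

```python
from typing import Iterable, List, Set, Tuple


def plan_registrations(
    all_schemas: Iterable[str],
    whitelist: Set[str],
    force_schema_registration: bool,
) -> List[Tuple[str, str]]:
    """Decide per schema: register schema+kernel, schema only, or nothing."""
    plan = []
    for schema in all_schemas:
        op_name = schema.split("(", 1)[0]  # e.g. "aten::mul.Scalar"
        if op_name in whitelist:
            plan.append((schema, "schema+kernel"))
        elif force_schema_registration:
            # Keep the schema string so JIT type checks pass; drop the kernel.
            plan.append((schema, "schema-only"))
    return plan


schemas = [
    "aten::mul.Scalar(Tensor self, Scalar other) -> Tensor",
    "aten::add.Tensor(Tensor self, Tensor other, *, Scalar alpha=1) -> Tensor",
]
for schema, kind in plan_registrations(schemas, {"aten::add.Tensor"}, True):
    print(kind, schema)
```

The size cost of this approach is only the extra schema strings, since no kernel code is kept for the schema-only entries.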

Measured impact on custom build size (for MobileNetV2):

```

SELECTED_OP_LIST=MobileNetV2.yaml scripts/build_pytorch_android.sh armeabi-v7a

```

Before: 3,404,978

After: 3,432,569

~28K compressed size increase due to including more schema strings.

The table below summarizes the relationship between codegen flags and 5 build configurations that are related to mobile:

```

+--------------------------------------+-----------------------------------------------------------------------------+--------------------------------------------+
| | Open Source | FB BUCK |
+--------------------------------------+---------------------+---------------------------+---------------------------+---------------+----------------------------+
| | Default Build | Custom Build w/ Stat-Disp | Custom Build w/ Dyna-Disp | Full-JIT | Lite-JIT |
+--------------------------------------+---------------------+---------------------------+---------------------------+---------------+----------------------------+
| Dispatch Type | Static | Static | Dynamic | Dynamic (WIP) | Dynamic (WIP) |
+--------------------------------------+---------------------+---------------------------+---------------------------+---------------+----------------------------+
| ATen/gen.py | | | | | |
+--------------------------------------+---------------------+---------------------------+---------------------------+---------------+----------------------------+
| --op_registration_whitelist | unset | used root ops | closure(used root ops) | unset | closure(possibly used ops) |
| --backend_whitelist | CPU Q-CPU | CPU Q-CPU | CPU Q-CPU | CPU Q-CPU | CPU Q-CPU |
| --per_op_registration | false | false | false | false | true |
| --force_schema_registration | false | true | true | false | false |
+--------------------------------------+---------------------+---------------------------+---------------------------+---------------+----------------------------+
| tools/setup_helpers/generate_code.py | | | | | |
+--------------------------------------+---------------------+---------------------------+---------------------------+---------------+----------------------------+
| --disable-autograd | true | true | true | false | WIP |
| --selected-op-list-path | file(used root ops) | file(used root ops) | file(used root ops) | unset | WIP |
| --disable_gen_tracing | false | false | false | false | WIP |
+--------------------------------------+---------------------+---------------------------+---------------------------+---------------+----------------------------+

```

Differential Revision: D20397421

Test Plan: Imported from OSS

Pulled By: ljk53

fbshipit-source-id: 906750949ecacf68ac1e810fd22ee99f2e968d0b

Summary:

PR #32521 broke static dispatch because some ops are no longer

registered in register_aten_ops_*.cpp - it expects the c10 registers in

TypeDefault.cpp / CPUType.cpp / etc to register these ops. However, all

c10 registers are inside `#ifndef USE_STATIC_DISPATCH` section.

To measure the OSS mobile build size impact of this PR:

```

# default build: scripts/build_pytorch_android.sh armeabi-v7a
# mobilenetv2 custom build: SELECTED_OP_LIST=MobileNetV2.yaml scripts/build_pytorch_android.sh armeabi-v7a

```

- Before this PR, Android AAR size for arm-v7:

  * default build: 5.5M;

  * mobilenetv2 custom build: 3.2M;

- After this PR:

  * default build: 6.4M;

  * mobilenetv2 custom build: 3.3M;

It regressed default build size by ~1M because more root ops are

registered by c10 registers, e.g. backward ops which are filtered out by

gen_jit_dispatch.py for inference-only mobile build.

mobilenetv2 custom build size regressed by ~100k presumably because

the op whitelist is not yet applied to things like BackendSelectRegister.

Differential Revision: D20266240

Test Plan: Imported from OSS

Pulled By: ljk53

fbshipit-source-id: 97a9a06779f8c62fe3ff5cce089aa7fa9dee3c4a

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/34055

Enable custom mobile build with dynamic dispatch for OSS build.

It calls a python util script to calculate transitive dependencies from

the op dependency graph and the list of used root ops, then passes the

result as the op registration whitelist to aten codegen, so that only

these used ops are registered and kept at link time.

For custom build with dynamic dispatch to work correctly, it's critical

to have an accurate list of used ops. The current assumption is that only

those ops referenced by the TorchScript model are used. It works well if

client code doesn't call the libtorch API (e.g. tensor methods) directly;

otherwise the extra used ops need to be added to the whitelist manually,

as shown by the HACK in prepare_model.py.

Also, if JIT starts calling extra ops independent of specific model,

then the extra ops need to be added to the whitelist as well.
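The transitive dependency computation described above amounts to a graph reachability search from the root ops. A minimal sketch follows, assuming the dependency graph is available as a plain dict; the function name `transitive_closure` and the example edges are hypothetical and do not reproduce the actual util script or the real op graph.

```python
from collections import deque
from typing import Dict, Iterable, List, Set


def transitive_closure(dep_graph: Dict[str, List[str]], root_ops: Iterable[str]) -> Set[str]:
    """Return the root ops plus every op reachable from them in dep_graph."""
    seen = set(root_ops)
    queue = deque(seen)
    while queue:
        op = queue.popleft()
        for dep in dep_graph.get(op, []):
            if dep not in seen:
                seen.add(dep)
                queue.append(dep)
    return seen


# Hypothetical edges, for illustration only.
graph = {
    "aten::conv2d": ["aten::convolution"],
    "aten::convolution": ["aten::_convolution"],
    "aten::relu": [],
    "aten::unused": ["aten::also_unused"],
}
whitelist = sorted(transitive_closure(graph, ["aten::conv2d", "aten::relu"]))
print(whitelist)
```

Ops called directly from client code would have to be added to `root_ops` by hand, which corresponds to the manual whitelist additions mentioned above.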

Verified the correctness of the whole process with MobileNetV2:

```

TEST_CUSTOM_BUILD_DYNAMIC=1 test/mobile/custom_build/build.sh

```

Test Plan: Imported from OSS

Reviewed By: bhosmer

Differential Revision: D20193327

Pulled By: ljk53

fbshipit-source-id: 9d369b8864856b098342aea79e0ac8eec04149aa

Summary:

This PR moves glu to ATen (CPU).

Test script:

```

import torch
import torch.nn.functional as F
import time

torch.manual_seed(0)

def _time():
    if torch.cuda.is_available():
        torch.cuda.synchronize()
    return time.time()

device = "cpu"

# warm up
for n in [10, 100, 1000, 10000]:
    input = torch.randn(128, n, requires_grad=True, device=device)
    grad_output = torch.ones(128, n // 2, device=device)
    for i in range(1000):
        output = F.glu(input)
        output.backward(grad_output)

for n in [10, 100, 1000, 10000]:
    fwd_t = 0
    bwd_t = 0
    input = torch.randn(128, n, requires_grad=True, device=device)
    grad_output = torch.ones(128, n // 2, device=device)
    for i in range(10000):
        t1 = _time()
        output = F.glu(input)
        t2 = _time()
        output.backward(grad_output)
        t3 = _time()
        fwd_t = fwd_t + (t2 - t1)
        bwd_t = bwd_t + (t3 - t2)
    fwd_avg = fwd_t / 10000 * 1000
    bwd_avg = bwd_t / 10000 * 1000
    print("input size(128, %d) forward time is %.2f (ms); backwad avg time is %.2f (ms)."
          % (n, fwd_avg, bwd_avg))

```

Test device: **skx-8180.**

Before:

```

input size(128, 10) forward time is 0.04 (ms); backwad avg time is 0.08 (ms).

input size(128, 100) forward time is 0.06 (ms); backwad avg time is 0.14 (ms).

input size(128, 1000) forward time is 0.11 (ms); backwad avg time is 0.31 (ms).

input size(128, 10000) forward time is 1.52 (ms); backwad avg time is 2.04 (ms).

```

After:

```

input size(128, 10) forward time is 0.02 (ms); backwad avg time is 0.05 (ms).

input size(128, 100) forward time is 0.04 (ms); backwad avg time is 0.09 (ms).

input size(128, 1000) forward time is 0.07 (ms); backwad avg time is 0.17 (ms).

input size(128, 10000) forward time is 0.13 (ms); backwad avg time is 1.03 (ms).

```

Fix https://github.com/pytorch/pytorch/issues/24707, https://github.com/pytorch/pytorch/issues/24708.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/33179

Differential Revision: D19839835

Pulled By: VitalyFedyunin

fbshipit-source-id: e4d3438556a1068da2c4a7e573d6bbf8d2a6e2b9

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/27086

This is a major source of merge conflicts, and AFAICT isn't necessary anymore (it may have been necessary for some mobile build stuff in the past).

This is a commandeer of #25031

Test Plan: Imported from OSS

Reviewed By: ljk53

Differential Revision: D17687345

Pulled By: ezyang

fbshipit-source-id: bf6131af835ed1f9e3c10699c81d4454a240445f

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/26131

Changes in this PR:

- For each operator with use_c10_dispatcher: True, additionally generate a c10 registration line in TypeDefault.cpp, CPUType.cpp, and other backend files.

- This doesn't change globalATenDispatch yet; the c10 registration is purely additive and the operator calling path doesn't change. A diff further up the stack will change these things.

- Enable the use_c10_dispatcher: True flag for about 70% of operators.

- This also changes the c10->jit operator export, because ATen ops are already exported to JIT directly and we don't want to export the registered c10 ops because they would clash.

- For this, we need a way to recognize whether a certain operator has already been moved from ATen to c10. This is done by generating an OpsAlreadyMovedToC10.cpp file with the list. A diff further up in the stack will also need this file to make sure we don't break the backend extension API for these ops.

Reasons for some ops to be excluded (i.e. not have the `use_c10_dispatcher` flag set to true):

- `Tensor?(a!)` (i.e. optional tensor with annotations) is not supported in the C++ function schema parser yet

- `-> void` in native_functions.yaml vs `-> ()` expected by the function schema parser

- out functions have a different argument order in C++ than in the JIT schema

- `Tensor?` (i.e. optional tensor) doesn't work nicely because an undefined tensor is sometimes represented as an undefined tensor and sometimes as None.

- fixed-size arrays like `int[3]` are not supported in c10 yet

These will be fixed in separate diffs and then the exclusion tag will be removed.
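The exclusion reasons listed above can be approximated with a few pattern checks over the schema string. The sketch below is only a heuristic illustration: `exclusion_reasons`, the regular expressions, and the made-up `foo`/`bar` schemas are assumptions, and the real codegen decides this from the parsed `native_functions.yaml` entry rather than from regexes.

```python
import re
from typing import List

# (pattern, reason) pairs; each pattern is a rough textual stand-in for one
# of the exclusion reasons.
_CHECKS = [
    (r"Tensor\?\(", "optional tensor with annotation"),
    (r"Tensor\?(?!\()", "optional tensor"),
    (r"->\s*void\b", "void return"),
    (r"Tensor\(\w+!\)\s+out\b", "out function"),
    (r"\w+\[\d+\]", "fixed-size array"),
]


def exclusion_reasons(schema: str) -> List[str]:
    """Return the reasons (possibly none) a schema would be excluded."""
    return [reason for pattern, reason in _CHECKS if re.search(pattern, schema)]


print(exclusion_reasons("conj(Tensor self) -> Tensor"))
print(exclusion_reasons("conj.out(Tensor self, *, Tensor(a!) out) -> Tensor(a!)"))
# Made-up schema that trips several checks at once.
print(exclusion_reasons("foo(Tensor? weight, int[3] size) -> void"))
```

An op is eligible for the flag only when the returned list is empty; as each limitation is fixed, the matching check can be dropped.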

ghstack-source-id: 90060748

Test Plan: a diff stacked on top uses these registrations to call these ops from ATen

Differential Revision: D16603131

fbshipit-source-id: 315eb83d0b567eb0cd49973060b44ee1d6d64bfb