beartype has served us well in identifying type errors and ensuring we call internal functions with the correct arguments (thanks!). However, the value of having beartype is diminished because of the following:

1. When beartype improved its support for `Dict[]` type checking, it discovered typing mistakes in some functions that had previously gone uncaught. As a result, the exporter failed with newer versions of beartype where it used to succeed. Since we cannot fix PyTorch and release a new version just because of this, it confuses users who have beartype in their environment and prevents them from using torch.onnx.

2. beartype adds an extra call frame to the traceback, which makes the already deep dynamo stack even larger and hurts readability when users diagnose errors from the traceback.

3. Since the type annotations need to be evaluated at runtime, we cannot use newer syntax like `X | Y`, because we need to maintain compatibility with Python 3.8. We don't want to wait for PyTorch to adopt Python 3.10 as the minimum supported version before using the new typing syntax.
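To illustrate point 3, here is a minimal sketch. A quoted annotation (what `from __future__ import annotations` does implicitly for every annotation) lets a function using `int | None` be *defined* on Python 3.8, but anything that evaluates the annotation at runtime fails on Python < 3.10. `typing.get_type_hints` stands in for that evaluation step here; beartype uses its own resolver, so treat this as an approximation of the problem, not of beartype's internals. The function names are hypothetical.

```python
import sys
import typing


# The annotations are quoted strings, so defining the function never evaluates them;
# this mirrors what `from __future__ import annotations` does for every annotation.
def scale(x: "int | None", factor: "float" = 2.0) -> "float | None":
    return None if x is None else x * factor


def resolve_hints(func):
    """Evaluate string annotations, the step a runtime type checker cannot skip."""
    try:
        return typing.get_type_hints(func)
    except TypeError as exc:
        # On Python < 3.10, evaluating `int | None` raises
        # TypeError: unsupported operand type(s) for |: 'type' and 'NoneType'.
        return exc


hints = resolve_hints(scale)
print(sys.version_info[:2], hints)
```

Calling `scale` itself works on every version; only the runtime evaluation of its annotations depends on the interpreter.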

Pull Request resolved: https://github.com/pytorch/pytorch/pull/130484

Approved by: https://github.com/titaiwangms

Fixes #109889

This PR adds `torch.export.export` as another `FXGraphExtractor` implementation. `torch.onnx.dynamo_export` automatically uses this new FX tracer when a `torch.export.ExportedProgram` is specified as `model`.

The implementation is backward compatible, so non-`ExportedProgram` models are handled exactly as before.
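The selection logic can be sketched as a simple strategy dispatch on the type of `model`. This is a simplified illustration, not the PyTorch code: `ExportedProgram` below is a hypothetical stand-in for `torch.export.ExportedProgram` (so the sketch runs without torch), and the two extractor subclasses and the one-argument `generate_fx` signature are assumptions made for brevity.

```python
import abc


class ExportedProgram:
    """Hypothetical stand-in for torch.export.ExportedProgram."""

    def __init__(self, graph_module):
        self.graph_module = graph_module


class FXGraphExtractor(abc.ABC):
    """Strategy interface: turn a user model into an FX graph (simplified signature)."""

    @abc.abstractmethod
    def generate_fx(self, model):
        ...


class DynamoGraphExtractorSketch(FXGraphExtractor):
    def generate_fx(self, model):
        # Placeholder for the pre-existing dynamo-based tracing path.
        return ("dynamo", model)


class TorchExportGraphExtractorSketch(FXGraphExtractor):
    def generate_fx(self, model):
        # The program was already exported, so its graph can be reused directly.
        return ("torch.export", model.graph_module)


def select_extractor(model) -> FXGraphExtractor:
    # Only ExportedProgram inputs take the new path; everything else is unchanged,
    # which is what keeps the change backward compatible.
    if isinstance(model, ExportedProgram):
        return TorchExportGraphExtractorSketch()
    return DynamoGraphExtractorSketch()
```

Dispatching on the input type keeps the existing path untouched for ordinary `nn.Module` inputs.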

Pull Request resolved: https://github.com/pytorch/pytorch/pull/111497

Approved by: https://github.com/BowenBao

Merges chained `startswith`/`endswith` calls on the same string into a single call that takes a tuple of candidates. The merged calls are more readable, and they are also more efficient, since each string is checked in a single method call instead of several.
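A minimal before/after sketch of the refactor. The prefixes, suffixes, and function names are hypothetical and chosen only for illustration; the tuple form of `str.startswith` and `str.endswith` is standard Python.

```python
# Hypothetical prefixes/suffixes, chosen only to illustrate the refactor.
PREFIXES = ("onnx::", "prim::")
SUFFIXES = (".onnx", ".pb")


def is_special_chained(name: str) -> bool:
    # Before: one method call per candidate prefix.
    return name.startswith("onnx::") or name.startswith("prim::")


def is_special_merged(name: str) -> bool:
    # After: str.startswith accepts a tuple and returns True if any entry matches.
    return name.startswith(PREFIXES)


def is_model_file(path: str) -> bool:
    # str.endswith accepts a tuple in the same way.
    return path.endswith(SUFFIXES)


# The merged form is a drop-in replacement for the chained form.
for name in ["onnx::Add", "prim::Constant", "aten::add"]:
    assert is_special_chained(name) == is_special_merged(name)
print(is_special_merged("aten::add"), is_model_file("model.onnx"))
```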

Pull Request resolved: https://github.com/pytorch/pytorch/pull/96754

Approved by: https://github.com/ezyang

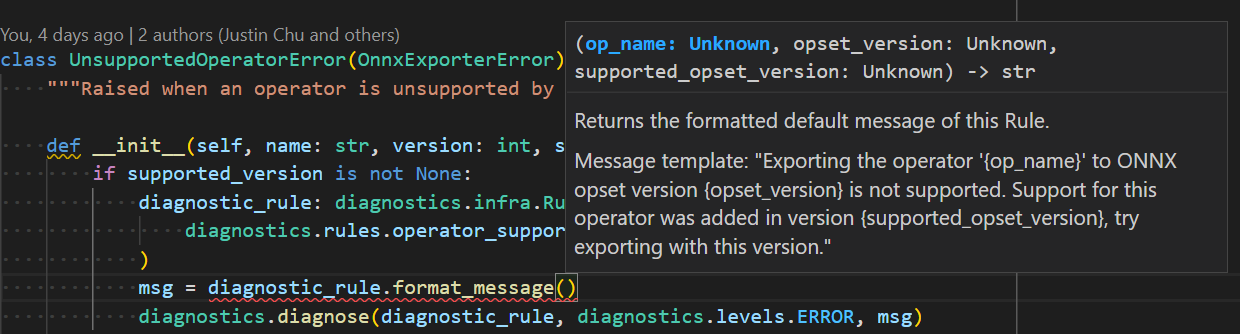

* Reflect the required arguments in the method signature of each diagnostic rule. The previous design accepted an arbitrarily sized tuple, which was hard to use and error-prone.

* Removed `DiagnosticTool` to keep things compact.

* Removed the supported rule set specified per tool (context), along with the check that a reported diagnostic's rule falls inside that set, to keep things compact.

* Added an initial overview markdown file.

* Changed the `full_description` definition: the `text` field must now be non-empty, and its markdown should be stored in the `markdown` field.

* Changed `message_default_template` to allow only named fields (no numeric fields). A `field_name` makes clear which argument is expected.

* Added a `diagnose` API to `torch.onnx._internal.diagnostics`.
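The named-field rule for `message_default_template` can be sketched with the standard library's `string.Formatter().parse`, which yields each replacement field's name. The `named_fields` helper, the `Rule` dataclass, and the rule id below are hypothetical and are not the `torch.onnx._internal.diagnostics` classes. They only show how a template can be validated so that every argument is named.

```python
import string
from dataclasses import dataclass


def named_fields(template: str) -> list:
    """Return the replacement-field names in `template`.

    Raises ValueError for auto-numbered ("{}") or numeric ("{0}") fields.
    """
    names = []
    for _literal, field_name, _spec, _conversion in string.Formatter().parse(template):
        if field_name is None:
            continue  # trailing literal text with no replacement field
        if not field_name or field_name[0].isdigit():
            raise ValueError(f"only named fields are allowed: {template!r}")
        names.append(field_name)
    return names


@dataclass(frozen=True)
class Rule:
    """Hypothetical diagnostic rule; not the torch.onnx class."""

    id: str
    message_default_template: str

    def __post_init__(self):
        # Validate at construction time so a bad template fails early.
        named_fields(self.message_default_template)

    def format_message(self, **kwargs) -> str:
        # Named fields make it explicit which keyword arguments are expected.
        return self.message_default_template.format(**kwargs)


rule = Rule(
    id="HYPOTHETICAL0001",
    message_default_template="Operator {op_name} is not supported in opset {opset_version}.",
)
print(rule.format_message(op_name="aten::foo", opset_version=17))
```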

Pull Request resolved: https://github.com/pytorch/pytorch/pull/87830

Approved by: https://github.com/abock

This PR adds an internal wrapper around the [beartype](https://github.com/beartype/beartype) library to perform runtime type checking in `torch.onnx`. It uses beartype when it is installed in the environment and reduces to a no-op otherwise.

Setting the env var `TORCH_ONNX_EXPERIMENTAL_RUNTIME_TYPE_CHECK=ERRORS` turns on the feature, so type violations raise errors. Setting `TORCH_ONNX_EXPERIMENTAL_RUNTIME_TYPE_CHECK=DISABLED` disables all checks. When the variable is not set and `beartype` is installed, a warning message is emitted instead.

Now, when users call an API with invalid arguments, for example:

```python
torch.onnx.export(conv, y, path, export_params=True, training=False)
# `training` should be a `TrainingMode`, not a bool
```

they get

```
Traceback (most recent call last):
  File "bisect_m1_error.py", line 63, in <module>
    main()
  File "bisect_m1_error.py", line 59, in main
    reveal_error()
  File "bisect_m1_error.py", line 32, in reveal_error
    torch.onnx.export(conv, y, cpu_model_path, export_params=True, training=False)
  File "<@beartype(torch.onnx.utils.export) at 0x1281f5a60>", line 136, in export
  File "pytorch/venv/lib/python3.9/site-packages/beartype/_decor/_error/errormain.py", line 301, in raise_pep_call_exception
    raise exception_cls( # type: ignore[misc]
beartype.roar.BeartypeCallHintParamViolation: @beartyped export() parameter training=False violates type hint <class 'torch._C._onnx.TrainingMode'>, as False not instance of <protocol "torch._C._onnx.TrainingMode">.
```

When `TORCH_ONNX_EXPERIMENTAL_RUNTIME_TYPE_CHECK` is not set and `beartype` is installed, the violation is emitted as a warning instead, and execution continues:

```
>>> torch.onnx.export("foo", "bar", "f")
<stdin>:1: CallHintViolationWarning: Traceback (most recent call last):
  File "/home/justinchu/dev/pytorch/torch/onnx/_internal/_beartype.py", line 54, in _coerce_beartype_exceptions_to_warnings
    return beartyped(*args, **kwargs)
  File "<@beartype(torch.onnx.utils.export) at 0x7f1d4ab35280>", line 39, in export
  File "/home/justinchu/anaconda3/envs/pytorch/lib/python3.9/site-packages/beartype/_decor/_error/errormain.py", line 301, in raise_pep_call_exception
    raise exception_cls( # type: ignore[misc]
beartype.roar.BeartypeCallHintParamViolation: @beartyped export() parameter model='foo' violates type hint typing.Union[torch.nn.modules.module.Module, torch.jit._script.ScriptModule, torch.jit.ScriptFunction], as 'foo' not <protocol "torch.jit.ScriptFunction">, <protocol "torch.nn.modules.module.Module">, or <protocol "torch.jit._script.ScriptModule">.

Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "/home/justinchu/dev/pytorch/torch/onnx/_internal/_beartype.py", line 63, in _coerce_beartype_exceptions_to_warnings
    return func(*args, **kwargs)
  File "/home/justinchu/dev/pytorch/torch/onnx/utils.py", line 482, in export
    _export(
  File "/home/justinchu/dev/pytorch/torch/onnx/utils.py", line 1422, in _export
    with exporter_context(model, training, verbose):
  File "/home/justinchu/anaconda3/envs/pytorch/lib/python3.9/contextlib.py", line 119, in __enter__
    return next(self.gen)
  File "/home/justinchu/dev/pytorch/torch/onnx/utils.py", line 177, in exporter_context
    with select_model_mode_for_export(
  File "/home/justinchu/anaconda3/envs/pytorch/lib/python3.9/contextlib.py", line 119, in __enter__
    return next(self.gen)
  File "/home/justinchu/dev/pytorch/torch/onnx/utils.py", line 95, in select_model_mode_for_export
    originally_training = model.training
AttributeError: 'str' object has no attribute 'training'
```

The type mismatch is now reported at the call where it happens, instead of surfacing later as the less informative `AttributeError: 'str' object has no attribute 'training'`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/83673

Approved by: https://github.com/BowenBao

### Description

- Clearer error messages with more context

- Added `SymbolicValueError`, which adds context about the offending value to the error message

- Added type annotations

Example error message:

```
torch.onnx.errors.SymbolicValueError: ONNX symbolic does not understand the Constant node '%1 : Long(2, strides=[1], device=cpu) = onnx::Constant[value= 3 3 [ CPULongType{2} ]]()
' specified with descriptor 'is'. [Caused by the value '1 defined in (%1 : Long(2, strides=[1], device=cpu) = onnx::Constant[value= 3 3 [ CPULongType{2} ]]()
)' (type 'Tensor') in the TorchScript graph. The containing node has kind 'onnx::Constant'.]
Inputs:
Empty
Outputs:
#0: 1 defined in (%1 : Long(2, strides=[1], device=cpu) = onnx::Constant[value= 3 3 [ CPULongType{2} ]]()
) (type 'Tensor')
```
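A minimal sketch of the idea behind `SymbolicValueError`: an exception that appends context about the offending graph value and its node's inputs and outputs to the base message. `ValueContextError` and its parameters are hypothetical. Plain strings stand in for TorchScript `Value`/`Node` objects so the sketch runs without torch, and the layout only approximates the example message above.

```python
from typing import Sequence


class ValueContextError(RuntimeError):
    """Hypothetical error that appends context about the offending graph value."""

    def __init__(
        self,
        msg: str,
        value: str,
        value_type: str,
        node_kind: str,
        inputs: Sequence[str] = (),
        outputs: Sequence[str] = (),
    ):
        def describe(items: Sequence[str]) -> str:
            # List each input/output with its index, or "Empty" if there are none.
            if not items:
                return "    Empty\n"
            return "".join(f"    #{i}: {item}\n" for i, item in enumerate(items))

        message = (
            f"{msg} [Caused by the value '{value}' (type '{value_type}') in the "
            f"TorchScript graph. The containing node has kind '{node_kind}'.]\n"
            "Inputs:\n" + describe(inputs) + "Outputs:\n" + describe(outputs)
        )
        super().__init__(message)
        self.value = value


try:
    raise ValueContextError(
        "ONNX symbolic does not understand the Constant node",
        value="1",
        value_type="Tensor",
        node_kind="onnx::Constant",
        outputs=["1 (type 'Tensor')"],
    )
except ValueContextError as err:
    text = str(err)  # keep the message; `err` is unbound after the except block
print(text)
```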

### Issue

- #77316 (Runtime error during symbolic conversion)

### Testing

Unit tested

Pull Request resolved: https://github.com/pytorch/pytorch/pull/83007

Approved by: https://github.com/BowenBao