Summary:

Will not land before the release, but it would be good to have this function documented in master for its use in distributed debuggability.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58322

Reviewed By: SciPioneer

Differential Revision: D28595405

Pulled By: rohan-varma

fbshipit-source-id: fb00fa22fbe97a38c396eae98a904d1c4fb636fa

Summary:

Temporary fix for https://github.com/pytorch/pytorch/issues/42218.

Numerically, grid_sampler should be fine in fp32 or fp16. So grid_sampler really belongs on the promote list. But performancewise, native grid_sampler backward kernels use gpuAtomicAdd, which is notoriously slow in fp16. So the simplest functionality fix is to put grid_sampler on the fp32 list.

In https://github.com/pytorch/pytorch/pull/58618 I implement the right long-term fix (refactoring kernels to use fp16-friendly fastAtomicAdd and moving grid_sampler to the promote list). But that's more invasive, and for 1.9 ngimel says this simple temporary fix is preferred.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58679

Reviewed By: soulitzer

Differential Revision: D28576559

Pulled By: ngimel

fbshipit-source-id: d653003f37eaedcbb3eaac8d7fec26c343acbc07

Summary:

* Fix lots of links.

* Minor improvements for consistency, clarity or grammar.

* Update jit_python_reference to note the limitations on __exit__.

(Related to https://github.com/pytorch/pytorch/issues/41420).

* Fix a comment in exit_transforms.cpp: removed the word "not" which

made the comment say the opposite of the truth.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/57991

Reviewed By: malfet

Differential Revision: D28522247

Pulled By: SplitInfinity

fbshipit-source-id: fc63a59d19ea6c89f957c9f7d451be17d1c5fc91

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58056

This PR addresses an action item in #3428: disabling search engine

indexing of master documentation. This is desirable because we want to

direct users to our stable documentation (instead of master

documentation) because they are more likely to have a stable version of

PyTorch installed.

Test Plan:

1. run `make html`, check that the noindex tags are there

2. run `make html-stable`, check that the noindex tags aren't there

Reviewed By: bdhirsh

Differential Revision: D28490504

Pulled By: zou3519

fbshipit-source-id: 695c944c4962b2bd484dd7a5e298914a37abe787

Summary:

Added a simple section indicating that distributed profiling is expected to work similarly to other torch operators, and is supported for all communication backends out of the box.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58286

Reviewed By: bdhirsh

Differential Revision: D28436489

Pulled By: rohan-varma

fbshipit-source-id: ce1905a987c0ede8011e8086a2c30edc777b4a38

Summary:

Adds a new file under `torch/nn/utils/parametrizations.py` which should contain all the parametrization implementations.

For spectral_norm we add the `SpectralNorm` module which can be registered using `torch.nn.utils.parametrize.register_parametrization` or using a wrapper: `spectral_norm`, the same API the old implementation provided.

Most of the logic is borrowed from the old implementation:

- Just like the old implementation, there are cases when retrieving the weight should perform another power iteration (thus updating the weight) and cases where it shouldn't. For example, in eval mode (`self.training=False`), we do not perform power iteration.

There are also some differences/difficulties with the new implementation:

- Using the new parametrization functionality as-is, there doesn't seem to be a good way to tell whether a 'forward' call happened because parametrizations are being unregistered (with leave_parametrizations=True) or because the injected property's getter was invoked. The issue is that we want to perform power iteration in the latter case but not the former, but we don't have this control as-is. So, in this PR I modified the parametrization functionality to change the module to eval mode before triggering their forward call.

- Updates the vectors based on weight on initialization to fix https://github.com/pytorch/pytorch/issues/51800 (this avoids silently updating weights in eval mode). This also means that we perform twice as many power iterations by the first forward.

- right_inverse is just the identity for now, but maybe it should assert that the passed value already satisfies the constraints

- So far, all the old spectral_norm tests have been cloned, but maybe we don't need so much testing now that the core functionality is already well tested
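For readers who haven't looked at the old implementation: a power iteration step is the thing we either do or skip when the weight is retrieved. Below is a minimal plain-Python sketch of the idea on a tiny matrix. It is not the `SpectralNorm` module's code; the helper names (`matvec`, `normalize`, `power_iteration`) and the fixed step count are assumptions made up for illustration.

```python
import math


def matvec(m, v):
    # Multiply a matrix (list of rows) by a vector.
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def transpose(m):
    return [list(col) for col in zip(*m)]


def normalize(v, eps=1e-12):
    # Scale v to unit length; eps guards against division by zero.
    n = math.sqrt(sum(x * x for x in v))
    return [x / max(n, eps) for x in v]


def power_iteration(weight, n_steps=50):
    """Estimate the largest singular value of `weight` (hypothetical helper)."""
    wt = transpose(weight)
    v = normalize([1.0] * len(weight[0]))
    u = normalize(matvec(weight, v))
    for _ in range(n_steps):
        # Alternate between the right (v) and left (u) singular vector estimates.
        v = normalize(matvec(wt, u))
        u = normalize(matvec(weight, v))
    # sigma = u^T W v
    return sum(ui * wi for ui, wi in zip(u, matvec(weight, v)))


weight = [[3.0, 0.0], [0.0, 1.0]]
sigma = power_iteration(weight)
# Dividing by sigma gives a weight whose largest singular value is about 1.
normalized = [[w / sigma for w in row] for row in weight]
```

Every call to `power_iteration` refines `u` and `v`, which is why it matters whether retrieving the weight triggers one or not.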

Pull Request resolved: https://github.com/pytorch/pytorch/pull/57784

Reviewed By: ejguan

Differential Revision: D28413201

Pulled By: soulitzer

fbshipit-source-id: e8f1140f7924ca43ae4244c98b152c3c554668f2

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58039

The new function has the following signature

`inv_ex(Tensor input, *, bool check_errors=False) -> (Tensor inverse, Tensor info)`.

When `check_errors=True`, an error is thrown if the matrix is not invertible; when `check_errors=False`, responsibility for checking the result is on the user.

`linalg_inv` is implemented using calls to `linalg_inv_ex` now.
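To illustrate the relationship between the throwing and the non-throwing variant, here is a toy plain-Python sketch for 2x2 matrices. The names `inv_ex_2x2` and `inv_2x2` are hypothetical, and the `info` convention (0 for success, 1 for singular) is a simplification, not the actual values the real function reports.

```python
def inv_ex_2x2(a, check_errors=False):
    """Return (inverse, info) for a 2x2 matrix given as nested lists.

    info == 0 means success. A nonzero info flags a singular input,
    in which case the returned "inverse" must not be trusted.
    """
    (p, q), (r, s) = a
    det = p * s - q * r
    info = 0 if det != 0 else 1
    if info != 0:
        if check_errors:
            raise ValueError("matrix is singular")
        return [[float("nan")] * 2 for _ in range(2)], info
    return [[s / det, -q / det], [-r / det, p / det]], info


def inv_2x2(a):
    # The throwing variant is a thin wrapper over the non-throwing one.
    inverse, _ = inv_ex_2x2(a, check_errors=True)
    return inverse


ok, ok_info = inv_ex_2x2([[2.0, 0.0], [0.0, 4.0]])
bad, bad_info = inv_ex_2x2([[1.0, 2.0], [2.0, 4.0]])  # singular, no exception
```

With `check_errors=False` the caller has to look at `bad_info` before using `bad`.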

Resolves https://github.com/pytorch/pytorch/issues/25095

Test Plan: Imported from OSS

Reviewed By: ngimel

Differential Revision: D28405148

Pulled By: mruberry

fbshipit-source-id: b8563a6c59048cb81e206932eb2f6cf489fd8531

Summary:

Fixes https://github.com/pytorch/pytorch/issues/56608

- Adds binding to the `c10::InferenceMode` RAII class in `torch._C._autograd.InferenceMode` through pybind. Also binds the `torch.is_inference_mode` function.

- Adds context manager `torch.inference_mode` to manage an instance of `c10::InferenceMode` (global). Implemented in `torch.autograd.grad_mode.py` to reuse the `_DecoratorContextManager` class.

- Adds some tests based on those linked in the issue + several more for just the context manager

Issues/todos (not necessarily for this PR):

- Improve short inference mode description

- Small example

- Improved testing since there is no direct way of checking TLS/dispatch keys


Pull Request resolved: https://github.com/pytorch/pytorch/pull/58045

Reviewed By: agolynski

Differential Revision: D28390595

Pulled By: soulitzer

fbshipit-source-id: ae98fa036c6a2cf7f56e0fd4c352ff804904752c

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58160

This PR updates the Torch Distributed Elastic documentation with references to the new `c10d` backend.

ghstack-source-id: 128783809

Test Plan: Visually verified the correct

Reviewed By: tierex

Differential Revision: D28384996

fbshipit-source-id: a40b0c37989ce67963322565368403e2be5d2592

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/57341

Require that users be explicit about what they are going to be

interning. There are a lot of changes that are enabled by this. The new

overall scheme is:

PackageExporter maintains a dependency graph. Users can add to it,

either explicitly (by issuing a `save_*` call) or implicitly (through

dependency resolution). Users can also specify what action to take when

PackageExporter encounters a module (deny, intern, mock, extern).

Nothing (except pickles, though that can be changed with a small amount

of work) is written to the zip archive until we are finalizing the

package. At that point, we consult the dependency graph and write out

the package exactly as it tells us to.

This accomplishes two things:

1. We can gather up *all* packaging errors instead of showing them one at a time.

2. We require that users be explicit about what's going in packages, which is a common request.
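A rough plain-Python sketch of the scheme, to make the "collect everything, write at the end" idea concrete. `ToyExporter`, `add_rule`, `require`, and `PackagingError` are hypothetical names invented for this sketch; this is not the `PackageExporter` API, and first-match-wins rule ordering is an assumption.

```python
from fnmatch import fnmatch


class PackagingError(Exception):
    pass


class ToyExporter:
    """Hypothetical stand-in for the scheme described above."""

    def __init__(self):
        self.patterns = []  # (glob, action) pairs, first match wins
        self.graph = {}     # module name -> action, or None if no rule matched
        self.written = []   # stands in for the zip archive

    def add_rule(self, glob, action):
        assert action in {"intern", "extern", "mock", "deny"}
        self.patterns.append((glob, action))

    def require(self, module):
        # Record the module in the graph; nothing is written yet.
        action = next((a for g, a in self.patterns if fnmatch(module, g)), None)
        self.graph[module] = action

    def close(self):
        # Finalization: report *all* problems at once, then write.
        errors = [m for m, a in self.graph.items() if a in (None, "deny")]
        if errors:
            raise PackagingError(
                "unhandled or denied modules: " + ", ".join(sorted(errors))
            )
        self.written = sorted(m for m, a in self.graph.items() if a == "intern")


exporter = ToyExporter()
exporter.add_rule("my_model.*", "intern")
exporter.add_rule("numpy", "extern")
for module in ["my_model.layers", "numpy", "requests", "yaml"]:
    exporter.require(module)

try:
    exporter.close()
    message = ""
except PackagingError as err:
    message = str(err)  # lists both unhandled modules in one error
```

Because nothing is written before `close()`, a failed export leaves `written` empty instead of a half-built archive.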

Differential Revision: D28114185

Test Plan: Imported from OSS

Reviewed By: SplitInfinity

Pulled By: suo

fbshipit-source-id: fa1abf1c26be42b14c7e7cf3403ecf336ad4fc12

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58170

Comm hooks can now be supported on the MPI and GLOO backends in addition to NCCL, so these warnings and check are no longer needed.

ghstack-source-id: 128799123

Test Plan: N/A

Reviewed By: agolynski

Differential Revision: D28388861

fbshipit-source-id: f56a7b9f42bfae1e904f58cdeccf7ceefcbb0850

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58023

Clearly state that some features of RPC aren't yet compatible with CUDA.

ghstack-source-id: 128688856

Test Plan: None

Reviewed By: agolynski

Differential Revision: D28347605

fbshipit-source-id: e8df9a4696c61a1a05f7d2147be84d41aeeb3b48

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/50048

To reflect the many changes introduced recently.

In my mind, CUDAFuture should be considered a "private" subclass, which in practice should always be returned as a downcast pointer to an ivalue::Future. Hence, we should document the CUDA behavior in the superclass, even if it's CUDA-agnostic, since that's the interface the users will see also for CUDA-enabled futures.

ghstack-source-id: 128640983

Test Plan: Built locally and looked at them.

Reviewed By: mrshenli

Differential Revision: D25757474

fbshipit-source-id: c6f66ba88fa6c4fc33601f31136422d6cf147203

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/57965

The bold effect does not work under quotes, so move it out.

ghstack-source-id: 128570357

Test Plan:

locally view


Reviewed By: rohan-varma

Differential Revision: D28329694

fbshipit-source-id: 299b427f4c0701ba70c84148f65203a6e2d6ac61

Summary:

This PR is focused on the API for `linalg.matrix_norm` and delegates computations to `linalg.norm` for the moment.

The main difference between the norms is when `dim=None`. In this case

- `linalg.norm` will compute a vector norm on the flattened input if `ord=None`, otherwise it requires the input to be either 1D or 2D in order to disambiguate between vector and matrix norm

- `linalg.vector_norm` will flatten the input

- `linalg.matrix_norm` will compute the norm over the last two dimensions, treating the input as batch of matrices
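The difference between flattening and treating the input as a batch is easy to see on a tiny example. This is a plain-Python sketch on nested lists, not the torch implementation; the helpers below are hypothetical and assume the default orders (2-norm for the vector case, Frobenius for the matrix case).

```python
import math

# A batch of two 2x2 matrices, i.e. shape (2, 2, 2).
batch = [
    [[3.0, 0.0], [0.0, 4.0]],
    [[1.0, 0.0], [0.0, 0.0]],
]


def flatten(x):
    # Recursively flatten nested lists into one list of scalars.
    if isinstance(x, list):
        return [v for item in x for v in flatten(item)]
    return [x]


def vector_norm(x):
    """2-norm of the flattened input: one scalar for the whole batch."""
    return math.sqrt(sum(v * v for v in flatten(x)))


def matrix_norm(x):
    """Frobenius norm over the last two dimensions: one value per matrix."""
    return [math.sqrt(sum(v * v for v in flatten(m))) for m in x]


flat_result = vector_norm(batch)   # sqrt(9 + 16 + 1)
batch_result = matrix_norm(batch)  # one norm per matrix in the batch
```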

In future PRs, the computations will be moved to `torch.linalg.matrix_norm` and `torch.norm` and `torch.linalg.norm` will delegate computations to either `linalg.vector_norm` or `linalg.matrix_norm` based on the arguments provided.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/57127

Reviewed By: mrshenli

Differential Revision: D28186736

Pulled By: mruberry

fbshipit-source-id: 99ce2da9d1c4df3d9dd82c0a312c9570da5caf25

Summary:

Redo of https://github.com/pytorch/pytorch/issues/56373 out of stack.

---

To reviewers: **please be nitpicky**. I've read this so often that I probably missed some typos and inconsistencies.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/57247

Reviewed By: albanD

Differential Revision: D28247402

Pulled By: mruberry

fbshipit-source-id: 71142678ee5c82cc8c0ecc1dad6a0b2b9236d3e6

Summary:

Pull Request resolved: https://github.com/pytorch/elastic/pull/148

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56811

Moves the Sphinx docs `*.rst` files from the torchelastic repository to torch. Note: this only moves the rst files; the next step is to link them to the main pytorch `index.rst` and write a new `examples.rst`.

Reviewed By: H-Huang

Differential Revision: D27974751

fbshipit-source-id: 8ff9f242aa32e0326c37da3916ea0633aa068fc5

Summary:

Fixes https://github.com/pytorch/pytorch/issues/54555

It has been discussed in the issue https://github.com/pytorch/pytorch/issues/54555 that the {h,v,d}split methods unexpectedly match a single int[] argument when they are expected to match a single int argument. The same unexpected behavior can happen in other functions/methods that accept both int[] and int? as single-argument signatures.

In this PR we solve this problem by giving higher priority to int/int? arguments over int[] while sorting signatures.
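A toy model of why the ordering matters. This is a plain-Python sketch, not the real argument parser: the overload names, the `accepts`/`resolve` helpers, and the assumption that a bare int can bind to int[] (varargs style) are all simplifications made up for illustration.

```python
def accepts(param_type, arg):
    if param_type == "int":
        return isinstance(arg, int)
    if param_type == "int?":
        return arg is None or isinstance(arg, int)
    if param_type == "int[]":
        # Simplifying assumption: a bare int may also bind to int[].
        return isinstance(arg, int) or (
            isinstance(arg, list) and all(isinstance(v, int) for v in arg)
        )
    return False


def resolve(signatures, arg):
    # First signature that accepts the argument wins.
    for name, param_type in signatures:
        if accepts(param_type, arg):
            return name
    raise TypeError("no matching signature")


def sort_signatures(signatures):
    # Stable sort: int / int? overloads are tried before int[] ones.
    return sorted(signatures, key=lambda sig: sig[1] == "int[]")


declared = [("split_at_indices", "int[]"), ("split_into_sections", "int")]
before = resolve(declared, 2)                   # binds to the int[] overload
after = resolve(sort_signatures(declared), 2)   # binds to the int overload
still_list = resolve(sort_signatures(declared), [1, 3])
```

A list argument still reaches the int[] overload after sorting, since the int overload rejects it.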

We also add the {h,v,d}split methods here, which helped us to discover this unexpected behavior.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/57346

Reviewed By: ezyang

Differential Revision: D28121234

Pulled By: iramazanli

fbshipit-source-id: 851cf40b370707be89298177b51ceb4527f4b2d6

Summary:

The new function has the following signature `cholesky_ex(Tensor input, *, bool check_errors=False) -> (Tensor L, Tensor infos)`. When `check_errors=True`, an error is thrown if the decomposition fails; when `check_errors=False`, responsibility for checking the decomposition is on the user.

When `check_errors=False`, we don't have host-device memory transfers for checking the values of the `info` tensor.

Rewrote the internal code for `torch.linalg.cholesky`. Added `cholesky_stub` dispatch. `linalg_cholesky` is implemented using calls to `linalg_cholesky_ex` now.
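For intuition about what the `info` output conveys, here is a plain-Python sketch of a Cholesky factorization that reports failure through a status value instead of raising. It is not the code in this PR: the function below is a hypothetical stand-in, and the convention that a nonzero `info` holds the order of the first failing leading minor is an assumption borrowed from the usual LAPACK style.

```python
import math


def cholesky_ex(a, check_errors=False):
    """Return (lower, info) for a symmetric matrix given as nested lists."""
    n = len(a)
    lower = [[0.0] * n for _ in range(n)]
    info = 0
    for j in range(n):
        d = a[j][j] - sum(lower[j][k] ** 2 for k in range(j))
        if d <= 0.0:
            # Leading minor of order j + 1 is not positive-definite.
            info = j + 1
            break
        lower[j][j] = math.sqrt(d)
        for i in range(j + 1, n):
            s = sum(lower[i][k] * lower[j][k] for k in range(j))
            lower[i][j] = (a[i][j] - s) / lower[j][j]
    if check_errors and info != 0:
        raise ValueError(f"leading minor of order {info} is not positive-definite")
    return lower, info


factor, info = cholesky_ex([[4.0, 2.0], [2.0, 3.0]])
_, failed_info = cholesky_ex([[1.0, 2.0], [2.0, 1.0]])  # no exception raised
```

With `check_errors=False` the status is only a returned value, so the caller decides when (or whether) to inspect it.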

Resolves https://github.com/pytorch/pytorch/issues/57032.

Ref. https://github.com/pytorch/pytorch/issues/34272, https://github.com/pytorch/pytorch/issues/47608, https://github.com/pytorch/pytorch/issues/47953

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56724

Reviewed By: ngimel

Differential Revision: D27960176

Pulled By: mruberry

fbshipit-source-id: f05f3d5d9b4aa444e41c4eec48ad9a9b6fd5dfa5

Summary:

You can find the latest rendered version in the `python_doc_build` CI job below, in the artifact tab of that build on CircleCI.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/55966

Reviewed By: H-Huang

Differential Revision: D28032446

Pulled By: albanD

fbshipit-source-id: 227ad37b03d39894d736c19cae3195b4d56fc62f

Summary:

This PR tries to make the docs of `torch.linalg` have/be:

- More uniform notation and structure for every function.

- More uniform use of back-quotes and the `:attr:` directive

- More readable for a non-specialised audience through explanations of the form that factorisations take and when it would be beneficial to use which arguments in some solvers.

- More connected among the different functions through the use of the `.. seealso::` directive.

- More information on when gradients explode / when a function silently returns a wrong result / when things do not work in general

I tried to follow the structure of "one short description and then the rest" to be able to format the docs like those of `torch.` or `torch.nn`. I did not do that yet, as I am waiting for the green light on this idea:

https://github.com/pytorch/pytorch/issues/54878#issuecomment-816636171

What this PR does not do:

- Clean the documentation of other functions that are not in the `linalg` module (although I started doing this for `torch.svd`, but then I realised that this PR would touch way too many functions).

Fixes https://github.com/pytorch/pytorch/issues/54878

cc mruberry IvanYashchuk

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56265

Reviewed By: H-Huang

Differential Revision: D27993986

Pulled By: mruberry

fbshipit-source-id: adde7b7383387e1213cc0a6644331f0632b7392d

Summary:

No outstanding issue, can create it if needed.

Was looking for that resource and it was moved without fixing the documentation.

Cheers

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56776

Reviewed By: heitorschueroff

Differential Revision: D27967020

Pulled By: ezyang

fbshipit-source-id: a5cd7d554da43a9c9e44966ccd0b0ad9eef2948c

Summary:

In the optimizer documentation, many of the learning rate scheduler [examples](https://pytorch.org/docs/stable/optim.html#how-to-adjust-learning-rate) follow a generic template. In this PR we provide a precise, simple use case showing how to use learning rate schedulers. In a follow-up example we also show how to chain two schedulers one after the other.
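The arithmetic behind chaining can be sketched in plain Python, assuming each scheduler applies its multiplicative factor to whatever rate the previous one left behind. The base rate, the decay factors, and the milestones below are illustrative values picked for this sketch, and `chained_lr` is a hypothetical helper, not part of `torch.optim`.

```python
import math


def chained_lr(base_lr, epoch, exp_gamma=0.9, milestones=(30, 80), step_gamma=0.1):
    """Closed form for an exponential decay chained with a multi-step decay."""
    passed = sum(1 for m in milestones if epoch >= m)
    return base_lr * exp_gamma ** epoch * step_gamma ** passed


# Step-by-step simulation: both "schedulers" step once per epoch.
lr = 0.1
history = [lr]
for epoch in range(1, 41):
    lr *= 0.9              # exponential decay applied every epoch
    if epoch in (30, 80):
        lr *= 0.1          # extra decay when a milestone is reached
    history.append(lr)

matches = all(
    math.isclose(history[e], chained_lr(0.1, e), rel_tol=1e-9) for e in range(41)
)
```

The two factors simply multiply, so the order in which the two schedulers step within an epoch does not change the resulting rate in this model.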

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56705

Reviewed By: ezyang

Differential Revision: D27966704

Pulled By: iramazanli

fbshipit-source-id: f32b2d70d5cad7132335a9b13a2afa3ac3315a13

Summary:

The pre-amble here is misformatted at least and is hard to make sense of: https://pytorch.org/docs/master/quantization.html#prototype-fx-graph-mode-quantization

This PR is trying to make things easier to understand.

As I'm new to this please verify that my modifications remain in line with what may have been meant originally.

Thanks.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52192

Reviewed By: ailzhang

Differential Revision: D27941730

Pulled By: vkuzo

fbshipit-source-id: 6c4bbf7c87d8fb87ab5d588b690a72045752e47a

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56528

Tried to search across internal and external usage of DataLoader. People haven't started to use `generator` for `DataLoader`.

Test Plan: Imported from OSS

Reviewed By: albanD

Differential Revision: D27908487

Pulled By: ejguan

fbshipit-source-id: 14c83ed40d4ba4dc988b121968a78c2732d8eb93

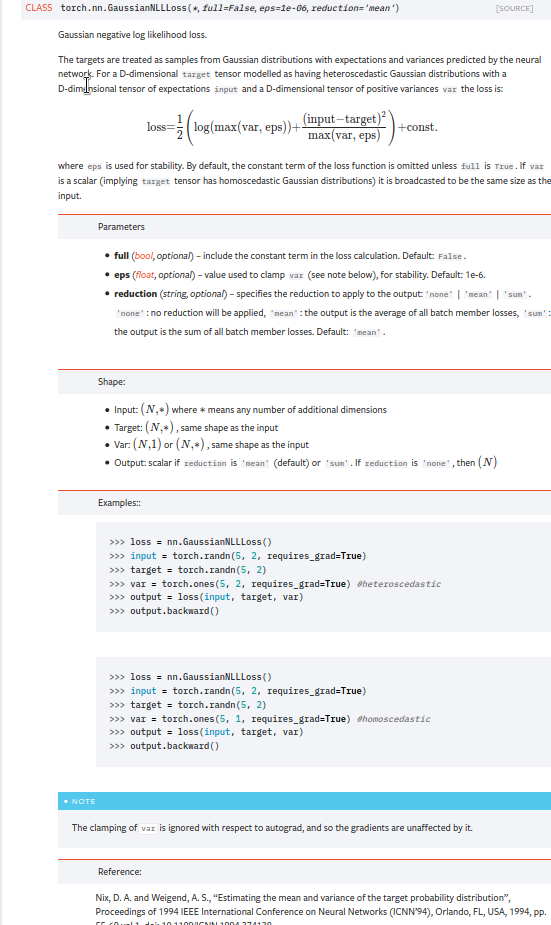

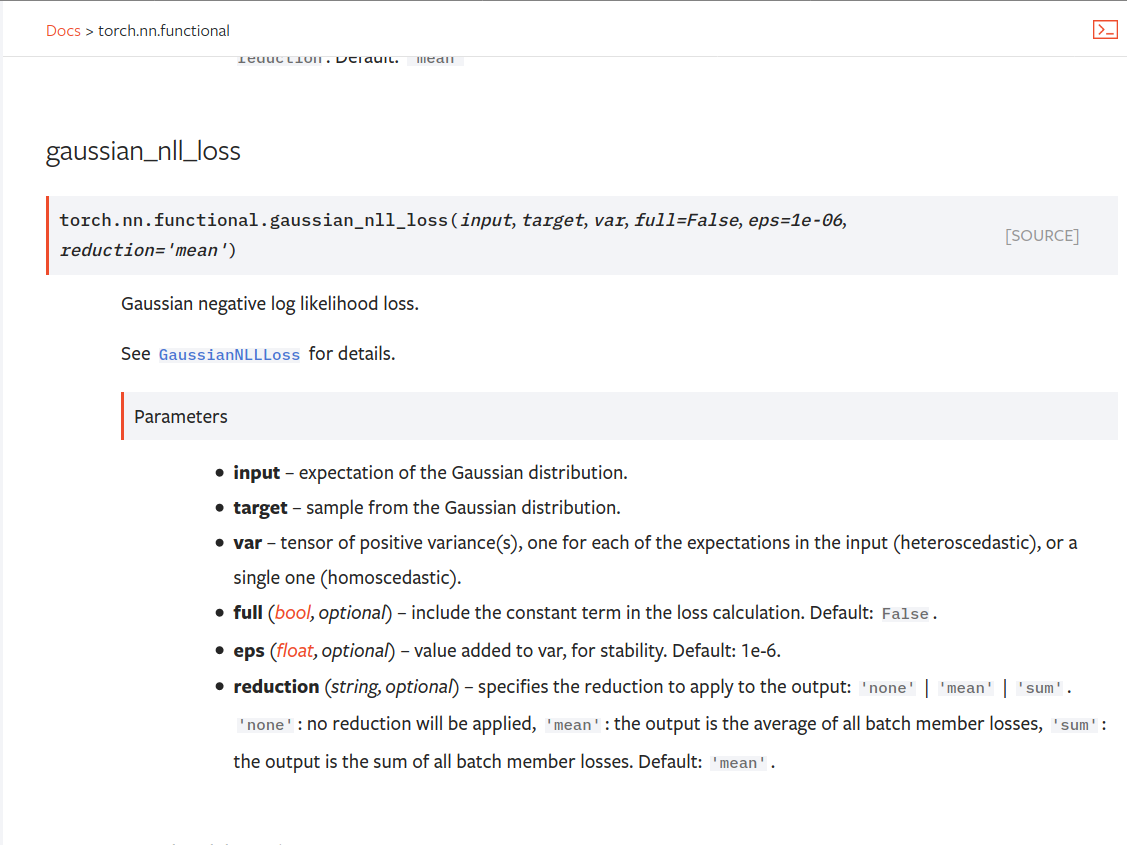

Summary:

Fixes https://github.com/pytorch/pytorch/issues/53964. cc albanD almson

## Major changes:

- Overhauled the actual loss calculation so that the shapes are now correct (in functional.py)

- added the missing doc in nn.functional.rst

## Minor changes (in functional.py):

- I removed the previous check on whether input and target were the same shape. This is to allow for broadcasting, say when you have 10 predictions that all have the same target.

- I added some comments to explain each shape check in detail. Let me know if these should be shortened/cut.

Screenshots of updated docs attached.

Let me know what you think, thanks!

## Edit: Description of change of behaviour (affecting BC):

The backwards-compatibility is only affected for the `reduction='none'` mode. This was the source of the bug. For tensors with size (N, D), the old returned loss had size (N), as incorrect summation was happening. It will now have size (N, D) as expected.

### Example

Define input tensors, all with size (2, 3).

`input = torch.tensor([[0., 1., 3.], [2., 4., 0.]], requires_grad=True)`

`target = torch.tensor([[1., 4., 2.], [-1., 2., 3.]])`

`var = 2*torch.ones(size=(2, 3), requires_grad=True)`

Initialise loss with reduction mode 'none'. We expect the returned loss to have the same size as the input tensors, (2, 3).

`loss = torch.nn.GaussianNLLLoss(reduction='none')`

Old behaviour:

`print(loss(input, target, var))`

`# Gives tensor([3.7897, 6.5397], grad_fn=<MulBackward0>). This has size (2).`

New behaviour:

`print(loss(input, target, var))`

`# Gives tensor([[0.5966, 2.5966, 0.5966], [2.5966, 1.3466, 2.5966]], grad_fn=<MulBackward0>)`

`# This has the expected size, (2, 3).`

To recover the old behaviour, sum along all dimensions except for the 0th:

`print(loss(input, target, var).sum(dim=1))`

`# Gives tensor([3.7897, 6.5397], grad_fn=<SumBackward1>).`
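The numbers above can be checked by hand. Here is a plain-Python sketch that reproduces them, assuming the per-element loss is `0.5 * (log(var) + (input - target)^2 / var)` with the constant term omitted. It uses nested lists instead of tensors, and `gaussian_nll` is a hypothetical helper, not the function in functional.py.

```python
import math

inputs = [[0.0, 1.0, 3.0], [2.0, 4.0, 0.0]]
targets = [[1.0, 4.0, 2.0], [-1.0, 2.0, 3.0]]
variances = [[2.0] * 3 for _ in range(2)]


def gaussian_nll(x, y, var):
    # Assumed per-element form, constant term omitted.
    return 0.5 * (math.log(var) + (x - y) ** 2 / var)


# New behaviour with reduction='none': one loss value per element, size (2, 3).
per_element = [
    [gaussian_nll(x, y, v) for x, y, v in zip(x_row, y_row, v_row)]
    for x_row, y_row, v_row in zip(inputs, targets, variances)
]

# Old behaviour: summed along every dimension except the 0th, size (2).
per_row = [sum(row) for row in per_element]

rounded_elements = [[round(v, 4) for v in row] for row in per_element]
rounded_rows = [round(v, 4) for v in per_row]
```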

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56469

Reviewed By: jbschlosser, agolynski

Differential Revision: D27894170

Pulled By: albanD

fbshipit-source-id: 197890189c97c22109491c47f469336b5b03a23f

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56547

**Summary**

This commit tweaks the docstrings of `PackageExporter` so that they look

nicer on the docs website.

**Test Plan**

Continuous integration.

Test Plan: Imported from OSS

Reviewed By: ailzhang

Differential Revision: D27912965

Pulled By: SplitInfinity

fbshipit-source-id: 38c0a715365b8cfb9eecdd1b38ba525fa226a453