```Python

chebyshev_polynomial_v(input, n, *, out=None) -> Tensor

```

Chebyshev polynomial of the third kind $V_{n}(\text{input})$.

```Python

chebyshev_polynomial_w(input, n, *, out=None) -> Tensor

```

Chebyshev polynomial of the fourth kind $W_{n}(\text{input})$.

```Python

legendre_polynomial_p(input, n, *, out=None) -> Tensor

```

Legendre polynomial $P_{n}(\text{input})$.
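For reference, $P_{n}$ can be evaluated with Bonnet's recursion. The sketch below is pure Python and only illustrates the mathematics; it is not the kernel added by this PR, and `legendre_p` is a hypothetical helper name.

```python
def legendre_p(x: float, n: int) -> float:
    """Evaluate P_n(x) with Bonnet's recursion (illustrative helper only)."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n == 0:
        return 1.0
    p_prev, p_curr = 1.0, x  # P_0(x) and P_1(x)
    for k in range(1, n):
        # (k + 1) P_{k+1}(x) = (2k + 1) x P_k(x) - k P_{k-1}(x)
        p_prev, p_curr = p_curr, ((2 * k + 1) * x * p_curr - k * p_prev) / (k + 1)
    return p_curr
```

For example, `legendre_p(0.5, 2)` evaluates $(3 \times 0.5^{2} - 1) / 2$.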

```Python

shifted_chebyshev_polynomial_t(input, n, *, out=None) -> Tensor

```

Shifted Chebyshev polynomial of the first kind $T_{n}^{\ast}(\text{input})$.

```Python

shifted_chebyshev_polynomial_u(input, n, *, out=None) -> Tensor

```

Shifted Chebyshev polynomial of the second kind $U_{n}^{\ast}(\text{input})$.

```Python

shifted_chebyshev_polynomial_v(input, n, *, out=None) -> Tensor

```

Shifted Chebyshev polynomial of the third kind $V_{n}^{\ast}(\text{input})$.

```Python

shifted_chebyshev_polynomial_w(input, n, *, out=None) -> Tensor

```

Shifted Chebyshev polynomial of the fourth kind $W_{n}^{\ast}(\text{input})$.
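The shifted polynomials are conventionally obtained by mapping $[0, 1]$ onto $[-1, 1]$, e.g. $T_{n}^{\ast}(x) = T_{n}(2x - 1)$. The pure-Python sketch below assumes that convention; the helper names are hypothetical and this is not the code added by this PR.

```python
def chebyshev_t(x: float, n: int) -> float:
    """T_n(x) via the three-term recursion (illustrative helper only)."""
    if n == 0:
        return 1.0
    t_prev, t_curr = 1.0, x  # T_0(x) and T_1(x)
    for _ in range(n - 1):
        t_prev, t_curr = t_curr, 2.0 * x * t_curr - t_prev
    return t_curr


def shifted_chebyshev_t(x: float, n: int) -> float:
    """T*_n(x), assuming the convention T*_n(x) = T_n(2x - 1)."""
    return chebyshev_t(2.0 * x - 1.0, n)
```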

Pull Request resolved: https://github.com/pytorch/pytorch/pull/78304

Approved by: https://github.com/mruberry

Adds:

```Python

bessel_j0(input, *, out=None) -> Tensor

```

Bessel function of the first kind of order $0$, $J_{0}(\text{input})$.

```Python

bessel_j1(input, *, out=None) -> Tensor

```

Bessel function of the first kind of order $1$, $J_{1}(\text{input})$.

```Python

bessel_y0(input, *, out=None) -> Tensor

```

Bessel function of the second kind of order $0$, $Y_{0}(\text{input})$.

```Python

bessel_y1(input, *, out=None) -> Tensor

```

Bessel function of the second kind of order $1$, $Y_{1}(\text{input})$.

```Python

modified_bessel_i0(input, *, out=None) -> Tensor

```

Modified Bessel function of the first kind of order $0$, $I_{0}(\text{input})$.

```Python

modified_bessel_i1(input, *, out=None) -> Tensor

```

Modified Bessel function of the first kind of order $1$, $I_{1}(\text{input})$.

```Python

modified_bessel_k0(input, *, out=None) -> Tensor

```

Modified Bessel function of the second kind of order $0$, $K_{0}(\text{input})$.

```Python

modified_bessel_k1(input, *, out=None) -> Tensor

```

Modified Bessel function of the second kind of order $1$, $K_{1}(\text{input})$.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/78451

Approved by: https://github.com/mruberry

Adds:

```Python

chebyshev_polynomial_t(input, n, *, out=None) -> Tensor

```

Chebyshev polynomial of the first kind $T_{n}(\text{input})$.

If $n = 0$, $1$ is returned. If $n = 1$, $\text{input}$ is returned. If $n < 6$ or $|\text{input}| > 1$ the recursion:

$$T_{n + 1}(\text{input}) = 2 \times \text{input} \times T_{n}(\text{input}) - T_{n - 1}(\text{input})$$

is evaluated. Otherwise, the explicit trigonometric formula:

$$T_{n}(\text{input}) = \text{cos}(n \times \text{arccos}(\text{input}))$$

is evaluated.
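The branching described above can be sketched in pure Python as follows. This is a scalar illustration of the stated rules, not the actual kernel; `chebyshev_t` is a hypothetical helper name.

```python
import math


def chebyshev_t(x: float, n: int) -> float:
    """Scalar sketch of the evaluation strategy described above."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n == 0:
        return 1.0
    if n == 1:
        return x
    if n < 6 or abs(x) > 1.0:
        # Recursion: T_{k+1}(x) = 2 x T_k(x) - T_{k-1}(x)
        t_prev, t_curr = 1.0, x
        for _ in range(n - 1):
            t_prev, t_curr = t_curr, 2.0 * x * t_curr - t_prev
        return t_curr
    # Trigonometric form, valid for |x| <= 1
    return math.cos(n * math.acos(x))
```

The recursion is also the only option for $|\text{input}| > 1$, where `math.acos` is undefined for real arguments.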

## Derivatives

Recommended $k$-derivative formula with respect to $\text{input}$:

$$2^{-1 + k} \times n \times \Gamma(k) \times C_{-k + n}^{k}(\text{input})$$

where $C$ is the Gegenbauer polynomial.
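As a sanity check of the formula above, the sketch below evaluates it in pure Python with the standard Gegenbauer recursion. Both helper names are hypothetical, and treating $C_{m}^{k}$ as zero for $m < 0$ is an assumption made here so that derivatives of order $k > n$ vanish.

```python
import math


def gegenbauer_c(x: float, n: int, alpha: float) -> float:
    """C_n^alpha(x) via the three-term recursion (illustrative helper only)."""
    if n < 0:
        return 0.0  # assumption: negative degree contributes nothing
    if n == 0:
        return 1.0
    c_prev, c_curr = 1.0, 2.0 * alpha * x  # C_0 and C_1
    for m in range(2, n + 1):
        # m C_m = 2 x (m + alpha - 1) C_{m-1} - (m + 2 alpha - 2) C_{m-2}
        c_prev, c_curr = c_curr, (
            2.0 * x * (m + alpha - 1.0) * c_curr - (m + 2.0 * alpha - 2.0) * c_prev
        ) / m
    return c_curr


def chebyshev_t_derivative(x: float, n: int, k: int) -> float:
    """k-th derivative of T_n with respect to x, using the formula above."""
    if k < 1:
        raise ValueError("k must be at least 1")
    return 2.0 ** (k - 1) * n * math.gamma(k) * gegenbauer_c(x, n - k, float(k))
```

For $n = 3$, where $T_{3}(x) = 4x^{3} - 3x$, this gives $12x^{2} - 3$ for $k = 1$ and $24x$ for $k = 2$.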

Recommended $k$-derivative formula with respect to $n$:

$$\text{arccos}(\text{input})^{k} \times \text{cos}(\frac{k \times \pi}{2} + n \times \text{arccos}(\text{input})).$$

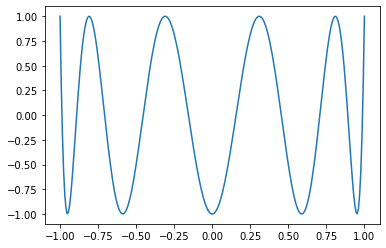

## Example

```Python

import matplotlib.pyplot

import torch

x = torch.linspace(-1, 1, 256)

matplotlib.pyplot.plot(x, torch.special.chebyshev_polynomial_t(x, 10))

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/78196

Approved by: https://github.com/mruberry

Euler beta function:

```Python

torch.special.beta(input, other, *, out=None) -> Tensor

```

`reentrant_gamma` and `reentrant_ln_gamma` implementations (using Stirling’s approximation) are provided. I started working on this before I realized we were missing a gamma implementation (despite providing incomplete gamma implementations). Uses the coefficients computed by Steve Moshier to replicate SciPy’s implementation. Likewise, it mimics SciPy’s behavior (instead of the behavior in Cephes).
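For orientation, the beta function relates to the gamma function by $B(a, b) = \Gamma(a) \Gamma(b) / \Gamma(a + b)$. The sketch below uses Python's `math.lgamma` rather than the `reentrant_gamma` helpers from this PR, and it only handles positive real arguments; it is an illustration of the identity, not the implementation.

```python
import math


def beta(a: float, b: float) -> float:
    """B(a, b) for a, b > 0, computed in log space to limit overflow."""
    if a <= 0.0 or b <= 0.0:
        raise ValueError("this sketch only handles positive arguments")
    return math.exp(math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b))
```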

Pull Request resolved: https://github.com/pytorch/pytorch/pull/78031

Approved by: https://github.com/mruberry

Summary:

Reference https://github.com/pytorch/pytorch/issues/50345

`zeta` was already present in the codebase to support computation of `polygamma`.

However, before this PR `zeta` only had a `double(double, double)` signature **for CPU**, which meant that the zeta part of the `polygamma` computation was always upcast to `double`.

With this PR, float computations will take place in float and double in double.

Also refactored the code and moved the duplicated code from `Math.cuh` to `Math.h`.

**Note**: For SciPy, `q` is optional, and if it is `None`, it defaults to `1`, which corresponds to the Riemann zeta function. However, for `torch.special.zeta`, I made it mandatory because it feels odd that without `q` this is the Riemann zeta function and with `q` it is the general Hurwitz zeta function. I think sticking to just the general form makes more sense, as passing `1` for `q` is trivial.
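The relationship between the two functions can be checked with a small pure-Python sketch. It sums the Hurwitz series directly and adds an Euler-Maclaurin tail correction; `hurwitz_zeta` and its `terms` parameter are hypothetical names, and this is not the algorithm used in `Math.h`.

```python
import math


def hurwitz_zeta(s: float, q: float, terms: int = 1000) -> float:
    """Approximate sum_{k >= 0} (k + q)^(-s) for s > 1 and q > 0."""
    if s <= 1.0 or q <= 0.0:
        raise ValueError("this sketch requires s > 1 and q > 0")
    # Leading part of the series, summed directly.
    head = sum((k + q) ** -s for k in range(terms))
    # Euler-Maclaurin estimate of the remaining tail starting at k = terms.
    a = terms + q
    tail = a ** (1.0 - s) / (s - 1.0) + 0.5 * a ** -s + s * a ** (-s - 1.0) / 12.0
    return head + tail
```

With `q = 1.0` the result approximates the Riemann zeta function, so `hurwitz_zeta(2.0, 1.0)` should be close to $\pi^{2} / 6$.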

Verify:

* [x] Docs https://14234587-65600975-gh.circle-artifacts.com/0/docs/special.html#torch.special.zeta

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59623

Reviewed By: ngimel

Differential Revision: D29348269

Pulled By: mruberry

fbshipit-source-id: a3f9ebe1f7724dbe66de2b391afb9da1cfc3e4bb

Summary:

Reference: https://github.com/pytorch/pytorch/issues/50345

Changes:

* Add `i0e`

* Move some kernels from `UnaryOpsKernel.cu` to `UnarySpecialOpsKernel.cu` to decrease compilation time per file.
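For context, `i0e` is the exponentially scaled modified Bessel function of the first kind of order $0$, i.e. $e^{-|x|} I_{0}(x)$. The pure-Python sketch below uses the power series for $I_{0}$ and is only meant for moderate $|x|$; it does not reflect the kernel added here, and the `max_terms` parameter is hypothetical.

```python
import math


def i0e(x: float, max_terms: int = 200) -> float:
    """exp(-|x|) * I0(x), with I0 summed from its power series."""
    quarter_x2 = 0.25 * x * x
    term = 1.0   # k = 0 term of sum_k (x^2 / 4)^k / (k!)^2
    total = 1.0
    for k in range(1, max_terms):
        term *= quarter_x2 / (k * k)
        total += term
        if term < 1e-17 * total:
            break  # remaining terms no longer change the sum
    return math.exp(-abs(x)) * total
```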

Time taken by the `i0e_vs_scipy` tests: around 6.33 s.

<details>

<summary>Test Run Log</summary>

```

(pytorch-cuda-dev) kshiteej@qgpu1:~/Pytorch/pytorch_module_special$ pytest test/test_unary_ufuncs.py -k _i0e_vs

======================================================================= test session starts ========================================================================

platform linux -- Python 3.8.6, pytest-6.1.2, py-1.9.0, pluggy-0.13.1

rootdir: /home/kshiteej/Pytorch/pytorch_module_special, configfile: pytest.ini

plugins: hypothesis-5.38.1

collected 8843 items / 8833 deselected / 10 selected

test/test_unary_ufuncs.py ...sss.... [100%]

========================================================================= warnings summary =========================================================================

../../.conda/envs/pytorch-cuda-dev/lib/python3.8/site-packages/torch/backends/cudnn/__init__.py:73

test/test_unary_ufuncs.py::TestUnaryUfuncsCUDA::test_special_i0e_vs_scipy_cuda_bfloat16

/home/kshiteej/.conda/envs/pytorch-cuda-dev/lib/python3.8/site-packages/torch/backends/cudnn/__init__.py:73: UserWarning: PyTorch was compiled without cuDNN/MIOpen support. To use cuDNN/MIOpen, rebuild PyTorch making sure the library is visible to the build system.

warnings.warn(

-- Docs: https://docs.pytest.org/en/stable/warnings.html

===================================================================== short test summary info ======================================================================

SKIPPED [3] test/test_unary_ufuncs.py:1182: not implemented: Could not run 'aten::_copy_from' with arguments from the 'Meta' backend. This could be because the operator doesn't exist for this backend, or was omitted during the selective/custom build process (if using custom build). If you are a Facebook employee using PyTorch on mobile, please visit https://fburl.com/ptmfixes for possible resolutions. 'aten::_copy_from' is only available for these backends: [BackendSelect, Named, InplaceOrView, AutogradOther, AutogradCPU, AutogradCUDA, AutogradXLA, UNKNOWN_TENSOR_TYPE_ID, AutogradMLC, AutogradNestedTensor, AutogradPrivateUse1, AutogradPrivateUse2, AutogradPrivateUse3, Tracer, Autocast, Batched, VmapMode].

BackendSelect: fallthrough registered at ../aten/src/ATen/core/BackendSelectFallbackKernel.cpp:3 [backend fallback]

Named: registered at ../aten/src/ATen/core/NamedRegistrations.cpp:7 [backend fallback]

InplaceOrView: fallthrough registered at ../aten/src/ATen/core/VariableFallbackKernel.cpp:56 [backend fallback]

AutogradOther: registered at ../torch/csrc/autograd/generated/VariableType_4.cpp:8761 [autograd kernel]

AutogradCPU: registered at ../torch/csrc/autograd/generated/VariableType_4.cpp:8761 [autograd kernel]

AutogradCUDA: registered at ../torch/csrc/autograd/generated/VariableType_4.cpp:8761 [autograd kernel]

AutogradXLA: registered at ../torch/csrc/autograd/generated/VariableType_4.cpp:8761 [autograd kernel]

UNKNOWN_TENSOR_TYPE_ID: registered at ../torch/csrc/autograd/generated/VariableType_4.cpp:8761 [autograd kernel]

AutogradMLC: registered at ../torch/csrc/autograd/generated/VariableType_4.cpp:8761 [autograd kernel]

AutogradNestedTensor: registered at ../torch/csrc/autograd/generated/VariableType_4.cpp:8761 [autograd kernel]

AutogradPrivateUse1: registered at ../torch/csrc/autograd/generated/VariableType_4.cpp:8761 [autograd kernel]

AutogradPrivateUse2: registered at ../torch/csrc/autograd/generated/VariableType_4.cpp:8761 [autograd kernel]

AutogradPrivateUse3: registered at ../torch/csrc/autograd/generated/VariableType_4.cpp:8761 [autograd kernel]

Tracer: registered at ../torch/csrc/autograd/generated/TraceType_4.cpp:9348 [kernel]

Autocast: fallthrough registered at ../aten/src/ATen/autocast_mode.cpp:250 [backend fallback]

Batched: registered at ../aten/src/ATen/BatchingRegistrations.cpp:1016 [backend fallback]

VmapMode: fallthrough registered at ../aten/src/ATen/VmapModeRegistrations.cpp:33 [backend fallback]

==================================================== 7 passed, 3 skipped, 8833 deselected, 2 warnings in 6.33s =====================================================

```

</details>

TODO:

* [x] Check rendered docs (https://11743402-65600975-gh.circle-artifacts.com/0/docs/special.html)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/54409

Reviewed By: jbschlosser

Differential Revision: D27760472

Pulled By: mruberry

fbshipit-source-id: bdfbcaa798b00c51dc9513c34626246c8fc10548

Summary:

Related to https://github.com/pytorch/pytorch/issues/52256

Use autosummary instead of autofunction to create subpages for autograd functions. I left the autoclass parts intact but manually laid out their members.

Also, the LaTeX formatting of the special page emitted a warning (solved by adding `\begin{align}...\end{align}`), and the alignment of equations was fixed (by using `&=` instead of `=`).

zou3519

Pull Request resolved: https://github.com/pytorch/pytorch/pull/55672

Reviewed By: jbschlosser

Differential Revision: D27736855

Pulled By: zou3519

fbshipit-source-id: addb56f4f81c82d8537884e0ff243c1e34969a6e

Summary:

Reference: https://github.com/pytorch/pytorch/issues/50345

Changes:

* Alias for sigmoid and logit

* Adds out variant for C++ API

* Updates docs to link back to `special` documentation
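The two aliased functions are inverses of each other on $(0, 1)$. The pure-Python sketch below illustrates that relationship; the name `expit` for the sigmoid follows SciPy's naming and is an assumption here, since the message above does not spell out the alias names.

```python
import math


def expit(x: float) -> float:
    """Logistic sigmoid, written to avoid overflow for large |x|."""
    if x >= 0.0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def logit(p: float) -> float:
    """Inverse of the sigmoid for 0 < p < 1."""
    return math.log(p / (1.0 - p))
```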

Pull Request resolved: https://github.com/pytorch/pytorch/pull/54759

Reviewed By: mrshenli

Differential Revision: D27615208

Pulled By: mruberry

fbshipit-source-id: 8bba908d1bea246e4aa9dbadb6951339af353556