Refactor torchscript based exporter logic to move them to a single (private) location for better code management. Original public module and method apis are preserved.

- Updated module paths in `torch/csrc/autograd/python_function.cpp` accordingly

- Removed `check_onnx_broadcast` from `torch/autograd/_functions/utils.py` because it is private&unused

@albanD / @soulitzer could you review changes in `torch/csrc/autograd/python_function.cpp` and

`torch/autograd/_functions/utils.py`? Thanks!

## BC Breaking

- **Deprecated members in `torch.onnx.verification` are removed**

Differential Revision: [D81236421](https://our.internmc.facebook.com/intern/diff/D81236421)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/161323

Approved by: https://github.com/titaiwangms, https://github.com/angelayi

beartype has served us well in identifying type errors and ensuring we call internal functions with the correct arguments (thanks!). However, the value of having beartype is diminished because of the following:

1. When beartype improves support for better Dict[] type checking, it discovered typing mistakes in some functions that were previously uncaught. This caused the exporter to fail with newer versions beartype when it used to succeed. Since we cannot fix PyTorch and release a new version just because of this, it creates confusion for users that have beartype in their environment from using torch.onnx

2. beartype adds an additional call line in the traceback, which makes the already thick dynamo stack even larger, affecting readability when users diagnose errors with the traceback.

3. Since the typing annotations need to be evaluated, we cannot use new syntaxes like `|` because we need to maintain compatibility with Python 3.8. We don't want to wait for PyTorch take py310 as the lowest supported Python before using the new typing syntaxes.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/130484

Approved by: https://github.com/titaiwangms

beartype has served us well in identifying type errors and ensuring we call internal functions with the correct arguments (thanks!). However, the value of having beartype is diminished because of the following:

1. When beartype improves support for better Dict[] type checking, it discovered typing mistakes in some functions that were previously uncaught. This caused the exporter to fail with newer versions beartype when it used to succeed. Since we cannot fix PyTorch and release a new version just because of this, it creates confusion for users that have beartype in their environment from using torch.onnx

2. beartype adds an additional call line in the traceback, which makes the already thick dynamo stack even larger, affecting readability when users diagnose errors with the traceback.

3. Since the typing annotations need to be evaluated, we cannot use new syntaxes like `|` because we need to maintain compatibility with Python 3.8. We don't want to wait for PyTorch take py310 as the lowest supported Python before using the new typing syntaxes.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/130484

Approved by: https://github.com/titaiwangms

beartype has served us well in identifying type errors and ensuring we call internal functions with the correct arguments (thanks!). However, the value of having beartype is diminished because of the following:

1. When beartype improves support for better Dict[] type checking, it discovered typing mistakes in some functions that were previously uncaught. This caused the exporter to fail with newer versions beartype when it used to succeed. Since we cannot fix PyTorch and release a new version just because of this, it creates confusion for users that have beartype in their environment from using torch.onnx

2. beartype adds an additional call line in the traceback, which makes the already thick dynamo stack even larger, affecting readability when users diagnose errors with the traceback.

3. Since the typing annotations need to be evaluated, we cannot use new syntaxes like `|` because we need to maintain compatibility with Python 3.8. We don't want to wait for PyTorch take py310 as the lowest supported Python before using the new typing syntaxes.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/130484

Approved by: https://github.com/titaiwangms

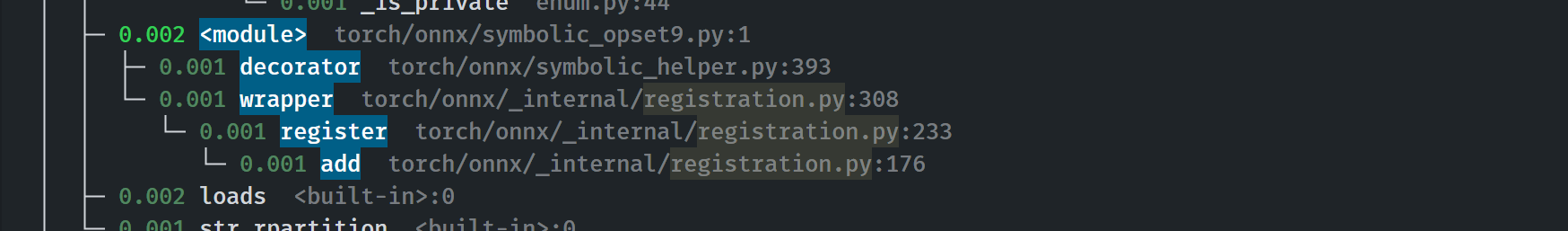

This is the 4th PR in the series of #83787. It enables the use of `@onnx_symbolic` across `torch.onnx`.

- **Backward breaking**: Removed some symbolic functions from `__all__` because of the use of `@onnx_symbolic` for registering the same function on multiple aten names.

- Decorate all symbolic functions with `@onnx_symbolic`

- Move Quantized and Prim ops out from classes to functions defined in the modules. Eliminate the need for `isfunction` checking, speeding up the registration process by 60%.

- Remove the outdated unit test `test_symbolic_opset9.py`

- Symbolic function registration moved from the first call to `_run_symbolic_function` to init time.

- Registration is fast:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/84448

Approved by: https://github.com/AllenTiTaiWang, https://github.com/BowenBao

Enable runtime type checking for all torch.onnx public apis, symbolic functions and most helpers (minus two that does not have a checkable type: `_.JitType` does not exist) by adding the beartype decorator. Fix type annotations to makes unit tests green.

Profile:

export `torchvision.models.alexnet(pretrained=True)`

```

with runtime type checking: 21.314 / 10 passes

without runtime type checking: 20.797 / 10 passes

+ 2.48%

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/84091

Approved by: https://github.com/BowenBao, https://github.com/thiagocrepaldi

Enable runtime type checking for all torch.onnx public apis, symbolic functions and most helpers (minus two that does not have a checkable type: `_.JitType` does not exist) by adding the beartype decorator. Fix type annotations to makes unit tests green.

Profile:

export `torchvision.models.alexnet(pretrained=True)`

```

with runtime type checking: 21.314 / 10 passes

without runtime type checking: 20.797 / 10 passes

+ 2.48%

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/84091

Approved by: https://github.com/BowenBao

Cleaning up onnx module imports to prepare for updating `__init__`.

- Simplify importing the `_C` and `_C._onnx` name spaces

- Remove alias of the symbolic_helper module in imports

- Remove any module level function imports. Import modules instead

- Alias `symbilic_opsetx` as `opsetx`

- Fix some docstrings

Requires:

- https://github.com/pytorch/pytorch/pull/77448

Pull Request resolved: https://github.com/pytorch/pytorch/pull/77423

Approved by: https://github.com/BowenBao

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/73284

Some important ops won't support optional type until opset 16,

so we can't fully test things end-to-end, but I believe this should

be all that's needed. Once ONNX Runtime supports opset 16,

we can do more testing and fix any remaining bugs.

Test Plan: Imported from OSS

Reviewed By: albanD

Differential Revision: D34625646

Pulled By: malfet

fbshipit-source-id: 537fcbc1e9d87686cc61f5bd66a997e99cec287b

Co-authored-by: BowenBao <bowbao@microsoft.com>

Co-authored-by: neginraoof <neginmr@utexas.edu>

Co-authored-by: Nikita Shulga <nshulga@fb.com>

(cherry picked from commit 822e79f31ae54d73407f34f166b654f4ba115ea5)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/67805

Also fix Reduce ops on binary_cross_entropy_with_logits

The graph says the output is a scalar but with `keepdims=1`

(the default), the output should be a tensor of rank 1. We set keep

`keepdims=0` to make it clear that we want a scalar output.

This previously went unnoticed because ONNX Runtime does not strictly

enforce shape inference mismatches if the model is not using the latest

opset version.

Test Plan: Imported from OSS

Reviewed By: msaroufim

Differential Revision: D32181304

Pulled By: malfet

fbshipit-source-id: 1462d8a313daae782013097ebf6341a4d1632e2c

Co-authored-by: Bowen Bao <bowbao@microsoft.com>