Refactor the TorchScript-based exporter logic into a single (private) location for better code management. The original public module and method APIs are preserved.

- Updated module paths in `torch/csrc/autograd/python_function.cpp` accordingly

- Removed `check_onnx_broadcast` from `torch/autograd/_functions/utils.py` because it is private and unused

@albanD / @soulitzer could you review the changes in `torch/csrc/autograd/python_function.cpp` and `torch/autograd/_functions/utils.py`? Thanks!

## BC Breaking

- **Deprecated members in `torch.onnx.verification` are removed**

Differential Revision: [D81236421](https://our.internmc.facebook.com/intern/diff/D81236421)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/161323

Approved by: https://github.com/titaiwangms, https://github.com/angelayi

This word appears often in class descriptions and is not consistently spelled. Update comments and some function names to use the correct spelling consistently. Facilitates searching the codebase.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/155944

Approved by: https://github.com/Skylion007

beartype has served us well in identifying type errors and ensuring we call internal functions with the correct arguments (thanks!). However, the value of having beartype is diminished because of the following:

1. When beartype improved its Dict[] type checking, it discovered typing mistakes in some functions that were previously uncaught. This caused the exporter to fail with newer versions of beartype where it used to succeed. Since we cannot fix PyTorch and release a new version just because of this, it creates confusion for users who have beartype in their environment when they use torch.onnx.

2. beartype adds an additional call line in the traceback, which makes the already thick dynamo stack even larger, affecting readability when users diagnose errors with the traceback.

3. Since the typing annotations need to be evaluated, we cannot use new syntax like `|` because we need to maintain compatibility with Python 3.8. We don't want to wait for PyTorch to take py310 as the lowest supported Python version before using the new typing syntax.
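The third point can be illustrated with a minimal sketch. It assumes that `typing.get_type_hints` is a fair stand-in for what a runtime type checker does when it evaluates annotations; the function `scale` is hypothetical and is not part of `torch.onnx`.

```python
import sys
import typing


# Hypothetical function. The annotations are written as strings, so merely
# defining the function never evaluates `int | None`.
def scale(x: "int | None" = None) -> "int | None":
    return None if x is None else 2 * x


# The annotation is stored as plain text until something evaluates it.
stored = scale.__annotations__["x"]

# A runtime checker has to evaluate the annotation. typing.get_type_hints is
# used here as a stand-in for that step (an assumption, not beartype's code).
try:
    typing.get_type_hints(scale)
    evaluated = True
except TypeError:
    # Before Python 3.10, `int | None` is not a valid runtime expression.
    evaluated = False

print(stored, evaluated, sys.version_info[:2])
```

Without a runtime checker the string annotation is harmless on every supported Python version; evaluation is what ties the syntax to the interpreter version.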

Pull Request resolved: https://github.com/pytorch/pytorch/pull/130484

Approved by: https://github.com/titaiwangms

Summary

- The 'dynamo_export' diagnostics leverage the PT2 artifact logger to handle the verbosity level of logs that are recorded in each SARIF log diagnostic. Terminal logging, which complements the SARIF log, is disabled by default. It can be activated by setting the environment variable `TORCH_LOGS="onnx_diagnostics"`. When the environment variable is set, it also fixes the logging level to `logging.DEBUG`, overriding the verbosity level specified in the diagnostic options. See `torch/_logging/__init__.py` for more on PT2 logging.

- Replace 'with_additional_message' with 'Logger.log'-like APIs.

- Introduce 'LazyString', adopted from 'torch._dynamo.utils', to skip evaluation if the message will not be logged into the diagnostic.

- Introduce 'log_source_exception' for easier exception logging.

- Introduce 'log_section' for easier markdown title logging.

- Updated all existing code to use new api.

- Removed 'arg_format_too_verbose' diagnostic.

- Rename legacy diagnostic classes for the TorchScript ONNX exporter to avoid confusion.
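The lazy-evaluation idea behind 'LazyString' can be sketched as follows. This is an illustrative reimplementation built on the standard `logging` module, not the code from 'torch._dynamo.utils'; the `ListHandler` class, the `expensive_dump` function, and the logger name are assumptions made for the example.

```python
import logging


class LazyString:
    """Defer building a message until something calls str() on it."""

    def __init__(self, func, *args, **kwargs):
        self._func = func
        self._args = args
        self._kwargs = kwargs

    def __str__(self):
        return self._func(*self._args, **self._kwargs)


class ListHandler(logging.Handler):
    """Hypothetical handler that collects formatted messages in a list."""

    def __init__(self):
        super().__init__()
        self.messages = []

    def emit(self, record):
        self.messages.append(record.getMessage())


calls = []


def expensive_dump():
    # Stands in for costly formatting, such as printing a whole graph.
    calls.append(1)
    return "big dump"


logger = logging.getLogger("lazy_string_sketch")
logger.propagate = False
handler = ListHandler()
logger.addHandler(handler)

logger.setLevel(logging.WARNING)
logger.debug("%s", LazyString(expensive_dump))  # filtered out, never evaluated
calls_when_filtered = list(calls)

logger.setLevel(logging.DEBUG)
logger.debug("%s", LazyString(expensive_dump))  # evaluated when formatted
```

The message is only built when a handler formats the record, so messages below the active verbosity level cost almost nothing.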

Follow ups

- The 'dynamo_export' diagnostic no longer captures Python stack information at the point of diagnostic creation. This will be added back in follow-up PRs for debug-level logging.

- There is a type mismatch due to subclassing 'Diagnostic' and 'DiagnosticContext' for 'dynamo_export' to integrate with PT2 logging. A follow-up PR will attempt to fix it.

- More docstrings with examples.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/106592

Approved by: https://github.com/titaiwangms

Summary

* Introduce `DiagnosticContext` to `torch.onnx.dynamo_export`.

* Remove `DiagnosticEngine` in preparation for updating 'diagnostics' in `dynamo_export` to drop dependencies on the global diagnostic context. There are no plans to update `torch.onnx.export` diagnostics.

Next steps

* Separate `torch.onnx.export` diagnostics and `torch.onnx.dynamo_export` diagnostics.

* Drop dependencies on global diagnostic context. https://github.com/pytorch/pytorch/pull/100219

* Replace 'print's with 'logger.log'.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/99668

Approved by: https://github.com/justinchuby, https://github.com/abock

Fixes #97728

Fixes #98622

Fixes https://github.com/microsoft/onnx-script/issues/393

Provide op_level_debug in the exporter, which creates randomized torch.Tensor inputs based on the real shapes from FakeTensorProp and feeds them to both the torch ops and the ONNX symbolic functions. The PR leverages the Transformer class to create a new fx.Graph that shares the same Module with the original one to save memory.

The test differs from [op_correctness_test.py](https://github.com/microsoft/onnx-script/blob/main/onnxscript/tests/function_libs/torch_aten/ops_correctness_test.py) in that op_level_debug generates real tensors based on the fake tensors in the model.

Limitations:

1. Some trace_only functions are not supported due to the lack of param_schema, which leads to args/kwargs being wrongly split and to ndarray wrapping. (WARNINGS in SARIF)

2. Ops with dim/indices (INT64) inputs are not supported because they need information (shape) from other input args. (WARNINGS in SARIF)

3. sym_size and built-in ops are not supported.

4. op_level_debug only labels results in SARIF. It doesn't stop the exporter.

5. Introduce ONNX owning FakeTensorProp supports int/float/bool

6. parametrized op_level_debug and dynamic_shapes into FX tests

Pull Request resolved: https://github.com/pytorch/pytorch/pull/97494

Approved by: https://github.com/justinchuby, https://github.com/BowenBao

The `setType` API is not respected in the current exporter because the graph-level shape type inference simply overrides every non-ONNX op shape we had from node-level shape type inference. To address this issue, this PR (1) makes custom ops with `setType` **reliable** in ConstantValueMap to secure their shape/type information in the pass `_C._jit_pass_onnx`, and (2) recognizes an invalid op as reliable if it has shape/type in the graph-level pass `_C._jit_pass_onnx_graph_shape_type_inference`.

1. In #62856, the refactor in onnx.cpp caused a regression on custom ops, as that was the step where we should update custom op shape/type information into ConstantValueMap for the remaining ops.

2. Add another condition besides IsValidONNXNode for custom op setType in shape_type_inference.cpp. If all the node outputs have shapes (not all dynamic), we treat the type as custom-set.

3. ~However, this PR won't solve the [issue](https://github.com/pytorch/pytorch/issues/87738#issuecomment-1292831219) that in the node-level shape type inference, the exporter raises the warning about the unknown custom op, since we process its symbolic_fn after this warning, but it would have shape/type if setType is used correctly. And that will be left for another issue to solve. #84661~ Add `no_type_warning` in UpdateReliable() so that it only warns when a non-ONNX node with no given type appears.

Fixes #81693

Fixes #87738

NOTE: not confident that this doesn't break anything. Please share your thoughts if you have a robust test in mind.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/88622

Approved by: https://github.com/BowenBao

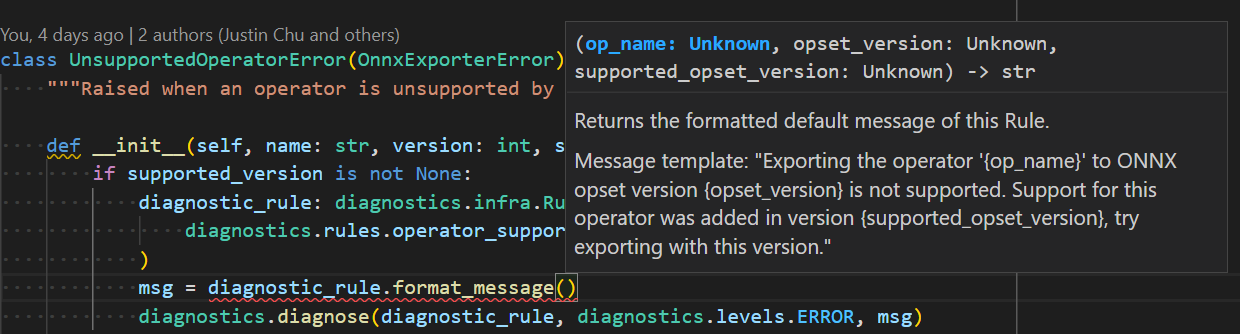

* Reflect required arguments in the method signature for each diagnostic rule. The previous design accepted an arbitrarily sized tuple, which was hard to use and error-prone.

* Removed `DiagnosticTool` to keep things compact.

* Removed specifying supported rule set for tool(context) and checking if rule of reported diagnostic falls inside the set, to keep things compact.

* Initial overview markdown file.

* Change the `full_description` definition. The `text` field should no longer be empty, and its markdown should be stored in the `markdown` field.

* Change `message_default_template` to allow only named fields (excluding numeric fields). `field_name` provides clarity on what argument is expected.

* Added `diagnose` api to `torch.onnx._internal.diagnostics`.
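The named-fields-only rule for `message_default_template` can be sketched with the standard library. The function name `validate_named_fields` and the sample templates are hypothetical; this is one possible check under those assumptions, not the validation used in `torch.onnx._internal.diagnostics`.

```python
import re
import string


def validate_named_fields(template):
    """Return the field names used in `template`; reject positional fields."""
    names = []
    for _literal, field_name, _spec, _conv in string.Formatter().parse(template):
        if field_name is None:
            continue  # trailing literal text without a replacement field
        # Drop attribute/index access such as "value.dtype" or "shape[0]".
        base = re.split(r"[.\[]", field_name, maxsplit=1)[0]
        if not base.isidentifier():
            # Covers both "{}" (empty name) and "{0}" (numeric name).
            raise ValueError(
                "template field %r is not a named field" % field_name
            )
        names.append(base)
    return names


fields = validate_named_fields("Op {op_name} failed at opset {opset}.")
print(fields)
```

Named fields document which argument each placeholder expects, which is the clarity benefit the bullet above describes.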

Pull Request resolved: https://github.com/pytorch/pytorch/pull/87830

Approved by: https://github.com/abock

Update `register_custom_op_symbolic`'s behavior to _only register the symbolic function at a single version_. This is more aligned with the semantics of the API signature.

As a result of this change, opset 7 and opset 8 implementations are now seen as fallbacks when the opset_version >= 9. Previously, any ops internally registered to opset < 9 were not discoverable by an export target version >= 9. Updated the test to reflect this change.

The implication of this change is that users will need to register a symbolic function to the exact version when they want to override an existing symbolic. They are not impacted if (1) an implementation does not exist for the op, or (2) they are already registering to the exact version for export.
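A simplified model of single-version registration with fallback is sketched below. The `VersionedRegistry` class, its lookup rule (the highest registered version not exceeding the target wins), and the op names are assumptions made for illustration; the real registry in `torch.onnx` may resolve overrides differently.

```python
from typing import Callable, Dict, Optional


class VersionedRegistry:
    """Hypothetical registry: each function is stored at exactly one opset."""

    def __init__(self) -> None:
        self._functions: Dict[str, Dict[int, Callable]] = {}

    def register(self, name: str, opset: int, func: Callable) -> None:
        # Register at a single version only; no copying to other versions.
        self._functions.setdefault(name, {})[opset] = func

    def lookup(self, name: str, target_opset: int) -> Optional[Callable]:
        # Assumed rule: fall back to the nearest version <= target_opset.
        versions = self._functions.get(name, {})
        candidates = [v for v in versions if v <= target_opset]
        return versions[max(candidates)] if candidates else None


def builtin_add(): return "builtin"
def custom_add(): return "custom"
def custom_new_op(): return "new"


registry = VersionedRegistry()
registry.register("aten::add", 9, builtin_add)

# Registering the override at a low version is shadowed by the opset 9 entry.
registry.register("aten::add", 1, custom_add)
shadowed = registry.lookup("aten::add", 13)

# Registering at the exact export version takes effect.
registry.register("aten::add", 13, custom_add)
overridden = registry.lookup("aten::add", 13)

# An op with no existing implementation is found through the fallback.
registry.register("custom::new_op", 1, custom_new_op)
found = registry.lookup("custom::new_op", 13)
```

Under this model, users who register ops that have no built-in implementation are unaffected, while overrides of existing symbolics need the exact export version.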

Pull Request resolved: https://github.com/pytorch/pytorch/pull/85636

Approved by: https://github.com/BowenBao

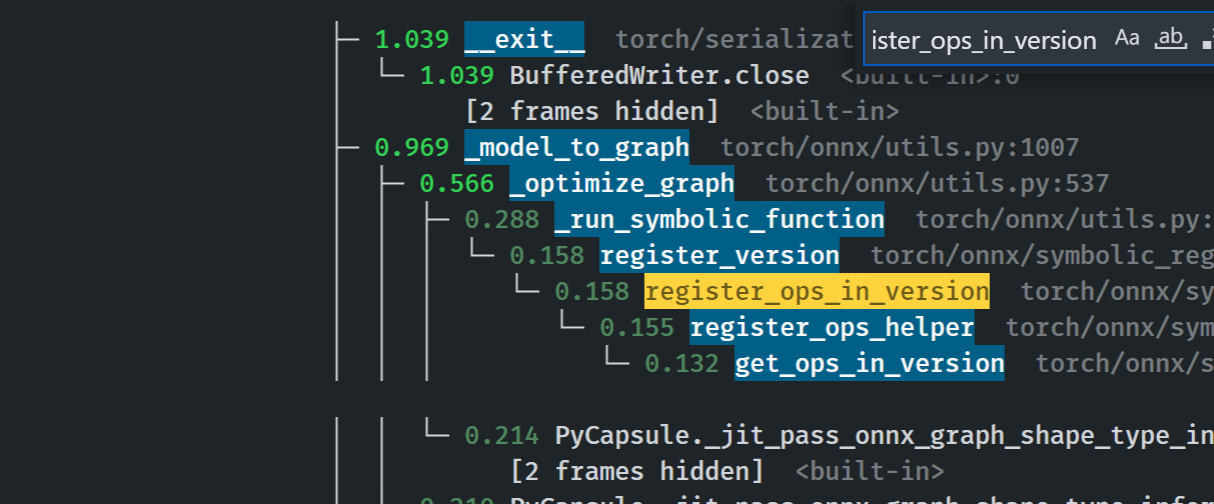

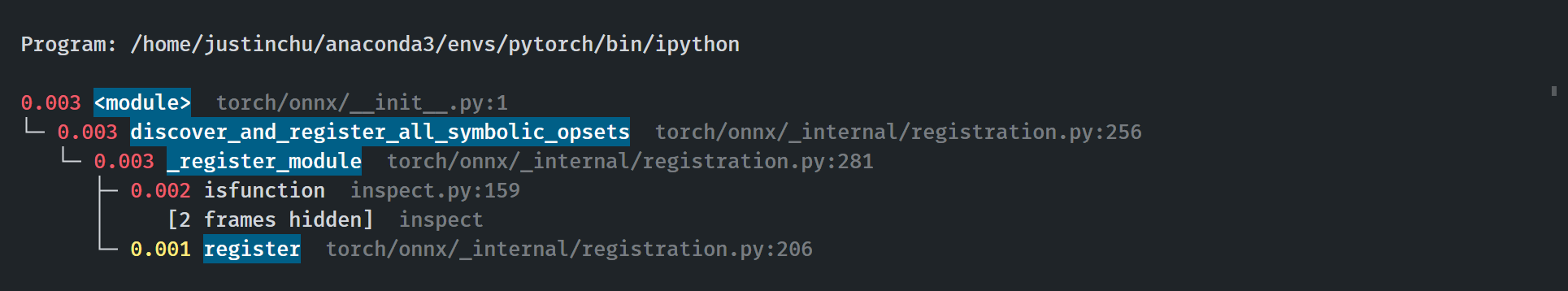

## Summary

The change brings the new registry for symbolic functions in ONNX. The `SymbolicRegistry` class in `torch.onnx._internal.registration` replaces the dictionary and various functions defined in `torch.onnx.symbolic_registry`.

The new registry

- Has faster lookup by storing only functions in the opset version they are defined in

- Is easier to manage and interact with due to its class design

- Builds the foundation for the more flexible registration process detailed in #83787

Implementation changes

- **Breaking**: Remove `torch.onnx.symbolic_registry`

- `register_custom_op_symbolic` and `unregister_custom_op_symbolic` in utils maintain their api for compatibility

- Update _onnx_supported_ops.py for doc generation to include quantized ops.

- Update code to register python ops in `torch/csrc/jit/passes/onnx.cpp`

## Profiling results

-0.1 seconds in execution time. -34% time spent in `_run_symbolic_function`. Tested on the alexnet example in public doc.

### After

```
└─ 1.641 export  <@beartype(torch.onnx.utils.export) at 0x7f19be17f790>:1
   └─ 1.641 export  torch/onnx/utils.py:185
      └─ 1.640 _export  torch/onnx/utils.py:1331
         ├─ 0.889 _model_to_graph  torch/onnx/utils.py:1005
         │  ├─ 0.478 _optimize_graph  torch/onnx/utils.py:535
         │  │  ├─ 0.214 PyCapsule._jit_pass_onnx_graph_shape_type_inference  <built-in>:0
         │  │  │     [2 frames hidden]  <built-in>
         │  │  ├─ 0.190 _run_symbolic_function  torch/onnx/utils.py:1670
         │  │  │  └─ 0.145 Constant  torch/onnx/symbolic_opset9.py:5782
         │  │  │     └─ 0.139 _graph_op  torch/onnx/_patch_torch.py:18
         │  │  │        └─ 0.134 PyCapsule._jit_pass_onnx_node_shape_type_inference  <built-in>:0
         │  │  │              [2 frames hidden]  <built-in>
         │  │  └─ 0.033 [self]
```

### Before

### Start up time

The startup process takes 0.03 seconds. Calls to `inspect` will be eliminated when we switch to using decorators for registration in #84448

Pull Request resolved: https://github.com/pytorch/pytorch/pull/84382

Approved by: https://github.com/AllenTiTaiWang, https://github.com/BowenBao

This PR adds an internal wrapper on the [beartype](https://github.com/beartype/beartype) library to perform runtime type checking in `torch.onnx`. It uses beartype when it is found in the environment and is reduced to a no-op when beartype is not found.

Setting the env var `TORCH_ONNX_EXPERIMENTAL_RUNTIME_TYPE_CHECK=ERRORS` will turn on the feature. Setting `TORCH_ONNX_EXPERIMENTAL_RUNTIME_TYPE_CHECK=DISABLED` will disable all checks. When the variable is not set and `beartype` is installed, a warning message is emitted.
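A minimal sketch of such an optional wrapper follows. It is not the code in `torch/onnx/_internal/_beartype.py`: the function name `runtime_type_check`, the `mode` parameter, and the `"WARNINGS"` label for the unset case are assumptions. Only `beartype.beartype` and `beartype.roar.BeartypeCallHintParamViolation` (the exception shown in the tracebacks of this PR) are taken from the library.

```python
import functools
import os
import warnings

try:
    from beartype import beartype as _beartype
    from beartype.roar import BeartypeCallHintParamViolation as _ParamViolation
except ImportError:  # beartype is optional; fall back to a no-op
    _beartype = None
    _ParamViolation = None

ENV_VAR = "TORCH_ONNX_EXPERIMENTAL_RUNTIME_TYPE_CHECK"


def runtime_type_check(func, mode=None):
    """Wrap `func` with beartype when available (hypothetical helper)."""
    if mode is None:
        # "WARNINGS" is an assumed label for the unset case.
        mode = os.environ.get(ENV_VAR, "WARNINGS")
    if _beartype is None or mode == "DISABLED":
        return func  # no-op: hand back the original function
    checked = _beartype(func)
    if mode == "ERRORS":
        return checked  # violations propagate as exceptions

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        try:
            return checked(*args, **kwargs)
        except _ParamViolation as exc:
            # Parameter violations fire before the body runs, so calling
            # the unchecked function afterwards does not repeat any work.
            warnings.warn(str(exc), stacklevel=2)
            return func(*args, **kwargs)

    return wrapper


def add(a: int, b: int) -> int:
    return a + b


unchecked = runtime_type_check(add, mode="DISABLED")
strict = runtime_type_check(add, mode="ERRORS")
```

Returning the original function in the disabled case keeps the call stack unchanged when the feature is off or beartype is absent.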

Now when users call an API with invalid arguments, e.g.

```python
torch.onnx.export(conv, y, path, export_params=True, training=False)
# training should take TrainingMode, not bool
```

they get

```
Traceback (most recent call last):
  File "bisect_m1_error.py", line 63, in <module>
    main()
  File "bisect_m1_error.py", line 59, in main
    reveal_error()
  File "bisect_m1_error.py", line 32, in reveal_error
    torch.onnx.export(conv, y, cpu_model_path, export_params=True, training=False)
  File "<@beartype(torch.onnx.utils.export) at 0x1281f5a60>", line 136, in export
  File "pytorch/venv/lib/python3.9/site-packages/beartype/_decor/_error/errormain.py", line 301, in raise_pep_call_exception
    raise exception_cls( # type: ignore[misc]
beartype.roar.BeartypeCallHintParamViolation: @beartyped export() parameter training=False violates type hint <class 'torch._C._onnx.TrainingMode'>, as False not instance of <protocol "torch._C._onnx.TrainingMode">.
```

When `TORCH_ONNX_EXPERIMENTAL_RUNTIME_TYPE_CHECK` is not set and `beartype` is installed, a warning message is emitted.

```
>>> torch.onnx.export("foo", "bar", "f")
<stdin>:1: CallHintViolationWarning: Traceback (most recent call last):
  File "/home/justinchu/dev/pytorch/torch/onnx/_internal/_beartype.py", line 54, in _coerce_beartype_exceptions_to_warnings
    return beartyped(*args, **kwargs)
  File "<@beartype(torch.onnx.utils.export) at 0x7f1d4ab35280>", line 39, in export
  File "/home/justinchu/anaconda3/envs/pytorch/lib/python3.9/site-packages/beartype/_decor/_error/errormain.py", line 301, in raise_pep_call_exception
    raise exception_cls( # type: ignore[misc]
beartype.roar.BeartypeCallHintParamViolation: @beartyped export() parameter model='foo' violates type hint typing.Union[torch.nn.modules.module.Module, torch.jit._script.ScriptModule, torch.jit.ScriptFunction], as 'foo' not <protocol "torch.jit.ScriptFunction">, <protocol "torch.nn.modules.module.Module">, or <protocol "torch.jit._script.ScriptModule">.

Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "/home/justinchu/dev/pytorch/torch/onnx/_internal/_beartype.py", line 63, in _coerce_beartype_exceptions_to_warnings
    return func(*args, **kwargs)
  File "/home/justinchu/dev/pytorch/torch/onnx/utils.py", line 482, in export
    _export(
  File "/home/justinchu/dev/pytorch/torch/onnx/utils.py", line 1422, in _export
    with exporter_context(model, training, verbose):
  File "/home/justinchu/anaconda3/envs/pytorch/lib/python3.9/contextlib.py", line 119, in __enter__
    return next(self.gen)
  File "/home/justinchu/dev/pytorch/torch/onnx/utils.py", line 177, in exporter_context
    with select_model_mode_for_export(
  File "/home/justinchu/anaconda3/envs/pytorch/lib/python3.9/contextlib.py", line 119, in __enter__
    return next(self.gen)
  File "/home/justinchu/dev/pytorch/torch/onnx/utils.py", line 95, in select_model_mode_for_export
    originally_training = model.training
AttributeError: 'str' object has no attribute 'training'
```

We see that the error is caught right where the type mismatch happens, an improvement over what would otherwise surface later as `AttributeError: 'str' object has no attribute 'training'`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/83673

Approved by: https://github.com/BowenBao