Package config/template files with torchgen

This PR packages native_functions.yaml, tags.yaml and ATen/templates

with torchgen.

This PR:

- adds a step to setup.py to copy the relevant files over into torchgen

- adds a docstring for torchgen (so `import torchgen; help(torchgen)`

says something)

- adds a helper function in torchgen so you can get the torchgen root

directory (and figure out where the packaged files are)

- changes some scripts to explicitly pass the location of torchgen,

which will be helpful for the first item in the Future section.
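
A minimal sketch of what such a root-directory helper could look like. The names `get_root` and `packaged_file` and the example install location are illustrative assumptions, not necessarily what this PR adds; only the `packaged` directory name comes from the description.

```python
from pathlib import Path


def get_root(module_file: str) -> Path:
    """Return the directory that contains the given module file.

    Hypothetical helper: inside a package one would pass ``__file__``.
    """
    return Path(module_file).resolve().parent


def packaged_file(module_file: str, *parts: str) -> Path:
    """Build a path below ``<root>/packaged`` for a packaged config/template file."""
    return get_root(module_file).joinpath("packaged", *parts)


# Example with a made-up install location.
path = packaged_file(
    "/site-packages/torchgen/__init__.py",
    "ATen", "native", "native_functions.yaml",
)
print(path)
```

Callers that know the root can then locate the packaged YAML and templates without hardcoding `aten/src`.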

Future

======

- torchgen, when invoked from the command line, should use sources

in torchgen/packaged instead of aten/src. I'm unable to do this because

people (aka PyTorch CI) invoke `python -m torchgen.gen` without

installing torchgen.

- the source of truth for all of these files should be in torchgen.

This is a bit annoying to execute on due to potential merge conflicts

and dealing with merge systems

- CI and testing. The way things are set up right now is really fragile;

we should have a CI job for torchgen.

Test Plan

=========

I ran the following locally:

```

python -m torchgen.gen -s torchgen/packaged

```

and verified that it outputted files.

Furthermore, I did a setup.py install and checked that the files are

actually being packaged with torchgen.
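
The packaging check can also be scripted. The sketch below assumes the package was built as a wheel (wheels are zip archives) instead of installed directly; the helper name `archive_members_under` and the demo archive contents are made up for illustration.

```python
import os
import tempfile
import zipfile
from typing import List


def archive_members_under(archive_path: str, prefix: str) -> List[str]:
    """List the members of a zip archive whose path starts with ``prefix``."""
    with zipfile.ZipFile(archive_path) as zf:
        return sorted(name for name in zf.namelist() if name.startswith(prefix))


# Build a tiny stand-in archive so the example is self-contained.
with tempfile.TemporaryDirectory() as tmp:
    demo = os.path.join(tmp, "demo.whl")
    with zipfile.ZipFile(demo, "w") as zf:
        zf.writestr("torchgen/packaged/ATen/native/tags.yaml", "")
        zf.writestr("torchgen/gen.py", "")
    found = archive_members_under(demo, "torchgen/packaged/")

print(found)
```

An empty result for the `torchgen/packaged/` prefix would indicate that the copy step did not run or that the files are missing from the package data.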

Pull Request resolved: https://github.com/pytorch/pytorch/pull/78942

Approved by: https://github.com/ezyang

Fixes #78490

The following command:

```

conda install pytorch torchvision torchaudio -c pytorch-nightly

```

installs libiomp. Hence we don't want to package libiomp with conda installs. However, we still keep it for libtorch and wheels.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/78632

Approved by: https://github.com/malfet

Next stage of breaking up https://github.com/pytorch/pytorch/pull/74710

IR builder class introduced to decouple the explicit usage of `TsNode` in core lazy tensors.

Requires https://github.com/pytorch/pytorch/pull/75324 to be merged in first.

**Background**

- there are ~5 special ops used in lazy core but defined as `: public {Backend}Node` (DeviceData, Expand, Scalar...)

- we currently require all nodes derive from {Backend}Node, so that backends can make this assumption safely

- it is hard to have shared 'IR classes' in core/ because they depend on 'Node'

**Motivation**

1. avoid copy-paste of "special" node classes for each backend

2. in general decouple and remove all dependencies that LTC has on the TS backend

**Summary of changes**

- new 'IRBuilder' interface that knows how to make 5 special ops

- move 'special' node classes to `ts_backend/`

- implement TSIRBuilder that makes the special TS Nodes

- new backend interface API to get the IRBuilder

- update core code to call the builder

CC: @wconstab @JackCaoG @henrytwo

Partially fixes #74628

Pull Request resolved: https://github.com/pytorch/pytorch/pull/75433

Approved by: https://github.com/wconstab

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/74387

Make temporary python bindings for flatbuffer to test ScriptModule save / load.

(Note: this ignores all push blocking failures!)

Test Plan: unittest

Reviewed By: iseeyuan

Differential Revision: D34968080

fbshipit-source-id: d23b16abda6e4b7ecf6b1198ed6e00908a3db903

(cherry picked from commit 5cbbc390c5f54146a1c469106ab4a6286c754325)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/74643

Previously, `torch/csrc/deploy/interpreter/Optional.hpp` wasn't getting included in the wheel distribution created by `USE_DEPLOY=1 python setup.py bdist_wheel`. This PR fixes that.

Test Plan: Imported from OSS

Reviewed By: d4l3k

Differential Revision: D35094459

Pulled By: PaliC

fbshipit-source-id: 50aea946cc5bb72720b993075bd57ccf8377db30

(cherry picked from commit 6ad5d96594f40af3d49d2137c2b3799a2d493b36)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/73991

Automatically generate `datapipe.pyi` via CMake and remove the generated .pyi file from Git. Users should have the .pyi file locally after building for the first time.

I will also be adding an internal equivalent diff for buck.

Test Plan: Imported from OSS

Reviewed By: ejguan

Differential Revision: D34868001

Pulled By: NivekT

fbshipit-source-id: 448c92da659d6b4c5f686407d3723933c266c74f

(cherry picked from commit 306dbc5f469e63bc141dac57ef310e6f0e16d9cd)

Summary:

RFC: https://github.com/pytorch/rfcs/pull/40

This PR (re)introduces python codegen for unboxing wrappers. Given an entry of `native_functions.yaml` the codegen should be able to generate the corresponding C++ code to convert ivalues from the stack to their proper types. To trigger the codegen, run

```

tools/jit/gen_unboxing.py -d cg/torch/share/ATen

```

Merged changes on CI test. In https://github.com/pytorch/pytorch/issues/71782 I added an e2e test for static dispatch + codegen unboxing. The test exports a mobile model of mobilenetv2, then loads and runs it on a new binary for lite interpreter: `test/mobile/custom_build/lite_predictor.cpp`.

## Lite predictor build specifics

1. Codegen: `gen.py` generates `RegisterCPU.cpp` and `RegisterSchema.cpp`. With this PR, once `static_dispatch` mode is enabled, `gen.py` will not generate `TORCH_LIBRARY` API calls in those cpp files, and hence avoids interaction with the dispatcher. Once `USE_LIGHTWEIGHT_DISPATCH` is turned on, `cmake/Codegen.cmake` calls `gen_unboxing.py`, which generates `UnboxingFunctions.h`, `UnboxingFunctions_[0-4].cpp` and `RegisterCodegenUnboxedKernels_[0-4].cpp`.

2. Build: `USE_LIGHTWEIGHT_DISPATCH` adds generated sources into `all_cpu_cpp` in `aten/src/ATen/CMakeLists.txt`. All other files remain unchanged. In reality the `Operators_[0-4].cpp` files are not necessary, but we can rely on the linker to strip them out.

## Current CI job test coverage update

Created a new CI job `linux-xenial-py3-clang5-mobile-lightweight-dispatch-build` that enables the following build options:

* `USE_LIGHTWEIGHT_DISPATCH=1`

* `BUILD_LITE_INTERPRETER=1`

* `STATIC_DISPATCH_BACKEND=CPU`

This job triggers `test/mobile/lightweight_dispatch/build.sh` and builds `libtorch`. Then the script runs C++ tests written in `test_lightweight_dispatch.cpp` and `test_codegen_unboxing.cpp`. Recent commits added tests to cover as many C++ argument types as possible: in `build.sh` we install the PyTorch Python API so that we can export test models in `tests_setup.py`. Then we run the C++ test binary to run these models on the lightweight-dispatch-enabled runtime.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/69881

Reviewed By: iseeyuan

Differential Revision: D33692299

Pulled By: larryliu0820

fbshipit-source-id: 211e59f2364100703359b4a3d2ab48ca5155a023

(cherry picked from commit 58e1c9a25e3d1b5b656282cf3ac2f548d98d530b)

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/69255

One thing that I've found as I optimize the profiler is that there's a lot of intermingled code, where the kineto profiler relies on the legacy (autograd) profiler for generic operations. This made optimization hard because I had to manage too many complex dependencies. (Exacerbated by the USE_KINETO #ifdef's sprinkled around.) This PR is the first of several to restructure the profiler(s) so the later optimizations go in easier.

Test Plan: Unit tests

Reviewed By: aaronenyeshi

Differential Revision: D32671972

fbshipit-source-id: efa83b40dde4216f368f2a5fa707360031a85707

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/68247

This splits `Functions.h`, `Operators.h`, `NativeFunctions.h` and

`NativeMetaFunctions.h` into separate headers per operator base name.

With `at::sum` as an example, we can include:

```cpp

#include <ATen/ops/sum.h> // Like Functions.h

#include <ATen/ops/sum_ops.h> // Like Operators.h

#include <ATen/ops/sum_native.h> // Like NativeFunctions.h

#include <ATen/ops/sum_meta.h> // Like NativeMetaFunctions.h

```

The umbrella headers are still being generated, but all they do is

include from the `ATen/ops` folder.

Further, `TensorBody.h` now only includes the operators that have

method variants, which means files that only include `Tensor.h` don't

need to be rebuilt when you modify function-only operators. Currently

there are about 680 operators that don't have method variants, so this

is potentially a significant win for incremental builds.

Test Plan: Imported from OSS

Reviewed By: mrshenli

Differential Revision: D32596272

Pulled By: albanD

fbshipit-source-id: 447671b2b6adc1364f66ed9717c896dae25fa272

Summary:

Remove all hardcoded AMD gfx targets

PyTorch build and Magma build will use rocm_agent_enumerator as

backup if PYTORCH_ROCM_ARCH env var is not defined

PyTorch extensions will use same gfx targets as the PyTorch build,

unless PYTORCH_ROCM_ARCH env var is defined

torch.cuda.get_arch_list() now works for ROCm builds

PyTorch CI dockers will continue to be built for gfx900 and gfx906 for now.

PYTORCH_ROCM_ARCH env var can be a space- or semicolon-separated list of gfx archs, e.g. "gfx900 gfx906" or "gfx900;gfx906"
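
A small sketch of how a value in either accepted form could be split into a list. The function name `parse_arch_list` is hypothetical, and the actual build scripts may parse the variable differently.

```python
import re
from typing import List


def parse_arch_list(value: str) -> List[str]:
    """Split a space- or semicolon-separated list of gfx archs, dropping empty items."""
    return [arch for arch in re.split(r"[ ;]+", value.strip()) if arch]


print(parse_arch_list("gfx900 gfx906"))
print(parse_arch_list("gfx900;gfx906"))
```

Both calls yield the same two-element list, so downstream code does not need to care which separator the user chose.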

cc jeffdaily sunway513 jithunnair-amd ROCmSupport KyleCZH

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61706

Reviewed By: seemethere

Differential Revision: D32735862

Pulled By: malfet

fbshipit-source-id: 3170e445e738e3ce373203e1e4ae99c84e645d7d

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/69251

This adds some actual documentation for deploy, which is probably useful

since we told everyone it was experimentally available so they will

probably be looking at what the heck it is.

It also wires up various components of the OSS build to actually work

when used from an external project.

Differential Revision: D32783312

Test Plan: Imported from OSS

Reviewed By: wconstab

Pulled By: suo

fbshipit-source-id: c5c0a1e3f80fa273b5a70c13ba81733cb8d2c8f8

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/68817

Looks like these files are getting used by downstream xla so we need to

include them in our package_data

Signed-off-by: Eli Uriegas <eliuriegas@fb.com>

Test Plan: Imported from OSS

Reviewed By: mruberry

Differential Revision: D32622241

Pulled By: seemethere

fbshipit-source-id: 7b64e5d4261999ee58bc61185bada6c60c2bb5cc

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/68226

**Note that this PR is unusually big due to the urgency of the changes. Please reach out to me in case you wish to have a "pair" review.**

This PR introduces a major refactoring of the socket implementation of the C10d library. A big portion of the logic is now contained in the `Socket` class and a follow-up PR will further consolidate the remaining parts. As of today the changes in this PR offer:

- significantly better error handling and much more verbose logging (see the example output below)

- explicit support for IPv6 and dual-stack sockets

- correct handling of signal interrupts

- better Windows support

A follow-up PR will consolidate `send`/`recv` logic into `Socket` and fully migrate to non-blocking sockets.

## Example Output

```

[I logging.h:21] The client socket will attempt to connect to an IPv6 address on (127.0.0.1, 29501).

[I logging.h:21] The client socket is attempting to connect to [localhost]:29501.

[W logging.h:28] The server socket on [localhost]:29501 is not yet listening (Error: 111 - Connection refused), retrying...

[I logging.h:21] The server socket will attempt to listen on an IPv6 address.

[I logging.h:21] The server socket is attempting to listen on [::]:29501.

[I logging.h:21] The server socket has started to listen on [::]:29501.

[I logging.h:21] The client socket will attempt to connect to an IPv6 address on (127.0.0.1, 29501).

[I logging.h:21] The client socket is attempting to connect to [localhost]:29501.

[I logging.h:21] The client socket has connected to [localhost]:29501 on [localhost]:42650.

[I logging.h:21] The server socket on [::]:29501 has accepted a connection from [localhost]:42650.

[I logging.h:21] The client socket has connected to [localhost]:29501 on [localhost]:42722.

[I logging.h:21] The server socket on [::]:29501 has accepted a connection from [localhost]:42722.

[I logging.h:21] The client socket will attempt to connect to an IPv6 address on (127.0.0.1, 29501).

[I logging.h:21] The client socket is attempting to connect to [localhost]:29501.

[I logging.h:21] The client socket has connected to [localhost]:29501 on [localhost]:42724.

[I logging.h:21] The server socket on [::]:29501 has accepted a connection from [localhost]:42724.

[I logging.h:21] The client socket will attempt to connect to an IPv6 address on (127.0.0.1, 29501).

[I logging.h:21] The client socket is attempting to connect to [localhost]:29501.

[I logging.h:21] The client socket has connected to [localhost]:29501 on [localhost]:42726.

[I logging.h:21] The server socket on [::]:29501 has accepted a connection from [localhost]:42726.

```

ghstack-source-id: 143501987

Test Plan: Run existing unit and integration tests on devserver, Fedora, Ubuntu, macOS Big Sur, Windows 10.

Reviewed By: Babar, wilson100hong, mrshenli

Differential Revision: D32372333

fbshipit-source-id: 2204ffa28ed0d3683a9cb3ebe1ea8d92a831325a

Summary:

Caffe2 has been deprecated for a while, but is still included in every PyTorch build.

We should stop building it by default, although CI should still validate that caffe2 code is buildable.

Build even fewer dependencies when compiling mobile builds without Caffe2

Introduce `TEST_CAFFE2` in torch.common.utils

Skip `TestQuantizedEmbeddingOps` and `TestJit.test_old_models_bc` if code is compiled without Caffe2

Should be landed after https://github.com/pytorch/builder/pull/864
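
The skip pattern described above could look roughly like the following. How `TEST_CAFFE2` is actually computed is not stated here, so detecting the `caffe2` module with `importlib` is an assumption, and the test class is a stand-in.

```python
import importlib.util
import unittest

# Assumption: Caffe2 availability is detected by whether the module can be found.
TEST_CAFFE2 = importlib.util.find_spec("caffe2") is not None


class ExampleCaffe2Test(unittest.TestCase):
    @unittest.skipIf(not TEST_CAFFE2, "PyTorch was compiled without Caffe2")
    def test_needs_caffe2(self):
        import caffe2  # noqa: F401  (only reached when Caffe2 is available)


suite = unittest.defaultTestLoader.loadTestsFromTestCase(ExampleCaffe2Test)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

On a build without Caffe2 the test is reported as skipped instead of failing, which keeps the default test run green.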

Pull Request resolved: https://github.com/pytorch/pytorch/pull/66658

Reviewed By: driazati, seemethere, janeyx99

Differential Revision: D31669156

Pulled By: malfet

fbshipit-source-id: 1cc45e2d402daf913a4685eb9f841cc3863e458d

Summary:

This PR introduces a new `torchrun` entrypoint that simply "points" to `python -m torch.distributed.run`. It is shorter and less error-prone to type and gives a nicer syntax than a rather cryptic `python -m ...` command line. Along with the new entrypoint the documentation is also updated, and places where `torch.distributed.run` is mentioned are replaced with `torchrun`.
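
For readers unfamiliar with entrypoints, this is roughly what a console-script declaration for setuptools looks like. The callable name `main` is an assumption for illustration, and `split_entry_point` is a hypothetical helper that only shows how the spec string is structured.

```python
# Fragment of the keyword arguments one would pass to setuptools.setup().
entry_points = {
    "console_scripts": [
        # Format: "<command> = <module>:<callable>" (callable name assumed here).
        "torchrun = torch.distributed.run:main",
    ],
}


def split_entry_point(spec: str):
    """Split a console-script spec into (command, module, callable)."""
    command, target = (part.strip() for part in spec.split("=", 1))
    module, _, func = target.partition(":")
    return command, module, func


print(split_entry_point(entry_points["console_scripts"][0]))
```

At install time the packaging tool generates a `torchrun` executable that imports the module and calls the named function.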

cc pietern mrshenli pritamdamania87 zhaojuanmao satgera rohan-varma gqchen aazzolini osalpekar jiayisuse agolynski SciPioneer H-Huang mrzzd cbalioglu gcramer23

Pull Request resolved: https://github.com/pytorch/pytorch/pull/64049

Reviewed By: cbalioglu

Differential Revision: D30584041

Pulled By: kiukchung

fbshipit-source-id: d99db3b5d12e7bf9676bab70e680d4b88031ae2d

Summary:

Using https://github.com/mreineck/pocketfft

Also delete explicit installation of pocketfft during the build as it will be available via submodule

Limit PocketFFT support to cmake-3.10 or newer, as `set_source_files_properties` does not seem to work as expected with cmake-3.5

Partially addresses https://github.com/pytorch/pytorch/issues/62821

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62841

Reviewed By: seemethere

Differential Revision: D30140441

Pulled By: malfet

fbshipit-source-id: d1a1cf1b43375321f5ec5b3d0b538f58082f7825

Summary:

This PR: (1) enables the use of a system-provided Intel TBB for building PyTorch, (2) removes `tbb::task_scheduler_init` references since it was removed from TBB a while ago, and (3) marks the implementation of `_internal_set_num_threads` with a TODO as it requires a revision that fixes its thread allocation logic.

Tested with `test/run_test`; no new tests are introduced since there are no behavioral changes (removal of `tbb::task_scheduler_init` has no impact on the runtime behavior).

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61934

Reviewed By: malfet

Differential Revision: D29805416

Pulled By: cbalioglu

fbshipit-source-id: 22042b428b57b8fede9dfcc83878d679a19561dd

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61903

### Remaining Tasks

- [ ] Collate results of benchmarks on two Intel Xeon machines (with & without CUDA, to check if CPU throttling causes issues with GPUs) - make graphs, including Roofline model plots (Intel Advisor can't make them with libgomp, though, but with Intel OpenMP).

### Summary

1. This draft PR produces binaries with 3 types of ATen kernels - default, AVX2, AVX512. Using the environment variable `ATEN_AVX512_256=TRUE` also results in 3 types of kernels, but the compiler can use 32 ymm registers for AVX2, instead of the default 16. ATen kernels for `CPU_CAPABILITY_AVX` have been removed.

2. `nansum` is not using AVX512 kernel right now, as it has poorer accuracy for Float16, than does AVX2 or DEFAULT, whose respective accuracies aren't very good either (#59415).

It was more convenient to disable AVX512 dispatch for all dtypes of `nansum` for now.

3. On Windows, ATen Quantized AVX512 kernels are not being used, as quantization tests are flaky. If `--continue-through-failure` is used, then `test_compare_model_outputs_functional_static` fails. But if this test is skipped, `test_compare_model_outputs_conv_static` fails. If both these tests are skipped, then a third one fails. These are hard to debug right now due to not having access to a Windows machine with AVX512 support, so it was more convenient to disable AVX512 dispatch of all ATen Quantized kernels on Windows for now.

4. One test is currently being skipped -

[`test_lstm` in `quantization.bc`](https://github.com/pytorch/pytorch/issues/59098) - It fails only on Cascade Lake machines, irrespective of the `ATEN_CPU_CAPABILITY` used, because FBGEMM uses `AVX512_VNNI` on machines that support it. The value of `reduce_range` should be used as `False` on such machines.

The list of the changes is at https://gist.github.com/imaginary-person/4b4fda660534f0493bf9573d511a878d.

Credits to ezyang for proposing `AVX512_256` - these use AVX2 intrinsics but benefit from 32 registers, instead of the 16 ymm registers that AVX2 uses.

Credits to limo1996 for the initial proposal, and for optimizing `hsub_pd` & `hadd_pd`, which didn't have direct AVX512 equivalents, and are being used in some kernels. He also refactored `vec/functional.h` to remove duplicated code.

Credits to quickwritereader for helping fix 4 failing complex multiplication & division tests.

### Testing

1. `vec_test_all_types` was modified to test basic AVX512 support, as tests already existed for AVX2.

Only one test had to be modified, as it was hardcoded for AVX2.

2. `pytorch_linux_bionic_py3_8_gcc9_coverage_test1` & `pytorch_linux_bionic_py3_8_gcc9_coverage_test2` are now using `linux.2xlarge` instances, as they support AVX512. They were used for testing AVX512 kernels, as AVX512 kernels are being used by default in both of the CI checks. Windows CI checks had already been using machines with AVX512 support.

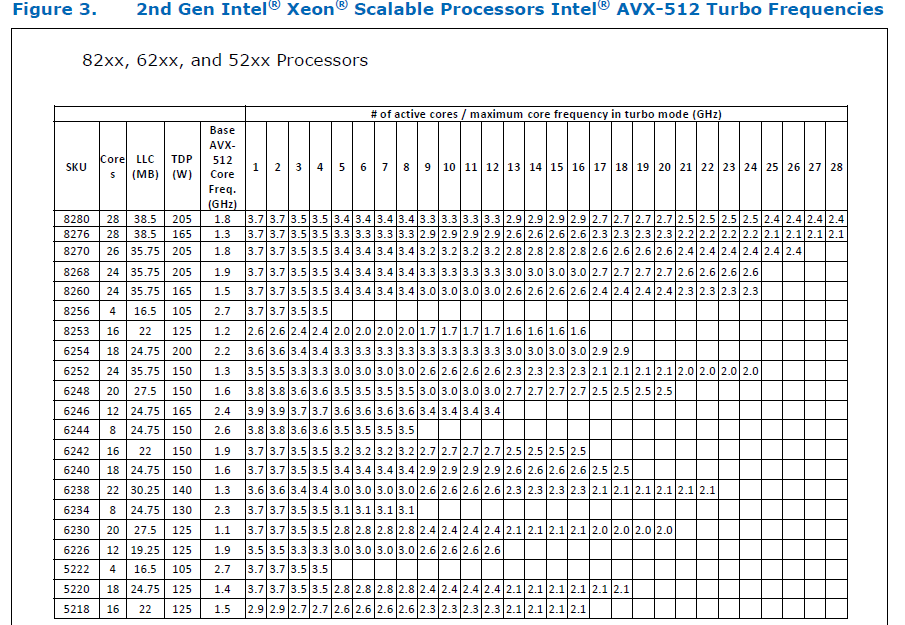

### Would the downclocking caused by AVX512 pose an issue?

I think it's important to note that AVX2 causes downclocking as well, and the additional downclocking caused by AVX512 may not hamper performance on some Skylake machines & beyond, because of the double vector-size. I think that [this post with verifiable references is a must-read](https://community.intel.com/t5/Software-Tuning-Performance/Unexpected-power-vs-cores-profile-for-MKL-kernels-on-modern-Xeon/m-p/1133869/highlight/true#M6450). Also, AVX512 would _probably not_ hurt performance on a high-end machine, [but measurements are recommended](https://lemire.me/blog/2018/09/07/avx-512-when-and-how-to-use-these-new-instructions/). In case it does, `ATEN_AVX512_256=TRUE` can be used for building PyTorch, as AVX2 can then use 32 ymm registers instead of the default 16. [FBGEMM uses `AVX512_256` only on Xeon D processors](https://github.com/pytorch/FBGEMM/pull/209), which are said to have poor AVX512 performance.

This [official data](https://www.intel.com/content/dam/www/public/us/en/documents/specification-updates/xeon-scalable-spec-update.pdf) is for the Intel Skylake family, and the first link helps understand its significance. Cascade Lake & Ice Lake SP Xeon processors are said to be even better when it comes to AVX512 performance.

Here is the corresponding data for [Cascade Lake](https://cdrdv2.intel.com/v1/dl/getContent/338848) -

The corresponding data isn't publicly available for Intel Xeon SP 3rd gen (Ice Lake SP), but [Intel mentioned that the 3rd gen has frequency improvements pertaining to AVX512](https://newsroom.intel.com/wp-content/uploads/sites/11/2021/04/3rd-Gen-Intel-Xeon-Scalable-Platform-Press-Presentation-281884.pdf). Ice Lake SP machines also have 48 KB L1D caches, so that's another reason for AVX512 performance to be better on them.

### Is PyTorch always faster with AVX512?

No, but then PyTorch is not always faster with AVX2 either. Please refer to #60202. The benefit from vectorization is apparent with small tensors that fit in caches or in kernels that are more compute heavy. For instance, AVX512 or AVX2 would yield no benefit for adding two 64 MB tensors, but adding two 1 MB tensors would do well with AVX2, and even more so with AVX512.

It seems that memory-bound computations, such as adding two 64 MB tensors can be slow with vectorization (depending upon the number of threads used), as the effects of downclocking can then be observed.
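
A back-of-the-envelope roofline estimate illustrates why wider vectors do not help once an elementwise add has to stream from memory. The peak and bandwidth figures below are hypothetical, and the traffic model (two loads and one store per element, no cache reuse) is a simplification.

```python
def add_arithmetic_intensity(dtype_bytes: int) -> float:
    """FLOPs per byte for out = a + b: one add per element, two loads and one store."""
    return 1.0 / (3 * dtype_bytes)


def attainable_gflops(peak_gflops: float, bandwidth_gbs: float, intensity: float) -> float:
    """Roofline model: performance is capped by compute or by memory traffic."""
    return min(peak_gflops, bandwidth_gbs * intensity)


# Hypothetical machine numbers, for illustration only.
bandwidth_gbs = 100.0
intensity = add_arithmetic_intensity(4)  # float32
narrow = attainable_gflops(1000.0, bandwidth_gbs, intensity)  # e.g. a narrower vector unit
wide = attainable_gflops(2000.0, bandwidth_gbs, intensity)    # e.g. twice the vector width

print(intensity, narrow, wide)
```

Under these assumptions both configurations hit the same bandwidth ceiling, so doubling the compute peak changes nothing for a tensor that does not fit in cache.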

Original pull request: https://github.com/pytorch/pytorch/pull/56992

Reviewed By: soulitzer

Differential Revision: D29266289

Pulled By: ezyang

fbshipit-source-id: 2d5e8d1c2307252f22423bbc14f136c67c3e6184

Summary:

Since v1.7, oneDNN (MKL-DNN) has supported the use of Compute Library

for the Arm architecture to provide optimised convolution primitives

on AArch64.

This change enables the use of Compute Library in the PyTorch build.

Following the approach used to enable the use of CBLAS in MKLDNN,

it is enabled by setting the env vars USE_MKLDNN and USE_MKLDNN_ACL.

The location of the Compute Library build must be set using `ACL_ROOT_DIR`.

This is an extension of the work in https://github.com/pytorch/pytorch/pull/50400

which added support for the oneDNN/MKL-DNN backend on AArch64.

_Note: this assumes that Compute Library has been built and installed at

ACL_ROOT_DIR. Compute library can be downloaded here:

`https://github.com/ARM-software/ComputeLibrary`_

Pull Request resolved: https://github.com/pytorch/pytorch/pull/55913

Reviewed By: ailzhang

Differential Revision: D28559516

Pulled By: malfet

fbshipit-source-id: 29d24996097d0a54efc9ab754fb3f0bded290005

Summary:

In order to make it more convenient for maintainers to review the ATen AVX512 implementation, the namespace `vec256` is being renamed to `vec` in this PR. Modifying 77 files & creating 2 new files only took a few minutes, as these changes aren't significant, and doing it here means fewer files will have to be reviewed in https://github.com/pytorch/pytorch/issues/56992.

The struct `Vec256` is not being renamed to `Vec`, but `Vectorized` instead, because there are some `using Vec=` statements in the codebase, so renaming it to `Vectorized` was more convenient. However, I can still rename it to `Vec`, if required.

### Changes made in this PR -

Created `aten/src/ATen/cpu/vec` with subdirectory `vec256` (vec512 would be added via https://github.com/pytorch/pytorch/issues/56992).

The changes were made in this manner -

1. First, a script was run to rename `vec256` to `vec` & `Vec256` to `Vectorized` -

```

# Ref: https://stackoverflow.com/a/20721292

cd aten/src

grep -rli 'vec256\/vec256\.h' * | xargs -i@ sed -i 's/vec256\/vec256\.h/vec\/vec\.h/g' @

grep -rli 'vec256\/functional\.h' * | xargs -i@ sed -i 's/vec256\/functional\.h/vec\/functional\.h/g' @

grep -rli 'vec256\/intrinsics\.h' * | xargs -i@ sed -i 's/vec256\/intrinsics\.h/vec\/vec256\/intrinsics\.h/g' @

grep -rli 'namespace vec256' * | xargs -i@ sed -i 's/namespace vec256/namespace vec/g' @

grep -rli 'Vec256' * | xargs -i@ sed -i 's/Vec256/Vectorized/g' @

grep -rli 'vec256\:\:' * | xargs -i@ sed -i 's/vec256\:\:/vec\:\:/g' @

grep -rli 'at\:\:vec256' * | xargs -i@ sed -i 's/at\:\:vec256/at\:\:vec/g' @

cd ATen/cpu

mkdir vec

mv vec256 vec

cd vec/vec256

grep -rli 'cpu\/vec256\/' * | xargs -i@ sed -i 's/cpu\/vec256\//cpu\/vec\/vec256\//g' @

grep -rli 'vec\/vec\.h' * | xargs -i@ sed -i 's/vec\/vec\.h/vec\/vec256\.h/g' @

```

2. `vec256` & `VEC256` were replaced with `vec` & `VEC` respectively in 4 CMake files.

3. In `pytorch_vec/aten/src/ATen/test/`, `vec256_test_all_types.h` & `vec256_test_all_types.cpp` were renamed.

4. `pytorch_vec/aten/src/ATen/cpu/vec/vec.h` & `pytorch_vec/aten/src/ATen/cpu/vec/functional.h` were created.

Both currently have one line each & would have 5 once AVX512 support is added for ATen.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58438

Reviewed By: malfet

Differential Revision: D28509615

Pulled By: ezyang

fbshipit-source-id: 63840df5f23b3b59e203d25816e2977c6a901780

Summary:

This PR is step 0 of adding PyTorch convolution bindings using the cuDNN frontend. The cuDNN frontend is the recommended way of using cuDNN v8 API. It is supposed to have faster release cycles, so that, for example, if people find a specific kernel has a bug, they can report it, and that kernel will be blocked in the cuDNN frontend and frameworks could just update that submodule without the need for waiting for a whole cuDNN release.

The work is not complete, and this PR is only step 0.

**What this PR does:**

- Add cudnn-frontend as a submodule.

- Modify cmake to build that submodule.

- Add bindings for convolution forward in `Conv_v8.cpp`, which is disabled by a macro by default.

- Tested manually by enabling the macro and running `test_nn.py`. All tests pass except those mentioned below.

**What this PR doesn't:**

- Only convolution forward, no backward. The backward will use v7 API.

- No 64bit-indexing support for some configurations. This is a known issue of cuDNN, and will be fixed in a later cuDNN version. PyTorch will not implement any workaround for this issue, but instead, v8 API should be disabled on problematic cuDNN versions.

- No test beyond PyTorch's unit tests.

- Not tested for correctness on real models.

- Not benchmarked for performance.

- Benchmark cache is not thread-safe. (This is marked as `FIXME` in the code, and will be fixed in a follow-up PR)

- cuDNN benchmark is not supported.

- There are failing tests, which will be resolved later:

```

FAILED test/test_nn.py::TestNNDeviceTypeCUDA::test_conv_cudnn_nhwc_cuda_float16 - AssertionError: False is not true : Tensors failed to compare as equal!With rtol=0.001 and atol=1e-05, found 32 element(s) (out of 32) whose difference(s) exceeded the margin of error (in...

FAILED test/test_nn.py::TestNNDeviceTypeCUDA::test_conv_cudnn_nhwc_cuda_float32 - AssertionError: False is not true : Tensors failed to compare as equal!With rtol=1.3e-06 and atol=1e-05, found 32 element(s) (out of 32) whose difference(s) exceeded the margin of error (...

FAILED test/test_nn.py::TestNNDeviceTypeCUDA::test_conv_large_cuda - RuntimeError: CUDNN_BACKEND_OPERATION: cudnnFinalize Failed cudnn_status: 9

FAILED test/test_nn.py::TestNN::test_Conv2d_depthwise_naive_groups_cuda - AssertionError: False is not true : Tensors failed to compare as equal!With rtol=0 and atol=1e-05, found 64 element(s) (out of 64) whose difference(s) exceeded the margin of error (including 0 an...

FAILED test/test_nn.py::TestNN::test_Conv2d_deterministic_cudnn - RuntimeError: not supported yet

FAILED test/test_nn.py::TestNN::test_ConvTranspose2d_groups_cuda_fp32 - RuntimeError: cuDNN error: CUDNN_STATUS_BAD_PARAM

FAILED test/test_nn.py::TestNN::test_ConvTranspose2d_groups_cuda_tf32 - RuntimeError: cuDNN error: CUDNN_STATUS_BAD_PARAM

```

Although this is not a complete implementation of cuDNN v8 API binding, I still want to merge this first. This would allow me to do small and incremental work, for the ease of development and review.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/51390

Reviewed By: malfet

Differential Revision: D28513167

Pulled By: ngimel

fbshipit-source-id: 9cc20c9dec5bbbcb1f94ac9e0f59b10c34f62740

Summary:

This adds some more compiler warning ignores for everything that happens on a standard CPU build (CUDA builds still have a bunch of warnings so we can't turn on `-Werror` everywhere yet).

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56630

Pulled By: driazati

Reviewed By: malfet

Differential Revision: D28005063

fbshipit-source-id: 541ed415eb0470ddf7e08c22c5eb6da9db26e9a0

Summary:

[distutils](https://docs.python.org/3/library/distutils.html) is on its way out and will be deprecated-on-import for Python 3.10+ and removed in Python 3.12 (see [PEP 632](https://www.python.org/dev/peps/pep-0632/)). There's no reason for us to keep it around since all the functionality we want from it can be found in `setuptools` / `sysconfig`. `setuptools` includes a copy of most of `distutils` (which is fine to use according to the PEP), that it uses under the hood, so this PR also uses that in some places.
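
As an illustration of the kind of replacement involved, the standard-library `sysconfig` module can answer questions that were commonly asked of `distutils.sysconfig`. Which calls this PR actually replaces is not listed here, so the two lookups below are only examples.

```python
import sysconfig

# Directory with the Python C headers, a common replacement for
# distutils.sysconfig.get_python_inc().
include_dir = sysconfig.get_paths()["include"]

# Filename suffix for compiled extension modules on this interpreter.
ext_suffix = sysconfig.get_config_var("EXT_SUFFIX")

print(include_dir)
print(ext_suffix)
```

Both values come from the running interpreter's configuration, so no `distutils` import is needed.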

Fixes #56527

Pull Request resolved: https://github.com/pytorch/pytorch/pull/57040

Pulled By: driazati

Reviewed By: nikithamalgifb

Differential Revision: D28051356

fbshipit-source-id: 1ca312219032540e755593e50da0c9e23c62d720

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/56868

See __init__.py for a summary of the tool.

The following sections are present in this initial version

- Model Size. Show the total model size, as well as a breakdown by

stored files, compressed files, and zip overhead. (I expect this

breakdown to be a bit more useful once data.pkl is compressed.)

- Model Structure. This is basically the output of

`show_pickle(data.pkl)`, but as a hierarchical structure.

Some structures cause this view to crash right now, but it can be

improved incrementally.

- Zip Contents. This is basically the output of `zipinfo -l`.

- Code. This is the TorchScript code. It's integrated with a blame

window at the bottom, so you can click "Blame Code", then click a bit

of code to see where it came from (based on the debug_pkl). This

currently doesn't render properly if debug_pkl is missing or

incomplete.

- Extra files (JSON). JSON dumps of each json file under /extra/, up to

a size limit.

- Extra Pickles. For each .pkl file in the model, we safely unpickle it

with `show_pickle`, then render it with `pprint` and include it here

if the size is not too large. We aren't able to install the pprint

hack that the show_pickle CLI uses, so we get one-line rendering for

custom objects, which is not very useful. Built-in types look fine,

though. In particular, bytecode.pkl seems to look fine (and we

hard-code that file to ignore the size limit).

I'm checking in the JS dependencies to avoid a network dependency at

runtime. They were retrieved from the following URLs, then passed

through a JS minifier:

https://unpkg.com/htm@3.0.4/dist/htm.module.js?module

https://unpkg.com/preact@10.5.13/dist/preact.module.js?module

Test Plan:

Manually ran on a few models I had lying around.

Mostly tested in Chrome, but I also poked around in Firefox.

Reviewed By: dhruvbird

Differential Revision: D28020849

Pulled By: dreiss

fbshipit-source-id: 421c30ed7ca55244e9fda1a03b8aab830466536d

Summary:

Fixes https://github.com/pytorch/pytorch/issues/50577

Learning rate schedulers had not yet been implemented for the C++ API.

This pull request introduces the learning rate scheduler base class and the StepLR subclass. Furthermore, it modifies the existing OptimizerOptions such that the learning rate scheduler can modify the learning rate.
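
The step decay rule that a StepLR scheduler follows can be written in closed form. The sketch below is in Python for brevity and is not the C++ code added by this PR; the parameter names mirror the usual StepLR terminology.

```python
def step_lr(base_lr: float, step_size: int, gamma: float, epoch: int) -> float:
    """Learning rate after `epoch` epochs: multiply by `gamma` once every `step_size` epochs."""
    return base_lr * gamma ** (epoch // step_size)


# With base_lr=0.1, step_size=30 and gamma=0.1 the rate drops tenfold at epochs 30, 60, ...
for epoch in (0, 29, 30, 60):
    print(epoch, step_lr(0.1, 30, 0.1, epoch))
```

A scheduler object applies the same rule incrementally by writing the new rate into the optimizer's options on each step, which is why the options need to be modifiable.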

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52268

Reviewed By: mrshenli

Differential Revision: D26818387

Pulled By: glaringlee

fbshipit-source-id: 2b28024a8ea7081947c77374d6d643fdaa7174c1

Summary:

In setup.py add logic to:

- Get list of submodules from .gitmodules file

- Auto-fetch submodules if none of them has been fetched
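
A rough sketch of the two steps above, assuming the standard `.gitmodules` layout with `path = ...` entries. The function names are illustrative and the actual setup.py code may differ.

```python
import os


def get_submodule_folders(repo_root):
    """Return paths of the submodules listed in <repo_root>/.gitmodules."""
    gitmodules = os.path.join(repo_root, ".gitmodules")
    if not os.path.exists(gitmodules):
        return []
    folders = []
    with open(gitmodules) as f:
        for line in f:
            # Each submodule section contains a line such as "path = third_party/foo".
            key, sep, value = line.partition("=")
            if sep and key.strip() == "path":
                folders.append(os.path.join(repo_root, value.strip()))
    return folders


def submodules_missing(folders):
    """True if submodules are listed but none of them has been fetched."""
    def is_empty(folder):
        return not os.path.isdir(folder) or not os.listdir(folder)

    return bool(folders) and all(is_empty(folder) for folder in folders)
```

When `submodules_missing(...)` is true, a caller could run `git submodule update --init --recursive` to fetch them.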

In CI:

- Test this on OSes that cannot run Docker (Windows and Mac)

- Use shallow submodule checkouts whenever possible

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53461

Reviewed By: ezyang

Differential Revision: D26871119

Pulled By: malfet

fbshipit-source-id: 8b23d6a4fcf04446eac11446e0113819476ef6ea

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53489

It appears that D26675801 (1fe6a6507e) broke Glow builds (and probably other installs) by including the python_arg_parser header. That dependency lives in a directory of its own, which was not listed in setup.py.

Test Plan: OSS tests should catch this.

Reviewed By: ngimel

Differential Revision: D26878180

fbshipit-source-id: 70981340226a9681bb9d5420db56abba75e7f0a5

Summary:

Currently, the only build_ext indicator for the distributed backend is `USE_DISTRIBUTED`.

However, one can build with only selected backends. This PR adds the 3 distributed backend options to setup.py.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/53214

Test Plan: Set the 3 options in the environment and ran `python setup.py build_ext` locally.

Reviewed By: janeyx99

Differential Revision: D26818259

Pulled By: walterddr

fbshipit-source-id: 688e8f83383d10ce23ee1f019be33557ce5cce07

Summary:

Do not build PyTorch if `setup.py` is called with the `sdist` option

Regenerate bundled license while sdist package is being built

Refactor `check_submodules` out of `build_deps` and check that submodule projects are present during the source package build stage.

Test that sdist package is configurable during `asan-build` step
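
The "skip the build for sdist" decision can be sketched as a check on the command-line arguments. `should_build_deps` is a hypothetical helper name; the real setup.py may structure this differently.

```python
def should_build_deps(argv):
    """Return False when setup.py is invoked with the sdist command.

    argv is expected to look like sys.argv, with the script name first.
    """
    return "sdist" not in argv[1:]
```

For example, `should_build_deps(["setup.py", "sdist"])` is False, while `should_build_deps(["setup.py", "install"])` is True.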

Fixes https://github.com/pytorch/pytorch/issues/52843

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52908

Reviewed By: walterddr

Differential Revision: D26685176

Pulled By: malfet

fbshipit-source-id: 972a40ae36e194c0b4e0fc31c5e1af1e7a815185

Summary:

Move NumPy initialization from `initModule()` to singleton inside

`torch::utils::is_numpy_available()` function.

This singleton will print a warning that NumPy integration is not

available, rather than failing to import torch altogether.

The warning will be printed only once, and will look something like the

following:

```

UserWarning: Failed to initialize NumPy: No module named 'numpy.core' (Triggered internally at ../torch/csrc/utils/tensor_numpy.cpp:66.)

```

This is helpful if PyTorch was compiled with the wrong NumPy version, or

NumPy is not commonly available on the platform (which is often the case

on AARCH64 or Apple M1).
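
The lazy, warn-once behaviour described above can be illustrated in Python. This is only an analogy to the C++ singleton, not the actual implementation: `is_module_available` is a hypothetical name, and `functools.lru_cache` stands in for the one-time initialization.

```python
import functools
import importlib
import warnings


@functools.lru_cache(maxsize=None)
def is_module_available(name):
    """Try to import `name` once; warn instead of raising on failure.

    The result is cached, so the import attempt (and any warning)
    happens only on the first call for a given name.
    """
    try:
        importlib.import_module(name)
    except ImportError as exc:
        warnings.warn(f"Failed to initialize {name}: {exc}")
        return False
    return True
```

Callers check the returned flag before using the optional integration, so a missing dependency degrades functionality instead of breaking the top-level import.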

Test that PyTorch is usable after numpy is uninstalled at the end of

`_test1` CI config.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/52794

Reviewed By: seemethere

Differential Revision: D26650509

Pulled By: malfet

fbshipit-source-id: a2d98769ef873862c3704be4afda075d76d3ad06

Summary:

Previously, header files from jit/tensorexpr were not copied; this PR enables copying them.

This will allow other OSS projects like Glow to use TE.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/49933

Reviewed By: Krovatkin, mruberry

Differential Revision: D25725927

Pulled By: protonu

fbshipit-source-id: 9d5a0586e9b73111230cacf044cd7e8f5c600ce9

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/49201

This unblocks kineto profiler for 1.8 release.

This PR supersedes https://github.com/pytorch/pytorch/pull/48391

Note: this will somewhat increase the size of Linux server binaries, because

we add libkineto.a and libcupti_static.a:

-rw-r--r-- 1 jenkins jenkins 1107502 Dec 10 21:16 build/lib/libkineto.a

-rw-r--r-- 1 root root 13699658 Nov 13 2019 /usr/local/cuda/lib64/libcupti_static.a

Test Plan:

CI

https://github.com/pytorch/pytorch/pull/48391

Imported from OSS

Reviewed By: ngimel

Differential Revision: D25480770

fbshipit-source-id: 037cd774f5547d9918d6055ef5cc952a54e48e4c