Summary:

The [Gradle Wrapper documentation](https://docs.gradle.org/current/userguide/gradle_wrapper.html) gives the following recommendation:

`The recommended way to execute any Gradle build is with the help of the Gradle Wrapper`

It took me some time to set up Gradle (the right version, etc.) for the `pytorch_android` build.

I think using the Gradle wrapper will make the `pytorch_android` build more seamless.

Gradle wrapper version: 4.10.3

250c71121b/.circleci/scripts/build_android_gradle.sh (L13)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/51067

Reviewed By: izdeby

Differential Revision: D26315718

Pulled By: IvanKobzarev

fbshipit-source-id: f8077d7b28dc0b03ee48bcdac2f5e47d9c1f04d9

Summary:

Reason:

To have a one-step build of a test Android application from the current code state, ready for profiling with simpleperf, systrace, etc., so that performance can be measured inside the application.

## Parameters to control debug symbols stripping

This introduces the parameter `ANDROID_DEBUG_SYMBOLS` in the root `CMakeLists.txt`, which makes it possible not to strip symbols for pytorch (the linker flag `-s` is not added).

The parameter is checked in `scripts/build_android.sh`.

On the Gradle side, stripping happens by default; to prevent it we have to specify

```
android {
  packagingOptions {
    doNotStrip "**/*.so"
  }
}
```

which is now controlled by the new Gradle property `nativeLibsDoNotStrip`.

## Test_App

`android/test_app` - an Android app with a single MainActivity that runs inference in a loop

`android/build_test_app.sh` - a script that builds libtorch with debug symbols for the specified Android ABIs and passes `NDK_DEBUG=1` and `-PnativeLibsDoNotStrip=true` to keep all debug symbols for profiling.

The script assembles all debug flavors:

```
$ find . -type f -name '*apk'
./test_app/app/build/outputs/apk/mobilenetQuant/debug/test_app-mobilenetQuant-debug.apk
./test_app/app/build/outputs/apk/resnet/debug/test_app-resnet-debug.apk
```

## Different build configurations

The module used for inference can be set in `android/test_app/app/build.gradle` as a BuildConfig parameter:

```
productFlavors {
  mobilenetQuant {
    dimension "model"
    applicationIdSuffix ".mobilenetQuant"
    buildConfigField ("String", "MODULE_ASSET_NAME", buildConfigProps('MODULE_ASSET_NAME_MOBILENET_QUANT'))
    addManifestPlaceholders([APP_NAME: "PyMobileNetQuant"])
    buildConfigField ("String", "LOGCAT_TAG", "\"pytorch-mobilenet\"")
  }
  resnet {
    dimension "model"
    applicationIdSuffix ".resnet"
    buildConfigField ("String", "MODULE_ASSET_NAME", buildConfigProps('MODULE_ASSET_NAME_RESNET18'))
    addManifestPlaceholders([APP_NAME: "PyResnet"])
    buildConfigField ("String", "LOGCAT_TAG", "\"pytorch-resnet\"")
  }
}
```

This way we can set up several apps on the same device for comparison. `applicationIdSuffix` puts each flavor in a separate package (e.g. `org.pytorch.testapp.mobilenetQuant`), while the application name and the logcat tag differ per flavor through a manifest placeholder and another BuildConfig parameter:

```
$ adb shell pm list packages | grep pytorch
package:org.pytorch.testapp.mobilenetQuant
package:org.pytorch.testapp.resnet
```

In the future we can add other BuildConfig parameters, e.g. single/multi-threaded execution and other configuration for profiling.

At the moment there are 2 flavors, one for resnet18 and one for mobilenetQuantized,

which can be installed on a connected device:

```

cd android

```

```

gradle test_app:installMobilenetQuantDebug

```

```

gradle test_app:installResnetDebug

```

## Testing:

```
cd android
sh build_test_app.sh
adb install -r test_app/app/build/outputs/apk/mobilenetQuant/debug/test_app-mobilenetQuant-debug.apk
```

```
cd $ANDROID_NDK
python simpleperf/run_simpleperf_on_device.py record --app org.pytorch.testapp.mobilenetQuant -g --duration 10 -o /data/local/tmp/perf.data
adb pull /data/local/tmp/perf.data
python simpleperf/report_html.py
```

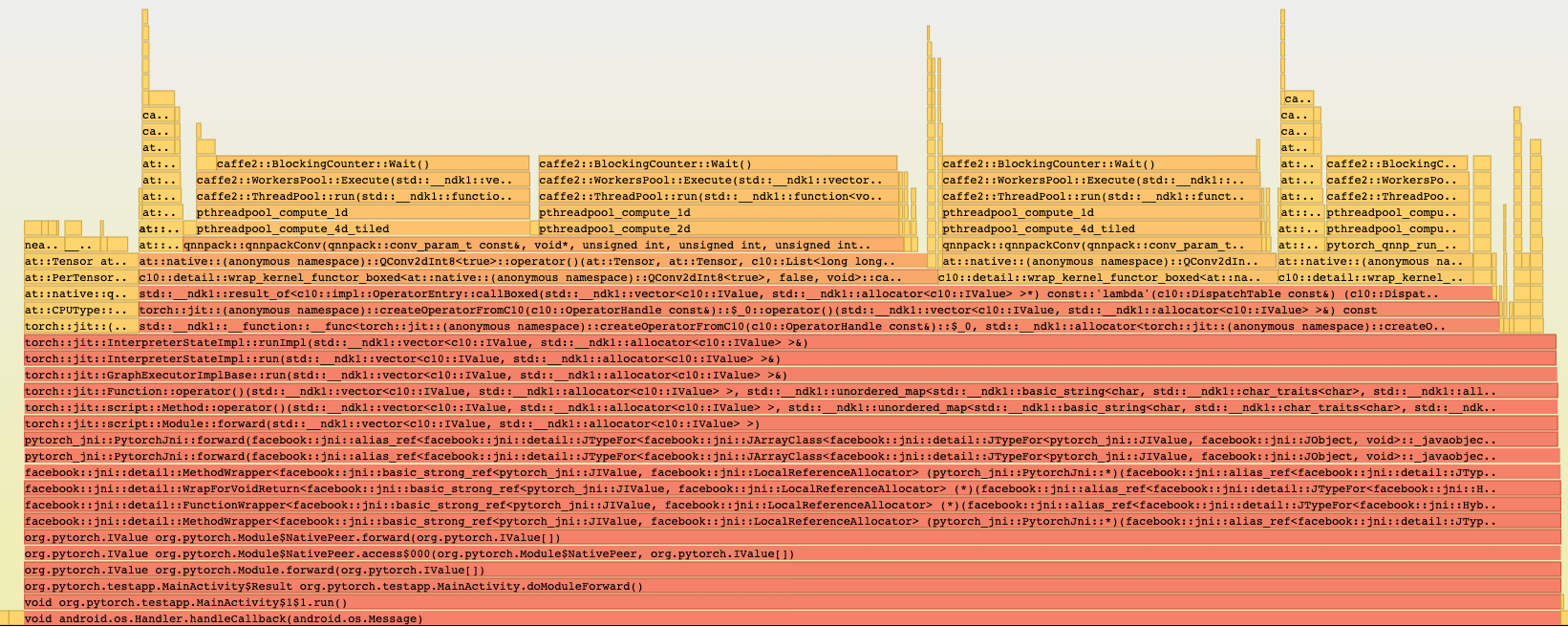

Simpleperf report has all symbols:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/28406

Differential Revision: D18386622

Pulled By: IvanKobzarev

fbshipit-source-id: 3a751192bbc4bc3c6d7f126b0b55086b4d586e7a

Summary:

Tensor already has getDataAsFloatArray(). Since Int and Byte Tensors are also supported,

this adds the symmetric methods for Int and Byte. Each of them throws

IllegalStateException if called on a tensor of a different type.
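The type check described above can be sketched as follows. This is a minimal illustration, not the actual org.pytorch.Tensor code: the class `TypedTensorSketch`, its `DType` enum, and the factory methods are hypothetical, and the names `getDataAsIntArray()` and `getDataAsByteArray()` are assumed by symmetry with `getDataAsFloatArray()`.

```java
// Hypothetical stand-in for a typed tensor; NOT the real org.pytorch.Tensor.
final class TypedTensorSketch {
    enum DType { FLOAT32, INT32, UINT8 }

    private final DType dtype;
    private final Object data; // holds a float[], int[] or byte[] matching dtype

    private TypedTensorSketch(DType dtype, Object data) {
        this.dtype = dtype;
        this.data = data;
    }

    static TypedTensorSketch ofFloats(float[] values) {
        return new TypedTensorSketch(DType.FLOAT32, values.clone());
    }

    static TypedTensorSketch ofInts(int[] values) {
        return new TypedTensorSketch(DType.INT32, values.clone());
    }

    static TypedTensorSketch ofBytes(byte[] values) {
        return new TypedTensorSketch(DType.UINT8, values.clone());
    }

    float[] getDataAsFloatArray() {
        requireType(DType.FLOAT32, "getDataAsFloatArray");
        return ((float[]) data).clone();
    }

    int[] getDataAsIntArray() {
        requireType(DType.INT32, "getDataAsIntArray");
        return ((int[]) data).clone();
    }

    byte[] getDataAsByteArray() {
        requireType(DType.UINT8, "getDataAsByteArray");
        return ((byte[]) data).clone();
    }

    // Rejects an accessor whose element type does not match the tensor's type.
    private void requireType(DType expected, String method) {
        if (dtype != expected) {
            throw new IllegalStateException(
                "Tensor of type " + dtype + " cannot return data via " + method + "()");
        }
    }
}
```

With this shape, a caller that asks an Int tensor for float data fails fast with IllegalStateException instead of silently reinterpreting the bytes.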

Pull Request resolved: https://github.com/pytorch/pytorch/pull/25183

Reviewed By: dreiss

Differential Revision: D17052674

Pulled By: IvanKobzarev

fbshipit-source-id: 1d44944461ad008e202e382152cd0690c61124f4

Summary:

TL;DR: initial commit of the Android Java/JNI wrapper of the pytorchscript C++ API.

The main idea is to provide a Java interface for Android developers to use pytorchscript modules.

The Java API tries to mirror the semantics of the C++ and Python pytorchscript APIs.

org.pytorch.Module (wrapper of torch::jit::script::Module)

- static Module load(String path)

- IValue forward(IValue... inputs)

- IValue runMethod(String methodName, IValue... inputs)

org.pytorch.Tensor (semantics of at::Tensor)

- newFloatTensor(long[] dims, float[] data)

- newFloatTensor(long[] dims, FloatBuffer data)

- newIntTensor(long[] dims, int[] data)

- newIntTensor(long[] dims, IntBuffer data)

- newByteTensor(long[] dims, byte[] data)

- newByteTensor(long[] dims, ByteBuffer data)

org.pytorch.IValue (semantics of at::IValue)

- static factory methods to create pytorchscript supported types

Usage examples of the API can be found in PytorchInstrumentedTests.java:

Module module = Module.load(path);

IValue input = IValue.tensor(Tensor.newByteTensor(new long[]{1}, Tensor.allocateByteBuffer(1)));

IValue output = module.forward(input);

Tensor outputTensor = output.getTensor();

ThreadSafety:

The API is not thread-safe; all synchronization must be done on the caller side.
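One way a caller might serialize access is sketched below. The class `SerializedRunner` is hypothetical and deliberately generic: it wraps any single-argument function (standing in for a call such as Module#forward) behind a lock, and makes no assumption about the real org.pytorch classes.

```java
import java.util.concurrent.locks.ReentrantLock;
import java.util.function.UnaryOperator;

// Hypothetical caller-side helper: runs a non-thread-safe call under a lock.
final class SerializedRunner<T> {
    private final ReentrantLock lock = new ReentrantLock();
    private final UnaryOperator<T> delegate;

    SerializedRunner(UnaryOperator<T> delegate) {
        this.delegate = delegate;
    }

    // Only one thread at a time may execute the wrapped call.
    T run(T input) {
        lock.lock();
        try {
            return delegate.apply(input);
        } finally {
            lock.unlock();
        }
    }
}
```

A caller would create one runner per module instance and route every inference through `run`, so that concurrent threads never enter the native call at the same time.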

Mutability:

The org.pytorch.Tensor buffer is a DirectBuffer with native byte order; a tensor can be created with the static factory methods by specifying a DirectBuffer.

At the moment org.pytorch.Tensor does not hold an at::Tensor on the JNI side; it has: long[] dimensions, type, DirectByteBuffer blobData

Input tensors are mutable (they can be modified and reused for the next inference).

The values are read from the buffer at the moment of the Module#forward or Module#runMethod call.

The buffers of input tensors are used directly by the input at::Tensor.

The output is copied from the output at::Tensor and is immutable.
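The buffer behaviour described above can be illustrated with plain java.nio, without the PyTorch classes. This is only a sketch: `DirectBufferSketch`, `allocateNativeFloatBuffer` and `snapshot` are hypothetical names, and `snapshot` merely imitates "values are read at call time, output is a copy".

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.FloatBuffer;

final class DirectBufferSketch {
    // Allocates a direct (off-heap) float buffer that uses the platform's byte order.
    static FloatBuffer allocateNativeFloatBuffer(int numElements) {
        return ByteBuffer.allocateDirect(numElements * Float.BYTES)
                .order(ByteOrder.nativeOrder())
                .asFloatBuffer();
    }

    // Copies the buffer's current contents into a heap array.
    // Later writes to the buffer do not affect the returned copy.
    static float[] snapshot(FloatBuffer buffer) {
        FloatBuffer view = buffer.duplicate(); // shares content, independent position
        view.rewind();
        float[] copy = new float[view.remaining()];
        view.get(copy);
        return copy;
    }

    public static void main(String[] args) {
        FloatBuffer input = allocateNativeFloatBuffer(3);
        input.put(0, 1f).put(1, 2f).put(2, 3f);

        float[] first = snapshot(input);  // values as of the first "call"
        input.put(0, 42f);                // mutate the same buffer for the next run
        float[] second = snapshot(input); // sees the updated value

        System.out.println(input.isDirect() + " " + first[0] + " " + second[0]);
    }
}
```

The point of the sketch is that the same direct buffer can be rewritten between calls, while each copy taken earlier keeps the values it was created with.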

Dependencies:

The JNI level is implemented using the fbjni library, which was developed at Facebook

and has already been used and open-sourced as part of several open-source projects.

It is added to the repo as a submodule from a personal account, so that the submodule can be switched

once fbjni is open-sourced separately.

ghstack-source-id: b39c848359a70d717f2830a15265e4aa122279c0

Pull Request resolved: https://github.com/pytorch/pytorch/pull/25084

Pull Request resolved: https://github.com/pytorch/pytorch/pull/25105

Reviewed By: dreiss

Differential Revision: D16988107

Pulled By: IvanKobzarev

fbshipit-source-id: 41ca7c9869f8370b8504c2ef8a96047cc16516d4