Mirror of https://github.com/huggingface/peft.git, synced 2025-10-22 00:18:52 +08:00

Compare commits: v0.0.2...smangrul/a (477 commits)

| SHA1 |
|---|
| f31b35448b |
| 7d44026dea |
| ba90047d70 |
| 10cf3a4fa3 |
| aac7722b9e |
| ed396a69ed |
| ec267c644a |
| 9b5808938f |
| b10a8cedf6 |
| bfb264ad96 |
| 702f9377e3 |
| 0e33ac1efe |
| e27e883443 |
| ffbb6bcf9c |
| 8541b60acb |
| 96c0277a1b |
| b15c185939 |
| a955ef1088 |
| e06d94ddeb |
| 1681cebf60 |
| a09f66c8cd |
| 1869fe6e05 |
| 1c27e24d50 |
| 30fd5a4c88 |
| 3040782e04 |
| 1b8b17de86 |
| 029f416fce |
| a1953baef6 |
| e90dcc4be4 |
| 71b326db68 |
| 42ab10699b |
| 5a0e19dda1 |
| 86ad5ce55c |
| 61a8e3a3bd |
| 0675541154 |
| fa5957f7ca |
| 5265eb7ebd |
| 878a8bc990 |
| b1bafca333 |
| 92d38b50af |
| 5de5c24a8a |
| 062d95a09e |
| c33c42f158 |
| c46d76ae3a |
| 4f542e319f |
| b5e341bb8a |
| 06fd06a4d2 |
| 7d1d959879 |
| 39ef2546d5 |
| 9f7492577f |
| bef8e3584c |
| 032fff92fb |
| 6c8659f8f9 |
| 5884bdbea4 |
| 86290e9660 |
| 563acf0832 |
| f4526d57fc |
| d9b0a118af |
| f5352f08c5 |
| 48ffd07276 |
| eb01b5ee1d |
| a7ea02a709 |
| 66fd087205 |
| 0e8932f1cb |
| e2b8e3260d |
| c476c1e348 |
| 18544647ac |
| 8af8dbd2ec |
| 39fc09ec1b |
| 016722addd |
| fd10faedfa |
| 702d06098e |
| 0b62b4378b |
| b8b84cb6ce |
| 08cb3dde57 |
| 03eb378eb9 |
| 6b81d7179f |
| 0270b7c780 |
| 38e9c650ba |
| 9320373c12 |
| 019b7ff9d6 |
| b519e3f9e1 |
| e48dfc331c |
| 4d51464045 |
| 8563a63af2 |
| eb75374fb1 |
| 1cbc985018 |
| 58f4dee67a |
| a8d11b36a3 |
| 189a6b8e35 |
| e45529b149 |
| ba7b1011b8 |
| c23be52881 |
| 7fb5f90a38 |
| fcff23f005 |
| 42a184f742 |
| 7add756923 |
| 9914e76d5b |
| 668f045972 |
| 38f48dd769 |
| db55fb34b8 |
| 76d4ecd40d |
| 27f956a73b |
| dd1c0d87fe |
| 207d290865 |
| 5e8ee44091 |
| 662ebe593e |
| c42968617b |
| 3714aa2fff |
| 0fcc30dd43 |
| d6015bc11f |
| 4fd374e80d |
| 3d7770bfd5 |
| f173f97e9d |
| ef8523b5a4 |
| 63c5c9a2c0 |
| 5ed95f49d0 |
| 8a3fcd060d |
| b1059b73aa |
| 1a1cfe3479 |
| 8e53e16005 |
| 6a18585f25 |
| e509b8207d |
| a37156c2c7 |
| 632997d1fb |
| 0c1e3b470a |
| e8f66b8a42 |
| 2c2bbb4064 |
| 3890665e60 |
| 49a20c16dc |
| af1849e805 |
| 2822398fbe |
| 1ef4b61425 |
| f703cb2414 |
| 9413b555c4 |
| 8818740bef |
| 34027fe813 |
| 0bdb54f03f |
| 4ee024846b |
| 26577aba84 |
| b21559e042 |
| c0209c35ab |
| 070e3f75f3 |
| 4ca286c333 |
| 59778af504 |
| 10a2a6db5d |
| 70af02a2bc |
| cc82b674b5 |
| 6ba67723df |
| 202f1d7c3d |
| e1c41d7183 |
| 053573e0df |
| 1117d47721 |
| f982d75fa0 |
| fdebf8ac4f |
| ff282c2a8f |
| 7b7038273a |
| 0422df466e |
| f35b20a845 |
| c22a57420c |
| 04689b6535 |
| bd1b4b5aa9 |
| 445940fb7b |
| e8b0085d2b |
| 31560c67fb |
| d5feb8b787 |
| 3258b709a3 |
| a591b4b905 |
| 3aaf482704 |
| 07a4b8aacc |
| b6c751455e |
| dee2a96fea |
| b728f5f559 |
| 74e2a3da50 |
| 1a6151b91f |
| 7397160435 |
| 75808eb2a6 |
| 382b178911 |
| a7d5e518c3 |
| 072da6d9d6 |
| 4f8c134102 |
| b8a57a3649 |
| d892beb0e7 |
| 9a534d047c |
| c240a9693c |
| b3e6ef6224 |
| 3e6a88a8f9 |
| 37e1f9ba34 |
| d936aa9349 |
| 7888f699f5 |
| deff03f2c2 |
| 405f68f54a |
| 44f3e86b62 |
| 75131959d1 |
| b9433a8208 |
| 6f1f26f426 |
| dbdb8f3757 |
| 41b2fd770f |
| d4c2bc60e4 |
| 122f708ae8 |
| 18ccde8e86 |
| d4b64c8280 |
| 96ca100e34 |
| bd80d61b2a |
| 6e0f124df3 |
| 7ed9ad04bf |
| 8266e2ee4f |
| 8c83386ef4 |
| 4cbd6cfd43 |
| 96cd039036 |
| 46ab59628c |
| f569bc682b |
| af6794e424 |
| c7e22ccd75 |
| 3d1e87cb78 |
| c2ef46f145 |
| e29d6511f5 |
| 4d3b4ab206 |
| 127a74baa2 |
| 93f1d35cc7 |
| 697b6a3fe1 |
| 9299c88a43 |
| 2fe22da3a2 |
| 50191cd1ec |
| c2e9a6681a |
| 2b8c4b0416 |
| 519c07fb00 |
| ff9a1edbfd |
| 8058709d5a |
| f413e3bdaf |
| 45d7aab39a |
| 4ddb85ce1e |
| dd30335ffd |
| 39cbd7d8ed |
| 7ef47be5f5 |
| e536616888 |
| 11edb618c3 |
| cfe992f0f9 |
| f948a9b4ae |
| 86f4e45dcc |
| 8e61e26370 |
| 622a5a231e |
| 7c31f51567 |
| 47f05fe7b5 |
| 8fd53e0045 |
| 39fb96316f |
| 165ee0b5ff |
| 221b39256d |
| de2a46a2f9 |
| 8a6004232b |
| 1d01a70d92 |
| 4d27c0c467 |
| 9ced552e65 |
| d49cde41a7 |
| e4dcfaf1b3 |
| 8f63f565c6 |
| f15548ebeb |
| d4292300a0 |
| e3b4cd4671 |
| 300abd1439 |
| ccf53ad489 |
| 542f2470e7 |
| 98f51e0876 |
| c7b5280d3c |
| d3a48a891e |
| ce61e2452a |
| d6ae6650b2 |
| 1141b125d0 |
| d6c68ae1a5 |
| df71b84341 |
| d8d1007732 |
| 51f49a5fe4 |
| 4626b36e27 |
| d242dc0e72 |
| 2c84a5ecdd |
| 002da1b450 |
| 7c8ee5814a |
| 090d074399 |
| 8ec7cb8435 |
| e9d45da4c5 |
| 64cae2aab2 |
| 7d7c598647 |
| af252b709b |
| 891584c8d9 |
| c21afbe868 |
| 13476a807c |
| 3d00af4799 |
| 098962fa65 |
| d8c3b6bca4 |
| 2632e7eba7 |
| b5b3ae3cbe |
| 13e53fc7ee |
| 54b6ce2c0e |
| 64f63a7df2 |
| df0e1fb592 |
| 1c11bc067f |
| 3b3fc47f84 |
| 321cbd6829 |
| 43cb7040c6 |
| 644d68ee6f |
| 354bea8719 |
| e85c18f019 |
| 50aaf99da7 |
| 80c96de277 |
| eb07373477 |
| f1980e9be2 |
| 8777b5606d |
| 4497d6438c |
| 3d898adb26 |
| 842b09a280 |
| 91c69a80ab |
| c1199931de |
| 5e788b329d |
| 48dc4c624e |
| d2b99c0b62 |
| baa2a4d53f |
| 27c2701555 |
| a43ef6ec72 |
| c81b6680e7 |
| 8358b27445 |
| b9451ab458 |
| ce4e6f3dd9 |
| 53eb209387 |
| a84414f6de |
| 2c532713ad |
| fa65b95b9e |
| 0a0c6ea6ea |
| 7471035885 |
| 35cd771c97 |
| 1a3680d8a7 |
| 510f172c58 |
| 6a03e43cbc |
| 4acd811429 |
| be86f90490 |
| 26b84e6fd9 |
| 94f00b7d27 |
| 7820a539dd |
| 81eec9ba70 |
| 47601bab7c |
| 99901896cc |
| 5c7fe97753 |
| aa18556c56 |
| e6bf09db80 |
| 681ce93cc1 |
| 85ad682530 |
| e19ee681ac |
| 83d6d55d4b |
| 7dfb472424 |
| a78f8a0495 |
| 6175ee2c4c |
| a3537160dc |
| 75925b1aae |
| 1ef0f89a0c |
| e6ef85a711 |
| 6f2803e8a7 |
| 1c9d197693 |
| 592b1dd99f |
| 3240c0bb36 |
| e8fbcfcac3 |
| 1a8928c5a4 |
| 173dc3dedf |
| dbf44fe316 |
| 648fcb397c |
| 7aadb6d9ec |
| 49842e1961 |
| 44d0ac3f25 |
| 43a9a42991 |
| 145b13c238 |
| 8ace5532b2 |
| c1281b96ff |
| ca7b46209a |
| 81285f30a5 |
| c9b225d257 |
| af7414a67d |
| 6d6149cf81 |
| a31dfa3001 |
| afa7739131 |
| f1ee1e4c0f |
| ed5a7bff6b |
| 42a793e2f5 |
| eb8362bbe1 |
| 5733ea9f64 |
| 36c7e3b441 |
| 0e80648010 |
| be0e79c271 |
| 5acd392880 |
| 951119fcfa |
| 29d608f481 |
| 15de814bb4 |
| a29a12701e |
| 3bd50315a6 |
| 45186ee04e |
| c8e215b989 |
| d1735e098c |
| c53ea2c9f4 |
| f8e737648a |
| b1af297707 |
| 85c7b98307 |
| e41152e5f1 |
| 9f19ce6729 |
| ae85e185ad |
| 93762cc658 |
| ed608025eb |
| 14a293a6b3 |
| c7b744db79 |
| 250edccdda |
| 1daf087682 |
| d3d601d5c3 |
| 8083c9515f |
| 73cd16b7b5 |
| 65112b75bb |
| 3cf0b7a2d4 |
| afb171eefb |
| b07ea17f49 |
| 83ded43ee7 |
| 537c971a47 |
| ed0c962ff5 |
| eec0b9329d |
| 1929a84e1e |
| 522a6b6c17 |
| 462b65fe45 |
| 2b89fbf963 |
| b5c97f2039 |
| 64d2d19598 |
| a7dd034710 |
| ed0bcdac4f |
| bdeb3778d0 |
| 185c852088 |
| a1b7e42783 |
| 3c4b64785f |
| ab43d6aa5c |
| 3cf7034e9c |
| ddb37c353c |
| dbe3b9b99e |
| 5bc815e2e2 |
| 5a43a3a321 |
| 7ae63299a8 |
| 57de1d2677 |
| 383b5abb33 |
| d8ccd7d84c |
| df5b201c6b |
| 44d8e72ca8 |
| c37ee25be7 |
| c884daf96a |
| fcd213708d |
| 915a5db0c6 |
| d53a631608 |
| b4d0885203 |
| d04f6661ee |
| 80e1b262e5 |
| dd518985ff |
| a17cea104e |
| 3f9b310c6a |
| 06e49c0a87 |
| 6cf2cf5dae |
| 3faaf0916a |
| 6c9534e660 |
| 22295c4278 |
| 16182ea972 |
| ad69958e52 |
| f8a2829318 |
| 634f3692d8 |
| 2cc7f2cbac |
| 2896cf05fb |
| 776a28f053 |
| d75746be70 |
| 1dbe7fc0db |
| ff8a5b9a69 |
| 36267af51b |
| fef162cff8 |
| a8587916c8 |
| 77670ead76 |
| 360fb2f816 |
| a40f20ad6c |
| 407482eb37 |
| d9e7d6cd22 |
| dbf438f99d |

.github/ISSUE_TEMPLATE/bug-report.yml (new file, 71 lines)

```yaml
name: "\U0001F41B Bug Report"
description: Submit a bug report to help us improve the library
body:
  - type: textarea
    id: system-info
    attributes:
      label: System Info
      description: Please share your relevant system information with us
      placeholder: peft & accelerate & transformers version, platform, python version, ...
    validations:
      required: true

  - type: textarea
    id: who-can-help
    attributes:
      label: Who can help?
      description: |
        Your issue will be replied to more quickly if you can figure out the right person to tag with @
        If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

        All issues are read by one of the core maintainers, so if you don't know who to tag, just leave this blank and
        a core maintainer will ping the right person.

        Please tag fewer than 3 people.

        Library: @pacman100 @younesbelkada @sayakpaul

        Documentation: @stevhliu and @MKhalusova

      placeholder: "@Username ..."

  - type: checkboxes
    id: information-scripts-examples
    attributes:
      label: Information
      description: 'The problem arises when using:'
      options:
        - label: "The official example scripts"
        - label: "My own modified scripts"

  - type: checkboxes
    id: information-tasks
    attributes:
      label: Tasks
      description: "The tasks I am working on are:"
      options:
        - label: "An officially supported task in the `examples` folder"
        - label: "My own task or dataset (give details below)"

  - type: textarea
    id: reproduction
    validations:
      required: true
    attributes:
      label: Reproduction
      description: |
        Please provide a code sample that reproduces the problem you ran into. It can be a Colab link or just a code snippet.
        Please provide the simplest reproducer as possible so that we can quickly fix the issue.

      placeholder: |
        Reproducer:



  - type: textarea
    id: expected-behavior
    validations:
      required: true
    attributes:
      label: Expected behavior
      description: "A clear and concise description of what you would expect to happen."
```

.github/ISSUE_TEMPLATE/feature-request.yml (new file, 30 lines)

```yaml
name: "\U0001F680 Feature request"
description: Submit a proposal/request for a new feature
labels: [ "feature" ]
body:
  - type: textarea
    id: feature-request
    validations:
      required: true
    attributes:
      label: Feature request
      description: |
        A clear and concise description of the feature proposal. Please provide a link to the paper and code in case they exist.

  - type: textarea
    id: motivation
    validations:
      required: true
    attributes:
      label: Motivation
      description: |
        Please outline the motivation for the proposal. Is your feature request related to a problem?

  - type: textarea
    id: contribution
    validations:
      required: true
    attributes:
      label: Your contribution
      description: |
        Is there any way that you could help, e.g. by submitting a PR?
```

.github/workflows/build_docker_images.yml (new file, 74 lines)

```yaml
name: Build Docker images (scheduled)

on:
  workflow_dispatch:
  workflow_call:
  schedule:
    - cron: "0 1 * * *"

concurrency:
  group: docker-image-builds
  cancel-in-progress: false

jobs:
  latest-cpu:
    name: "Latest Peft CPU [dev]"
    runs-on: ubuntu-latest
    steps:
      - name: Cleanup disk
        run: |
          sudo ls -l /usr/local/lib/
          sudo ls -l /usr/share/
          sudo du -sh /usr/local/lib/
          sudo du -sh /usr/share/
          sudo rm -rf /usr/local/lib/android
          sudo rm -rf /usr/share/dotnet
          sudo du -sh /usr/local/lib/
          sudo du -sh /usr/share/
      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v1
      - name: Check out code
        uses: actions/checkout@v2
      - name: Login to DockerHub
        uses: docker/login-action@v2
        with:
          username: ${{ secrets.DOCKERHUB_USERNAME }}
          password: ${{ secrets.DOCKERHUB_PASSWORD }}

      - name: Build and Push CPU
        uses: docker/build-push-action@v4
        with:
          context: ./docker/peft-cpu
          push: true
          tags: huggingface/peft-cpu

  latest-cuda:
    name: "Latest Peft GPU [dev]"
    runs-on: ubuntu-latest
    steps:
      - name: Cleanup disk
        run: |
          sudo ls -l /usr/local/lib/
          sudo ls -l /usr/share/
          sudo du -sh /usr/local/lib/
          sudo du -sh /usr/share/
          sudo rm -rf /usr/local/lib/android
          sudo rm -rf /usr/share/dotnet
          sudo du -sh /usr/local/lib/
          sudo du -sh /usr/share/
      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v1
      - name: Check out code
        uses: actions/checkout@v2
      - name: Login to DockerHub
        uses: docker/login-action@v1
        with:
          username: ${{ secrets.DOCKERHUB_USERNAME }}
          password: ${{ secrets.DOCKERHUB_PASSWORD }}

      - name: Build and Push GPU
        uses: docker/build-push-action@v2
        with:
          context: ./docker/peft-gpu
          push: true
          tags: huggingface/peft-gpu
```
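The `schedule` trigger above uses the cron expression `0 1 * * *`. GitHub Actions evaluates scheduled workflows in UTC, so this reads "every day at 01:00 UTC"; `nightly.yml` uses `0 2 * * *` and `stale.yml` uses `0 15 * * *`. The following is a minimal Python sketch of how such a daily expression maps to the next trigger time. It assumes only the `M H * * *` form that appears in these files, and `next_daily_run` is a hypothetical helper, not a GitHub or library API.

```python
from datetime import datetime, timedelta, timezone


def next_daily_run(cron: str, now: datetime) -> datetime:
    """Next trigger time for a daily cron expression of the form 'M H * * *'.

    Sketch only: the day-of-month, month and day-of-week fields must all be '*'.
    GitHub Actions interprets `schedule` crons in UTC, so the result is in UTC.
    """
    minute, hour, day_of_month, month, day_of_week = cron.split()
    if (day_of_month, month, day_of_week) != ("*", "*", "*"):
        raise ValueError("this sketch only handles daily 'M H * * *' expressions")
    now_utc = now.astimezone(timezone.utc)
    candidate = now_utc.replace(hour=int(hour), minute=int(minute), second=0, microsecond=0)
    if candidate <= now_utc:
        # Today's slot has already passed, so the next one is tomorrow.
        candidate += timedelta(days=1)
    return candidate


now = datetime(2023, 4, 1, 1, 30, tzinfo=timezone.utc)
print(next_daily_run("0 1 * * *", now))  # 2023-04-02 01:00:00+00:00 (Docker image build)
print(next_daily_run("0 2 * * *", now))  # 2023-04-01 02:00:00+00:00 (nightly slow tests)
```

With these two expressions, the nightly GPU tests start one hour after the Docker images are rebuilt. Presumably the gap lets the `huggingface/peft-gpu` image be pushed before `nightly.yml` pulls `huggingface/peft-gpu:latest`, but nothing in the workflows enforces that ordering.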

.github/workflows/build_documentation.yml (new file, 19 lines)

```yaml
name: Build documentation

on:
  push:
    branches:
      - main
      - doc-builder*
      - v*-release

jobs:
  build:
    uses: huggingface/doc-builder/.github/workflows/build_main_documentation.yml@main
    with:
      commit_sha: ${{ github.sha }}
      package: peft
      notebook_folder: peft_docs
    secrets:
      token: ${{ secrets.HUGGINGFACE_PUSH }}
      hf_token: ${{ secrets.HF_DOC_BUILD_PUSH }}
```
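The `branches` filter above mixes an exact name (`main`) with glob patterns (`doc-builder*`, `v*-release`). In GitHub's filter-pattern syntax, `*` matches any characters except `/`, while `**` also matches `/`. The Python sketch below translates only those two wildcards into a regular expression to show which branch names would trigger a documentation build. GitHub's other special characters (such as `?`, `+`, `[]` and `!`) are treated literally here, and the helper names are hypothetical.

```python
import re


def filter_to_regex(pattern: str):
    """Translate a subset of GitHub Actions branch-filter syntax to a compiled regex.

    Sketch only: '**' matches any characters (including '/'), '*' matches any
    characters except '/', and every other character is matched literally.
    """
    parts = []
    i = 0
    while i < len(pattern):
        if pattern.startswith("**", i):
            parts.append(".*")
            i += 2
        elif pattern[i] == "*":
            parts.append("[^/]*")
            i += 1
        else:
            parts.append(re.escape(pattern[i]))
            i += 1
    return re.compile("".join(parts))


# Patterns copied from `on.push.branches` in build_documentation.yml
BRANCHES = ["main", "doc-builder*", "v*-release"]


def triggers_docs_build(branch: str) -> bool:
    return any(filter_to_regex(p).fullmatch(branch) for p in BRANCHES)


for name in ["main", "doc-builder-test", "v0.3.0-release", "feature/lora", "v1/x-release"]:
    print(name, triggers_docs_build(name))
```

Because of the `/` rule, a branch such as `v1/x-release` would not match `v*-release`.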

.github/workflows/build_pr_documentation.yml (new file, 16 lines)

```yaml
name: Build PR Documentation

on:
  pull_request:

concurrency:
  group: ${{ github.workflow }}-${{ github.head_ref || github.run_id }}
  cancel-in-progress: true

jobs:
  build:
    uses: huggingface/doc-builder/.github/workflows/build_pr_documentation.yml@main
    with:
      commit_sha: ${{ github.event.pull_request.head.sha }}
      pr_number: ${{ github.event.number }}
      package: peft
```
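The `concurrency.group` above is `${{ github.workflow }}-${{ github.head_ref || github.run_id }}`. In GitHub expressions, `a || b` yields `a` when it is truthy and `b` otherwise, and `github.head_ref` is only populated for pull-request events. The following Python sketch shows the resulting group keys and what `cancel-in-progress: true` does to overlapping runs. The branch names and run IDs are made up, and the helpers are hypothetical.

```python
WORKFLOW = "Build PR Documentation"


def concurrency_group(workflow: str, head_ref: str, run_id: int) -> str:
    # `a || b` in a GitHub expression evaluates to `a` if truthy, else `b`;
    # `github.head_ref` is an empty string outside pull-request events.
    return f"{workflow}-{head_ref or run_id}"


def surviving_runs(runs):
    """Runs given as (head_ref, run_id) in start order, assumed to overlap in time.

    With cancel-in-progress: true, a newer run cancels the in-progress run of the
    same group, so only the latest run per group keeps going.
    """
    latest = {}
    for head_ref, run_id in runs:
        latest[concurrency_group(WORKFLOW, head_ref, run_id)] = run_id
    return sorted(latest.values())


print(concurrency_group(WORKFLOW, "fix-typo", 101))  # Build PR Documentation-fix-typo
print(surviving_runs([("fix-typo", 101), ("fix-typo", 102), ("add-ia3", 103)]))  # [102, 103]
```

Because this workflow only runs on `pull_request`, `head_ref` should always be set. The `run_id` fallback gives any other run a unique group, so such runs would never cancel each other.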

.github/workflows/delete_doc_comment.yml (new file, 14 lines)

```yaml
name: Delete doc comment

on:
  workflow_run:
    workflows: ["Delete doc comment trigger"]
    types:
      - completed


jobs:
  delete:
    uses: huggingface/doc-builder/.github/workflows/delete_doc_comment.yml@main
    secrets:
      comment_bot_token: ${{ secrets.COMMENT_BOT_TOKEN }}
```

.github/workflows/delete_doc_comment_trigger.yml (new file, 12 lines)

```yaml
name: Delete doc comment trigger

on:
  pull_request:
    types: [ closed ]


jobs:
  delete:
    uses: huggingface/doc-builder/.github/workflows/delete_doc_comment_trigger.yml@main
    with:
      pr_number: ${{ github.event.number }}
```

.github/workflows/nightly.yml (new file, 101 lines)

```yaml
name: Self-hosted runner with slow tests (scheduled)

on:
  workflow_dispatch:
  schedule:
    - cron: "0 2 * * *"

env:
  RUN_SLOW: "yes"
  IS_GITHUB_CI: "1"
  SLACK_API_TOKEN: ${{ secrets.SLACK_API_TOKEN }}


jobs:
  run_all_tests_single_gpu:
    runs-on: [self-hosted, docker-gpu, multi-gpu]
    env:
      CUDA_VISIBLE_DEVICES: "0"
      TEST_TYPE: "single_gpu"
    container:
      image: huggingface/peft-gpu:latest
      options: --gpus all --shm-size "16gb"
    defaults:
      run:
        working-directory: peft/
        shell: bash
    steps:
      - name: Update clone & pip install
        run: |
          source activate peft
          git config --global --add safe.directory '*'
          git fetch && git checkout ${{ github.sha }}
          pip install -e . --no-deps
          pip install pytest-reportlog

      - name: Run common tests on single GPU
        run: |
          source activate peft
          make tests_common_gpu

      - name: Run examples on single GPU
        run: |
          source activate peft
          make tests_examples_single_gpu

      - name: Run core tests on single GPU
        run: |
          source activate peft
          make tests_core_single_gpu

      - name: Generate Report
        if: always()
        run: |
          pip install slack_sdk tabulate
          python scripts/log_reports.py >> $GITHUB_STEP_SUMMARY

  run_all_tests_multi_gpu:
    runs-on: [self-hosted, docker-gpu, multi-gpu]
    env:
      CUDA_VISIBLE_DEVICES: "0,1"
      TEST_TYPE: "multi_gpu"
    container:
      image: huggingface/peft-gpu:latest
      options: --gpus all --shm-size "16gb"
    defaults:
      run:
        working-directory: peft/
        shell: bash
    steps:
      - name: Update clone
        run: |
          source activate peft
          git config --global --add safe.directory '*'
          git fetch && git checkout ${{ github.sha }}
          pip install -e . --no-deps
          pip install pytest-reportlog

      - name: Run core GPU tests on multi-gpu
        run: |
          source activate peft

      - name: Run common tests on multi GPU
        run: |
          source activate peft
          make tests_common_gpu

      - name: Run examples on multi GPU
        run: |
          source activate peft
          make tests_examples_multi_gpu

      - name: Run core tests on multi GPU
        run: |
          source activate peft
          make tests_core_multi_gpu

      - name: Generate Report
        if: always()
        run: |
          pip install slack_sdk tabulate
          python scripts/log_reports.py >> $GITHUB_STEP_SUMMARY
```
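Both jobs in `nightly.yml` use the same runner labels and start the container with `--gpus all`. What separates the single-GPU run from the multi-GPU run is `CUDA_VISIBLE_DEVICES` (`"0"` versus `"0,1"`). The following Python sketch shows how such a value is read, assuming plain integer indices. CUDA also accepts GPU UUIDs, which this sketch does not handle, and the helper names are hypothetical.

```python
from typing import Dict, List


def visible_gpus(value: str) -> List[int]:
    """Parse a CUDA_VISIBLE_DEVICES value made of comma-separated integer indices."""
    return [int(part) for part in value.split(",") if part.strip()]


def logical_to_physical(value: str) -> Dict[int, int]:
    # Inside the process, the listed devices are renumbered 0..n-1 in listing order.
    return dict(enumerate(visible_gpus(value)))


jobs = {"run_all_tests_single_gpu": "0", "run_all_tests_multi_gpu": "0,1"}
for job, value in jobs.items():
    print(job, len(visible_gpus(value)), logical_to_physical(value))
```

Inside the container, the visible devices are renumbered from 0 in the order listed. For example, a value like `"2,3"` would still appear to PyTorch as `cuda:0` and `cuda:1`.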

.github/workflows/stale.yml (new file, 27 lines)

```yaml
name: Stale Bot

on:
  schedule:
    - cron: "0 15 * * *"

jobs:
  close_stale_issues:
    name: Close Stale Issues
    if: github.repository == 'huggingface/peft'
    runs-on: ubuntu-latest
    env:
      GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
    steps:
      - uses: actions/checkout@v3

      - name: Setup Python
        uses: actions/setup-python@v4
        with:
          python-version: 3.8

      - name: Install requirements
        run: |
          pip install PyGithub
      - name: Close stale issues
        run: |
          python scripts/stale.py
```

.github/workflows/tests.yml (new file, 49 lines)

```yaml
name: tests

on:
  push:
    branches: [main]
  pull_request:

jobs:
  check_code_quality:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      - name: Set up Python
        uses: actions/setup-python@v4
        with:
          python-version: "3.8"
          cache: "pip"
          cache-dependency-path: "setup.py"
      - name: Install dependencies
        run: |
          python -m pip install --upgrade pip
          pip install .[dev]
      - name: Check quality
        run: |
          make quality

  tests:
    needs: check_code_quality
    strategy:
      matrix:
        python-version: ["3.8", "3.9", "3.10"]
        os: ["ubuntu-latest", "macos-latest", "windows-latest"]
    runs-on: ${{ matrix.os }}
    steps:
      - uses: actions/checkout@v3
      - name: Set up Python ${{ matrix.python-version }}
        uses: actions/setup-python@v4
        with:
          python-version: ${{ matrix.python-version }}
          cache: "pip"
          cache-dependency-path: "setup.py"
      - name: Install dependencies
        run: |
          python -m pip install --upgrade pip
          # cpu version of pytorch
          pip install -e .[test]
      - name: Test with pytest
        run: |
          make test
```
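The `tests` job above declares a two-axis `strategy.matrix`. GitHub Actions starts one job per combination of values, and because of `needs:`, only after `check_code_quality` succeeds. The following Python sketch performs that expansion with `itertools.product`. The printed order is just the product order, not necessarily the order in which GitHub schedules the jobs.

```python
from itertools import product

# Values copied from the `strategy.matrix` block of tests.yml
MATRIX = {
    "python-version": ["3.8", "3.9", "3.10"],
    "os": ["ubuntu-latest", "macos-latest", "windows-latest"],
}


def expand(matrix):
    """Cartesian product of the matrix axes, one dict per job."""
    keys = list(matrix)
    return [dict(zip(keys, combo)) for combo in product(*(matrix[key] for key in keys))]


jobs = expand(MATRIX)
print(len(jobs))  # 9
print(jobs[0])  # {'python-version': '3.8', 'os': 'ubuntu-latest'}
```

The version strings are quoted for a reason. Unquoted, YAML would read `3.10` as the float `3.1`, which names a different Python version.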

.github/workflows/upload_pr_documentation.yml (new file, 16 lines)

```yaml
name: Upload PR Documentation

on:
  workflow_run:
    workflows: ["Build PR Documentation"]
    types:
      - completed

jobs:
  build:
    uses: huggingface/doc-builder/.github/workflows/upload_pr_documentation.yml@main
    with:
      package_name: peft
    secrets:
      hf_token: ${{ secrets.HF_DOC_BUILD_PUSH }}
      comment_bot_token: ${{ secrets.COMMENT_BOT_TOKEN }}
```
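`upload_pr_documentation.yml` and `delete_doc_comment.yml` are not triggered by pull requests directly. Each one uses `workflow_run` to wait for another workflow, identified by its `name:`, to reach the `completed` state. The Python sketch below models that chaining using the workflow names from these files. `LISTENERS`, `triggered_by` and `run_chain` are hypothetical helpers, and the model ignores the fact that `completed` fires whatever the upstream run's conclusion is.

```python
from collections import deque
from typing import Dict, List

# `on.workflow_run.workflows` for each listening workflow, copied from the files above
LISTENERS: Dict[str, List[str]] = {
    "Delete doc comment": ["Delete doc comment trigger"],
    "Upload PR Documentation": ["Build PR Documentation"],
}


def triggered_by(completed: str) -> List[str]:
    """Workflows whose workflow_run trigger lists `completed`."""
    return sorted(name for name, sources in LISTENERS.items() if completed in sources)


def run_chain(first: str) -> List[str]:
    """Breadth-first order in which workflows would start once `first` has run."""
    order: List[str] = []
    seen = set()
    queue = deque([first])
    while queue:
        current = queue.popleft()
        if current in seen:  # guard against cycles
            continue
        seen.add(current)
        order.append(current)
        queue.extend(triggered_by(current))
    return order


print(run_chain("Build PR Documentation"))  # ['Build PR Documentation', 'Upload PR Documentation']
print(run_chain("Delete doc comment trigger"))  # ['Delete doc comment trigger', 'Delete doc comment']
```

The files do not say why the work is split this way. A likely motivation is that `pull_request` runs from forks receive no repository secrets, so the steps that need `HF_DOC_BUILD_PUSH` and `COMMENT_BOT_TOKEN` run in the separately triggered workflows instead.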

```
@ -1 +0,0 @@
include LICENSE
```

Makefile (31 lines changed)

```
@ -1,19 +1,36 @@
.PHONY: quality style test docs

check_dirs := src examples
check_dirs := src tests examples docs

# Check that source code meets quality standards

# this target runs checks on all files
quality:
	black --check $(check_dirs)
	isort --check-only $(check_dirs)
	flake8 $(check_dirs)
	doc-builder style src --max_len 119 --check_only
	ruff $(check_dirs)
	doc-builder style src/peft tests docs/source --max_len 119 --check_only

# Format source code automatically and check is there are any problems left that need manual fixing
style:
	black $(check_dirs)
	isort $(check_dirs)
	doc-builder style src --max_len 119

	ruff $(check_dirs) --fix
	doc-builder style src/peft tests docs/source --max_len 119

test:
	python -m pytest -n 3 tests/ $(if $(IS_GITHUB_CI),--report-log "ci_tests.log",)

tests_examples_multi_gpu:
	python -m pytest -m multi_gpu_tests tests/test_gpu_examples.py $(if $(IS_GITHUB_CI),--report-log "multi_gpu_examples.log",)

tests_examples_single_gpu:
	python -m pytest -m single_gpu_tests tests/test_gpu_examples.py $(if $(IS_GITHUB_CI),--report-log "single_gpu_examples.log",)

tests_core_multi_gpu:
	python -m pytest -m multi_gpu_tests tests/test_common_gpu.py $(if $(IS_GITHUB_CI),--report-log "core_multi_gpu.log",)

tests_core_single_gpu:
	python -m pytest -m single_gpu_tests tests/test_common_gpu.py $(if $(IS_GITHUB_CI),--report-log "core_single_gpu.log",)

tests_common_gpu:
	python -m pytest tests/test_decoder_models.py $(if $(IS_GITHUB_CI),--report-log "common_decoder.log",)
	python -m pytest tests/test_encoder_decoder_models.py $(if $(IS_GITHUB_CI),--report-log "common_encoder_decoder.log",)

```
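Each test target above appends `--report-log "<file>.log"` only when `IS_GITHUB_CI` is set. GNU Make's `$(if condition,then-part,else-part)` takes the then-part when the condition expands to a non-empty string (after stripping surrounding whitespace), and environment variables such as `IS_GITHUB_CI: "1"` from `nightly.yml` are visible as Make variables. The following is a Python sketch of the same logic. `pytest_command` is a hypothetical helper; `--report-log` itself comes from the `pytest-reportlog` plugin that `nightly.yml` installs.

```python
import os
import shlex
from typing import List, Mapping, Optional, Sequence


def pytest_command(
    target_args: Sequence[str], log_name: str, env: Optional[Mapping[str, str]] = None
) -> List[str]:
    """Mirror `python -m pytest <args> $(if $(IS_GITHUB_CI),--report-log "<log>",)`.

    Make treats any non-empty condition as true (even "0"), after stripping
    leading and trailing whitespace, so this sketch does the same.
    """
    env = os.environ if env is None else env
    cmd = ["python", "-m", "pytest", *target_args]
    if env.get("IS_GITHUB_CI", "").strip():
        cmd += ["--report-log", log_name]
    return cmd


ci = {"IS_GITHUB_CI": "1"}  # value set in the `env:` block of nightly.yml
print(shlex.join(pytest_command(["-n", "3", "tests/"], "ci_tests.log", ci)))
# python -m pytest -n 3 tests/ --report-log ci_tests.log
print(shlex.join(pytest_command(["-n", "3", "tests/"], "ci_tests.log", {})))
# python -m pytest -n 3 tests/
```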

README.md (194 lines changed)

````
@ -21,24 +21,26 @@ limitations under the License.

Parameter-Efficient Fine-Tuning (PEFT) methods enable efficient adaptation of pre-trained language models (PLMs) to various downstream applications without fine-tuning all the model's parameters. Fine-tuning large-scale PLMs is often prohibitively costly. In this regard, PEFT methods only fine-tune a small number of (extra) model parameters, thereby greatly decreasing the computational and storage costs. Recent State-of-the-Art PEFT techniques achieve performance comparable to that of full fine-tuning.

Seamlessly integrated with 🤗 Accelerate for large scale models leveraging PyTorch FSDP.
Seamlessly integrated with 🤗 Accelerate for large scale models leveraging DeepSpeed and Big Model Inference.

Supported methods:

1. LoRA: [LORA: LOW-RANK ADAPTATION OF LARGE LANGUAGE MODELS](https://arxiv.org/pdf/2106.09685.pdf)
2. Prefix Tuning: [P-Tuning v2: Prompt Tuning Can Be Comparable to Fine-tuning Universally Across Scales and Tasks](https://arxiv.org/pdf/2110.07602.pdf)
3. P-Tuning: [GPT Understands, Too](https://arxiv.org/pdf/2103.10385.pdf)
4. Prompt Tuning: [The Power of Scale for Parameter-Efficient Prompt Tuning](https://arxiv.org/pdf/2104.08691.pdf)
1. LoRA: [LORA: LOW-RANK ADAPTATION OF LARGE LANGUAGE MODELS](https://arxiv.org/abs/2106.09685)
2. Prefix Tuning: [Prefix-Tuning: Optimizing Continuous Prompts for Generation](https://aclanthology.org/2021.acl-long.353/), [P-Tuning v2: Prompt Tuning Can Be Comparable to Fine-tuning Universally Across Scales and Tasks](https://arxiv.org/pdf/2110.07602.pdf)
3. P-Tuning: [GPT Understands, Too](https://arxiv.org/abs/2103.10385)
4. Prompt Tuning: [The Power of Scale for Parameter-Efficient Prompt Tuning](https://arxiv.org/abs/2104.08691)
5. AdaLoRA: [Adaptive Budget Allocation for Parameter-Efficient Fine-Tuning](https://arxiv.org/abs/2303.10512)
6. $(IA)^3$ : [Infused Adapter by Inhibiting and Amplifying Inner Activations](https://arxiv.org/abs/2205.05638)

## Getting started

```python
from transformers import AutoModelForSeq2SeqLM
from peft import get_peft_config, get_peft_model, LoRAConfig, TaskType
from peft import get_peft_config, get_peft_model, LoraConfig, TaskType
model_name_or_path = "bigscience/mt0-large"
tokenizer_name_or_path = "bigscience/mt0-large"

peft_config = LoRAConfig(
peft_config = LoraConfig(
    task_type=TaskType.SEQ_2_SEQ_LM, inference_mode=False, r=8, lora_alpha=32, lora_dropout=0.1
)

````
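The `LoraConfig` in the Getting started snippet uses `r=8` and `lora_alpha=32`. In the LoRA paper, a frozen weight `W` (d×k) is adapted by adding a low-rank product `B A` (B: d×r, A: r×k) scaled by `alpha / r`, with `B` initialised to zero so that training starts from the unmodified model. The pure-Python sketch below illustrates that update and the resulting trainable-parameter count. It is not PEFT's implementation, which operates on `torch.nn.Linear` modules and also applies `lora_dropout` (omitted here), and the helper names are hypothetical.

```python
import random


def matvec(M, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


def lora_forward(W, A, B, x, alpha, r):
    """h = W x + (alpha / r) * B (A x); dropout on x is omitted in this sketch."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / r
    return [h + scale * u for h, u in zip(base, update)]


def trainable_params(d, k, r):
    """Frozen weight has d*k entries; LoRA trains A (r x k) and B (d x r)."""
    return d * k, r * (d + k)


random.seed(0)
d, k, r, alpha = 32, 32, 8, 32  # r and alpha mirror r=8, lora_alpha=32 above
W = [[random.gauss(0, 1) for _ in range(k)] for _ in range(d)]
A = [[random.gauss(0, 0.01) for _ in range(k)] for _ in range(r)]
B = [[0.0] * r for _ in range(d)]  # zero B: the adapter is a no-op at initialisation
x = [random.gauss(0, 1) for _ in range(k)]

assert lora_forward(W, A, B, x, alpha, r) == matvec(W, x)
print(trainable_params(1024, 1024, 8))  # (1048576, 16384)
```

For a 1024×1024 projection, rank 8 trains 16,384 parameters instead of 1,048,576, which is about 1.6%.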

````
@ -52,7 +54,7 @@ model.print_trainable_parameters()

### Get comparable performance to full finetuning by adapting LLMs to downstream tasks using consumer hardware

GPU memory required for adapting LLMs on the few-shot dataset `ought/raft/twitter_complaints`. Here, settings considered
GPU memory required for adapting LLMs on the few-shot dataset [`ought/raft/twitter_complaints`](https://huggingface.co/datasets/ought/raft/viewer/twitter_complaints). Here, settings considered
are full finetuning, PEFT-LoRA using plain PyTorch and PEFT-LoRA using DeepSpeed with CPU Offloading.

Hardware: Single A100 80GB GPU with CPU RAM above 64GB
````

@ -63,9 +65,9 @@ Hardware: Single A100 80GB GPU with CPU RAM above 64GB

|

||||

| bigscience/mt0-xxl (12B params) | OOM GPU | 56GB GPU / 3GB CPU | 22GB GPU / 52GB CPU |

|

||||

| bigscience/bloomz-7b1 (7B params) | OOM GPU | 32GB GPU / 3.8GB CPU | 18.1GB GPU / 35GB CPU |

|

||||

|

||||

Performance of PEFT-LoRA tuned [`bigscience/T0_3B`](https://huggingface.co/bigscience/T0_3B) on the [`ought/raft/twitter_complaints`](https://huggingface.co/datasets/ought/raft/viewer/twitter_complaints) leaderboard.

A point to note is that we didn't try to squeeze out more performance by tuning the input instruction templates, LoRA hyperparameters, or other training-related hyperparameters. Also, we didn't use the larger 13B [mt0-xxl](https://huggingface.co/bigscience/mt0-xxl) model.

So, we are already seeing performance comparable to SoTA with parameter efficient tuning. Also, the final checkpoint size is just `19MB`, compared to the `11GB` size of the backbone [`bigscience/T0_3B`](https://huggingface.co/bigscience/T0_3B) model.

| Submission Name | Accuracy |
| --------- | ---- |

**Therefore, we can see that performance comparable to SoTA is achievable by PEFT methods with consumer hardware such as 16GB and 24GB GPUs.**

An insightful blogpost explaining the advantages of using PEFT for fine-tuning FlanT5-XXL: [https://www.philschmid.de/fine-tune-flan-t5-peft](https://www.philschmid.de/fine-tune-flan-t5-peft)
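The small checkpoint sizes in this section, such as `19MB` for `bigscience/T0_3B`, come from storing only the low-rank LoRA matrices. For a weight of shape d_out × d_in, LoRA trains A (r × d_in) and B (d_out × r), which is r·(d_in + d_out) parameters per adapted matrix. The sketch below turns that count into a rough fp32 checkpoint size. The rank, the matrix shapes and the number of adapted matrices are hypothetical and do not reproduce the configuration behind the quoted numbers.

```python
def lora_param_count(d_in: int, d_out: int, r: int) -> int:
    """Trainable params LoRA adds to one d_out x d_in weight: A (r x d_in) + B (d_out x r)."""
    return r * (d_in + d_out)


def checkpoint_size_mb(num_params: int, bytes_per_param: int = 4) -> float:
    """Approximate on-disk size in MB (1 MB = 1e6 bytes), assuming fp32 tensors."""
    return num_params * bytes_per_param / 1e6


# HYPOTHETICAL setup: rank 8 on 96 square 1024 x 1024 projection matrices.
per_matrix = lora_param_count(d_in=1024, d_out=1024, r=8)
total = 96 * per_matrix
print(f"{total:,} trainable params ~ {checkpoint_size_mb(total):.1f} MB")
```

Because the adapter size grows with r·(d_in + d_out) and not with d_in·d_out, the checkpoint stays in the MB range even when the frozen backbone is many GB.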

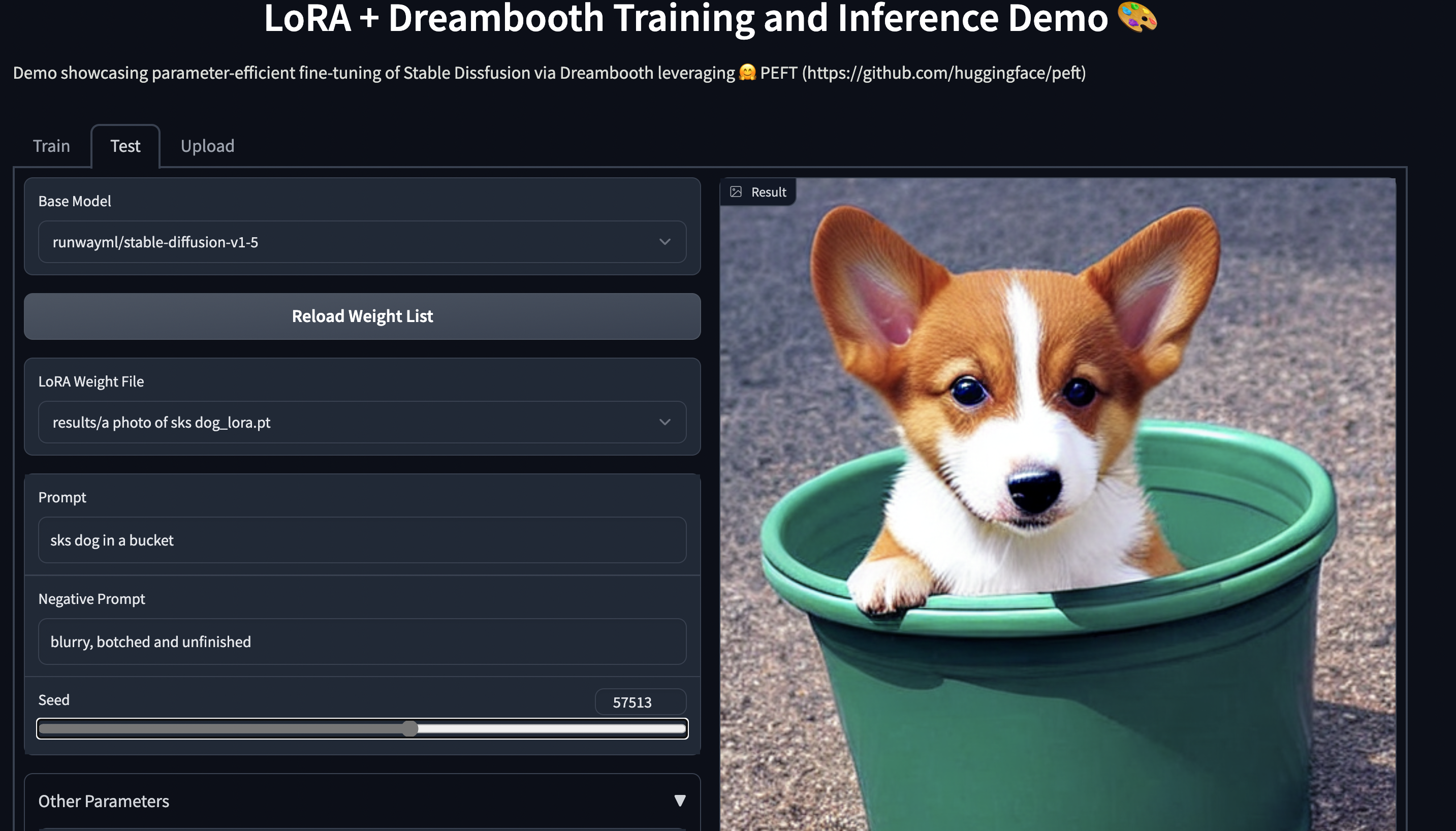

### Parameter Efficient Tuning of Diffusion Models

GPU memory required by different settings during training is given below. The final checkpoint size is `8.8 MB`.

Hardware: Single A100 80GB GPU with CPU RAM above 64GB

| Model | Full Finetuning | PEFT-LoRA | PEFT-LoRA with Gradient Checkpointing |
| --------- | ---- | ---- | ---- |
| CompVis/stable-diffusion-v1-4 | 27.5GB GPU / 3.97GB CPU | 15.5GB GPU / 3.84GB CPU | 8.12GB GPU / 3.77GB CPU |

**Training**

An example of using LoRA for parameter efficient dreambooth training is given in [`examples/lora_dreambooth/train_dreambooth.py`](examples/lora_dreambooth/train_dreambooth.py)

```bash
export MODEL_NAME="CompVis/stable-diffusion-v1-4" # or "stabilityai/stable-diffusion-2-1"
```

**NEW** ✨ Multi Adapter support and combining multiple LoRA adapters in a weighted combination
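A weighted combination of LoRA adapters can be pictured as follows. Each adapter's low-rank update ΔW = scaling · B·A is scaled by a per-adapter weight, the scaled updates are summed, and the sum is added to the frozen base weight. The pure-Python sketch below shows only that idea. The helper names and toy matrices are hypothetical, and PEFT's own implementation may differ, for example in how it handles adapters of different ranks, the scaling factors, and the shape of the combined adapter.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain nested-list matrix product (a: n x k, b: k x m)."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def lora_delta(B: Matrix, A: Matrix, scaling: float = 1.0) -> Matrix:
    """LoRA update delta_W = scaling * (B @ A), with B: d_out x r and A: r x d_in."""
    return [[scaling * x for x in row] for row in matmul(B, A)]


def weighted_combination(deltas: Sequence[Matrix], weights: Sequence[float]) -> Matrix:
    """Element-wise weighted sum of several adapters' delta_W matrices (hypothetical helper)."""
    rows, cols = len(deltas[0]), len(deltas[0][0])
    return [
        [sum(w * d[i][j] for d, w in zip(deltas, weights)) for j in range(cols)]
        for i in range(rows)
    ]


def merge_into_base(W: Matrix, delta: Matrix) -> Matrix:
    """Add the combined update to the frozen base weight."""
    return [[w + d for w, d in zip(row_w, row_d)] for row_w, row_d in zip(W, delta)]


# Two toy rank-1 adapters for the same 2x2 weight (illustrative values only).
delta_a = lora_delta(B=[[1.0], [0.0]], A=[[1.0, 0.0]])
delta_b = lora_delta(B=[[0.0], [1.0]], A=[[0.0, 1.0]])
combined = weighted_combination([delta_a, delta_b], weights=[0.7, 0.3])
W_merged = merge_into_base([[1.0, 0.0], [0.0, 1.0]], combined)
print(W_merged)  # [[1.7, 0.0], [0.0, 1.3]]
```

Changing the weights changes how much each adapter contributes, and the base weight is never modified in place.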

### Parameter Efficient Tuning of LLMs for RLHF components such as Ranker and Policy

- Here is an example in the [trl](https://github.com/lvwerra/trl) library using PEFT+INT8 for tuning the policy model: [gpt2-sentiment_peft.py](https://github.com/lvwerra/trl/blob/main/examples/sentiment/scripts/gpt2-sentiment_peft.py) and the corresponding [Blog](https://huggingface.co/blog/trl-peft)
- Example using PEFT for instruction finetuning, the reward model and the policy: [stack_llama](https://github.com/lvwerra/trl/tree/main/examples/stack_llama/scripts) and the corresponding [Blog](https://huggingface.co/blog/stackllama)

### INT8 training of large models in Colab using PEFT LoRA and bitsandbytes

- Here is a demo on how to fine-tune [OPT-6.7b](https://huggingface.co/facebook/opt-6.7b) (14GB in fp16) in Google Colab: [Open In Colab](https://colab.research.google.com/drive/1jCkpikz0J2o20FBQmYmAGdiKmJGOMo-o?usp=sharing)

- Here is a demo on how to fine-tune [whisper-large](https://huggingface.co/openai/whisper-large-v2) (1.5B params, about 3GB in fp16) in Google Colab: [Open In Colab](https://colab.research.google.com/drive/1DOkD_5OUjFa0r5Ik3SgywJLJtEo2qLxO?usp=sharing) and [Open In Colab](https://colab.research.google.com/drive/1vhF8yueFqha3Y3CpTHN6q9EVcII9EYzs?usp=sharing)

### Save compute and storage even for medium and small models

With PEFT methods, users only need to store tiny checkpoints on the order of `MBs` while retaining performance comparable to full finetuning.

An example of using LoRA for the task of adapting `LayoutLMForTokenClassification` on the `FUNSD` dataset is given in `~examples/token_classification/PEFT_LoRA_LayoutLMForTokenClassification_on_FUNSD.py`. We can observe that with only `0.62 %` of parameters being trainable, we achieve performance (F1 0.777) comparable to full finetuning (F1 0.786), without any hyperparameter tuning runs to extract more performance, and the checkpoint is only `2.8MB`. Now, if there are `N` such datasets, just keep one of these PEFT models per dataset and save a lot of storage, without having to worry about catastrophic forgetting or overfitting of the backbone/base model.
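The storage argument for `N` datasets is simple arithmetic. Full finetuning keeps one complete model copy per dataset. PEFT keeps the shared base model once and adds one small adapter per dataset. In the sketch below, the `2.8MB` adapter size comes from the LayoutLM example above. The base-model size is a hypothetical placeholder, and the helper name is illustrative.

```python
from typing import Dict


def storage_mb(n_tasks: int, base_mb: float, adapter_mb: float) -> Dict[str, float]:
    """Disk usage for n_tasks fine-tuned variants of one base model (hypothetical helper).

    Full finetuning stores a complete model copy per task; PEFT stores the shared
    base model once plus one small adapter checkpoint per task.
    """
    return {
        "full_finetuning": n_tasks * base_mb,
        "peft": base_mb + n_tasks * adapter_mb,
    }


# adapter_mb=2.8 is the LayoutLM LoRA checkpoint size quoted above;
# base_mb=450.0 is a HYPOTHETICAL base-model size used only for illustration.
usage = storage_mb(n_tasks=10, base_mb=450.0, adapter_mb=2.8)
print(f"full finetuning: {usage['full_finetuning']:.1f} MB, PEFT: {usage['peft']:.1f} MB")
```

The gap widens linearly with `N`, because each extra dataset costs a full model copy under full finetuning but only one adapter under PEFT.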

Another example is fine-tuning [`roberta-large`](https://huggingface.co/roberta-large) on the [`MRPC` GLUE](https://huggingface.co/datasets/glue/viewer/mrpc) dataset using different PEFT methods. The notebooks are given in `~examples/sequence_classification`.

## PEFT + 🤗 Accelerate

PEFT models work with 🤗 Accelerate out of the box. Use 🤗 Accelerate for distributed training on various hardware such as GPUs, Apple Silicon devices, etc.
Use 🤗 Accelerate for inference on consumer hardware with limited resources.

### Example of PEFT model training using 🤗 Accelerate's DeepSpeed integration

DeepSpeed version `v0.8.0` is required. An example is provided in `~examples/conditional_generation/peft_lora_seq2seq_accelerate_ds_zero3_offload.py`.
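Because the example needs DeepSpeed `v0.8.0`, a launch script could fail fast on older installs. The sketch below assumes `v0.8.0` is a minimum version. The helpers `parse_version` and `meets_minimum` are hypothetical. The parser handles only dotted numeric versions and ignores pre-release or local suffixes, so a library such as `packaging`, which implements the full version grammar, is the more robust choice. The installed version is read with the standard-library `importlib.metadata.version`.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Parse 'X.Y.Z' into integers; pre-release/local suffixes are ignored (simplifying assumption)."""
    parts = []
    for piece in text.lstrip("v").split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def meets_minimum(installed: str, required: str = "0.8.0") -> bool:
    """Compare numerically, padding with zeros so that '0.8' equals '0.8.0'."""
    a, b = parse_version(installed), parse_version(required)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


if __name__ == "__main__":
    try:
        installed = version("deepspeed")
        status = "OK" if meets_minimum(installed) else "too old, need >= 0.8.0"
        print(f"deepspeed {installed}: {status}")
    except PackageNotFoundError:
        print("deepspeed is not installed")
```

Numeric comparison matters here: a plain string comparison would wrongly rank `0.10.0` below `0.8.0`.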

a. First, run `accelerate config --config_file ds_zero3_cpu.yaml` and answer the questionnaire.
Below are the contents of the config file.
```yaml
compute_environment: LOCAL_MACHINE
deepspeed_config:
  gradient_accumulation_steps: 1
# ...
same_network: true
use_cpu: false
```
b. Run the command below to launch the example script:
```bash
accelerate launch --config_file ds_zero3_cpu.yaml examples/conditional_generation/peft_lora_seq2seq_accelerate_ds_zero3_offload.py
```

### Example of PEFT model inference using 🤗 Accelerate's Big Model Inferencing capabilities

An example is provided in `~examples/causal_language_modeling/peft_lora_clm_accelerate_big_model_inference.ipynb`.

## Models support matrix

### Causal Language Modeling
| Model | LoRA | Prefix Tuning | P-Tuning | Prompt Tuning | IA3 |
|--------------| ---- | ---- | ---- | ---- | ---- |
| GPT-2 | ✅ | ✅ | ✅ | ✅ | ✅ |
| Bloom | ✅ | ✅ | ✅ | ✅ | ✅ |
| OPT | ✅ | ✅ | ✅ | ✅ | ✅ |
| GPT-Neo | ✅ | ✅ | ✅ | ✅ | ✅ |
| GPT-J | ✅ | ✅ | ✅ | ✅ | ✅ |
| GPT-NeoX-20B | ✅ | ✅ | ✅ | ✅ | ✅ |
| LLaMA | ✅ | ✅ | ✅ | ✅ | ✅ |
| ChatGLM | ✅ | ✅ | ✅ | ✅ | ✅ |

### Conditional Generation
| Model | LoRA | Prefix Tuning | P-Tuning | Prompt Tuning | IA3 |
| --------- | ---- | ---- | ---- | ---- | ---- |
| T5 | ✅ | ✅ | ✅ | ✅ | ✅ |
| BART | ✅ | ✅ | ✅ | ✅ | ✅ |

### Sequence Classification
| Model | LoRA | Prefix Tuning | P-Tuning | Prompt Tuning | IA3 |
| --------- | ---- | ---- | ---- | ---- | ---- |
| BERT | ✅ | ✅ | ✅ | ✅ | ✅ |
| RoBERTa | ✅ | ✅ | ✅ | ✅ | ✅ |
| GPT-2 | ✅ | ✅ | ✅ | ✅ | |
| Bloom | ✅ | ✅ | ✅ | ✅ | |
| OPT | ✅ | ✅ | ✅ | ✅ | |
| GPT-Neo | ✅ | ✅ | ✅ | ✅ | |
| GPT-J | ✅ | ✅ | ✅ | ✅ | |
| Deberta | ✅ | | ✅ | ✅ | |
| Deberta-v2 | ✅ | | ✅ | ✅ | |

### Token Classification
| Model | LoRA | Prefix Tuning | P-Tuning | Prompt Tuning | IA3 |
| --------- | ---- | ---- | ---- | ---- | ---- |
| BERT | ✅ | ✅ | | | |
| RoBERTa | ✅ | ✅ | | | |
| GPT-2 | ✅ | ✅ | | | |
| Bloom | ✅ | ✅ | | | |
| OPT | ✅ | ✅ | | | |
| GPT-Neo | ✅ | ✅ | | | |
| GPT-J | ✅ | ✅ | | | |
| Deberta | ✅ | | | | |
| Deberta-v2 | ✅ | | | | |

### Text-to-Image Generation

| Model | LoRA | Prefix Tuning | P-Tuning | Prompt Tuning | IA3 |
| --------- | ---- | ---- | ---- | ---- | ---- |
| Stable Diffusion | ✅ | | | | |

### Image Classification

| Model | LoRA | Prefix Tuning | P-Tuning | Prompt Tuning | IA3 |
| --------- | ---- | ---- | ---- | ---- | ---- |
| ViT | ✅ | | | | |
| Swin | ✅ | | | | |

### Image to text (Multi-modal models)

| Model | LoRA | Prefix Tuning | P-Tuning | Prompt Tuning | IA3 |
| --------- | ---- | ---- | ---- | ---- | ---- |
| Blip-2 | ✅ | | | | |

___Note that we have tested LoRA for [ViT](https://huggingface.co/docs/transformers/model_doc/vit) and [Swin](https://huggingface.co/docs/transformers/model_doc/swin) for fine-tuning on image classification. However, it should be possible to use LoRA for any compatible model [provided](https://huggingface.co/models?pipeline_tag=image-classification&sort=downloads&search=vit) by 🤗 Transformers. Check out the respective examples to learn more. If you run into problems, please open an issue.___

The same principle applies to [segmentation models](https://huggingface.co/models?pipeline_tag=image-segmentation&sort=downloads) as well.

### Semantic Segmentation

| Model | LoRA | Prefix Tuning | P-Tuning | Prompt Tuning | IA3 |
| --------- | ---- | ---- | ---- | ---- | ---- |
| SegFormer | ✅ | | | | |

## Caveats:

```python
model = accelerator.prepare(model)
```

An example of parameter efficient tuning with the [`mt0-xxl`](https://huggingface.co/bigscience/mt0-xxl) base model using 🤗 Accelerate is provided in `~examples/conditional_generation/peft_lora_seq2seq_accelerate_fsdp.py`.
a. First, run `accelerate config --config_file fsdp_config.yaml` and answer the questionnaire.

Below are the contents of the config file.
```yaml
command_file: null
commands: null
compute_environment: LOCAL_MACHINE
# ...
tpu_zone: null
use_cpu: false
```
b. Run the command below to launch the example script:
```bash
accelerate launch --config_file fsdp_config.yaml examples/conditional_generation/peft_lora_seq2seq_accelerate_fsdp.py
```

2. When using ZeRO3 with `zero3_init_flag=True`, if you find that GPU memory increases with training steps, you may need to update DeepSpeed to a version that includes [deepspeed commit 42858a9891422abc](https://github.com/microsoft/DeepSpeed/commit/42858a9891422abcecaa12c1bd432d28d33eb0d4). The related issue is [[BUG] Peft Training with Zero.Init() and Zero3 will increase GPU memory every forward step](https://github.com/microsoft/DeepSpeed/issues/3002).

## Backlog:
- [x] Add tests
- [x] Multi Adapter training and inference support
- [x] Add more use cases and examples
- [x] Integrate `(IA)^3`, `AdaptionPrompt`
- [ ] Explore and possibly integrate methods like `Bottleneck Adapters`, ...

## Citing 🤗 PEFT

```bibtex
@Misc{peft,
  title =        {PEFT: State-of-the-art Parameter-Efficient Fine-Tuning methods},
  author =       {Sourab Mangrulkar and Sylvain Gugger and Lysandre Debut and Younes Belkada and Sayak Paul},
  howpublished = {\url{https://github.com/huggingface/peft}},
  year =         {2022}
}
```

docker/peft-cpu/Dockerfile

```dockerfile
# Builds CPU docker image of PyTorch
# Uses multi-staged approach to reduce size
# Stage 1
# Use base conda image to reduce time
FROM continuumio/miniconda3:latest AS compile-image
# Specify py version
ENV PYTHON_VERSION=3.8
# Install apt libs - copied from https://github.com/huggingface/accelerate/blob/main/docker/accelerate-gpu/Dockerfile
RUN apt-get update && \
    apt-get install -y curl git wget software-properties-common git-lfs && \
    apt-get clean && \
    rm -rf /var/lib/apt/lists/*

# Install audio-related libraries
RUN apt-get update && \
    apt install -y ffmpeg

RUN apt install -y libsndfile1-dev
RUN git lfs install

# Create our conda env - copied from https://github.com/huggingface/accelerate/blob/main/docker/accelerate-gpu/Dockerfile
RUN conda create --name peft python=${PYTHON_VERSION} ipython jupyter pip
RUN python3 -m pip install --no-cache-dir --upgrade pip

# Below is copied from https://github.com/huggingface/accelerate/blob/main/docker/accelerate-gpu/Dockerfile
# We don't install pytorch here yet since CUDA isn't available
# instead we use the direct torch wheel
ENV PATH /opt/conda/envs/peft/bin:$PATH
# Activate our bash shell
RUN chsh -s /bin/bash
SHELL ["/bin/bash", "-c"]
# Activate the conda env and install transformers + accelerate from source
RUN source activate peft && \
    python3 -m pip install --no-cache-dir \
    librosa \
    "soundfile>=0.12.1" \
    scipy \
    git+https://github.com/huggingface/transformers \
    git+https://github.com/huggingface/accelerate \
    "peft[test] @ git+https://github.com/huggingface/peft"

# Install apt libs
RUN apt-get update && \
    apt-get install -y curl git wget && \
    apt-get clean && \
    rm -rf /var/lib/apt/lists/*

RUN echo "source activate peft" >> ~/.profile

# Activate the virtualenv
CMD ["/bin/bash"]
```

docker/peft-gpu/Dockerfile

```dockerfile
# Builds GPU docker image of PyTorch
# Uses multi-staged approach to reduce size
# Stage 1
# Use base conda image to reduce time
FROM continuumio/miniconda3:latest AS compile-image
# Specify py version
ENV PYTHON_VERSION=3.8
# Install apt libs - copied from https://github.com/huggingface/accelerate/blob/main/docker/accelerate-gpu/Dockerfile
RUN apt-get update && \
    apt-get install -y curl git wget software-properties-common git-lfs && \
    apt-get clean && \
    rm -rf /var/lib/apt/lists/*

# Install audio-related libraries
RUN apt-get update && \
    apt install -y ffmpeg

RUN apt install -y libsndfile1-dev
RUN git lfs install

# Create our conda env - copied from https://github.com/huggingface/accelerate/blob/main/docker/accelerate-gpu/Dockerfile
RUN conda create --name peft python=${PYTHON_VERSION} ipython jupyter pip
RUN python3 -m pip install --no-cache-dir --upgrade pip

# Below is copied from https://github.com/huggingface/accelerate/blob/main/docker/accelerate-gpu/Dockerfile
# We don't install pytorch here yet since CUDA isn't available
# instead we use the direct torch wheel
ENV PATH /opt/conda/envs/peft/bin:$PATH
# Activate our bash shell
RUN chsh -s /bin/bash
SHELL ["/bin/bash", "-c"]
# Activate the conda env and install transformers + accelerate from source
RUN source activate peft && \
    python3 -m pip install --no-cache-dir \
    librosa \
    "soundfile>=0.12.1" \
    scipy \
    git+https://github.com/huggingface/transformers \
    git+https://github.com/huggingface/accelerate \
    "peft[test] @ git+https://github.com/huggingface/peft"

RUN python3 -m pip install --no-cache-dir bitsandbytes

# Stage 2
FROM nvidia/cuda:11.3.1-devel-ubuntu20.04 AS build-image
COPY --from=compile-image /opt/conda /opt/conda
ENV PATH /opt/conda/bin:$PATH

# Install apt libs
RUN apt-get update && \
    apt-get install -y curl git wget && \
    apt-get clean && \
    rm -rf /var/lib/apt/lists/*

RUN echo "source activate peft" >> ~/.profile

# Activate the virtualenv
CMD ["/bin/bash"]
```

docs/Makefile

```makefile
# Minimal makefile for Sphinx documentation
#

# You can set these variables from the command line.
SPHINXOPTS =
SPHINXBUILD = sphinx-build
SOURCEDIR = source
BUILDDIR = _build

# Put it first so that "make" without argument is like "make help".
help:
	@$(SPHINXBUILD) -M help "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

.PHONY: help Makefile

# Catch-all target: route all unknown targets to Sphinx using the new
# "make mode" option. $(O) is meant as a shortcut for $(SPHINXOPTS).
%: Makefile
	@$(SPHINXBUILD) -M $@ "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)
```

docs/README.md

<!---
Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License");
you may not use this file except in compliance with the License.
You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License.
-->

# Generating the documentation

To generate the documentation, you first have to build it. Several packages are necessary to build the docs;
you can install them with the following command, from the root of the code repository:

```bash
pip install -e ".[docs]"
```

Then you need to install our special tool that builds the documentation:

```bash
pip install git+https://github.com/huggingface/doc-builder
```

---
**NOTE**

You only need to generate the documentation to inspect it locally (if you're planning changes and want to
check how they look before committing, for instance). You don't have to commit the built documentation.

---

## Building the documentation

Once you have set up the `doc-builder` and additional packages, you can generate the documentation by
typing the following command:

```bash
doc-builder build peft docs/source/ --build_dir ~/tmp/test-build
```

You can adapt the `--build_dir` to set any temporary folder that you prefer. This command will create it and generate
the MDX files that will be rendered as the documentation on the main website. You can inspect them in your favorite
Markdown editor.

## Previewing the documentation

To preview the docs, first install the `watchdog` module with:

```bash
pip install watchdog
```

Then run the following command:

```bash
doc-builder preview {package_name} {path_to_docs}
```

For example:

```bash
doc-builder preview peft docs/source
```

The docs will be viewable at [http://localhost:3000](http://localhost:3000). You can also preview the docs once you have opened a PR: a bot will add a comment with a link to where the documentation with your changes lives.

---
**NOTE**

The `preview` command only works with existing doc files. When you add a completely new file, you need to update `_toctree.yml` and restart the `preview` command (`ctrl-c` to stop it and call `doc-builder preview ...` again).

---

## Adding a new element to the navigation bar

Accepted files are Markdown (.md or .mdx).

Create a file with its extension and put it in the source directory. You can then link it to the toc-tree by putting
the filename without the extension in the [`_toctree.yml`](https://github.com/huggingface/peft/blob/main/docs/source/_toctree.yml) file.
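The rule above (Markdown source only, referenced by its name without the extension) can be checked before you edit the toc-tree. The helper below is a hypothetical sketch. It validates the extension and returns an item with `local` and `title` keys, which is the layout commonly seen in Hugging Face `_toctree.yml` files. Treat that schema as an assumption, check it against the existing file, and place the item under the appropriate `sections:` list. The example path is also hypothetical.

```python
from pathlib import Path

ACCEPTED_SUFFIXES = {".md", ".mdx"}


def toctree_entry(doc_path: str, title: str) -> str:
    """Return a hypothetical `_toctree.yml` item for a new doc file.

    `local` is the file name without its extension, as described above.
    """
    path = Path(doc_path)
    if path.suffix not in ACCEPTED_SUFFIXES:
        raise ValueError(f"{path.name}: only .md or .mdx files are accepted")
    return f"- local: {path.stem}\n  title: {title}"


print(toctree_entry("docs/source/quicktour.mdx", "Quicktour"))
```

Rejecting other extensions early mirrors the "Accepted files" rule, so an unsupported file fails here and not later in the doc build.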

## Renaming section headers and moving sections

It helps to keep the old links working when renaming the section header and/or moving sections from one document to another. This is because the old links are likely to be used in Issues, Forums, and Social media, and it makes for a much better user experience if users reading those months later can still easily navigate to the originally intended information.

Therefore, we simply keep a little map of moved sections at the end of the document where the original section was. The key is to preserve the original anchor.

So if you renamed a section from "Section A" to "Section B", then you can add at the end of the file:

```
Sections that were moved:

[ <a href="#section-b">Section A</a><a id="section-a"></a> ]
```
and of course, if you moved it to another file, then:

```
Sections that were moved:

[ <a href="../new-file#section-b">Section A</a><a id="section-a"></a> ]
```

Use the relative style to link to the new file so that the versioned docs continue to work.
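The two "Sections that were moved" entries above can be generated from the old and new section titles. In this sketch, `anchor` and `moved_section_entry` are hypothetical helpers. `anchor` approximates how heading anchors are derived: it lowercases the title, drops punctuation and turns spaces into hyphens. The doc builder's actual slug rules may differ for headings with special characters, so compare the result with the rendered page.

```python
import re


def anchor(title: str) -> str:
    """Approximate a Markdown heading anchor: lowercase, drop punctuation, spaces -> hyphens."""
    slug = re.sub(r"[^\w\s-]", "", title.lower())
    return re.sub(r"\s+", "-", slug.strip())


def moved_section_entry(old_title: str, new_title: str, new_file: str = "") -> str:
    """Build the map line that keeps the old anchor working and points to the new location."""
    href = f"{new_file}#{anchor(new_title)}"
    return f'[ <a href="{href}">{old_title}</a><a id="{anchor(old_title)}"></a> ]'


print(moved_section_entry("Section A", "Section B"))
print(moved_section_entry("Section A", "Section B", new_file="../new-file"))
```

Passing a relative `new_file` such as `../new-file` follows the advice above, so the link keeps working across versioned docs.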

## Writing Documentation - Specification