mirror of

https://github.com/huggingface/accelerate.git

synced 2025-11-16 15:24:34 +08:00

Compare commits

37 Commits

fix-genera

...

v0.27.0

| Author | SHA1 | Date | |

|---|---|---|---|

| b7087be5f6 | |||

| f75c6245ba | |||

| 9c1d5bac15 | |||

| b0b867da85 | |||

| 433d693b70 | |||

| c3aec59b12 | |||

| 9467a62744 | |||

| 86228e321d | |||

| 06b138d845 | |||

| 0867c09318 | |||

| 0e1ee4b92d | |||

| d8a64cb79d | |||

| b703efdcc3 | |||

| 68f54720dc | |||

| 46f1391b79 | |||

| cd7ff5e137 | |||

| f4b411f84b | |||

| 7ba64e632c | |||

| 8b770a7dab | |||

| 3d8b998fbb | |||

| 03365a3d17 | |||

| 7aafa25673 | |||

| f88661b5d9 | |||

| 581fabba48 | |||

| e909eb34e2 | |||

| 7644a02e6b | |||

| 162a82164e | |||

| 0d6a5fa8ee | |||

| 53845d2596 | |||

| 5ec00da2be | |||

| 649e65b542 | |||

| 14d7c3fca6 | |||

| c7d11d7e40 | |||

| ec4f01a099 | |||

| f5c01eeb63 | |||

| 20ff458d80 | |||

| 6719cb6db3 |

@ -152,7 +152,7 @@ Follow these steps to start contributing:

|

||||

$ make test

|

||||

```

|

||||

|

||||

`accelerate` relies on `black` and `ruff` to format its source code

|

||||

`accelerate` relies on `ruff` to format its source code

|

||||

consistently. After you make changes, apply automatic style corrections and code verifications

|

||||

that can't be automated in one go with:

|

||||

|

||||

@ -235,4 +235,4 @@ $ python -m pytest -sv ./tests

|

||||

In fact, that's how `make test` is implemented (sans the `pip install` line)!

|

||||

|

||||

You can specify a smaller set of tests in order to test only the feature

|

||||

you're working on.

|

||||

you're working on.

|

||||

|

||||

18

Makefile

18

Makefile

@ -1,6 +1,6 @@

|

||||

.PHONY: quality style test docs utils

|

||||

|

||||

check_dirs := tests src examples benchmarks utils

|

||||

check_dirs := .

|

||||

|

||||

# Check that source code meets quality standards

|

||||

|

||||

@ -12,20 +12,17 @@ extra_quality_checks:

|

||||

|

||||

# this target runs checks on all files

|

||||

quality:

|

||||

black --required-version 23 --check $(check_dirs)

|

||||

ruff $(check_dirs)

|

||||

ruff format --check $(check_dirs)

|

||||

doc-builder style src/accelerate docs/source --max_len 119 --check_only

|

||||

|

||||

# Format source code automatically and check is there are any problems left that need manual fixing

|

||||

style:

|

||||

black --required-version 23 $(check_dirs)

|

||||

ruff $(check_dirs) --fix

|

||||

ruff format $(check_dirs)

|

||||

doc-builder style src/accelerate docs/source --max_len 119

|

||||

|

||||

# Run tests for the library

|

||||

test:

|

||||

python -m pytest -s -v ./tests/ --ignore=./tests/test_examples.py $(if $(IS_GITHUB_CI),--report-log "$(PYTORCH_VERSION)_all.log",)

|

||||

|

||||

test_big_modeling:

|

||||

python -m pytest -s -v ./tests/test_big_modeling.py ./tests/test_modeling_utils.py $(if $(IS_GITHUB_CI),--report-log "$(PYTORCH_VERSION)_big_modeling.log",)

|

||||

|

||||

@ -42,6 +39,15 @@ test_deepspeed:

|

||||

test_fsdp:

|

||||

python -m pytest -s -v ./tests/fsdp $(if $(IS_GITHUB_CI),--report-log "$(PYTORCH_VERSION)_fsdp.log",)

|

||||

|

||||

# Since the new version of pytest will *change* how things are collected, we need `deepspeed` to

|

||||

# run after test_core and test_cli

|

||||

test:

|

||||

$(MAKE) test_core

|

||||

$(MAKE) test_cli

|

||||

$(MAKE) test_big_modeling

|

||||

$(MAKE) test_deepspeed

|

||||

$(MAKE) test_fsdp

|

||||

|

||||

test_examples:

|

||||

python -m pytest -s -v ./tests/test_examples.py $(if $(IS_GITHUB_CI),--report-log "$(PYTORCH_VERSION)_examples.log",)

|

||||

|

||||

|

||||

@ -1,4 +1,4 @@

|

||||

# Builds GPU docker image of PyTorch

|

||||

# Builds GPU docker image of PyTorch specifically

|

||||

# Uses multi-staged approach to reduce size

|

||||

# Stage 1

|

||||

# Use base conda image to reduce time

|

||||

@ -19,7 +19,8 @@ ENV PATH /opt/conda/envs/accelerate/bin:$PATH

|

||||

# Activate our bash shell

|

||||

RUN chsh -s /bin/bash

|

||||

SHELL ["/bin/bash", "-c"]

|

||||

# Activate the conda env and install torch + accelerate

|

||||

# Activate the conda env, install mpy4pi, and install torch + accelerate

|

||||

RUN source activate accelerate && conda install -c conda-forge mpi4py

|

||||

RUN source activate accelerate && \

|

||||

python3 -m pip install --no-cache-dir \

|

||||

git+https://github.com/huggingface/accelerate#egg=accelerate[testing,test_trackers] \

|

||||

|

||||

@ -89,6 +89,8 @@

|

||||

title: Logging

|

||||

- local: package_reference/big_modeling

|

||||

title: Working with large models

|

||||

- local: package_reference/inference

|

||||

title: Distributed inference with big models

|

||||

- local: package_reference/kwargs

|

||||

title: Kwargs handlers

|

||||

- local: package_reference/utilities

|

||||

|

||||

20

docs/source/package_reference/inference.md

Normal file

20

docs/source/package_reference/inference.md

Normal file

@ -0,0 +1,20 @@

|

||||

<!--Copyright 2024 The HuggingFace Team. All rights reserved.

|

||||

|

||||

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

|

||||

the License. You may obtain a copy of the License at

|

||||

|

||||

http://www.apache.org/licenses/LICENSE-2.0

|

||||

|

||||

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

|

||||

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

|

||||

specific language governing permissions and limitations under the License.

|

||||

|

||||

⚠️ Note that this file is in Markdown but contain specific syntax for our doc-builder (similar to MDX) that may not be

|

||||

rendered properly in your Markdown viewer.

|

||||

-->

|

||||

|

||||

# The inference API

|

||||

|

||||

These docs refer to the [PiPPy](https://github.com/PyTorch/PiPPy) integration.

|

||||

|

||||

[[autodoc]] inference.prepare_pippy

|

||||

@ -15,12 +15,18 @@ rendered properly in your Markdown viewer.

|

||||

|

||||

# Distributed Inference with 🤗 Accelerate

|

||||

|

||||

Distributed inference is a common use case, especially with natural language processing (NLP) models. Users often want to

|

||||

send a number of different prompts, each to a different GPU, and then get the results back. This also has other cases

|

||||

outside of just NLP, however for this tutorial we will focus on just this idea of each GPU receiving a different prompt,

|

||||

and then returning the results.

|

||||

Distributed inference can fall into three brackets:

|

||||

|

||||

## The Problem

|

||||

1. Loading an entire model onto each GPU and sending chunks of a batch through each GPU's model copy at a time

|

||||

2. Loading parts of a model onto each GPU and processing a single input at one time

|

||||

3. Loading parts of a model onto each GPU and using what is called scheduled Pipeline Parallelism to combine the two prior techniques.

|

||||

|

||||

We're going to go through the first and the last bracket, showcasing how to do each as they are more realistic scenarios.

|

||||

|

||||

|

||||

## Sending chunks of a batch automatically to each loaded model

|

||||

|

||||

This is the most memory-intensive solution, as it requires each GPU to keep a full copy of the model in memory at a given time.

|

||||

|

||||

Normally when doing this, users send the model to a specific device to load it from the CPU, and then move each prompt to a different device.

|

||||

|

||||

@ -55,7 +61,6 @@ a simple way to manage this. (To learn more, check out the relevant section in t

|

||||

|

||||

Can it manage it? Yes. Does it add unneeded extra code however: also yes.

|

||||

|

||||

## The Solution

|

||||

|

||||

With 🤗 Accelerate, we can simplify this process by using the [`Accelerator.split_between_processes`] context manager (which also exists in `PartialState` and `AcceleratorState`).

|

||||

This function will automatically split whatever data you pass to it (be it a prompt, a set of tensors, a dictionary of the prior data, etc.) across all the processes (with a potential

|

||||

@ -134,3 +139,97 @@ with distributed_state.split_between_processes(["a dog", "a cat", "a chicken"],

|

||||

|

||||

On the first GPU, the prompts will be `["a dog", "a cat"]`, and on the second GPU it will be `["a chicken", "a chicken"]`.

|

||||

Make sure to drop the final sample, as it will be a duplicate of the previous one.

|

||||

|

||||

## Memory-efficient pipeline parallelism (experimental)

|

||||

|

||||

This next part will discuss using *pipeline parallelism*. This is an **experimental** API utilizing the [PiPPy library by PyTorch](https://github.com/pytorch/PiPPy/) as a native solution.

|

||||

|

||||

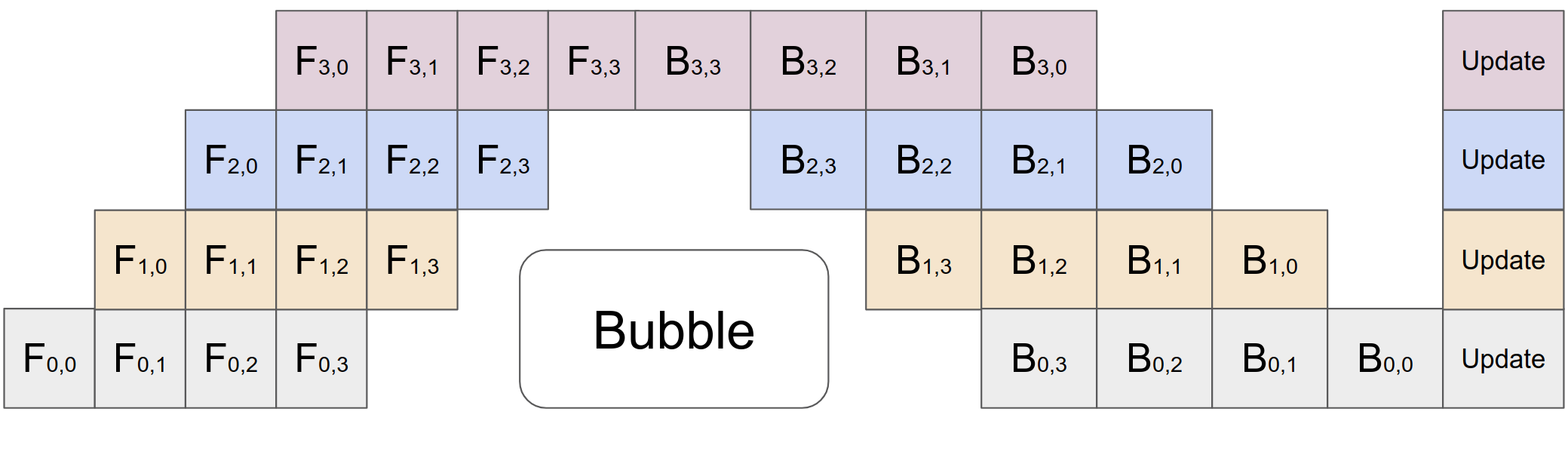

The general idea with pipeline parallelism is: say you have 4 GPUs and a model big enough it can be *split* on four GPUs using `device_map="auto"`. With this method you can send in 4 inputs at a time (for example here, any amount works) and each model chunk will work on an input, then receive the next input once the prior chunk finished, making it *much* more efficient **and faster** than the method described earlier. Here's a visual taken from the PyTorch repository:

|

||||

|

||||

|

||||

|

||||

To illustrate how you can use this with Accelerate, we have created an [example zoo](https://github.com/huggingface/accelerate/tree/main/examples/inference) showcasing a number of different models and situations. In this tutorial, we'll show this method for GPT2 across two GPUs.

|

||||

|

||||

Before you proceed, please make sure you have the latest pippy installed by running the following:

|

||||

|

||||

```bash

|

||||

pip install torchpippy

|

||||

```

|

||||

|

||||

We require at least version 0.2.0. To confirm that you have the correct version, run `pip show torchpippy`.

|

||||

|

||||

Start by creating the model on the CPU:

|

||||

|

||||

```{python}

|

||||

from transformers import GPT2ForSequenceClassification, GPT2Config

|

||||

|

||||

config = GPT2Config()

|

||||

model = GPT2ForSequenceClassification(config)

|

||||

model.eval()

|

||||

```

|

||||

|

||||

Next you'll need to create some example inputs to use. These help PiPPy trace the model.

|

||||

|

||||

<Tip warning={true}>

|

||||

However you make this example will determine the relative batch size that will be used/passed

|

||||

through the model at a given time, so make sure to remember how many items there are!

|

||||

</Tip>

|

||||

|

||||

```{python}

|

||||

input = torch.randint(

|

||||

low=0,

|

||||

high=config.vocab_size,

|

||||

size=(2, 1024), # bs x seq_len

|

||||

device="cpu",

|

||||

dtype=torch.int64,

|

||||

requires_grad=False,

|

||||

)

|

||||

```

|

||||

Next we need to actually perform the tracing and get the model ready. To do so, use the [`inference.prepare_pippy`] function and it will fully wrap the model for pipeline parallelism automatically:

|

||||

|

||||

```{python}

|

||||

from accelerate.inference import prepare_pippy

|

||||

example_inputs = {"input_ids": input}

|

||||

model = prepare_pippy(model, example_args=(input,))

|

||||

```

|

||||

|

||||

<Tip>

|

||||

|

||||

There are a variety of parameters you can pass through to `prepare_pippy`:

|

||||

|

||||

* `split_points` lets you determine what layers to split the model at. By default we use wherever `device_map="auto" declares, such as `fc` or `conv1`.

|

||||

|

||||

* `num_chunks` determines how the batch will be split and sent to the model itself (so `num_chunks=1` with four split points/four GPUs will have a naive MP where a single input gets passed between the four layer split points)

|

||||

|

||||

</Tip>

|

||||

|

||||

From here, all that's left is to actually perform the distributed inference!

|

||||

|

||||

<Tip warning={true}>

|

||||

|

||||

When passing inputs, we highly recommend to pass them in as a tuple of arguments. Using `kwargs` is supported, however, this approach is experimental.

|

||||

</Tip>

|

||||

|

||||

```{python}

|

||||

args = some_more_arguments

|

||||

with torch.no_grad():

|

||||

output = model(*args)

|

||||

```

|

||||

|

||||

When finished all the data will be on the last process only:

|

||||

|

||||

```{python}

|

||||

from accelerate import PartialState

|

||||

if PartialState().is_last_process:

|

||||

print(output)

|

||||

```

|

||||

|

||||

<Tip>

|

||||

|

||||

If you pass in `gather_output=True` to [`inference.prepare_pippy`], the output will be sent

|

||||

across to all the GPUs afterwards without needing the `is_last_process` check. This is

|

||||

`False` by default as it incurs a communication call.

|

||||

|

||||

</Tip>

|

||||

|

||||

And that's it! To explore more, please check out the inference examples in the [Accelerate repo](https://github.com/huggingface/accelerate/tree/main/examples/inference) and our [documentation](../package_reference/inference) as we work to improving this integration.

|

||||

|

||||

@ -73,7 +73,7 @@ accelerate launch examples/nlp_example.py

|

||||

|

||||

Currently, `Accelerate` supports the following config through the CLI:

|

||||

|

||||

`fsdp_sharding_strategy`: [1] FULL_SHARD (shards optimizer states, gradients and parameters), [2] SHARD_GRAD_OP (shards optimizer states and gradients), [3] NO_SHARD (DDP), [4] HYBRID_SHARD (shards optimizer states, gradients and parameters within each node while each node has full copy), [5] HYBRID_SHARD_ZERO2 (shards optimizer states and gradients within each node while each node has full copy)

|

||||

`fsdp_sharding_strategy`: [1] FULL_SHARD (shards optimizer states, gradients and parameters), [2] SHARD_GRAD_OP (shards optimizer states and gradients), [3] NO_SHARD (DDP), [4] HYBRID_SHARD (shards optimizer states, gradients and parameters within each node while each node has full copy), [5] HYBRID_SHARD_ZERO2 (shards optimizer states and gradients within each node while each node has full copy). For more information, please refer the official [PyTorch docs](https://pytorch.org/docs/stable/fsdp.html#torch.distributed.fsdp.ShardingStrategy).

|

||||

|

||||

`fsdp_offload_params` : Decides Whether to offload parameters and gradients to CPU

|

||||

|

||||

@ -91,7 +91,7 @@ Currently, `Accelerate` supports the following config through the CLI:

|

||||

|

||||

`fsdp_use_orig_params`: If True, allows non-uniform `requires_grad` during init, which means support for interspersed frozen and trainable parameters. This setting is useful in cases such as parameter-efficient fine-tuning as discussed in [this post](https://dev-discuss.pytorch.org/t/rethinking-pytorch-fully-sharded-data-parallel-fsdp-from-first-principles/1019). This option also allows one to have multiple optimizer param groups. This should be `True` when creating an optimizer before preparing/wrapping the model with FSDP.

|

||||

|

||||

`fsdp_cpu_ram_efficient_loading`: Only applicable for 🤗 Transformers models. If True, only the first process loads the pretrained model checkpoint while all other processes have empty weights. This should be set to False if you experience errors when loading the pretrained 🤗 Transformers model via `from_pretrained` method. When this setting is True `fsdp_sync_module_states` also must to be True, otherwise all the processes except the main process would have random weights leading to unexpected behaviour during training.

|

||||

`fsdp_cpu_ram_efficient_loading`: Only applicable for 🤗 Transformers models. If True, only the first process loads the pretrained model checkpoint while all other processes have empty weights. This should be set to False if you experience errors when loading the pretrained 🤗 Transformers model via `from_pretrained` method. When this setting is True `fsdp_sync_module_states` also must to be True, otherwise all the processes except the main process would have random weights leading to unexpected behaviour during training. For this to work, make sure the distributed process group is initialized before calling Transformers `from_pretrained` method. When using 🤗 Trainer API, the distributed process group is initialized when you create an instance of `TrainingArguments` class.

|

||||

|

||||

`fsdp_sync_module_states`: If True, each individually wrapped FSDP unit will broadcast module parameters from rank 0.

|

||||

|

||||

@ -161,6 +161,13 @@ When using transformers `save_pretrained`, pass `state_dict=accelerator.get_stat

|

||||

|

||||

You can then pass `state` into the `save_pretrained` method. There are several modes for `StateDictType` and `FullStateDictConfig` that you can use to control the behavior of `state_dict`. For more information, see the [PyTorch documentation](https://pytorch.org/docs/stable/fsdp.html).

|

||||

|

||||

|

||||

## Mapping between FSDP sharding strategies and DeepSpeed ZeRO Stages

|

||||

* `FULL_SHARD` maps to the DeepSpeed `ZeRO Stage-3`. Shards optimizer states, gradients and parameters.

|

||||

* `SHARD_GRAD_OP` maps to the DeepSpeed `ZeRO Stage-2`. Shards optimizer states and gradients.

|

||||

* `NO_SHARD` maps to `ZeRO Stage-0`. No sharding wherein each GPU has full copy of model, optimizer states and gradients.

|

||||

* `HYBRID_SHARD` maps to `ZeRO++ Stage-3` wherein `zero_hpz_partition_size=<num_gpus_per_node>`. Here, this will shard optimizer states, gradients and parameters within each node while each node has full copy.

|

||||

|

||||

## A few caveats to be aware of

|

||||

|

||||

- In case of multiple models, pass the optimizers to the prepare call in the same order as corresponding models else `accelerator.save_state()` and `accelerator.load_state()` will result in wrong/unexpected behaviour.

|

||||

|

||||

@ -207,6 +207,22 @@ In [/slurm/submit_multigpu.sh](./slurm/submit_multigpu.sh) the only parameter in

|

||||

|

||||

In [/slurm/submit_multinode.sh](./slurm/submit_multinode.sh) we must specify the number of nodes that will be part of the training (`--num_machines`), how many GPUs we will use in total (`--num_processes`), the [`backend`](https://pytorch.org/docs/stable/elastic/run.html#note-on-rendezvous-backend), `--main_process_ip` which will be the address the master node and the `--main_process_port`.

|

||||

|

||||

In both scripts, we run `activateEnviroment.sh` at the beginning. This script should contain the necessary instructions to initialize the environment for execution. Below, we show an example that loads the necessary libraries ([Environment modules](https://github.com/cea-hpc/modules)), activates the Python environment, and sets up various environment variables, most of them to run the scripts in offline mode in case we don't have internet connection from the cluster.

|

||||

|

||||

```bash

|

||||

# activateEnvironment.sh

|

||||

module purge

|

||||

module load anaconda3/2020.02 cuda/10.2 cudnn/8.0.5 nccl/2.9.9 arrow/7.0.0 openmpi

|

||||

source activate /home/nct01/nct01328/pytorch_antoni_local

|

||||

|

||||

export HF_HOME=/gpfs/projects/nct01/nct01328/

|

||||

export HF_LOCAL_HOME=/gpfs/projects/nct01/nct01328/HF_LOCAL

|

||||

export HF_DATASETS_OFFLINE=1

|

||||

export TRANSFORMERS_OFFLINE=1

|

||||

export PYTHONPATH=/home/nct01/nct01328/transformers-in-supercomputers:$PYTHONPATH

|

||||

export GPUS_PER_NODE=4

|

||||

```

|

||||

|

||||

## Finer Examples

|

||||

|

||||

While the first two scripts are extremely barebones when it comes to what you can do with accelerate, more advanced features are documented in two other locations.

|

||||

|

||||

@ -130,8 +130,6 @@ def training_function(config, args):

|

||||

accelerator = Accelerator(

|

||||

cpu=args.cpu, mixed_precision=args.mixed_precision, gradient_accumulation_steps=gradient_accumulation_steps

|

||||

)

|

||||

if accelerator.distributed_type not in [DistributedType.NO, DistributedType.MULTI_CPU, DistributedType.MULTI_GPU]:

|

||||

raise NotImplementedError("LocalSGD is supported only for CPUs and GPUs (no DeepSpeed or MegatronLM)")

|

||||

# Sample hyper-parameters for learning rate, batch size, seed and a few other HPs

|

||||

lr = config["lr"]

|

||||

num_epochs = int(config["num_epochs"])

|

||||

|

||||

@ -405,7 +405,7 @@ def main():

|

||||

f"The tokenizer picked seems to have a very large `model_max_length` ({tokenizer.model_max_length}). "

|

||||

"Picking 1024 instead. You can change that default value by passing --block_size xxx."

|

||||

)

|

||||

block_size = 1024

|

||||

block_size = 1024

|

||||

else:

|

||||

if args.block_size > tokenizer.model_max_length:

|

||||

logger.warning(

|

||||

|

||||

62

examples/inference/README.md

Normal file

62

examples/inference/README.md

Normal file

@ -0,0 +1,62 @@

|

||||

# Distributed inference examples with PiPPy

|

||||

|

||||

This repo contains a variety of tutorials for using the [PiPPy](https://github.com/PyTorch/PiPPy) pipeline parallelism library with accelerate. You will find examples covering:

|

||||

|

||||

1. How to trace the model using `accelerate.prepare_pippy`

|

||||

2. How to specify inputs based on what the model expects (when to use `kwargs`, `args`, and such)

|

||||

3. How to gather the results at the end.

|

||||

|

||||

## Installation

|

||||

|

||||

This requires the `main` branch of accelerate (or a version at least 0.27.0), `pippy` version of 0.2.0 or greater, and at least python 3.9. Please install using `pip install .` to pull from the `setup.py` in this repo, or run manually:

|

||||

|

||||

```bash

|

||||

pip install 'accelerate>=0.27.0' 'torchpippy>=0.2.0'

|

||||

```

|

||||

|

||||

## Running code

|

||||

|

||||

You can either use `torchrun` or the recommended way of `accelerate launch` (without needing to run `accelerate config`) on each script:

|

||||

|

||||

```bash

|

||||

accelerate launch bert.py

|

||||

```

|

||||

|

||||

Or:

|

||||

|

||||

```bash

|

||||

accelerate launch --num_processes {NUM_GPUS} bert.py

|

||||

```

|

||||

|

||||

Or:

|

||||

|

||||

```bash

|

||||

torchrun --nproc-per-node {NUM_GPUS} bert.py

|

||||

```

|

||||

|

||||

## General speedups

|

||||

|

||||

One can expect that PiPPy will outperform native model parallism by a multiplicative factor since all GPUs are running at all times with inputs, rather than one input being passed through a GPU at a time waiting for the prior to finish.

|

||||

|

||||

Below are some benchmarks we have found when using the accelerate-pippy integration for a few models when running on 2x4090's:

|

||||

|

||||

### Bert

|

||||

|

||||

| | Accelerate/Sequential | PiPPy + Accelerate |

|

||||

|---|---|---|

|

||||

| First batch | 0.2137s | 0.3119s |

|

||||

| Average of 5 batches | 0.0099s | **0.0062s** |

|

||||

|

||||

### GPT2

|

||||

|

||||

| | Accelerate/Sequential | PiPPy + Accelerate |

|

||||

|---|---|---|

|

||||

| First batch | 0.1959s | 0.4189s |

|

||||

| Average of 5 batches | 0.0205s | **0.0126s** |

|

||||

|

||||

### T5

|

||||

|

||||

| | Accelerate/Sequential | PiPPy + Accelerate |

|

||||

|---|---|---|

|

||||

| First batch | 0.2789s | 0.3809s |

|

||||

| Average of 5 batches | 0.0198s | **0.0166s** |

|

||||

79

examples/inference/bert.py

Normal file

79

examples/inference/bert.py

Normal file

@ -0,0 +1,79 @@

|

||||

# coding=utf-8

|

||||

# Copyright 2024 The HuggingFace Inc. team. All rights reserved.

|

||||

#

|

||||

# Licensed under the Apache License, Version 2.0 (the "License");

|

||||

# you may not use this file except in compliance with the License.

|

||||

# You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

import time

|

||||

|

||||

import torch

|

||||

from transformers import AutoModelForMaskedLM

|

||||

|

||||

from accelerate import PartialState, prepare_pippy

|

||||

from accelerate.utils import set_seed

|

||||

|

||||

|

||||

# Set the random seed to have reproducable outputs

|

||||

set_seed(42)

|

||||

|

||||

# Create an example model

|

||||

model = AutoModelForMaskedLM.from_pretrained("bert-base-uncased")

|

||||

model.eval()

|

||||

|

||||

# Input configs

|

||||

# Create example inputs for the model

|

||||

input = torch.randint(

|

||||

low=0,

|

||||

high=model.config.vocab_size,

|

||||

size=(2, 512), # bs x seq_len

|

||||

device="cpu",

|

||||

dtype=torch.int64,

|

||||

requires_grad=False,

|

||||

)

|

||||

|

||||

|

||||

# Create a pipeline stage from the model

|

||||

# Using `auto` is equivalent to letting `device_map="auto"` figure

|

||||

# out device mapping and will also split the model according to the

|

||||

# number of total GPUs available if it fits on one GPU

|

||||

model = prepare_pippy(model, split_points="auto", example_args=(input,))

|

||||

|

||||

# You can pass `gather_output=True` to have the output from the model

|

||||

# available on all GPUs

|

||||

# model = prepare_pippy(model, split_points="auto", example_args=(input,), gather_output=True)

|

||||

|

||||

# Move the inputs to the first device

|

||||

input = input.to("cuda:0")

|

||||

|

||||

# Take an average of 5 times

|

||||

# Measure first batch

|

||||

torch.cuda.synchronize()

|

||||

start_time = time.time()

|

||||

with torch.no_grad():

|

||||

output = model(input)

|

||||

torch.cuda.synchronize()

|

||||

end_time = time.time()

|

||||

first_batch = end_time - start_time

|

||||

|

||||

# Now that CUDA is init, measure after

|

||||

torch.cuda.synchronize()

|

||||

start_time = time.time()

|

||||

for i in range(5):

|

||||

with torch.no_grad():

|

||||

output = model(input)

|

||||

torch.cuda.synchronize()

|

||||

end_time = time.time()

|

||||

|

||||

# The outputs are only on the final process by default

|

||||

if PartialState().is_last_process:

|

||||

output = torch.stack(tuple(output[0]))

|

||||

print(f"Time of first pass: {first_batch}")

|

||||

print(f"Average time per batch: {(end_time - start_time)/5}")

|

||||

78

examples/inference/gpt2.py

Normal file

78

examples/inference/gpt2.py

Normal file

@ -0,0 +1,78 @@

|

||||

# coding=utf-8

|

||||

# Copyright 2024 The HuggingFace Inc. team. All rights reserved.

|

||||

#

|

||||

# Licensed under the Apache License, Version 2.0 (the "License");

|

||||

# you may not use this file except in compliance with the License.

|

||||

# You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

import time

|

||||

|

||||

import torch

|

||||

from transformers import AutoModelForSequenceClassification

|

||||

|

||||

from accelerate import PartialState, prepare_pippy

|

||||

from accelerate.utils import set_seed

|

||||

|

||||

|

||||

# Set the random seed to have reproducable outputs

|

||||

set_seed(42)

|

||||

|

||||

# Create an example model

|

||||

model = AutoModelForSequenceClassification.from_pretrained("gpt2")

|

||||

model.eval()

|

||||

|

||||

# Input configs

|

||||

# Create example inputs for the model

|

||||

input = torch.randint(

|

||||

low=0,

|

||||

high=model.config.vocab_size,

|

||||

size=(2, 1024), # bs x seq_len

|

||||

device="cpu",

|

||||

dtype=torch.int64,

|

||||

requires_grad=False,

|

||||

)

|

||||

|

||||

# Create a pipeline stage from the model

|

||||

# Using `auto` is equivalent to letting `device_map="auto"` figure

|

||||

# out device mapping and will also split the model according to the

|

||||

# number of total GPUs available if it fits on one GPU

|

||||

model = prepare_pippy(model, split_points="auto", example_args=(input,))

|

||||

|

||||

# You can pass `gather_output=True` to have the output from the model

|

||||

# available on all GPUs

|

||||

# model = prepare_pippy(model, split_points="auto", example_args=(input,), gather_output=True)

|

||||

|

||||

# Move the inputs to the first device

|

||||

input = input.to("cuda:0")

|

||||

|

||||

# Take an average of 5 times

|

||||

# Measure first batch

|

||||

torch.cuda.synchronize()

|

||||

start_time = time.time()

|

||||

with torch.no_grad():

|

||||

output = model(input)

|

||||

torch.cuda.synchronize()

|

||||

end_time = time.time()

|

||||

first_batch = end_time - start_time

|

||||

|

||||

# Now that CUDA is init, measure after

|

||||

torch.cuda.synchronize()

|

||||

start_time = time.time()

|

||||

for i in range(5):

|

||||

with torch.no_grad():

|

||||

output = model(input)

|

||||

torch.cuda.synchronize()

|

||||

end_time = time.time()

|

||||

|

||||

# The outputs are only on the final process by default

|

||||

if PartialState().is_last_process:

|

||||

output = torch.stack(tuple(output[0]))

|

||||

print(f"Time of first pass: {first_batch}")

|

||||

print(f"Average time per batch: {(end_time - start_time)/5}")

|

||||

55

examples/inference/llama.py

Normal file

55

examples/inference/llama.py

Normal file

@ -0,0 +1,55 @@

|

||||

# coding=utf-8

|

||||

# Copyright 2024 The HuggingFace Inc. team. All rights reserved.

|

||||

#

|

||||

# Licensed under the Apache License, Version 2.0 (the "License");

|

||||

# you may not use this file except in compliance with the License.

|

||||

# You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

import torch

|

||||

from transformers import AutoModelForCausalLM, AutoTokenizer

|

||||

|

||||

from accelerate import PartialState, prepare_pippy

|

||||

|

||||

|

||||

# sdpa implementation which is the default torch>2.1.2 fails with the tracing + attention mask kwarg

|

||||

# with attn_implementation="eager" mode, the forward is very slow for some reason

|

||||

model = AutoModelForCausalLM.from_pretrained(

|

||||

"meta-llama/Llama-2-7b-chat-hf", low_cpu_mem_usage=True, attn_implementation="sdpa"

|

||||

)

|

||||

model.eval()

|

||||

|

||||

# Input configs

|

||||

# Create example inputs for the model

|

||||

tokenizer = AutoTokenizer.from_pretrained("meta-llama/Llama-2-7b-chat-hf")

|

||||

prompts = ("I would like to", "I really like to", "The weather is") # bs = 3

|

||||

tokenizer.pad_token = tokenizer.eos_token

|

||||

inputs = tokenizer(prompts, return_tensors="pt", padding=True)

|

||||

|

||||

# Create a pipeline stage from the model

|

||||

# Using `auto` is equivalent to letting `device_map="auto"` figure

|

||||

# out device mapping and will also split the model according to the

|

||||

# number of total GPUs available if it fits on one GPU

|

||||

model = prepare_pippy(model, split_points="auto", example_args=inputs)

|

||||

|

||||

# You can pass `gather_output=True` to have the output from the model

|

||||

# available on all GPUs

|

||||

# model = prepare_pippy(model, split_points="auto", example_args=(input,), gather_output=True)

|

||||

|

||||

# currently we don't support `model.generate`

|

||||

# output = model.generate(**inputs, max_new_tokens=1)

|

||||

|

||||

with torch.no_grad():

|

||||

output = model(**inputs)

|

||||

|

||||

# The outputs are only on the final process by default

|

||||

if PartialState().is_last_process:

|

||||

next_token_logits = output[0][:, -1, :]

|

||||

next_token = torch.argmax(next_token_logits, dim=-1)

|

||||

print(tokenizer.batch_decode(next_token))

|

||||

2

examples/inference/requirements.txt

Normal file

2

examples/inference/requirements.txt

Normal file

@ -0,0 +1,2 @@

|

||||

accelerate

|

||||

pippy>=0.2.0

|

||||

90

examples/inference/t5.py

Normal file

90

examples/inference/t5.py

Normal file

@ -0,0 +1,90 @@

|

||||

# coding=utf-8

|

||||

# Copyright 2024 The HuggingFace Inc. team. All rights reserved.

|

||||

#

|

||||

# Licensed under the Apache License, Version 2.0 (the "License");

|

||||

# you may not use this file except in compliance with the License.

|

||||

# You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

import time

|

||||

|

||||

import torch

|

||||

from transformers import AutoModelForSeq2SeqLM

|

||||

|

||||

from accelerate import PartialState, prepare_pippy

|

||||

from accelerate.utils import set_seed

|

||||

|

||||

|

||||

# Set the random seed to have reproducable outputs

|

||||

set_seed(42)

|

||||

|

||||

# Create an example model

|

||||

model = AutoModelForSeq2SeqLM.from_pretrained("t5-small")

|

||||

model.eval()

|

||||

|

||||

# Input configs

|

||||

# Create example inputs for the model

|

||||

input = torch.randint(

|

||||

low=0,

|

||||

high=model.config.vocab_size,

|

||||

size=(2, 1024), # bs x seq_len

|

||||

device="cpu",

|

||||

dtype=torch.int64,

|

||||

requires_grad=False,

|

||||

)

|

||||

|

||||

example_inputs = {"input_ids": input, "decoder_input_ids": input}

|

||||

|

||||

# Create a pipeline stage from the model

|

||||

# Using `auto` is equivalent to letting `device_map="auto"` figure

|

||||

# out device mapping and will also split the model according to the

|

||||

# number of total GPUs available if it fits on one GPU

|

||||

model = prepare_pippy(

|

||||

model,

|

||||

no_split_module_classes=["T5Block"],

|

||||

example_kwargs=example_inputs,

|

||||

)

|

||||

|

||||

# You can pass `gather_output=True` to have the output from the model

|

||||

# available on all GPUs

|

||||

# model = prepare_pippy(

|

||||

# model,

|

||||

# no_split_module_classes=["T5Block"],

|

||||

# example_kwargs=example_inputs,

|

||||

# gather_outputs=True

|

||||

# )

|

||||

|

||||

# The model expects a tuple during real inference

|

||||

# with the data on the first device

|

||||

args = (example_inputs["input_ids"].to("cuda:0"), example_inputs["decoder_input_ids"].to("cuda:0"))

|

||||

|

||||

# Take an average of 5 times

|

||||

# Measure first batch

|

||||

torch.cuda.synchronize()

|

||||

start_time = time.time()

|

||||

with torch.no_grad():

|

||||

output = model(*args)

|

||||

torch.cuda.synchronize()

|

||||

end_time = time.time()

|

||||

first_batch = end_time - start_time

|

||||

|

||||

# Now that CUDA is init, measure after

|

||||

torch.cuda.synchronize()

|

||||

start_time = time.time()

|

||||

for i in range(5):

|

||||

with torch.no_grad():

|

||||

output = model(*args)

|

||||

torch.cuda.synchronize()

|

||||

end_time = time.time()

|

||||

|

||||

# The outputs are only on the final process by default

|

||||

if PartialState().is_last_process:

|

||||

output = torch.stack(tuple(output[0]))

|

||||

print(f"Time of first pass: {first_batch}")

|

||||

print(f"Average time per batch: {(end_time - start_time)/5}")

|

||||

@ -1,7 +1,3 @@

|

||||

[tool.black]

|

||||

line-length = 119

|

||||

target-version = ['py37']

|

||||

|

||||

[tool.ruff]

|

||||

# Never enforce `E501` (line length violations).

|

||||

ignore = ["E501", "E741", "W605"]

|

||||

@ -11,7 +7,13 @@ line-length = 119

|

||||

# Ignore import violations in all `__init__.py` files.

|

||||

[tool.ruff.per-file-ignores]

|

||||

"__init__.py" = ["E402", "F401", "F403", "F811"]

|

||||

"manim_animations/*" = ["ALL"]

|

||||

|

||||

[tool.ruff.isort]

|

||||

lines-after-imports = 2

|

||||

known-first-party = ["accelerate"]

|

||||

|

||||

[tool.ruff.format]

|

||||

exclude = [

|

||||

"manim_animations/*"

|

||||

]

|

||||

|

||||

33

setup.py

33

setup.py

@ -12,15 +12,28 @@

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

|

||||

from setuptools import setup

|

||||

from setuptools import find_packages

|

||||

from setuptools import find_packages, setup

|

||||

|

||||

|

||||

extras = {}

|

||||

extras["quality"] = ["black ~= 23.1", "ruff >= 0.0.241", "hf-doc-builder >= 0.3.0", "urllib3 < 2.0.0"]

|

||||

extras["quality"] = [

|

||||

"black ~= 23.1", # hf-doc-builder has a hidden dependency on `black`

|

||||

"hf-doc-builder >= 0.3.0",

|

||||

"ruff ~= 0.1.15",

|

||||

]

|

||||

extras["docs"] = []

|

||||

extras["test_prod"] = ["pytest", "pytest-xdist", "pytest-subtests", "parameterized"]

|

||||

extras["test_dev"] = [

|

||||

"datasets", "evaluate", "transformers", "scipy", "scikit-learn", "deepspeed", "tqdm", "bitsandbytes", "timm"

|

||||

"datasets",

|

||||

"evaluate",

|

||||

"torchpippy>=0.2.0",

|

||||

"transformers",

|

||||

"scipy",

|

||||

"scikit-learn",

|

||||

"deepspeed<0.13.0",

|

||||

"tqdm",

|

||||

"bitsandbytes",

|

||||

"timm",

|

||||

]

|

||||

extras["testing"] = extras["test_prod"] + extras["test_dev"]

|

||||

extras["rich"] = ["rich"]

|

||||

@ -34,7 +47,7 @@ extras["sagemaker"] = [

|

||||

|

||||

setup(

|

||||

name="accelerate",

|

||||

version="0.27.0.dev0",

|

||||

version="0.27.0",

|

||||

description="Accelerate",

|

||||

long_description=open("README.md", "r", encoding="utf-8").read(),

|

||||

long_description_content_type="text/markdown",

|

||||

@ -54,7 +67,15 @@ setup(

|

||||

]

|

||||

},

|

||||

python_requires=">=3.8.0",

|

||||

install_requires=["numpy>=1.17", "packaging>=20.0", "psutil", "pyyaml", "torch>=1.10.0", "huggingface_hub", "safetensors>=0.3.1"],

|

||||

install_requires=[

|

||||

"numpy>=1.17",

|

||||

"packaging>=20.0",

|

||||

"psutil",

|

||||

"pyyaml",

|

||||

"torch>=1.10.0",

|

||||

"huggingface_hub",

|

||||

"safetensors>=0.3.1",

|

||||

],

|

||||

extras_require=extras,

|

||||

classifiers=[

|

||||

"Development Status :: 5 - Production/Stable",

|

||||

|

||||

@ -1,4 +1,4 @@

|

||||

__version__ = "0.27.0.dev0"

|

||||

__version__ = "0.27.0"

|

||||

|

||||

from .accelerator import Accelerator

|

||||

from .big_modeling import (

|

||||

@ -11,6 +11,7 @@ from .big_modeling import (

|

||||

load_checkpoint_and_dispatch,

|

||||

)

|

||||

from .data_loader import skip_first_batches

|

||||

from .inference import prepare_pippy

|

||||

from .launchers import debug_launcher, notebook_launcher

|

||||

from .state import PartialState

|

||||

from .utils import (

|

||||

|

||||

@ -373,8 +373,6 @@ class Accelerator:

|

||||

raise ValueError("You can only pass one `AutocastKwargs` in `kwargs_handler`.")

|

||||

else:

|

||||

self.autocast_handler = handler

|

||||

if self.fp8_recipe_handler is None and mixed_precision == "fp8":

|

||||

self.fp8_recipe_handler = FP8RecipeKwargs()

|

||||

|

||||

kwargs = self.init_handler.to_kwargs() if self.init_handler is not None else {}

|

||||

self.state = AcceleratorState(

|

||||

@ -388,6 +386,9 @@ class Accelerator:

|

||||

**kwargs,

|

||||

)

|

||||

|

||||

if self.fp8_recipe_handler is None and self.state.mixed_precision == "fp8":

|

||||

self.fp8_recipe_handler = FP8RecipeKwargs(backend="MSAMP" if is_msamp_available() else "TE")

|

||||

|

||||

trackers = filter_trackers(log_with, self.logging_dir)

|

||||

if len(trackers) < 1 and log_with is not None:

|

||||

warnings.warn(f"`log_with={log_with}` was passed but no supported trackers are currently installed.")

|

||||

@ -1753,10 +1754,11 @@ class Accelerator:

|

||||

for obj in result:

|

||||

if isinstance(obj, torch.nn.Module):

|

||||

model = obj

|

||||

model.train()

|

||||

elif isinstance(obj, (torch.optim.Optimizer)):

|

||||

optimizer = obj

|

||||

if optimizer is not None and model is not None:

|

||||

dtype = torch.bfloat16 if self.state.mixed_precision == "bf16" else torch.float32

|

||||

dtype = torch.bfloat16 if self.state.mixed_precision == "bf16" else None

|

||||

if self.device.type == "xpu" and is_xpu_available():

|

||||

model = model.to(self.device)

|

||||

model, optimizer = torch.xpu.optimize(

|

||||

|

||||

@ -38,11 +38,13 @@ from .utils import (

|

||||

infer_auto_device_map,

|

||||

is_npu_available,

|

||||

is_torch_version,

|

||||

is_xpu_available,

|

||||

load_checkpoint_in_model,

|

||||

offload_state_dict,

|

||||

parse_flag_from_env,

|

||||

retie_parameters,

|

||||

)

|

||||

from .utils.other import recursive_getattr

|

||||

|

||||

|

||||

logger = logging.getLogger(__name__)

|

||||

@ -123,6 +125,7 @@ def init_on_device(device: torch.device, include_buffers: bool = None):

|

||||

if param is not None:

|

||||

param_cls = type(module._parameters[name])

|

||||

kwargs = module._parameters[name].__dict__

|

||||

kwargs["requires_grad"] = param.requires_grad

|

||||

module._parameters[name] = param_cls(module._parameters[name].to(device), **kwargs)

|

||||

|

||||

def register_empty_buffer(module, name, buffer, persistent=True):

|

||||

@ -395,7 +398,22 @@ def dispatch_model(

|

||||

else:

|

||||

weights_map = None

|

||||

|

||||

# When dispatching the model's parameters to the devices specified in device_map, we want to avoid allocating memory several times for the

|

||||

# tied parameters. The dictionary tied_params_map keeps track of the already allocated data for a given tied parameter (represented by its

|

||||

# original pointer) on each devices.

|

||||

tied_params = find_tied_parameters(model)

|

||||

|

||||

tied_params_map = {}

|

||||

for group in tied_params:

|

||||

for param_name in group:

|

||||

# data_ptr() is enough here, as `find_tied_parameters` finds tied params simply by comparing `param1 is param2`, so we don't need

|

||||

# to care about views of tensors through storage_offset.

|

||||

data_ptr = recursive_getattr(model, param_name).data_ptr()

|

||||

tied_params_map[data_ptr] = {}

|

||||

|

||||

# Note: To handle the disk offloading case, we can not simply use weights_map[param_name].data_ptr() as the reference pointer,

|

||||

# as we have no guarantee that safetensors' `file.get_tensor()` will always give the same pointer.

|

||||

|

||||

attach_align_device_hook_on_blocks(

|

||||

model,

|

||||

execution_device=execution_device,

|

||||

@ -404,6 +422,7 @@ def dispatch_model(

|

||||

weights_map=weights_map,

|

||||

skip_keys=skip_keys,

|

||||

preload_module_classes=preload_module_classes,

|

||||

tied_params_map=tied_params_map,

|

||||

)

|

||||

|

||||

# warn if there is any params on the meta device

|

||||

@ -433,6 +452,8 @@ def dispatch_model(

|

||||

model.to = add_warning(model.to, model)

|

||||

if is_npu_available():

|

||||

model.npu = add_warning(model.npu, model)

|

||||

elif is_xpu_available():

|

||||

model.xpu = add_warning(model.xpu, model)

|

||||

else:

|

||||

model.cuda = add_warning(model.cuda, model)

|

||||

|

||||

@ -441,6 +462,8 @@ def dispatch_model(

|

||||

# `torch.Tensor.to(<int num>)` is not supported by `torch_npu` (see this [issue](https://github.com/Ascend/pytorch/issues/16)).

|

||||

if is_npu_available() and isinstance(device, int):

|

||||

device = f"npu:{device}"

|

||||

elif is_xpu_available() and isinstance(device, int):

|

||||

device = f"xpu:{device}"

|

||||

if device != "disk":

|

||||

model.to(device)

|

||||

else:

|

||||

|

||||

@ -179,7 +179,11 @@ def get_cluster_input():

|

||||

|

||||

use_mps = not use_cpu and is_mps_available()

|

||||

deepspeed_config = {}

|

||||

if distributed_type in [DistributedType.MULTI_GPU, DistributedType.MULTI_NPU, DistributedType.NO] and not use_mps:

|

||||

if (

|

||||

distributed_type

|

||||

in [DistributedType.MULTI_GPU, DistributedType.MULTI_XPU, DistributedType.MULTI_NPU, DistributedType.NO]

|

||||

and not use_mps

|

||||

):

|

||||

use_deepspeed = _ask_field(

|

||||

"Do you want to use DeepSpeed? [yes/NO]: ",

|

||||

_convert_yes_no_to_bool,

|

||||

|

||||

@ -837,12 +837,26 @@ def prepare_data_loader(

|

||||

process_index = state.process_index

|

||||

|

||||

# Sanity check

|

||||

batch_size = dataloader.batch_size if dataloader.batch_size is not None else dataloader.batch_sampler.batch_size

|

||||

if split_batches and batch_size > 1 and batch_size % num_processes != 0:

|

||||

raise ValueError(

|

||||

f"To use a `DataLoader` in `split_batches` mode, the batch size ({dataloader.batch_size}) "

|

||||

f"needs to be a round multiple of the number of processes ({num_processes})."

|

||||

)

|

||||

if split_batches:

|

||||

if dataloader.batch_size is not None:

|

||||

batch_size_for_check = dataloader.batch_size

|

||||

else:

|

||||

# For custom batch_sampler

|

||||

if hasattr(dataloader.batch_sampler, "batch_size"):

|

||||

batch_size_for_check = dataloader.batch_sampler.batch_size

|

||||

else:

|

||||

raise ValueError(

|

||||

"In order to use `split_batches==True` you must have a `batch_size` attribute either in the passed "

|

||||

"`dataloader` or `dataloader.batch_sampler` objects, and it has to return a natural number. "

|

||||

"Your `dataloader.batch_size` is None and `dataloader.batch_sampler` "

|

||||

f"(`{type(dataloader.batch_sampler)}`) does not have the `batch_size` attribute set."

|

||||

)

|

||||

|

||||

if batch_size_for_check > 1 and batch_size_for_check % num_processes != 0:

|

||||

raise ValueError(

|

||||

f"To use a `DataLoader` in `split_batches` mode, the batch size ({dataloader.batch_size}) "

|

||||

f"needs to be a round multiple of the number of processes ({num_processes})."

|

||||

)

|

||||

|

||||

new_dataset = dataloader.dataset

|

||||

# Iterable dataset doesn't like batch_sampler, but data_loader creates a default one for it

|

||||

|

||||

@ -27,6 +27,7 @@ from .utils import (

|

||||

set_module_tensor_to_device,

|

||||

)

|

||||

from .utils.modeling import get_non_persistent_buffers

|

||||

from .utils.other import recursive_getattr

|

||||

|

||||

|

||||

class ModelHook:

|

||||

@ -165,7 +166,12 @@ def add_hook_to_module(module: nn.Module, hook: ModelHook, append: bool = False)

|

||||

output = module._old_forward(*args, **kwargs)

|

||||

return module._hf_hook.post_forward(module, output)

|

||||

|

||||

module.forward = functools.update_wrapper(functools.partial(new_forward, module), old_forward)

|

||||

# Overriding a GraphModuleImpl forward freezes the forward call and later modifications on the graph will fail.

|

||||

# Reference: https://pytorch.slack.com/archives/C3PDTEV8E/p1705929610405409

|

||||

if "GraphModuleImpl" in str(type(module)):

|

||||

module.__class__.forward = functools.update_wrapper(functools.partial(new_forward, module), old_forward)

|

||||

else:

|

||||

module.forward = functools.update_wrapper(functools.partial(new_forward, module), old_forward)

|

||||

|

||||

return module

|

||||

|

||||

@ -188,7 +194,12 @@ def remove_hook_from_module(module: nn.Module, recurse=False):

|

||||

delattr(module, "_hf_hook")

|

||||

|

||||

if hasattr(module, "_old_forward"):

|

||||

module.forward = module._old_forward

|

||||

# Overriding a GraphModuleImpl forward freezes the forward call and later modifications on the graph will fail.

|

||||

# Reference: https://pytorch.slack.com/archives/C3PDTEV8E/p1705929610405409

|

||||

if "GraphModuleImpl" in str(type(module)):

|

||||

module.__class__.forward = module._old_forward

|

||||

else:

|

||||

module.forward = module._old_forward

|

||||

delattr(module, "_old_forward")

|

||||

|

||||

if recurse:

|

||||

@ -227,6 +238,7 @@ class AlignDevicesHook(ModelHook):

|

||||

offload_buffers: bool = False,

|

||||

place_submodules: bool = False,

|

||||

skip_keys: Optional[Union[str, List[str]]] = None,

|

||||

tied_params_map: Optional[Dict[int, Dict[torch.device, torch.Tensor]]] = None,

|

||||

):

|

||||

self.execution_device = execution_device

|

||||

self.offload = offload

|

||||

@ -240,6 +252,11 @@ class AlignDevicesHook(ModelHook):

|

||||

self.input_device = None

|

||||

self.param_original_devices = {}

|

||||

self.buffer_original_devices = {}

|

||||

self.tied_params_names = set()

|

||||

|

||||

# The hook pre_forward/post_forward need to have knowledge of this dictionary, as with offloading we want to avoid duplicating memory

|

||||

# for tied weights already loaded on the target execution device.

|

||||

self.tied_params_map = tied_params_map

|

||||

|

||||

def __repr__(self):

|

||||

return (

|

||||

@ -249,9 +266,13 @@ class AlignDevicesHook(ModelHook):

|

||||

)

|

||||

|

||||

def init_hook(self, module):

|

||||

# In case the AlignDevicesHook is on meta device, ignore tied weights as data_ptr() is then always zero.

|

||||

if self.execution_device == "meta" or self.execution_device == torch.device("meta"):

|

||||

self.tied_params_map = None

|

||||

|

||||

if not self.offload and self.execution_device is not None:

|

||||

for name, _ in named_module_tensors(module, recurse=self.place_submodules):

|

||||

set_module_tensor_to_device(module, name, self.execution_device)

|

||||

set_module_tensor_to_device(module, name, self.execution_device, tied_params_map=self.tied_params_map)

|

||||

elif self.offload:

|

||||

self.original_devices = {

|

||||

name: param.device for name, param in named_module_tensors(module, recurse=self.place_submodules)

|

||||

@ -266,13 +287,28 @@ class AlignDevicesHook(ModelHook):

|

||||

for name, _ in named_module_tensors(

|

||||

module, include_buffers=self.offload_buffers, recurse=self.place_submodules, remove_non_persistent=True

|

||||

):

|

||||

# When using disk offloading, we can not rely on `weights_map[name].data_ptr()` as the reference pointer,

|

||||

# as we have no guarantee that safetensors' `file.get_tensor()` will always give the same pointer.

|

||||

# As we have no reliable way to track the shared data pointer of tied weights in this case, we use tied_params_names: List[str]

|

||||

# to add on the fly pointers to `tied_params_map` in the pre_forward call.

|

||||

if (

|

||||

self.tied_params_map is not None

|

||||

and recursive_getattr(module, name).data_ptr() in self.tied_params_map

|

||||

):

|

||||

self.tied_params_names.add(name)

|

||||

|

||||

set_module_tensor_to_device(module, name, "meta")

|

||||

|

||||

if not self.offload_buffers and self.execution_device is not None:

|

||||

for name, _ in module.named_buffers(recurse=self.place_submodules):

|

||||

set_module_tensor_to_device(module, name, self.execution_device)

|

||||

set_module_tensor_to_device(

|

||||

module, name, self.execution_device, tied_params_map=self.tied_params_map

|

||||

)

|

||||

elif self.offload_buffers and self.execution_device is not None:

|

||||

for name in get_non_persistent_buffers(module, recurse=self.place_submodules):

|

||||

set_module_tensor_to_device(module, name, self.execution_device)

|

||||

set_module_tensor_to_device(

|

||||

module, name, self.execution_device, tied_params_map=self.tied_params_map

|

||||

)

|

||||

|

||||

return module

|

||||

|

||||

@ -280,6 +316,8 @@ class AlignDevicesHook(ModelHook):

|

||||

if self.io_same_device:

|

||||

self.input_device = find_device([args, kwargs])

|

||||

if self.offload:

|

||||

self.tied_pointers_to_remove = set()

|

||||

|

||||

for name, _ in named_module_tensors(

|

||||

module,

|

||||

include_buffers=self.offload_buffers,

|

||||

@ -287,11 +325,32 @@ class AlignDevicesHook(ModelHook):

|

||||

remove_non_persistent=True,

|

||||

):

|

||||

fp16_statistics = None

|

||||

value = self.weights_map[name]

|

||||

if "weight" in name and name.replace("weight", "SCB") in self.weights_map.keys():

|

||||

if self.weights_map[name].dtype == torch.int8:

|

||||

if value.dtype == torch.int8:

|

||||

fp16_statistics = self.weights_map[name.replace("weight", "SCB")]

|

||||

|

||||

# In case we are using offloading with tied weights, we need to keep track of the offloaded weights

|

||||

# that are loaded on device at this point, as we will need to remove them as well from the dictionary

|

||||

# self.tied_params_map in order to allow to free memory.

|

||||

if name in self.tied_params_names and value.data_ptr() not in self.tied_params_map:

|

||||

self.tied_params_map[value.data_ptr()] = {}

|

||||

|

||||

if (

|

||||

value is not None

|

||||

and self.tied_params_map is not None

|

||||

and value.data_ptr() in self.tied_params_map

|

||||

and self.execution_device not in self.tied_params_map[value.data_ptr()]

|

||||

):

|

||||

self.tied_pointers_to_remove.add((value.data_ptr(), self.execution_device))

|

||||

|

||||

set_module_tensor_to_device(

|

||||

module, name, self.execution_device, value=self.weights_map[name], fp16_statistics=fp16_statistics

|

||||

module,

|

||||

name,

|

||||

self.execution_device,

|

||||

value=value,

|

||||

fp16_statistics=fp16_statistics,

|

||||

tied_params_map=self.tied_params_map,

|

||||

)

|

||||

|

||||

return send_to_device(args, self.execution_device), send_to_device(

|

||||

@ -311,6 +370,12 @@ class AlignDevicesHook(ModelHook):

|

||||

module.state.SCB = None

|

||||

module.state.CxB = None

|

||||

|

||||

# We may have loaded tied weights into self.tied_params_map (avoiding to load them several times in e.g. submodules): remove them from

|

||||

# this dictionary to allow the garbage collector to do its job.

|

||||

for value_pointer, device in self.tied_pointers_to_remove:

|

||||

del self.tied_params_map[value_pointer][device]

|

||||

self.tied_pointers_to_remove = None

|

||||

|

||||

if self.io_same_device and self.input_device is not None:

|

||||

output = send_to_device(output, self.input_device, skip_keys=self.skip_keys)

|

||||

|

||||

@ -329,6 +394,7 @@ def attach_execution_device_hook(

|

||||

execution_device: Union[int, str, torch.device],

|

||||

skip_keys: Optional[Union[str, List[str]]] = None,

|

||||

preload_module_classes: Optional[List[str]] = None,

|

||||

tied_params_map: Optional[Dict[int, Dict[torch.device, torch.Tensor]]] = None,

|

||||

):

|

||||

"""

|

||||

Recursively attaches `AlignDevicesHook` to all submodules of a given model to make sure they have the right

|

||||

@ -346,16 +412,23 @@ def attach_execution_device_hook(

|

||||

of the forward. This should only be used for classes that have submodules which are registered but not

|

||||

called directly during the forward, for instance if a `dense` linear layer is registered, but at forward,

|

||||

`dense.weight` and `dense.bias` are used in some operations instead of calling `dense` directly.

|

||||

tied_params_map (Optional[Dict[int, Dict[torch.device, torch.Tensor]]], *optional*, defaults to `None`):

|

||||

A map of data pointers to dictionaries of devices to already dispatched tied weights. For a given execution

|

||||

device, this parameter is useful to reuse the first available pointer of a shared weight for all others,

|

||||

instead of duplicating memory.

|

||||

"""

|

||||

if not hasattr(module, "_hf_hook") and len(module.state_dict()) > 0:

|

||||

add_hook_to_module(module, AlignDevicesHook(execution_device, skip_keys=skip_keys))

|

||||

add_hook_to_module(

|

||||

module,

|

||||

AlignDevicesHook(execution_device, skip_keys=skip_keys, tied_params_map=tied_params_map),

|

||||

)

|

||||

|

||||

# Break the recursion if we get to a preload module.

|

||||

if preload_module_classes is not None and module.__class__.__name__ in preload_module_classes:

|

||||

return

|

||||

|

||||

for child in module.children():

|

||||

attach_execution_device_hook(child, execution_device)

|

||||

attach_execution_device_hook(child, execution_device, tied_params_map=tied_params_map)

|

||||

|

||||

|

||||

def attach_align_device_hook(

|

||||

@ -367,6 +440,7 @@ def attach_align_device_hook(

|

||||

module_name: str = "",

|

||||

skip_keys: Optional[Union[str, List[str]]] = None,

|

||||

preload_module_classes: Optional[List[str]] = None,

|

||||

tied_params_map: Optional[Dict[int, Dict[torch.device, torch.Tensor]]] = None,

|

||||

):

|

||||

"""

|

||||

Recursively attaches `AlignDevicesHook` to all submodules of a given model that have direct parameters and/or

|

||||

@ -392,6 +466,10 @@ def attach_align_device_hook(

|

||||

of the forward. This should only be used for classes that have submodules which are registered but not

|

||||

called directly during the forward, for instance if a `dense` linear layer is registered, but at forward,

|

||||

`dense.weight` and `dense.bias` are used in some operations instead of calling `dense` directly.

|

||||

tied_params_map (Optional[Dict[int, Dict[torch.device, torch.Tensor]]], *optional*, defaults to `None`):

|

||||

A map of data pointers to dictionaries of devices to already dispatched tied weights. For a given execution

|

||||

device, this parameter is useful to reuse the first available pointer of a shared weight for all others,

|

||||

instead of duplicating memory.

|

||||

"""

|

||||

# Attach the hook on this module if it has any direct tensor.

|

||||

directs = named_module_tensors(module)

|

||||

@ -412,6 +490,7 @@ def attach_align_device_hook(

|

||||

offload_buffers=offload_buffers,

|

||||

place_submodules=full_offload,

|

||||

skip_keys=skip_keys,

|

||||

tied_params_map=tied_params_map,

|

||||

)

|

||||

add_hook_to_module(module, hook, append=True)

|

||||

|

||||

@ -431,6 +510,7 @@ def attach_align_device_hook(

|

||||

module_name=child_name,

|

||||

preload_module_classes=preload_module_classes,

|

||||

skip_keys=skip_keys,

|

||||

tied_params_map=tied_params_map,

|

||||

)

|

||||

|

||||

|

||||

@ -455,6 +535,7 @@ def attach_align_device_hook_on_blocks(

|

||||

module_name: str = "",

|

||||

skip_keys: Optional[Union[str, List[str]]] = None,

|

||||

preload_module_classes: Optional[List[str]] = None,

|

||||

tied_params_map: Optional[Dict[int, Dict[torch.device, torch.Tensor]]] = None,

|

||||

):

|

||||

"""

|

||||

Attaches `AlignDevicesHook` to all blocks of a given model as needed.

|

||||

@ -481,12 +562,20 @@ def attach_align_device_hook_on_blocks(

|

||||

of the forward. This should only be used for classes that have submodules which are registered but not

|

||||