Mirror of https://github.com/deepspeedai/DeepSpeed.git (synced 2025-10-20 23:53:48 +08:00)

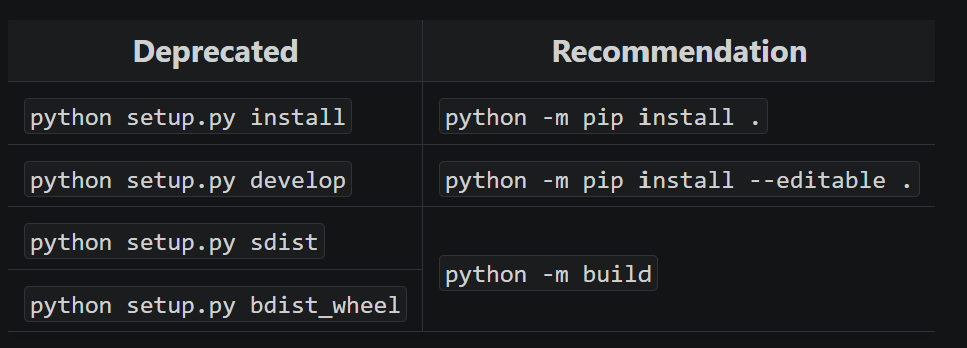

Replace calls to `python setup.py sdist` with `python -m build --sdist` (#7069)

With upcoming changes to pip, Python, and related tooling, we need to stop calling `python setup.py ...` directly and use `python -m build` instead: https://packaging.python.org/en/latest/guides/modernize-setup-py-project/#should-setup-py-be-deleted

This requires the `build` package, so it is installed here as well. We also pass the `--sdist` flag so that only the sdist is built, rather than both the sdist and the wheel.

Signed-off-by: Logan Adams <loadams@microsoft.com>
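
As a rough illustration of the migration described above, the sketch below maps the two legacy `setup.py` subcommands touched by this commit to their `python -m build` counterparts. The function name `modern_build_cmd` is hypothetical and not part of DeepSpeed; it only prints the replacement command rather than running it.

```shell
#!/bin/sh
# Hypothetical helper (not part of DeepSpeed): print the `python -m build`
# command that replaces a legacy `python setup.py <subcommand>` call.
modern_build_cmd() {
    case "$1" in
        sdist)       echo "python -m build --sdist" ;;
        bdist_wheel) echo "python -m build --wheel" ;;
        *)
            # Anything else has no direct one-to-one replacement in this sketch.
            echo "no known replacement for: $1" >&2
            return 1
            ;;
    esac
}

# The `build` package must be installed first, e.g. `pip install build`.
modern_build_cmd sdist
modern_build_cmd bdist_wheel
```

The wheel builds changed in this commit additionally pass `--no-isolation`, so the build runs against the packages already installed in the current environment instead of a fresh isolated one.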


.github/workflows/no-torch.yml (3 changes, vendored)

@@ -32,11 +32,12 @@ jobs:

     run: |
     pip uninstall torch --yes
     pip install setuptools
    +pip install build
     pip list

     - name: Build deepspeed
     run: |
    -DS_BUILD_STRING=" " python setup.py sdist
    +DS_BUILD_STRING=" " python -m build --sdist

     - name: Open GitHub issue if nightly CI fails
     if: ${{ failure() && (github.event_name == 'schedule') }}

.github/workflows/release.yml (3 changes, vendored)

@@ -26,7 +26,8 @@ jobs:

     - name: Build DeepSpeed
     run: |
     pip install setuptools
    -DS_BUILD_STRING=" " python setup.py sdist
    +pip install build
    +DS_BUILD_STRING=" " python -m build --sdist
     - name: Publish to PyPI
     uses: pypa/gh-action-pypi-publish@release/v1
     with:

@@ -11,6 +11,6 @@ set DS_BUILD_GDS=0

     set DS_BUILD_RAGGED_DEVICE_OPS=0
     set DS_BUILD_SPARSE_ATTN=0

    -python setup.py bdist_wheel
    +python -m build --wheel --no-isolation

     :end

@@ -84,7 +84,7 @@ This should complete the full build 2-3 times faster. You can adjust `-j` to spe

     You can also build a binary wheel and install it on multiple machines that have the same type of GPUs and the same software environment (CUDA toolkit, PyTorch, Python, etc.)

     ```bash
    -DS_BUILD_OPS=1 python setup.py build_ext -j8 bdist_wheel
    +DS_BUILD_OPS=1 python -m build --wheel --no-isolation --config-setting="--build-option=build_ext" --config-setting="--build-option=-j8"
     ```

     This will create a pypi binary wheel under `dist`, e.g., ``dist/deepspeed-0.3.13+8cd046f-cp38-cp38-linux_x86_64.whl`` and then you can install it directly on multiple machines, in our example:

@@ -111,7 +111,7 @@ pip install .

     cd ${WORK_DIR}
     git clone -b v1.0.4 https://github.com/HazyResearch/flash-attention
     cd flash-attention
    -python setup.py install
    +python -m pip install .
     ```

     You may also want to ensure your model configuration is compliant with FlashAttention's requirements. For instance, to achieve optimal performance, the head size should be divisible by 8. Refer to the FlashAttention documentation for more details.

@@ -152,7 +152,7 @@ if [ ! -f $hostfile ]; then

     fi

     echo "Building deepspeed wheel"
    -python setup.py $VERBOSE bdist_wheel
    +python -m build $VERBOSE --wheel --no-isolation

     if [ "$local_only" == "1" ]; then
     echo "Installing deepspeed"

@@ -38,7 +38,7 @@ if [ $? != 0 ]; then

     exit 1
     fi

    -DS_BUILD_STRING="" python setup.py sdist
    +DS_BUILD_STRING="" python -m build --sdist

     if [ ! -f dist/deepspeed-${version}.tar.gz ]; then
     echo "prepared version does not match version given ($version), bump version first?"
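
The check that follows the sdist build in this hunk can be sketched as a small function. This is a minimal sketch, assuming the sdist lands in `dist/` under the name `deepspeed-<version>.tar.gz`, as the script's own test expects; the function name `check_sdist` and its optional directory argument are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the sdist for the given version exists.
# Usage: check_sdist <version> [dist_dir]   (dist_dir defaults to "dist")
check_sdist() {
    version="$1"
    dist_dir="${2:-dist}"
    if [ ! -f "${dist_dir}/deepspeed-${version}.tar.gz" ]; then
        echo "prepared version does not match version given (${version}), bump version first?" >&2
        return 1
    fi
}
```

A release script could call `check_sdist "$version" || exit 1` right after the `python -m build --sdist` step.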

setup.py (2 changes)

@@ -233,7 +233,7 @@ if sys.platform == "win32":

     version_str = open('version.txt', 'r').read().strip()

     # Build specifiers like .devX can be added at install time. Otherwise, add the git hash.
    -# Example: DS_BUILD_STRING=".dev20201022" python setup.py sdist bdist_wheel.
    +# Example: `DS_BUILD_STRING=".dev20201022" python -m build --no-isolation`.

     # Building wheel for distribution, update version file.
     if is_env_set('DS_BUILD_STRING'):
